SMITH PREDICTOR vs DEADTIME COMPENSATED PID

BETTER PROTECTION FROM WILDFIRES

It's real, it works, and it's making money

Process improvement is like a trapeze act. You need a trusted partner who lends a hand at the right moment.

Just as athletes rely on their teammates, we know that partnering with our customers brings the same level of support and dependability in the area of manufacturing productivity. Together, we can overcome challenges and achieve a shared goal, optimizing processes with regard to economic efficiency, safety, and environmental protection. Let’s improve together.

Industry enters its AI era

AI-enabled technology has become too enticing for process operators to simply "shake it off"

Overcoming safety, sustainability, reliability and productivity challenges

Advances in pressure relief valves and associated monitoring systems help process manufacturers reduce emissions, increase performance and make other improvements

ON THE BUS

Prepare to justify

Ethernet-APL migration

Migrating Foundation Fieldbus to Ethernet-APL will take conviction and investment

IEC 62443 and zero trust

There's a fundamental shift in security philosophy that requires a change in mindset.

The world is not as it seems

How we perceive the physical world may differ from the scientific world

IN PROCESS

ARC meets AI head-on

Honeywell plans split into three companies; PI debuts procurement website

INDUSTRY PERSPECTIVE

Getting the most from data on the edge

AI fuels digital transformation

RESOURCES

All about alarm management

Control's monthly resources guide

ROUNDUP

New forms, same control mission

PLCs, PACs and PCs are taking on all kinds of newly digitalized faces, but their jobs remain the same

CONTROL TALK

The AI reality—part 2

How to train an AI for process control engineering

CONTROL REPORT

ISS, MOD and O-PAS

If we can't rely on the shoulders of giants, are we willing to lean on each other?

Endeavor Business Media, LLC

30 Burton Hills Blvd, Ste. 185, Nashville, TN 37215

800-547-7377

EXECUTIVE TEAM

CEO Chris Ferrell

COO

Patrick Rains

CRO

Paul Andrews

CDO

Jacquie Niemiec

CALO

Tracy Kane

CMO

Amanda Landsaw

EVP/Group Publisher

Tracy Smith

EDITORIAL TEAM

Editor in Chief

Len Vermillion, lvermillion@endeavorb2b.com

Executive Editor

Jim Montague, jmontague@endeavorb2b.com

Digital Editor Madison Ratcliff, mratcliff@endeavorb2b.com

Contributing Editor

John Rezabek

Columnists

Béla Lipták, Greg McMillan, Ian Verhappen

DESIGN & PRODUCTION TEAM

Art Director

Derek Chamberlain, dchamberlain@endeavorb2b.com

Production Manager

Rita Fitzgerald, rfitzgerald@endeavorb2b.com

Ad Services Manager

Jennifer George, jgeorge@endeavorb2b.com

PUBLISHING TEAM

VP/Market Leader - Engineering Design & Automation Group

Keith Larson

630-625-1129, klarson@endeavorb2b.com

Group Sales Director

Mitch Brian

208-521-5050, mbrian@endeavorb2b.com

Account Manager

Greg Zamin

704-256-5433, gzamin@endeavorb2b.com

Account Manager

Kurt Belisle

815-549-1034, kbelisle@endeavorb2b.com

Account Manager

Jeff Mylin

847-533-9789, jmylin@endeavorb2b.com

Subscriptions

Local: 847-559-7598

Toll free: 877-382-9187

Control@omeda.com

Jesse H. Neal Award Winner & Three Time Finalist

Two Time ASBPE Magazine of the Year Finalist

Dozens of ASBPE Excellence in Graphics and Editorial Excellence Awards

Four Time Winner Ozzie Awards for Graphics Excellence

AI-enabled technology has become too enticing for process operators to simply "shake it off"

THE uneasiness you may feel about artificial intelligence (AI) might just be the push you need to take it seriously. A little anxiety over change never hurts because it often leads to inspiration.

Don’t believe me? Just ask Taylor Swift, who made herself a billionaire by writing about the pain caused by relationship changes. OK, that's a different kind of anxiety, but nonetheless, everyone at last month’s ARC Industry Forum in Orlando, Fla., seemed to be getting past those uneasy feelings about how AI might change their operations—and businesses. Instead, they started discussing, as the conference theme indicated, “Winning in the industrial AI era.”

As ARC president and CEO Andy Chatha put it during his opening remarks, "AI changes are happening so fast, we must manage it. It has woken CEOs up."

At the ARC forum, you were hard-pressed to find a presentation, roundtable or sidebar conversation that didn’t include the use of AI in control systems going forward. What struck me most was the different tone, especially when talking about AI technologies (in particular, large language models such as ChatGPT) and how they augment humans on the plant floor. Sure, the requisite disclaimers that workers will not be replaced but helped by AI consistently followed just about every presentation I attended. Somehow, I got the feeling a lot of folks believed it this time.

For example, I had a chat (a live one, not AI) with Pramesh Maheshwari, president and CEO at Honeywell Process Solutions, who pointed out that AI is making operations technology (OT) interesting again for newer generations of workers. Another way to put it is, when it comes to working with AI, "The kids are [going to be] alright."

One of the more exciting aspects of the quest to figure out how AI can help operators is establishing partnerships around the data analytics needed to train and rely upon AI for productivity. It’s these types of collaborations that will bring out the best in supplier innovations around the use of AI.

It promises to be an interesting year as industry ushers in its AI era. I have a feeling that, while I promise this is the last time I’ll try to write a song about it, it won’t be the last time you hear me—or yourselves—talking about it.

LEN VERMILLION editor-in-chief lvermillion@endeavorb2b.com

“One of the more exciting aspects of the quest to figure out how AI can help operators is establishing partnerships around the data analytics needed to train and rely on AI for productivity.”

PETER MATHEW

VP, pressure relief valves Emerson

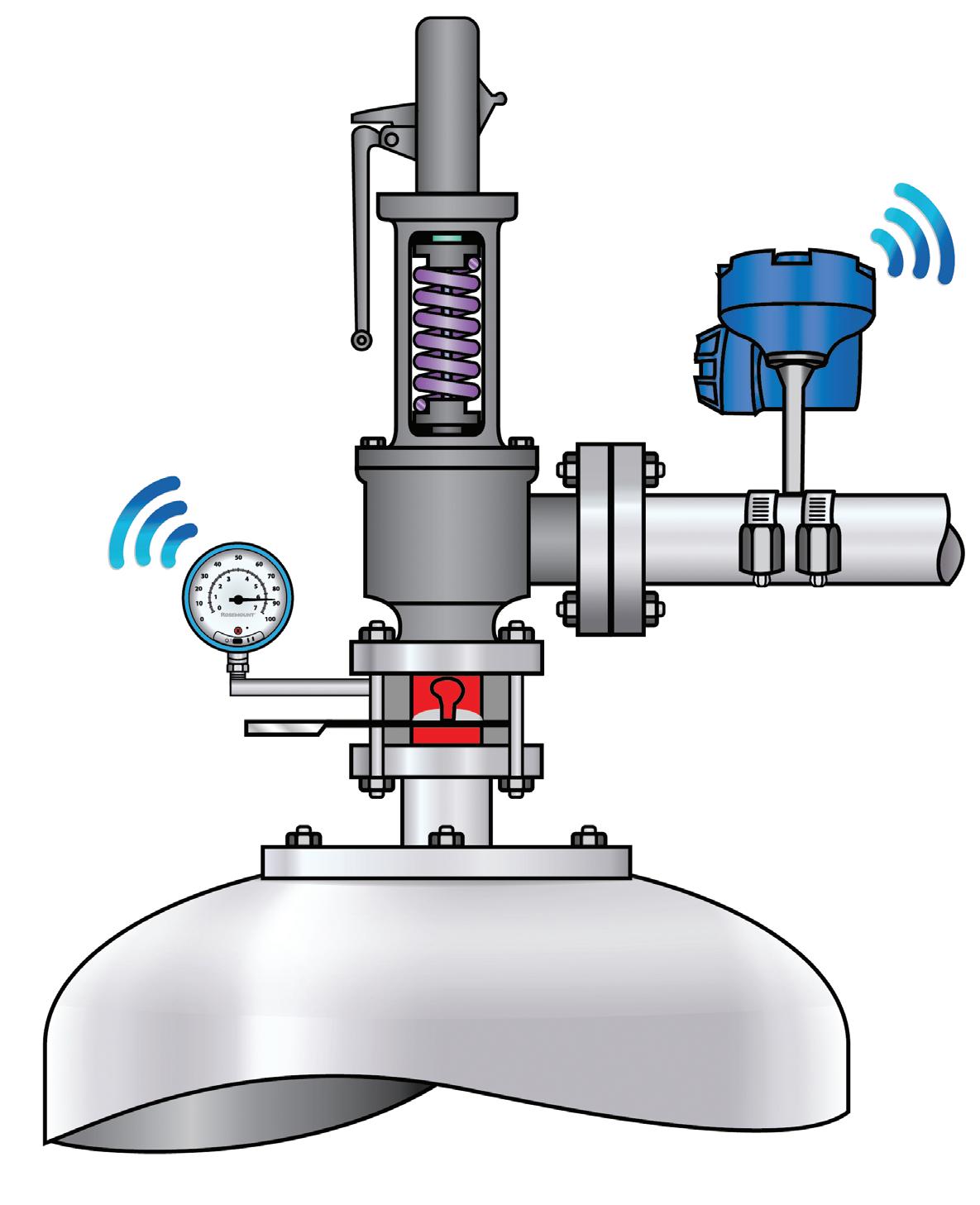

“PRVs prevent safety incidents from occurring in all types of pressure vessels, boilers, reactors and other process equipment.”

Advances in pressure relief valves and associated monitoring systems help process manufacturers reduce emissions, increase performance and make other improvements

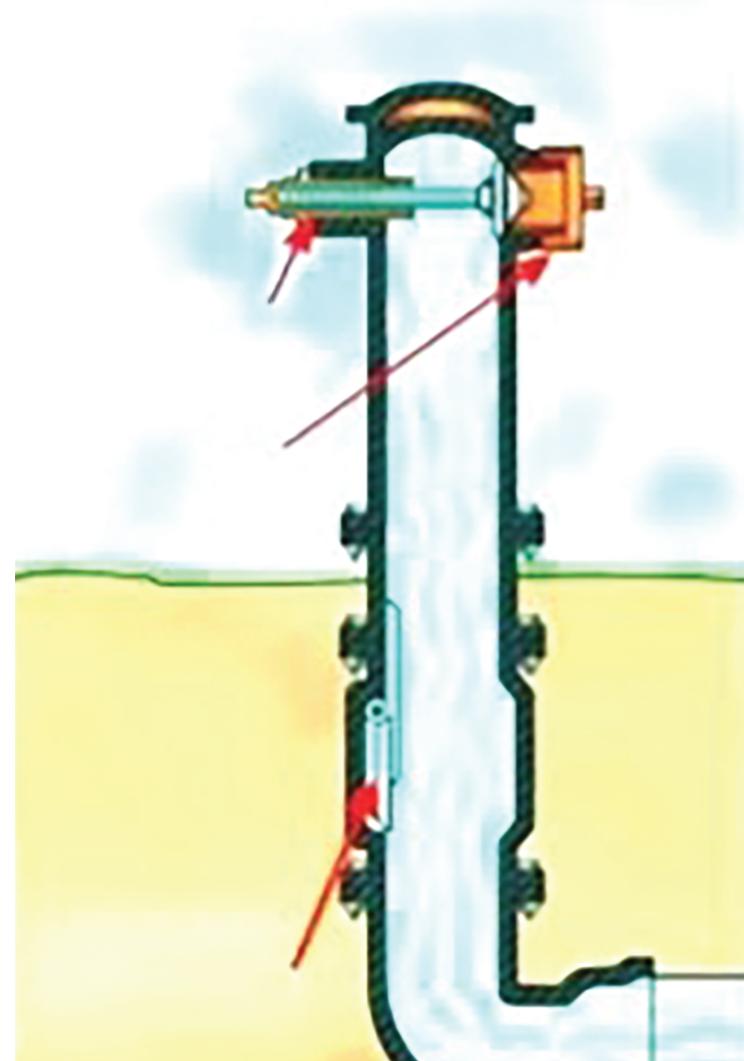

PROCESS manufacturers face many challenges in the areas of safety, sustainability, reliability and productivity. In this column, we'll examine how pressure relief valves (PRVs), seemingly simple but in fact sophisticated devices, can help improve performance in all these areas.

Over the past few decades, emphasis on safety has increased due to regulations, insurance, public perception and recruiting. Consequently, protecting personnel, equipment and the environment has never been more important.

Regulatory pressures may ebb and flow, but they’re increasing and will continue to do so. Similarly, insurance rates and public scrutiny only seem to grow. Meanwhile, personnel are increasingly difficult to recruit and retain, and a safe workspace is critical to ensuring retention. Finally, equipment and construction costs have increased over the past few years, so avoiding safety incidents that cause material damage is more important than ever.

PRVs prevent safety incidents from occurring in all types of pressure vessels, boilers, reactors and other process equipment. In the past, these devices opened at setpoint to release pressure to the atmosphere. Once pressure dropped, they simply returned to a closed position, ready to activate when another overpressure event occurred. There was no direct indication of opening or closing, so operators had to determine activation indirectly.

Manual inspection rounds are an option, but these activities often require plant personnel to enter hazardous areas or access valves in hard-to-reach locations. Adding an external monitoring device that won’t interfere with the PRV’s ability to open is a better approach. Acoustic monitoring devices supporting wired and wireless protocols (Figure 1) are now available and designed to mount directly on pipes adjacent to PRVs.

These devices can detect PRV events in real time, and analytics can correlate process data with maintenance records to determine root causes. Immediate notification via wireless communication with a host system helps keep workers safe and supports regulatory compliance.

Sustainability has also increased in importance, moving from an afterthought to a necessity as public demand for more environmentally conscious practices increases.

Releases and leaks from PRVs open to atmosphere can lead to fines, lawsuits and extra work to identify the source of each release. Even small leaks can generate large amounts of volatile organic compounds (VOCs), depending on concentrations and pressures.

As with safety, PRVs can play a vital role in improving sustainability, but only if their operation is continuously monitored to detect releases. This type of monitoring can detect release events, report duration and severity, and quantify the amount of emissions. This information empowers plant personnel to quickly identify issues, and repair or replace defective devices.
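The detection step can be illustrated with a short Python sketch. It is a minimal example under stated assumptions, not any vendor's actual algorithm: it assumes the acoustic transmitter supplies time-ordered (timestamp, level) samples in arbitrary units, and the function `detect_releases`, the `ReleaseEvent` type and both thresholds are hypothetical. Using two thresholds (hysteresis) keeps a noisy signal hovering near one level from being counted as many separate releases. Quantifying emissions would additionally require a valve- and process-specific flow model, which is not shown.

```python
from dataclasses import dataclass


@dataclass
class ReleaseEvent:
    start: float  # time (s) the level first reached the "on" threshold
    end: float    # time (s) the level fell below the "off" threshold
    peak: float   # highest level seen during the event (a rough severity proxy)

    @property
    def duration(self) -> float:
        return self.end - self.start


def detect_releases(samples, on_level, off_level):
    """Group a list of time-ordered (time, level) samples into release events.

    Hysteresis: an event opens at level >= on_level and closes only when the
    level drops below the lower off_level.
    """
    if off_level >= on_level:
        raise ValueError("off_level must be below on_level")
    events = []
    start = peak = None
    for t, level in samples:
        if start is None:
            if level >= on_level:
                start, peak = t, level
        else:
            peak = max(peak, level)
            if level < off_level:
                events.append(ReleaseEvent(start, t, peak))
                start = peak = None
    if start is not None:
        # Release still in progress at the end of the data: close it at the last sample.
        events.append(ReleaseEvent(start, samples[-1][0], peak))
    return events


# Hypothetical acoustic readings (time in s, level in arbitrary units).
samples = [(0, 1), (1, 2), (2, 12), (3, 30), (4, 9), (5, 4), (6, 2), (7, 15), (8, 3)]
for e in detect_releases(samples, on_level=10, off_level=5):
    print(f"release at t={e.start}s, duration {e.duration}s, peak level {e.peak}")
```

For the sample data, the sketch reports two releases: one starting at t=2 s lasting 3 s with a peak of 30, and one starting at t=7 s lasting 1 s with a peak of 15.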

Reliability is particularly important in industries facing intense global competition. Downtime can be costly, not just in terms of lost production, but also due to unplanned maintenance. This increases the drive for maximum uptime with minimal operating expenditures, and the need to detect minor issues before they develop into serious incidents.

A PRV release isn't a regular event, so each occurrence should be logged for further investigation. If a PRV opens frequently, that’s a strong indication of a process problem that deserves more attention, or it indicates a valve wasn’t properly specified for the application. In either case, it should be treated as an important alert.

Figure 1: An acoustic transmitter (blue device, upper right) can be mounted on the discharge pipe of a PRV, allowing it to detect the vibrations caused by any release. It sends data via WirelessHART.

Source: Emerson

Once again, monitored PRVs play a crucial role because they can warn of impending issues with their own operation, as well as with the equipment they’re designed to protect. Unmonitored PRVs simply operate as designed until they fail, so it’s often difficult to detect even complete failures.

By contrast, monitoring also reveals whether a valve is leaking or simmering, and raises alerts for unwanted behaviors such as cycling or chattering. With real-time information about each release, correlating relief events with process data becomes possible, helping to detect issues with the equipment to which the PRV is affixed.
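Cycling or chattering shows up as many openings in a short time. The Python sketch below is a minimal illustration, assuming opening timestamps (in seconds) are already available from an event detector; the function name, window length and limit are hypothetical and would need tuning for each valve and application.

```python
from collections import deque


def flag_frequent_openings(open_times, window_s, max_openings):
    """Return opening times at which more than max_openings openings have
    occurred within the trailing window_s seconds (possible cycling or
    chattering). open_times must be sorted in ascending order."""
    if window_s <= 0:
        raise ValueError("window_s must be positive")
    recent = deque()
    flagged = []
    for t in open_times:
        recent.append(t)
        # Drop openings that are older than the trailing window.
        while recent[0] <= t - window_s:
            recent.popleft()
        if len(recent) > max_openings:
            flagged.append(t)
    return flagged


# Four openings in four seconds, then one much later (hypothetical data).
print(flag_frequent_openings([0, 100, 101, 102, 103, 5000], window_s=60, max_openings=3))
```

With a 60 s window and a limit of three, only the fourth rapid opening (t=103) is flagged; the isolated openings at t=0 and t=5000 are not.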

Relief events can be correlated with historical records and maintenance data. Process manufacturers can perform in-depth, accurate root-cause failure analyses, not only for the valves, but also for the process.
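As a rough illustration of that correlation, the sketch below looks up the peak process pressure logged in a window just before a release and compares it with the valve's set pressure. Everything here is an assumption for illustration: the historian is represented by sorted lists of timestamps and pressures, and the 60-second look-back, 95% margin and category labels are hypothetical. A release without a nearby pressure excursion is only a prompt to look at the valve itself, not a diagnosis.

```python
from bisect import bisect_left, bisect_right


def peak_pressure_before(event_start, times, pressures, lookback_s):
    """Highest logged pressure in [event_start - lookback_s, event_start].
    times must be sorted ascending and aligned with pressures."""
    lo = bisect_left(times, event_start - lookback_s)
    hi = bisect_right(times, event_start)
    window = pressures[lo:hi]
    return max(window) if window else None


def classify_release(event_start, times, pressures, set_pressure,
                     lookback_s=60.0, margin=0.95):
    """Label a relief event by whether process pressure approached set pressure."""
    peak = peak_pressure_before(event_start, times, pressures, lookback_s)
    if peak is None:
        return "no process data"
    if peak >= margin * set_pressure:
        return "process overpressure"
    return "inspect valve"


times = [0, 10, 20, 30, 40]             # s, hypothetical historian timestamps
pressures = [5.0, 6.0, 9.8, 7.0, 6.0]   # hypothetical readings, same units as set_pressure
print(classify_release(25, times, pressures, set_pressure=10.0))
print(classify_release(45, times, pressures, set_pressure=10.0, lookback_s=10))
```

The first release follows a reading of 9.8 against a set pressure of 10 and is labeled a process overpressure; the second sees only 6.0 in its look-back window, so the valve itself becomes the suspect.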

There are two aspects of plant productivity: personnel and production. The drive to increase manufacturing productivity amplified as difficulties in hiring and retaining staff grew. However, process manufacturers can work with suppliers to increase automation, multiplying the efforts of their staff. The demand for increased productivity is growing due to heightened, worldwide competition.

PRV monitoring can address both of these issues. By removing the need for manual rounds to inspect valves, these systems free up personnel to focus on higher-value tasks, such as supervising numerous sites from a control center. Software applications are available to speed up and simplify these monitoring activities.

Automated PRV monitoring also increases manufacturing productivity by providing early warnings of impending issues in the devices themselves, as well as in external equipment. This facilitates proactive and planned maintenance, rather than reactive upkeep, eliminating extra costs and downtime that accompany the latter.

In addition to monitoring, instantaneous bellows-leak detection is a critical safety feature designed to immediately identify and alert operators to leaks occurring in the bellows of PRVs. Bellows are integral to isolating a valve's internal components from external factors, such as process backpressure, which can influence the PRV’s setpoint and performance. Over time, bellows can deteriorate due to mechanical stress, corrosion, metal fatigue or exposure to harsh process environments. This deterioration leads to leaks, compromising the effectiveness of the valve and introducing several safety hazards.

Service records from 30,000 valves in multiple applications and industries show that 2% to 6% of all PRV bellows are damaged and potentially emitting process media to the atmosphere. Incorporating instantaneous bellows-leak detection into PRVs is a proactive safety measure that mitigates risks associated with bellows failure, helping to ensure operational reliability, environmental protection and compliance with safety standards.
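To put the service-record range in perspective, the arithmetic below applies 2% to 6% to the 30,000-valve sample and to a hypothetical site with 500 bellows-type PRVs. The site size is an assumption for illustration, and any given plant's rate could differ.

```latex
\begin{aligned}
0.02 \times 30{,}000 &= 600, & 0.06 \times 30{,}000 &= 1{,}800 \\
0.02 \times 500 &= 10, & 0.06 \times 500 &= 30
\end{aligned}
```

In other words, the sample implies roughly 600 to 1,800 damaged bellows, and a 500-valve site at the same rate would carry on the order of 10 to 30.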

These advances will persist as automation suppliers continue to innovate, a task that Emerson takes very seriously. Combined with monitoring systems, modern PRVs help process manufacturers increase safety, sustainability, reliability and productivity.

JOHN REZABEK contributing editor JRezabek@ashland.com

“End users may hope for a migration less burdened by cost and complexity.”

Migrating Foundation Fieldbus to Ethernet-APL will take conviction and investment

A field network for process gas chromatographs is indispensable if you have two or more. Why? A typical process gas chromatograph is microprocessor-based with multiple analog outputs—so why consume multiple 4-20 mA analog inputs on your DCS if you can bring all the data in over, say, Modbus?
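Bringing GC results in over Modbus typically means decoding values packed into 16-bit registers. The Python sketch below assumes a hypothetical register map in which each component concentration is an IEEE 754 32-bit float spread over two consecutive holding registers; the component names and the word order are assumptions, because each analyzer documents its own map, so check the device manual. Only the decoding step is shown, not a Modbus client.

```python
import struct


def registers_to_float(hi: int, lo: int, word_swap: bool = False) -> float:
    """Combine two 16-bit Modbus register values into one IEEE 754 float32.

    word_swap=True handles devices that send the low-order word first.
    """
    for r in (hi, lo):
        if not 0 <= r <= 0xFFFF:
            raise ValueError("register values must be 16-bit")
    if word_swap:
        hi, lo = lo, hi
    # Pack the two words big-endian, then reinterpret the 4 bytes as a float.
    return struct.unpack(">f", struct.pack(">HH", hi, lo))[0]


def decode_components(registers, names, word_swap=False):
    """Decode consecutive float32 values (two registers each) into a dict."""
    if len(registers) != 2 * len(names):
        raise ValueError("expected two registers per component")
    return {
        name: registers_to_float(registers[2 * i], registers[2 * i + 1], word_swap)
        for i, name in enumerate(names)
    }


# Hypothetical block of four holding registers carrying two concentrations.
regs = [0x4020, 0x0000, 0x3F00, 0x0000]
print(decode_components(regs, ["methane", "ethane"]))
```

One block read returns every component, whereas a 4-20 mA design would need a separate analog input per value.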

The same is true of current process spectrometers. If there are issues, operators can view chromatograms or spectra from a safe, climate-controlled control house or shop location, change or run calibrations, and do extensive troubleshooting if issues persist. Even the relatively simple pH analyzer can be examined, if digitally connected, to better assess its health or issues that must be addressed before striking off, getting permits, advising operations, and putting loops in manual prior to maintenance. Field networks integrating microprocessor-based devices are a great aid to maintainers, or at least to those operators who are knowledgeable and motivated to use them.

If you’re familiar with this boost to productivity and reliability, why not do the same for the remaining field devices? Practically all of them have microprocessors, and many (even temperature transmitters) have multiple variables. That’s why several end users deployed Foundation Fieldbus (FF) and Profibus PA (PA). Although fieldbus saved copper (less wire), labor (fewer terminations) and rack-room real estate, such economies were obscured by other project expenses, such as the cost of other instrument and electrical infrastructure. But after startup, many fieldbus champions were discouraged by the light use of field diagnostics, and many asset management consoles collected dust. What was missing? Unlike analyzer techs, who were brought up on digitally integrated analyzers, instrument techs’ work habits were already well established, and leadership to provide knowledge and motivation was absent or ineffective.

Why do I bring this up (again)? End users must have a solid plan, and a record of successes, for utilizing field-device intelligence. Once the needed FF proxy-switch technology is available, migrating FF to Ethernet-Advanced Physical Layer (APL) will take conviction and investment. How much cash remains to be seen. Having recently acquired some “managed” commercial- and industrial-grade switches, I can tell you you’re looking at costs on the order of a big-screen MacBook Pro (each) just for a switch. If one’s field junction box houses a couple of APL switches, the installed cost could easily be well into five figures per box. Last year’s FieldComm Group Technical Recommendation, FCG TR10365 (bit.ly/TR10365), warns that existing junction boxes could get too hot, especially if local power supplies are added to ensure the switches have clean, redundant DC power. Unlike FF and PA, this would involve bringing additional AC circuits, ideally from diverse AC power distribution infrastructures, into every APL field junction box. I imagine a gradual migration of FF/PA devices to APL devices as legacy devices fail due to age. APL devices could consume up to four times more power. A model favoring redundant field DC power may preempt providing power over existing 18 AWG fieldbus trunks.
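A simplified DC calculation shows why higher device power strains long trunks. This is a sketch under loudly stated assumptions: one 18 AWG copper conductor is taken as roughly 20.9 Ω/km, all load is lumped at the far end, devices draw constant current, and the 24 V supply, 9 V minimum device voltage, 20 mA per-device draw and 1,000 m trunk are illustrative numbers, not values from FCG TR10365 or any product. APL powering uses its own power classes and switch-fed ports, so treat this only as intuition for resistive losses.

```python
import math

# Assumption: approximate DC resistance of one 18 AWG copper conductor at 20 °C.
OHMS_PER_KM_18AWG = 20.9


def loop_resistance(length_m, ohms_per_km=OHMS_PER_KM_18AWG):
    """Out-and-back resistance of a two-wire trunk."""
    return 2 * (length_m / 1000) * ohms_per_km


def max_devices(supply_v, min_device_v, length_m, device_current_a,
                ohms_per_km=OHMS_PER_KM_18AWG):
    """Most identical constant-current devices the trunk can feed while the
    far end (where all load is lumped) stays at or above min_device_v."""
    headroom_v = supply_v - min_device_v
    if headroom_v <= 0:
        return 0
    drop_per_device_v = device_current_a * loop_resistance(length_m, ohms_per_km)
    if drop_per_device_v <= 0:
        raise ValueError("length and device current must be positive")
    return math.floor(headroom_v / drop_per_device_v)


# Illustrative only: a 1,000 m trunk, 24 V supply, 9 V minimum at the devices.
for amps in (0.020, 0.080):  # a baseline draw, then four times that
    print(f"{amps * 1000:.0f} mA per device -> {max_devices(24.0, 9.0, 1000, amps)} devices")
```

Under these assumptions the trunk feeds 17 devices at 20 mA but only 4 at 80 mA, which is the kind of gap that makes local field DC power attractive.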

End users may hope for migrations less burdened by cost and complexity. Meanwhile, we put our supplier community in a predicament. The market for FF-to-APL switches will not likely take off like a rocket until FF sites are under the gun—when a fieldbus device fails and no replacement can be obtained, even on eBay. Perhaps our infrastructure suppliers should start a side business buying and refurbishing FF and PA field devices as they’re exchanged for APL instruments. But it’s a good bet that an FF or PA device purchased today will still function, barring extreme abuse, past 2045 or even 2050.

There’s a fundamental shift in security philosophy that requires a change in mindset

THE most referenced document in the IEC 62443 cybersecurity series (bit.ly/ISA62443) is IEC 62443-3-3, “System security requirements and security levels.” Jointly developed with ISA99, it details technical control system requirements (SRs) associated with seven foundational requirements (FRs):

1. Identification and authentication ensure only authorized users and devices can access the system.

2. Use control defines what actions authorized users and devices can perform within the system.

3. System integrity ensures the system and its components aren’t corrupted.

4. Data confidentiality protects sensitive information from unauthorized disclosure.

5. Restricted data flow controls the flow of information in the system and between different systems.

6. Timely response to events makes sure the system can respond to security events.

7. Resource availability guarantees the system and its resources are available to authorized users when needed.

IEC 62443-3-3 was issued in 2013 and defined the requirements for control system security levels. Though the standard is now more than a decade old, its foundational requirements shouldn’t change. Likewise, the zone and conduit model introduced by ISA-99 in 2007 and incorporated into the IEC 62443 series is widely accepted for developing and maintaining industrial control environments.
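The zone and conduit idea can be expressed as a small data model. This Python sketch is an illustration, not a structure defined by IEC 62443: the asset, zone and protocol names are hypothetical, conduits are treated as one-way, and traffic inside a zone is allowed by default, which is exactly the assumption zero trust later narrows. Anything not explicitly permitted through a conduit is denied, in the spirit of the restricted-data-flow requirement.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Conduit:
    src_zone: str
    dst_zone: str
    protocols: frozenset  # protocols this conduit is allowed to carry


@dataclass
class ZoneModel:
    zone_of: dict  # asset name -> zone name
    conduits: list = field(default_factory=list)

    def flow_allowed(self, src_asset, dst_asset, protocol):
        src, dst = self.zone_of.get(src_asset), self.zone_of.get(dst_asset)
        if src is None or dst is None:
            return False  # unknown assets are denied
        if src == dst:
            return True   # intra-zone traffic is allowed in this sketch
        # Inter-zone traffic must match a (one-way) conduit and one of its protocols.
        return any(
            c.src_zone == src and c.dst_zone == dst and protocol in c.protocols
            for c in self.conduits
        )


model = ZoneModel(
    zone_of={"eng_ws": "operations", "plc1": "control", "hmi1": "control"},
    conduits=[Conduit("operations", "control", frozenset({"opcua"}))],
)
print(model.flow_allowed("eng_ws", "plc1", "opcua"))   # permitted by the conduit
print(model.flow_allowed("eng_ws", "plc1", "modbus"))  # protocol not on the conduit
```

Shrinking each zone to a single asset turns the intra-zone shortcut into an explicit rule per device, which is where this model meets zero trust.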

Most IT and IoT environments are also moving to implement zero-trust protections. Their core requirements are:

• Continuous verification: every access request, regardless of origin (inside or outside the network), must be explicitly verified and authorized.

• Least privilege access: users and devices only have the absolute minimum permissions necessary to perform their required tasks.

• Assume breach: operate as if a breach has already occurred, and focus on containing the impact of any successful intrusion.
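The three principles can be combined into a single access decision. In this hedged Python sketch, the `AccessRequest` fields, the grant tuples and the `decide` function are hypothetical, and authentication and device-posture results are assumed to come from elsewhere (for example, an identity provider and a health check). Network location is recorded but deliberately ignored, every decision is logged to support breach containment, and anything not explicitly granted is denied.

```python
import time
from dataclasses import dataclass


@dataclass(frozen=True)
class AccessRequest:
    identity: str
    device: str
    credential_valid: bool       # assumed result of authenticating this request
    device_healthy: bool         # assumed result of a device posture check
    action: str
    resource: str
    from_internal_network: bool  # recorded, but grants no trust


def decide(request, grants, audit_log):
    """Return True only if this request is verified and explicitly granted."""
    # Continuous verification: every request is checked, wherever it comes from.
    verified = request.credential_valid and request.device_healthy
    # Least privilege: only (identity, action, resource) tuples in grants pass.
    permitted = (request.identity, request.action, request.resource) in grants
    allowed = verified and permitted
    # Assume breach: keep a record of every decision to help scope an intrusion.
    audit_log.append((time.time(), request.identity, request.device,
                      request.action, request.resource,
                      request.from_internal_network, allowed))
    return allowed


grants = {("operator1", "read", "tank_level")}
log = []
print(decide(AccessRequest("operator1", "hmi-3", True, True, "read", "tank_level", False), grants, log))
print(decide(AccessRequest("operator1", "hmi-3", True, True, "write", "tank_level", True), grants, log))
```

The read is allowed; the write is denied even though it originates inside the network, because no grant covers it.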

Zero trust is a fundamental shift in security philosophy that requires a change in mindset, and a commitment to continuous verification and least-privilege access. While zero trust isn't a specific legal requirement in all cases, it's becoming the de facto standard for federal cybersecurity. Its principles are likely to influence future regulations and best practices.

Europe has two key pieces of cybersecurity legislation that reference zero trust: the NIS2 Directive (bit.ly/NIS2directive) and the Cyber Resilience Act (bit.ly/EUcyberreliance).

The National Institute of Standards and Technology (NIST) integrates zero trust as a core component of its comprehensive Cybersecurity Framework (CSF). Organizations can use the CSF as a general roadmap, and then leverage specific guidance in SP 800-207 to implement zero trust in their overall network. Another way to look at it is: CSF is the overall blueprint for your cybersecurity architecture, and zero trust is a critical security system you install within that architecture to protect your valuable assets.

Similarly, a defense-in-depth cybersecurity strategy provides a foundation for zero trust. The multiple layers of security in a defense-in-depth strategy become the components that zero trust uses to enforce its "never trust, always verify" principle down to a zone of one device, one port and one application.

Defense-in-depth is about building multiple layers of security, while zero trust is about continuously verifying every access attempt. If you consider a zone of one element, as might be the case for an IoT or IIoT device providing an input via a SCADA or other control system, then IEC 62443’s seven foundational requirements not only support the principles of zero trust, but also provide solid recommendations about how to implement it.

IAN VERHAPPEN solutions architect Willowglen Systems Ian.Verhappen@willowglensystems.com

“Zero trust is a fundamental shift in security philosophy that requires a change in mindset, and a commitment to continuous verification and least-privilege access.”

JESSE YODER

founder and president Flow Research Inc. jesse@flowresearch.com

“The properties of solid objects we perceive in real life are emergent properties of the molecules and atoms that make them up.”

How we perceive the physical world may differ from the scientific world

BRITISH philosopher Bertrand Russell said of the difference between our physical world experience and the scientific world of continually changing matter, “There is ... some conflict between what common sense regards as one thing and what physics regards as an unchanging collection of particles. To common sense, a human body is one thing, but to science, the matter comprising it is continually changing.”

What composes the matter of science? Is there an indivisible particle? The Greeks believed they found this in the atom. Today, we know that atoms are not single, indivisible particles. Instead, they're made up of protons, neutrons and electrons. The protons and neutrons form the nucleus of the atom, while the electrons orbit around the nucleus in constant motion. The term “orbit” suggests that the electron is a solid object traveling around the nucleus, somewhat like the moon orbits Earth. However, current thinking is that electrons are more like a field of energy than a solid particle, and they exhibit both wavelike and particle-like qualities.

We can take Russell’s world of “continually changing matter” to mean the world of molecular and atomic motion. There are six identifiable levels of what is physical.

Matter is the world of objects as we experience them—solid, observable entities, such as rocks, trees, stars and human bodies. These are made up of molecules, which in turn are made up of atoms. Atoms are made up of protons, neutrons and electrons. Protons and neutrons are made up of quarks and other subatomic particles.

We can explain the relations among these six physical levels with the concept of “emergent property.” An emergent property is a property that arises from the interactions of multiple components within a system, but isn't a property of the individual components themselves. Emergent properties aren't predictable from the properties of the individual parts. One example of an emergent property is sports fans performing the wave. You can’t understand or perceive the wave by studying the behavior of single, individual fans. Yet, the wave is clearly perceived when the coordinated actions of groups of fans are observed. Another example is the roar of the crowd at a baseball game or other event. The roar is the result of the screaming and yelling of thousands of fans. Yet, listening to one fan doesn't yield this roar. It's only the collective action of many fans that generates it.
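For readers who like to see emergence in action, a toy model of the stadium wave fits in a few lines of Python. It is an illustrative cellular automaton in the style of the Greenberg-Hastings excitable-medium model, not a description of real crowd behavior; the three states and the rule are assumptions. Each fan looks only at the two neighbors: a seated fan stands if a neighbor is standing, a standing fan sits and rests for one tick, and a resting fan becomes ready again. No fan's rule mentions a wave, yet one travels down the row.

```python
SEATED, STANDING, RESTING = 0, 1, 2


def step(row):
    """Advance every fan by one tick using only each fan's two neighbors."""
    nxt = []
    for i, state in enumerate(row):
        if state == STANDING:
            nxt.append(RESTING)  # just stood up: sit down and rest
        elif state == RESTING:
            nxt.append(SEATED)   # rested: ready to stand again
        else:
            left = row[i - 1] if i > 0 else SEATED
            right = row[i + 1] if i + 1 < len(row) else SEATED
            nxt.append(STANDING if STANDING in (left, right) else SEATED)
    return nxt


def simulate(n_fans, ticks):
    """Start with the first fan standing and record every tick."""
    row = [STANDING] + [SEATED] * (n_fans - 1)
    history = [row]
    for _ in range(ticks):
        row = step(row)
        history.append(row)
    return history


if __name__ == "__main__":
    for row in simulate(10, 9):
        print("".join("^" if s == STANDING else "." for s in row))
```

Running it prints ten rows in which the single ^ moves one seat to the right per tick. The resting state is what stops a fan from re-triggering the neighbor who just stood, so the wave keeps moving in one direction.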

The properties of solid objects we perceive in real life are emergent properties of the molecules and atoms that make them up. The atoms are moving so fast that they create the appearance of a solid object. The molecules and atoms themselves aren't solid, but when they move so rapidly, they create the impression of a three-dimensional object.

A similar thought experiment explains other levels of this concept. Molecules are an emergent property of their constituent atoms in motion; atoms are an emergent property of the motion of protons, neutrons and electrons; and protons and neutrons are an emergent property of quarks and other subatomic particles.

The world isn't as it seems. What we find when we look more deeply into it is a hierarchy of particles that are built on each other. Today, scientists believe that Democritus’ indivisible particles are not his atoms, but subatomic particles such as quarks. Beginning with subatomic particles, we can build a “ladder of emergent properties,” until we reach the stable, solid objects of our everyday experience.

Can the concept of emergent properties also explain the relationship between a neural event and a thought, or between brain and mind? Quite possibly, but this is a story for another occasion.

29th annual industry forum attracts more than 650 attendees and 200 visiting companies

HUNDREDS of billions of dollars are expected to flow into artificial intelligence (AI) and other digitalization efforts in every industry, and they’ll all require massive amounts of energy, equipment and infrastructure—even as they seek to maintain cybersecurity and decarbonize at the same time. How can anyone make sense of these faster-than-ever and accelerating digitalization, technological and economic upheavals?

Well, some of the smarter players in the process industries got on the same page once again at ARC Advisory Group’s 29th annual Industry and Leadership Forum (www.arcweb.com/events/arc-industry-leadership-forum-orlando) on Feb. 10-13 in Orlando, Fla. The event attracted more than 650 attendees, including 75 international visitors from 15 nations. They interacted with 190 speakers and panelists, and more than 200 exhibitors and visiting companies.

“We’ve got lots of production data that could help run our businesses, but it’s usually located all over the place,” said Eryn Devola, sustainability head at Siemens Digital Industries (www.sw.siemens.com). “It’s a vast and continuous challenge to connect these sources, gain efficiencies, and achieve more value.”

Lizabeth Stuck, VP of government affairs and MxD learning at Manufacturing and Digital Institute (www.mxdusa.org), added, “About 95% of the 240,000 manufacturers in the U.S. are small companies. So, when we talk about cybersecurity and sustainability, these issues are more challenging because they often don’t have the resources to address them.”

Several presenters and exhibitors at ARC’s event showed how the plug-and-play Open Process Automation Standard (O-PAS), recently expanded OPC UA capabilities, and other network simplifications can make digitalization and cybersecurity approachable and affordable for a much wider circle of potential users.

“We’re still dealing with phishing and ransomware, but AI and digitalization are creating new cybersecurity challenges,” said Sid Snitkin, VP for cybersecurity at ARC. “Cyber-attacks on OT infrastructure are increasing in frequency and impact, which makes it even more important for OT and IT to collaborate on strategies to prevent and respond to them.”

ARC is presently surveying end-user companies about their IT/OT cyber-convergence programs (www.arcweb.com/best-practice-surveys), and has 25 responses, but is seeking more. So far, 70% of respondents report they’re working to converge their IT and OT cybersecurity programs, while 30% feel they’ve accomplished their cybersecurity goals. More than half add that AI and machine learning (ML) will have the greatest impact on the cybersecurity of their plants and companies during the next five years.

Among the speakers presenting their experiences with artificial intelligence (AI), digitalization, sustainability and cybersecurity at ARC Industry and Leadership Forum 2025 were panelists (l. to r.) Eryn Devola, sustainability head at Siemens Digital Industries, Lizabeth Stuck, VP of government affairs and MxD learning at Manufacturing and Digital (MxD) Institute, and Billy Bardin, global climate transition director at Dow.

Neal Arnold, regional cybersecurity advisor and law-enforcement liaison at the Cybersecurity and Infrastructure Security Agency (www.cisa.gov), added that CISA can check an organization’s network traffic, provide alerts about ransomware and whether it’s been activated, and use external scans to identify systems that haven’t been patched, including associated PCs, user names and files.

“Ransomware remains a huge issue, but now there’s more data infiltration and exfiltration. We can find anomalies and vulnerabilities, but humans are still the biggest problem because most cyber-attacks still come from phishing, including increasingly sophisticated versions like Salt Typhoon,” explained Arnold. “We recommend joining CISA’s Protected Critical Infrastructure Information (PCII) program (www.cisa.gov/resources-tools/programs/protected-critical-infrastructure-information-pcii-program).”

As usual at the ARC event, several suppliers held press conferences on their latest solutions, services and other news:

• To reduce electricity consumption and ownership costs of rotating machinery, Nanoprecise (nanoprecise.io) reported on its five-step Energy-Centered Maintenance strategy that includes its EnergyDoctor six-in-one sensor and software-based data storage, cloud computing, and AI and predictive analytics program.

• Hexagon (hexagon.com) showed how its Alix asset lifecycle intelligence software can retrieve code generation, fine tuning and other data; reported that it will add database queries and action-generation capabilities in 2025; and will launch prescriptive recommendations later. After acquiring Itus Digital last year, Hexagon is also combining their enterprise asset management (EAM) and asset performance management (APM) software platforms.

• L&T Technology Services (www.ltts.com) unveiled its RefineryNext software that features digital governance and cybersecurity; AI-powered tools for predictive maintenance, intelligent asset management and demand forecasting; sustainability practices like energy optimization and net-zero carbon compliance; and smart workforce deployment with augmented training and remote operations.

• Schneider Electric (www.se.com) demonstrated its SCADAPack for edge computing and control, which uses Python programming software to shape, filter, and analyze data and operational decisions at the edge. It also publishes a software-based HMI via an online server, and integrates with third-party tools, legacy devices and webservers. SCADAPack works with the company’s 470i smart, cyber-secure remote terminal unit (RTU) that combines the capabilities of an RTU, flow computer and edge controller, protects against denial-of-service attacks, and just added the Lightweight Directory Access Protocol (LDAP) and LDAP over Secure Sockets Layer (LDAPS) protocols.

For more coverage and information, visit www.arcweb.com/events/arc-industry-leadership-forum-orlando

Following a yearlong business-portfolio evaluation, Honeywell (www.honeywell.com) reported Feb. 6 that it’s dividing its automation and aerospace divisions into two separate companies. Coupled with the previously announced spinoff of its advanced materials division, this plan will result in three public companies. Their separation is expected in the second half of 2026 and will be tax-free to Honeywell’s shareholders. "Forming three independent companies builds on the foundation we created, positions each to pursue tailored-growth strategies, and unlocks value for shareholders and customers," says Vimal Kapur, chairman and CEO of Honeywell. "Our simplification of Honeywell rapidly advanced over the past year, and we’ll continue to shape our portfolio to create further shareholder value. We have a rich pipeline of strategic, bolt-on acquisition targets, and we plan to continue deploying capital to further enhance each business as we prepare them to become leading, independent public companies.”

The three independent companies created by Honeywell's separation will be:

• Honeywell Automation will maintain its global scale with 2024 revenue of $18 billion. It will connect assets, people and processes to power digital transformation, and build on its technologies, domain experience, and an installed base serving vertical markets. "As a standalone company with a simplified operating structure and enhanced focus, Honeywell Automation will be better able to capitalize on the global megatrends underpinning its business, from energy security and sustainability to digitalization and AI," explains Kapur.

• Honeywell Aerospace has solutions that are used on virtually every commercial and defense aircraft platform worldwide and include aircraft propulsion, cockpit and navigation systems, and auxiliary power systems. With $15 billion in annual revenue in 2024 and a large, global installed base, Honeywell Aerospace will be one of the largest, publicly traded, pure-play aerospace suppliers, and will continue to increase electrification and autonomy of flight.

• Honeywell Advanced Materials will be a sustainability-focused, specialty-chemicals and materials manufacturer of fluorine products, electronic materials, industrial-grade fibers, and healthcare packaging. With nearly $4 billion in revenue last year, its brands include Solstice hydrofluoroolefin (HFO) low-global-warming technology. As a standalone company with a large-scale, domestic manufacturing base, it’s expected to benefit from its investment profile and a more flexible and optimized capital allocation strategy.

To streamline procurement for control engineers, machine designers and automated factories, PI North America reported Feb. 21 that it’s launched a new website called Where to Buy (us.profinet.com/resources/where-to-buy). This resource is designed to help users easily find distributors of Profibus, Profinet, IO-Link and omlox products that are members of PI North America. Because automation products can be complex and require close consultation with suppliers, Where to Buy also makes it easy for users to find local contacts, and quickly access each distributor’s product knowledge, availability, and support.

• Galco Industrial Electronics Inc. (www.galco.com) reported Feb. 27 that it’s purchased Brozelco Inc. (brozelco.com), a Tennessee-based provider of automation solutions, specializing in engineering, system integration, fabrication and contracting. This acquisition expands Galco’s capabilities, particularly in custom control, turnkey electrical projects and hazardous location-certified system integration.

• Water Research Foundation (WRF, www.waterrf.org) is accepting nominations for the 2025 Paul L. Busch Award. The $100,000 prize recognizes an outstanding individual for innovative research in the field of water quality and the water environment. Applications can be submitted at forms.waterrf.org/team/plb-team/2025--PLB-Award-Application. The deadline for nominations is June 2, 2025.

• Motive Offshore Group (www.motive-offshore.com) reported Jan. 28 that it’s opened a new office in Houston, following a fourfold increase in available equipment. The company rents inspection, engineering, marine and lifting devices for the onshore and offshore oil and gas, renewables, marine and decommissioning industries. It also plans to increase its staff by 75% in the coming year.

• DigiKey (digikey.com) reported Jan. 30 that it added 455 new suppliers and more than 1.1 million products in 2024. Some of these include Amphenol Airmar that manufactures marine and industrial sensors, Halo Electronics that supplies communication and power magnetics, Menlo Micro that produces RF switches that combine the capabilities of electromechanical and solid-state switches, and Jameco that sells electronics hobby kits.

• Motion Industries Inc. (www.motion.com) agreed Jan. 29 to buy Hawaii-based distributor Maguire Bearing Co. (www.maguirebearing.com) that’s served the Pacific Rim market since 1955. Founded by William H. Maguire, the company is headquartered in Honolulu with three branches in Kapolei, Kahului and Hilo.

• Weber-Hydraulik GmbH (www.weber-hydraulik.com) reported Jan. 22 that it’s moved its valve technology division to a new, company-owned building in Reichenau, Konstanz district, Baden-Württemberg, Germany.

The LASC microprocessor-based series is available in open-board, 8- or 11-pin socket, and DIN-rail-mounting designs, for single-level, two-level, or as many levels of operation as needed.

The LASC series of conductivity level controls instantaneously measures the liquid’s resistivity and automatically sets the optimum sensitivity of the control, eliminating the manual setting of potentiometers. Users no longer need to worry about setting the sensitivity too high, which causes false operation, or too low, which causes no operation when liquid reaches the sensing probe.

1. LASD-201 Auto-Sensitivity, 3 Push Button Programming, User Defined Time Delay & Sensitivity Range, Straight Or Fail Safe Relay Operation, Temperature Sensing & Control Option

2. LASC-201 Auto-Sensitivity, Pre-Programmed Time Delay & Sensitivity Range

3. LASC-DM-201 DIN-Rail Mounting, Auto-Sensitivity, Single, Dual Level, 6 Level Versions

4. LASC-SM-201 Socket Mount, Auto-Sensitivity, Single Or Dual Level Operation

ALEJANDRO TREJO

Offer Manager, Digital Services

Schneider Electric

JAMES REDMOND

Global Offer Manager, SCADA and Telemetry

Schneider Electric

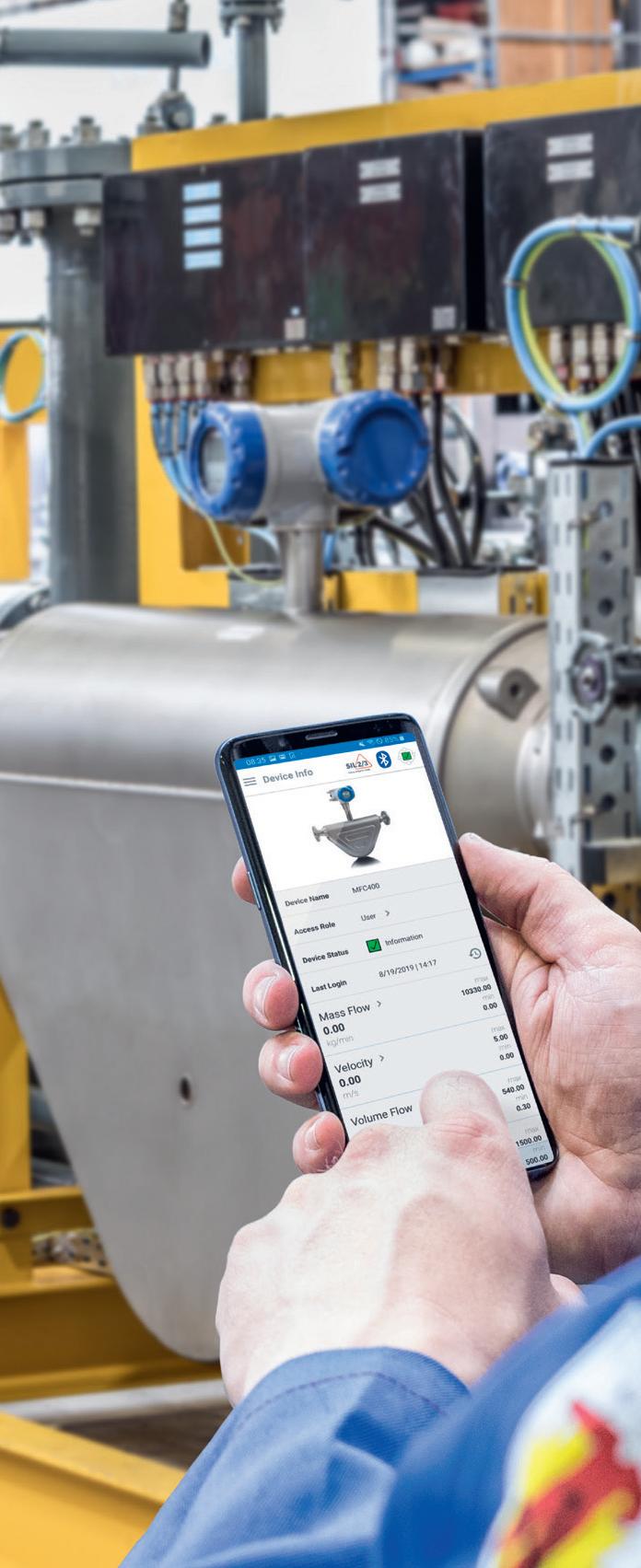

THE only constant in today's industrial landscape is the rapid evolution fueled by digital transformation and artificial intelligence (AI). It's estimated that more than 90% of businesses engage in some form of digital activity. While innovation and adaptability are key to staying ahead, the digital transformation journey for industrial organizations is by no means easy, particularly in water and wastewater. Control talked with Alejandro Trejo, Offer Manager for Digital Services, and James Redmond, Global Offer Manager for SCADA and Telemetry, at Schneider Electric to get further insight into the challenges and solutions for operators.

Q: Why is real-time data analysis and increased automation needed in industry, particularly in water and wastewater?

AT: In today's world, we need to make the most of our resources, especially those related to water. To make better decisions, we must consider two things. The first is the information available to help us understand what’s going on with the process, which comes from a SCADA system. The second is that the data we gather must be understood as quickly as possible, so we can make better decisions that meet our goals, and make them with extreme accuracy. Accuracy comes from considering all available variables, and the need for it only increases with time. In summary, real-time data analysis is needed because the amount of data required to make the most of our resources keeps growing, so the accuracy of our decisions becomes more relevant as time goes by.

Q: Why does industry under-utilize data?

AT: I think under-utilization of data is the equivalent of having the power to fly but deciding to crawl from one point to another.

There are a couple of reasons for underutilization of data in our industry.

The first is that PLCs don't have the memory and processing power required to run advanced AI applications. Many AI models are computationally intensive, needing substantial resources to train and to execute tasks efficiently. The second is data granularity, meaning the level of detail available in a set of data.

AI models demand large volumes of data to ensure accuracy and high performance for tasks such as abnormal event detection and pattern recognition, and data granularity and frequency play pivotal roles. Without sufficient data, these models may overlook critical anomalies, compromising effectiveness in real-world applications.
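To make the granularity point concrete, here is a toy sketch that is not from the interview. It assumes a hypothetical signal logged once per second that holds steady at 50.0 except for a three-second spike to 80.0, and an arbitrary excursion limit of 70.0. The same check is run at full resolution and again after keeping only every 30th reading, as a slower logging interval would.

```python
# Toy illustration (assumed values): a coarse logging interval can hide
# a short-lived excursion that full-resolution data would reveal.

def downsample(values, step):
    """Keep every `step`-th reading, mimicking a slower logging interval."""
    return values[::step]


def find_excursions(values, limit):
    """Return the indices of readings above `limit`."""
    return [i for i, v in enumerate(values) if v > limit]


# Hypothetical 1-second readings: steady at 50.0, with a 3-second spike to 80.0.
readings = [50.0] * 120
for t in range(61, 64):
    readings[t] = 80.0

fine = find_excursions(readings, limit=70.0)
coarse = find_excursions(downsample(readings, 30), limit=70.0)

print("full resolution:", fine)      # [61, 62, 63]
print("every 30th sample:", coarse)  # [] because the spike falls between samples
```

The coarse series keeps readings 0, 30, 60 and 90 only, so the spike at seconds 61 to 63 never reaches the detector.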

Q: How do AI and edge computing impact the ability to leverage data and automation, not only in water, but also in industry in general?

JR: We see it impacting water, as well as energy and oil and gas, which are the other major industries for telemetry. There's a desire to gather additional data and turn it into information that drives more efficient operational decisions. For instance, in oil and gas production, we see edge computing being used to reduce lift costs, determine preventive maintenance routines, optimize the deployment of personnel, and limit travel time to these remote sites. These are recurrent concerns.

What AI provides is the ability to automate this analysis and drive better decisions. You can project when a technician needs to go out to a site to adjust the valve or move the counterweights, rather than having someone drive around on a rotation. Labor shortages in the oil and gas industry are a major concern, and automated decision-making allows for more efficient deployment of personnel.

AI has a massive demand for computing power, and what we see is an increase in more effective algorithms that can be produced using that massive processing power and then deployed on smaller computing footprints. So, we can drive this decision-making directly to the edge, and better shape the data to free up resources at the SCADA level, where this information is being processed.

Q: What role do SCADA systems play in the water industry, and how are they evolving as AI comes on board?

JR: That's a very important question. Traditional SCADA systems obviously need to gather data and apply it in relatively simple ways. They are quite effective at creating an alarm and driving an action when something is above or below a certain threshold. Now, with greater processing power at the edge, we gather more data, and then we create tension. A plant isn't generally constrained in the type of data it consumes; it can pull out everything that a PLC or edge computer can provide. The question is whether you process that data at the SCADA level. We see some industry trends where there's a desire to push more of that processing into the SCADA, or to use it to pre-process data and then pass it to a tool further up the chain, perhaps residing in the cloud or on premises, that's specifically designed to analyze it. If you look more at the telemetry aspect, there are two additional challenges outside the plant: you must consider the security of the data you're transmitting, and the cost and bandwidth restrictions you're under while moving it. What is clear to me, though, out of all these competing trends and needs, is that the industry's expectations for the visualization and presentation capabilities of SCADA are increasing. Users demand tools to present the data, or rather, to present the products of the data as information to their users, operators and supervisors.
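The contrast Redmond draws, simple limit alarms versus shaping data at the edge before it crosses a costly telemetry link, can be sketched roughly as follows. This is a minimal illustration under assumed values, not SCADAPack or any Schneider Electric logic: `threshold_alarm` and `report_by_exception` are hypothetical names, and the alarm limits and deadband are arbitrary.

```python
from typing import List, Optional, Tuple


def threshold_alarm(value: float, low: float, high: float) -> Optional[str]:
    """Traditional SCADA-style check: flag a value outside fixed limits."""
    if value < low:
        return "LOW"
    if value > high:
        return "HIGH"
    return None


def report_by_exception(values: List[float], deadband: float) -> List[float]:
    """Edge-side pre-processing: forward a reading upstream only when it has
    moved by at least `deadband` since the last forwarded value.
    The first reading is always forwarded."""
    forwarded: List[float] = []
    for v in values:
        if not forwarded or abs(v - forwarded[-1]) >= deadband:
            forwarded.append(v)
    return forwarded


# Hypothetical tank-level readings from a remote site (units arbitrary).
levels = [10.0, 10.2, 10.4, 10.6, 11.5, 11.6, 9.0]

to_scada = report_by_exception(levels, deadband=0.5)

alarms: List[Tuple[float, str]] = []
for v in levels:
    state = threshold_alarm(v, low=9.5, high=11.0)
    if state is not None:
        alarms.append((v, state))

print(to_scada)  # [10.0, 10.6, 11.5, 9.0]: 4 of 7 readings cross the link
print(alarms)    # [(11.5, 'HIGH'), (11.6, 'HIGH'), (9.0, 'LOW')]
```

The deadband trades resolution for bandwidth: a wider band sends fewer updates but hides smaller moves, which is exactly the kind of decision that shifts to the edge as more processing power becomes available there.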

Artificial intelligence (AI) and edge computing increase the power of real-time data analysis

Q: How can organizations be ready for all these changes?

AT: There isn’t a magic wand for getting industry ready, but there are some actions that companies might start looking at to prepare for the technology. First, consider the power of edge systems. I know that cloud solutions are powerful and very common, but the cloud is not for every aspect of our processes. We might start with powerful, yet accessible, solutions on the edge to harness the potential data from connected products on our premises. Edge solutions are powerful and safe, and our cloud component can reinforce data processing, providing our industries with a higher level of insights and AI-driven solutions. This hybrid approach is probably the best way to bring industries from zero to 100 in a safe and smart way.

JR: Those looking ahead to how they can best take advantage of these trends to improve their operational effectiveness should have a clear idea of what they're trying to accomplish, and what would be a meaningful improvement, as they conduct an experiment or an exploration, or create a prototype of a possible system. I think that kind of focus makes the experiment or the exploration a lot more successful.

Q: Everyone wants to maximize value. What is the value proposition for this kind of hybrid approach?

AT: We have different solutions designed to maximize the value of data in our industries. For example, we have solutions specifically designed to gather information related to environmental conditions from a pump. The value of this type of edge-device application is that it's connected to our cloud service, where we process the data to provide our customers with a single user interface, so they can see data in near real time.

The value is being able to process data as fast as we can in edge devices, and to reinforce that processing with AI-powered solutions. With this type of solution, you are one step ahead of any kind of failure you might have in the facility. This type of architecture lets us process both at the edge and in the cloud, while users receive real value from their connected products.

JR: I think the one thing I’ll add is our ability to provide seamless integration across a series of toolsets to deliver that information right from the edge to the control room. We also have mechanisms to bring that information directly to technicians, operators, supervisors and engineers.

LISTEN UP!

Hear an extended version of this conversation on the Control Amplified podcast, available at www.controlglobal.com.

It's real, it works, and it's making money

by Jim Montague

THE Open Process Automation Standard (O-PAS) is bringing home the bacon. The “standard of standards” and its real-world applications are generating revenue and earning a living.

After eight years of development and testing, O-PAS has achieved its goal of plug-and-play process automation and control, but its benefits don’t end there. Its interoperability allows simpler and faster network links, which let users design and build process applications, interfaces and systems more quickly, as well as add devices and scale up applications more easily. All of these plug-and-play capabilities make life easier, which is what the Open Process Automation Forum (OPAF, www.opengroup.org/forum/open-process-automation-forum) pursued from the start.

“Sixteen years ago, Don Bartusiak began talking about using open architectures and standards to make the changes we wanted, primarily gaining interoperability for process controls to address growing obsolescence in increasingly large and complex processes. Seven years ago, we successfully completed our open process automation (OPA) proof of concept (PoC), including concepts for distributed control nodes (DCN) that could swap microprocessors in and out to match required operating loads,” said Dave DeBari, OPA technical team leader at ExxonMobil (corporate.exxonmobil.com), during his presentation at the ARC Industry Forum on Feb. 10-13 in Orlando. “We also used these interoperable and interchangeable process control technologies in our OPA Lighthouse Project, which was moved after factory acceptance testing (FAT) last August, and is now operating as the first commercial instance of the OPA architecture at our resin-finishing plant in Baton Rouge, La. We ripped out the plant’s former DCS. There’s no piece of it left operating onsite. It’s gone, so there’s really no going back.”

Plug-and-play punches in

The OPA Lighthouse’s process controls are managing about 100 control loops and 1,000 I/O. The system was fully powered up and finished hot cutover tasks on Nov. 8. A cold cutover was completed on Nov. 17, and the commercialized, OPA-guided application began making product and generating revenue for ExxonMobil the next day (Figure 1).

“We’ve done it. It works, and it isn’t that hard to do,” exulted DeBari. “The Lighthouse plant uses Version 2.1 of O-PAS, and we completed a long and thorough FAT on Aug. 28 to make sure it was safe and reliable because this plant must stay online and continue making products for our customers.

“Using orchestration, we can take a controller computer out of the box and get it talking to I/O on the O-PAS connectivity framework (OCF) in less than 20 minutes. This includes setup, instantiation, and adding the operating system, applications, control logic and security. This would take hours to a day with a regular PLC, and days or a week with a traditional DCS. We estimate we could go from blank hardware to a running control system with full settings in about eight hours, just by running scripts instead of doing these tasks manually. This is how plug-and-play becomes a reality.”

Figure 1: The Lighthouse open process automation (OPA) project that ExxonMobil relocated to its resin-finishing plant in Baton Rouge, La., consists of interoperable process controls that comply with the Open Process Automation Standard (O-PAS), Version 2.1. Their FAT was completed last Aug. 28, and the project was powered up on Nov. 8. A cold cutover was performed on Nov. 17, and the commercialized, OPA-guided application began making product and generating revenue the next day. Its 100 control loops and 1,000 I/O are based on an OPA reference architecture that includes an IPC-based distributed control node (DCN), remote I/O, advanced computing platform (ACP) hosting virtual DCNs, and DCNs with local I/O. Source: ExxonMobil
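DeBari's point that scripts can replace manual commissioning rests on each task being automated, ordered and safe to rerun. A minimal sketch of that idea follows. The step names only mirror the tasks listed in his quote, and `provision` and `apply_step` are hypothetical names, not part of O-PAS or of any orchestration product used at the Lighthouse plant.

```python
from typing import Callable, List, Set

# Ordered commissioning tasks, named after those listed in DeBari's quote.
STEPS = [
    "setup",
    "instantiation",
    "operating_system",
    "applications",
    "control_logic",
    "security",
]


def provision(completed: Set[str], apply_step: Callable[[str], None]) -> List[str]:
    """Run any steps not yet completed, in order, and record them.

    Because finished steps are skipped, rerunning the script on a
    partly configured node is safe (idempotent)."""
    performed: List[str] = []
    for step in STEPS:
        if step in completed:
            continue
        apply_step(step)  # hypothetical placeholder for the real work
        completed.add(step)
        performed.append(step)
    return performed


log: List[str] = []
node: Set[str] = {"setup", "instantiation"}  # a partly configured node
first = provision(node, log.append)
second = provision(node, log.append)

print(first)   # ['operating_system', 'applications', 'control_logic', 'security']
print(second)  # []
```

Skipping completed steps is what makes a scripted run repeatable: the same script can bring up blank hardware or finish a half-configured node without redoing work.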

DeBari reported that operators at the Baton Rouge facility have been getting used to their new OPA system’s tools and generally appreciate them. “Our operations people are still our toughest critics and best assets. They found some faults we hadn’t thought of, and are giving us good feedback,” explained DeBari. “Most operators in brownfield facilities like the controls and tools they’ve always had. So, the best news about O-PAS at Lighthouse is that within three weeks, the operators reported liking it, and began telling each other about tips and tricks for using it, such as easier ways to make process-run changes. Since then, they’ve settled into a normal cycle for making product and initiating process enhancements.”

Even beyond operators sharing tips at the Baton Rouge facility, O-PAS is garnering interest and inquiries from other ExxonMobil business lines. In fact, the company is already moving to commercialize and deploy O-PAS-based automation architectures elsewhere in its organization, according to Kelly Li, commercial lead at ExxonMobil.

“We’re seeking commercially viable solutions, with the OPA Lighthouse as the foundation project,” says Li. “We’re thinking of multiple facilities and users that could apply O-PAS to capture benefits sooner. In fact, we just awarded our next O-PAS project for a PLC upgrade project at an ExxonMobil Midstream facility in Baton Rouge.”

Li reports that ExxonMobil’s objective for commercializing O-PAS is to encourage an industry shift toward open, secure, standards-based systems via decisive capital allocation. Its adoption and scale-up plan includes:

• Starting with deploying the Lighthouse project in 2024 to confirm its capabilities, performance and support model;

• Implementing smaller, less-complex, O-PAS solutions in 2025 to gain experience and reduce costs; and

• Building an ecosystem of commercially available products for applications of all complexities at ExxonMobil. This ecosystem includes engaging its suppliers to screen and develop O-PAS deployment opportunities, such as near-term PLC and SCADA projects; collaborating with end-users to create demand for O-PAS solutions; and continuing to help OPAF and other industry associations develop related standards.

“We’re also collaborating with other users on implementing O-PAS, and participating in other field trials, so we can all get more experience,” adds Li. “Beyond interoperability, optionality, cybersecurity, scalability, portability and transferability, the key takeaway is deployment, and we’re inviting everyone to join us.”

OPAF plugs away

Beyond ExxonMobil’s achievements, OPAF reported at the ARC event that O-PAS and its members have also been making gains on multiple fronts.

“We congratulate ExxonMobil because they’ve proven that deploying O-PAS in a front-line, revenue-generating, process operation can be done, and that its DCN, OCF and ACP sections are an architecture that can be built on,” said Jacco Opmeer, co-leader of OPAF and principal automation engineer at Shell (www.shell.com). “The Lighthouse project shows it can be done, and that small changes and additions can be fixed by system integrators using OPC UA, for instance, for some extended alarm functions.”

OPAF now has more than 100 member companies, including large DCS suppliers and many end users, such as Petronas and Reliance. Most of them are testing O-PAS, developing PoCs, and/or conducting field trials. For example, Opmeer reports that Shell’s test bed was finalized in 2022, and was tested throughout 2023. It’s based at Shell’s technology center in Houston, and it comprises a small distillation column simulation with Phoenix Contact’s 3152 I/O module and two additional DCNs, including one from Advantech that sits on top of the I/O devices.

“We’re satisfied with the results so far because we’re using a technology maturity process, which indicates that O-PAS has reached a mature level,” explained Opmeer. “We’ve been mixing our O-PAS components to see how they behave, and researching where deploying O-PAS fit-for-purpose devices makes the most sense. Next, we’ll explore implementation opportunities.”

Likewise, OPAF launched its certification program last year for evaluating compliance with O-PAS, Version 2.1. It started by making OPC UA connectivity and global discovery services available, and plans to soon recognize verification labs, certify physical platforms, and integrate networks. “Certification gives users the parts for building their own O-PAS systems,” added Opmeer. “We believe O-PAS should be the USB of process control—users should be able to just plug in devices and have them work. This is what OPAF intended, and it’s what O-PAS has become.”

Opmeer reports that OPAF also conducted a week-long plugfest for O-PAS in September 2024 at Shell’s offices in Amsterdam. It let developers and users test their O-PAS kits and prototypes, determine improvements, and better understand the requirements of the standard of standards. This testing of O-PAS’s capabilities encouraged OPAF to add sections to O-PAS, Version 2.1, during 2025-26, such as:

• Ensuring security by employing role-based access and managing certificates;

• Collaborating with the OPC Foundation to add OPC UA Field eXchange (FX) field-level extensions, including its publish-subscribe and I/O functions;


• Incorporating hardware and architecture updates that reflect what OPAF has learned along with demands from overall ecosystems;

• Integrating updates to Automation Markup Language (AutomationML) to allow software portability and enable digital engineering. AutomationML is a neutral, XML-based data format for storing and exchanging plant engineering data; and

• Using software orchestration to automate IT-related functions, and help manage O-PAS components.

“We’re not waiting for the next version of the standard. We’re ready to continue adding features that users want, such as working closely with OPC Foundation to add more security levels,” added Opmeer. “We’re also looking to add AutomationML and develop more O-PAS standard content at the engineering level.”

Dave Emerson, co-chair of OPAF’s enterprise architecture working group and retiring VP of Yokogawa’s U.S. Technology Center, adds that interest in O-PAS is increasing worldwide because users at brownfield facilities are facing increasingly obsolete and/or end-of-life equipment, while greenfield processes need new devices at the lowest total cost of ownership (TCO). Yokogawa served as system integrator for ExxonMobil’s testbed and Lighthouse field-test projects.

“Users want to create new value for their applications and companies, and O-PAS provides a new, simpler model for process control and automation. Its decoupling of software from hardware enables its interoperability, flexibility and scalability, while its orchestration lets users determine what functions to run, how much of each, and where to run them,” says Emerson. “In this case, O-PAS covers two software categories: controlling operating processes and seeking greater efficiency, and management and health of its systems and tasks, such as data collection, historization and HMIs. This is how O-PAS brings IT-level capabilities to process engineering, including built-in cybersecurity. It and other virtualized functions can run in O-PAS’s DCNs.”

Mark Hammer, OPA product and services manager at Yokogawa, adds that Yokogawa has released a system management tool called Watcher that monitors qualified devices and systems, including those from other suppliers, and presents details about all of them on a single-pane display. It provides open-process, secure-by-design capabilities by using Fast IDentity Online (FIDO) open, standardized authentication protocols, and Trusted Platform Module (TPM) tamper-proof microprocessors that follow the ISO/IEC 11889 standard.

“FIDO and TPM were also used in ExxonMobil’s Lighthouse project,” says Hammer. “Components employ FIDO to report their availability, and certificates authenticate them, so they can add operating systems and other functions.”

Figure 2: The Coalition for Open Process Automation (COPA) recently launched its COPA 500 kit for entry-level use with 100-900 I/O for brownfield replacements or greenfield systems. It consists of I/O modules and 24 V power supplies from Phoenix Contact, a distributed control node (DCN) such as ASRock’s industrial personal computer (IPC), virtual PLC/DCS control with Codesys software, and an advanced computing platform (ACP) such as Supermicro’s IPC.

As more O-PAS applications pass field tests, developers and suppliers plan to offer more products that are certified as compliant. This will let them assemble systems more easily and quickly, and be sure their components are interoperable. For example, the Coalition for Open Process Automation (COPA, www.copacontrol.com) group of companies recently launched their COPA 500 kit for entry-level systems with 100-900 I/O (Figure 2).

“Last year, we began selling O-PAS devices. Now, COPA 500 brings systemness to them, so users can deploy them as one unified solution that’s resilient, secure and manageable,” says Bob Hagenau, CEO at CPlane and co-leader at COPA. “Proprietary products can achieve systemness, but they all have to be from the same vendor. However, if a user has an open control system with devices from multiple vendors, it’s been a problem to manage them as a single system. COPA and CPlane have created a unified system with multiple vendor components built on O-PAS.”

The COPA 500 kit includes:

• I/O modules from Phoenix Contact or R. Stahl that are O-PAS-conformant DCN I/O variants;

• An O-PAS distributed control node (DCN), such as ASRock’s industrial personal computer (IPC) that runs software function blocks and containerized software;

• A management node that O-PAS labels as an advanced computing platform (ACP), in this case, Supermicro’s IPC that provides added computational power for supervisory and historian functions;

• Cisco’s industrial Ethernet switches;

• 24-V power supplies from Phoenix Contact;

• Software-based PLC/DCS functions from Codesys that use the IEC 61131 PLC programming standard; and

• Inductive Automation's Ignition SCADA/HMI software.

“As an entry-level device, COPA 500 could replace a PLC or small DCS, and then scale up by adding more I/O to communicate with more sensors, actuators and other devices,” says Don Bartusiak, former co-chair of OPAF and president of COPA-member Collaborative Systems Integration Inc. (www.csi-automation.com). “COPA 500 can support PID or sequential control, advanced regulatory control, model-predictive control (MPC) or batch process management, including NAMUR’s Module Type Package (MTP) process orchestration layer applications.”

In fact, Bartusiak reports that COPA 500 is one configuration of a scalable platform that can include different types of I/O, more DCNs, and more ACP capacity. Users will be able to buy them as a product, and configure them as needed thanks to swappable and/or redundant I/O, DCN, ACP and network devices. Instances of COPA's Control Platform will be sold by system integrators like Wood, Eosys or Burrow Global, and be supported by them using CPlane’s systems management and orchestration software.

“O-PAS is starting to be used for on-production systems. It’s no longer just in testbeds and laboratory systems,” adds Bartusiak. “And, now that it’s moving into production service, and end users, suppliers and system integrators can see it working, this should help reinvigorate participation on standards development. This is important because this work isn’t finished, and not all of the capabilities we need are done yet. We must optimize the learning rate of the entire OPA business ecosystem to manage the transformation, and assure its sustained success.”

Likewise, COPA 500 is also being used by one of COPA’s system integrators, Wood (woodplc.com), as a part of its own O-PAS testbed that was launched in October 2024. It includes a couple of DCN types for running applications, such as ASRock’s IPC or Supermicro’s server-class IPC, along with Stahl’s I/O modules, Phoenix Contact’s Ethernet switches and power supplies, Ignition SCADA software, Codesys control software, and CPlane’s orchestration software (Figure 3).

“Our testbed uses IEC 61131 for developing traditional programming and IEC 61499 to adapt to new control paradigms,” says Brad Mozisek, program manager of Wood’s OPA Center of Excellence. “IEC 61131 is for polling-based systems running cyclical operations. It's similar to most PLCs and DCSs, while IEC 61499 is for event-driven processes that only relay data when their values change.”
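The distinction Mozisek describes can be shown with a loose analogy. This is not an implementation of either standard: `cyclic_scan` runs its logic on every scan, as a typical cyclic PLC task does, while `event_driven` runs it only when an input value changes, in the spirit of the event-triggered execution he attributes to IEC 61499. The input sequence and the doubling logic are arbitrary assumptions.

```python
from typing import Callable, List, Optional


def cyclic_scan(inputs: List[int], logic: Callable[[int], int]) -> List[int]:
    """Every scan: read the input, execute the logic, write an output."""
    return [logic(x) for x in inputs]


def event_driven(inputs: List[int], logic: Callable[[int], int]) -> List[int]:
    """Execute the logic only when the input value changes (an 'event')."""
    outputs: List[int] = []
    last: Optional[int] = None
    for x in inputs:
        if x != last:
            outputs.append(logic(x))
            last = x
    return outputs


def double(x: int) -> int:
    """Arbitrary stand-in for control logic."""
    return 2 * x


samples = [1, 1, 1, 2, 2, 3, 3, 3]  # one input value per scan cycle

print(cyclic_scan(samples, double))   # [2, 2, 2, 4, 4, 6, 6, 6]: 8 executions
print(event_driven(samples, double))  # [2, 4, 6]: 3 executions
```

When signals are steady, the event-driven style does far less work and sends far less data; the cost is that the designer must define what counts as an event.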

To help develop and demonstrate small, modular reactor controls, Texas A&M University’s Nuclear Engineering Dept. (engineering.tamu.edu/nuclear) reports it selected COPA 500 with O-PAS as its testbed for electrical heaters. They're adjusted based on control rod positions, which regulate reaction rates in nuclear reactors. These standardized, micro-style reactors operate between 1 kW and 5 MW.

“We were seeking the most appropriate control system, and determined that O-PAS best fit the needs of our research initiatives because it had strong cybersecurity and great adaptability,” says Timothy Triplett, control consultant at TAMU and president of Coherent Technologies Inc. (www.coherent-tech.com). “We picked COPA 500 as the best starting point for our research because O-PAS is standards-based, provides the latest cybersecurity for evolving threats, and supports commercially available components. The standard is also reliable due to its software-defined redundancy, and supports multiple computing layers that users often need for high-data computing tasks. This combination lets the testbed evaluate critical factors for scaling reactors for commercial applications. We can also use it for control via digital twins, and perform rapid iterations with standard tools, such as Codesys, Python and MATLAB software.”

One snag in adopting O-PAS is that it likely requires users to learn some new, possibly unfamiliar, IT-based skills, such as applying software containers, using Linux and related programming, and implementing virtual machine (VM) controls. Since ExxonMobil removed its old DCS from its Baton Rouge plant, DeBari reports that its support staff has been mastering some of these new skills, including:

• Cybersecurity in compliance with the IEC/ISA 62443 standard, including hardening and secure device onboarding; adding certificate authorizations to role-based access controls (RBAC); and deploying a zones-and-conduits network architecture.

• Automating the automation system, with orchestration tools for automating lifecycle tasks; using systemness thinking to introduce new technologies; and employing automated workflows to simplify deployment when bringing systems online. These workflows cover supporting controllers, networks and software, and performing initial configurations, lifecycle maintenance and decommissioning.

• OPC UA for interoperability by communicating between control logic, I/O, HMI and historian functions using a standard information model.

• Advanced computing platform (ACP) as the OT data center, which lets users pick control devices and software with the most suitable size and performance level for the instantiations. The ACP also enables use of hyperconverged infrastructures, VMs, containerized software and Kubernetes, and virtualized, software-based controllers with high availability.

• IT capabilities implemented with OT sensitivities, including real-time Linux, meshed networks, firewall rules and virtual local area networks (VLAN).

Figure 3: System integrator Wood’s O-PAS testbed uses a version of the COPA 500 kit that relies on a couple of DCN types, including ASRock’s IPCs and Supermicro’s server-class IPCs, along with Stahl’s I/O modules, Phoenix Contact’s Ethernet switches and power supplies, Ignition SCADA software, and CPlane’s orchestration software.

“The network uses OPC UA to communicate with everything in the system, and lets us build the most suitable ACP for its process requirements,” adds DeBari. “We’re also using containerized software, Linux and VM controllers ready for time-sensitive networking (TSN). They’re working well, and managing machines, energies, chemistries and process operations. With ExxonMobil’s OPA Lighthouse running successfully, the O-PAS-based automation architecture has gone from being a crazy idea to what’s normal.”

Even though O-PAS already covers many areas, it can still overlap and fit into the larger worlds of digitalization, the Industrial Internet of Things (IIoT) and data analytics.

“We often don’t get the value from 3D scans and models because we don’t know how to store and centralize visual information well enough to make its useful content accessible to operators. If we did, we could develop new ways to work, and scale available technologies to allow more innovation and produce greater value,” says Michael Hotaling, innovation and digital strategy manager at ExxonMobil. “This is similar to the OPA vision that OPAF had when it was formed. We’re all seeking an ecosystem that relays data from open, physical assets to virtual, digital twins. However, this requires standardized interoperability that’s free of custom or proprietary automation, so more vendors can participate, and users can integrate better products with less effort. OPA also preserves each asset owner’s applications and data, and makes sustainability cost-effective.”

Just as drivers expanded their perspective from rearview mirrors to global positioning systems (GPS), Hotaling reports that industrial work processes are moving beyond static models. This begins with taking data from reality, applying integrated engineering, using geospatial awareness to deliver information when and where it’s needed, ensuring interoperability, and accelerating the whole process with artificial intelligence (AI) and machine learning (ML).

“Users must ask if they’re doing digitalization for its own sake, or if they’re using it to improve how they’re working in the field,” explains Hotaling. “The process industry has had a ‘not built by us’ disease, and needs to realize it doesn’t have to reinvent the wheel, so it can give workers what they need, where and when they need it. This transformation can begin by forming organizations like our Open-Asset, Digital-Twin working group. This can help asset owners and suppliers collaborate to separate hardware from software, and separate software from data in conjunction with OPA and digital-twin projects. These groups can also help members deploy agentic AI models in their ecosystems.”

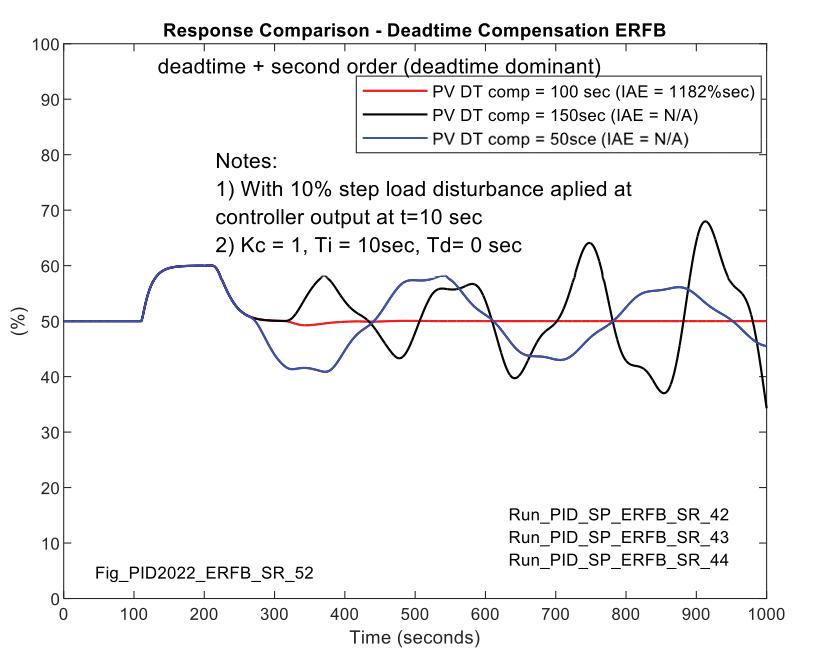

A performance comparison of the Smith Predictor and deadtime-compensated PID

by Peter Morgan and Greg McMillan

In a previous article by the authors, “Deadtime compensation opportunities and realities,” evidence showed that a simple implementation of deadtime compensation improves loop performance over and above what’s achievable with a conventionally implemented proportional-integral-derivative (PID) controller. In that implementation, the PID controller uses filtered positive feedback, and a deadtime is inserted in the feedback signal. This article goes further and challenges the traditional view that the Smith Predictor is the pre-eminent choice for deadtime compensation. It establishes, by analysis, the best theoretical integral absolute error (IAE) for a Smith Predictor that exactly models the process. Then, it compares the performance of the Smith Predictor and a deadtime-compensated controller employing external reset feedback (ERFB).

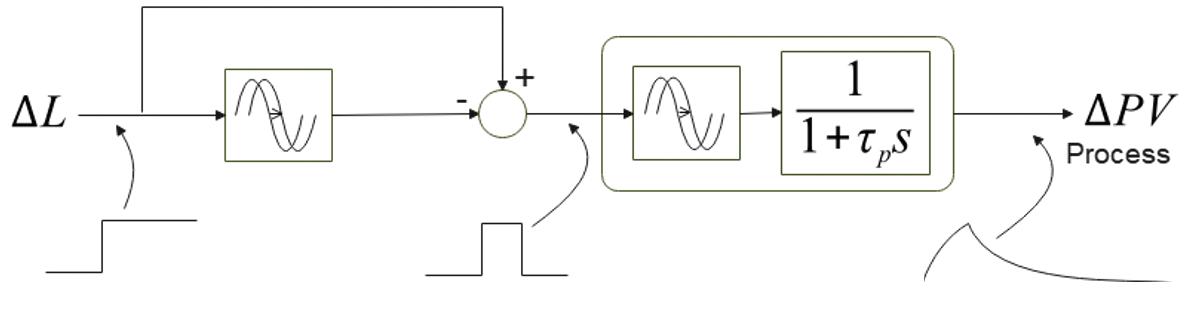

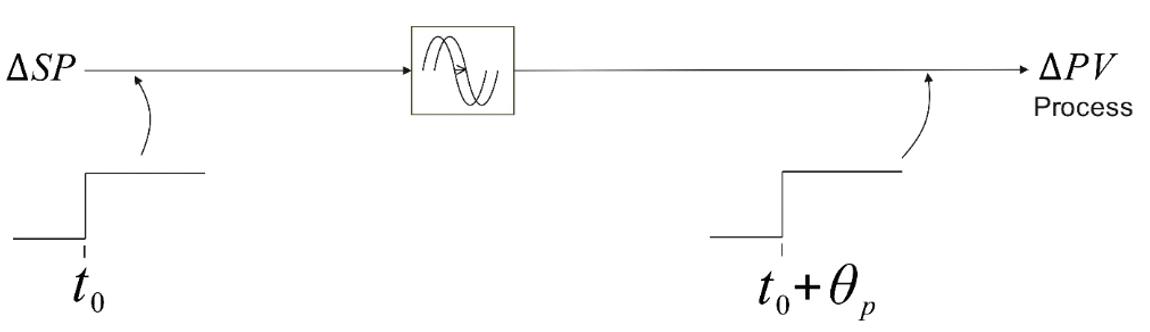

Figure 1 shows the Smith Predictor controlling a self-regulating process with a response characterized by a deadtime followed by a first order lag.

Analysis: Normalized values are assumed, so there’s no need to be specific about units of measurement. A normalized value is obtained by dividing the physical quantity by a base quantity, which for these studies is the load or setpoint change. The normalized quantity, expressed per unit (PU), therefore has no units, whatever the units of the physical quantity (lb/hr, psi, etc.). For emphasis and to avoid confusion, the calculated IAE is expressed in per unit (PU) seconds.

For an unmeasured load disturbance: Referring to Figure 1, when the model and process are exactly matched, a change in controller output affects signals “b” and “c” equally, so there’s no change in signal “a”. This suggests that, for load changes, the Smith Predictor can be represented by Figure 2, which lends itself to a simple analysis. Note that the load change is inserted at the controller output (the input to the process), not at the PV. Inserting the load at the PV is a common assumption in academic studies, and it leads to performance predictions for the Smith Predictor for load changes that aren't achievable in practice.

In Figure 2, when controller gain is high, the transfer function of the PI controller (with the negative feedback inherited from the Smith Predictor as the response of the process without deadtime) reduces to a lead term, 1 + τs, where τ is the process time constant. This lead term cancels the lag term characterizing the process response to the load change. The net result is a correcting change in controller output that matches the load change, but is of opposite sign and delayed by the process deadtime. Figure 3, a distillation of Figure 2, illustrates the action of the Smith Predictor for a load change. Note that the performance with high gain, which might not be achievable in practice due to secondary lags in the process, represents the best theoretical performance. Even if derivative action is added to the controller, the response can’t be improved because of the feedback local to the controller.

Figure 3: Equivalent schematic for Smith Predictor for load changes when process is exactly modeled. Controller gain is high and integral action time matches process time constant.

For the Smith Predictor, for a step load disturbance (Figure 3), the integral error is given by:

Not surprisingly, when the integral of the PV disturbance (integral error) is calculated from the exponential expressions for the process response in the interval before corrective action is taken and the interval of recovery when the corrective action is taken, for a PU change in load, the integral error depends directly, and only, on the process deadtime.
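A compact way to check this result is to solve the loop of Figure 1 for a finite controller gain. The derivation below is a sketch in our own notation, not the authors': θ is the process deadtime, τ the process time constant, K_c the controller gain, the process gain is normalized to 1, the integral action time equals τ, and a unit step load d is added at the process input with SP unchanged. It gives an integral error of θ + τ/K_c, which tends to θ as K_c grows. For the gain of 8 cited later as the load reference case, it gives 30 + 200/8 = 55 sec, which appears consistent with the PU IAE reported at reduced gain. Because d + u never goes negative, the PV deviation never changes sign, so the integral error and the IAE coincide.

```latex
% Assumed notation: G(s) = process without deadtime, C(s) = PI controller with T_i = \tau
G(s) = \frac{1}{1+\tau s}, \qquad C(s) = K_c\,\frac{1+\tau s}{\tau s}, \qquad y = G\,e^{-\theta s}\,(u + d)

% Controller input is -(y + G u - G e^{-\theta s} u) because SP is unchanged
u = -C\,G\left(e^{-\theta s} d + u\right)
\;\Rightarrow\;
u = -\frac{e^{-\theta s}}{1 + (\tau/K_c)\,s}\; d

% Unit step load: net process input d + u is 1 until t = \theta, then decays with time constant \tau/K_c
\int_0^{\infty} y\,dt = \int_0^{\infty} (d+u)\,dt
 = \theta + \int_{\theta}^{\infty} e^{-K_c (t-\theta)/\tau}\,dt
 = \theta + \frac{\tau}{K_c}

% High-gain limit (K_c \to \infty): the correction is a unit step delayed by \theta
\int_{\theta}^{2\theta}\!\left(1 - e^{-(t-\theta)/\tau}\right)dt
 + \int_{2\theta}^{\infty}\!\left(e^{-(t-2\theta)/\tau} - e^{-(t-\theta)/\tau}\right)dt
 = \left[\theta - \tau\left(1 - e^{-\theta/\tau}\right)\right] + \tau\left(1 - e^{-\theta/\tau}\right) = \theta
```

The last line mirrors the two intervals described above: the PV rise before corrective action arrives, and the recovery once it does.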

For a change in setpoint: Figure 4 illustrates the equivalent transfer function block diagram for a setpoint change when the model exactly matches the process, and the controller integral action time equals the process time constant. In this case, for high controller gain, the transfer function of the PI controller with the negative feedback inherited from the Smith Predictor reduces to the lead term 1 + τs (τ being the process time constant). This cancels the process lag, leaving only the deadtime to determine the PV response to a change in setpoint (Figure 5). Note that, since the output of the Smith Predictor is the controller output (and MV), in practice the response of the lead term to the step change in SP is limited in amplitude and can’t completely cancel the process lag. The effect of the controller output limit on achievable IAE is illustrated later.
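Under the same assumptions (our notation: deadtime θ, process time constant τ, controller gain K_c, process gain of 1, integral time equal to τ) and an unlimited controller output, the setpoint case can be sketched the same way. The PV error integral again equals θ + τ/K_c and tends to θ at high gain. The second result shows why the output limit matters: the controller output must initially jump to K_c times the setpoint change before decaying with time constant τ/K_c. With K_c = 8, a 10% setpoint step would call for an initial 80% move. That figure is our inference from the idealized model, not a number from the article.

```latex
% Exact model, no load: the delayed model output cancels the PV in the feedback path
u = C\,(sp - G u) \;\Rightarrow\; \frac{y}{sp} = \frac{C G}{1 + C G}\,e^{-\theta s} = \frac{e^{-\theta s}}{1 + (\tau/K_c)\,s}

% Unit setpoint step: the error is 1 until t = \theta, then decays with time constant \tau/K_c
\int_0^{\infty} (sp - y)\,dt = \theta + \frac{\tau}{K_c} \;\xrightarrow{K_c \to \infty}\; \theta

% Controller output needed for this response (unlimited MV)
\frac{u}{sp} = \frac{C}{1 + C G} = \frac{K_c\,(1+\tau s)}{K_c + \tau s}
\;\Rightarrow\;
u(t) = sp\left[1 + (K_c - 1)\,e^{-K_c t/\tau}\right]
```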

Model results for the Smith Predictor: To directly compare the modeled response with theoretical calculations, the process is first modeled as a deadtime (30 sec) followed by a first-order lag (200 sec), and the Smith Predictor is implemented as shown in Figure 1. This study also shows and discusses the results for the equivalent second-order system plus deadtime, since few systems are adequately represented by a first-order lag plus deadtime. The Series PID form (per ISA 5.9) is adopted and implemented using filtered, positive feedback to replicate the method adopted by a recognized DCS vendor.

Figure 5: Equivalent schematic for Smith Predictor for setpoint changes when process is exactly modeled, controller gain is high and integral action time matches process time constant.

For the Smith Predictor, for a step change in setpoint (Figure 5), the integral error is given by:

Note that the IAE on the plots is reported in percent-seconds (%sec) and is the integral of the absolute error (|pv - sp|), so it depends on the magnitude of the load or setpoint change. For discussion and tabulated comparisons, the per unit (PU) IAE is used instead. PU IAE is the reported IAE divided by the magnitude of the load or setpoint change, and its dimension is seconds.
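The IAE and PU IAE definitions can be sketched in Python as follows, assuming sampled PV and SP records in percent with timestamps in seconds. The trapezoidal rule and the function names are our choices, not the authors' tooling. The trapezoid slightly overstates |error| in any interval where the error changes sign, which a finer sample time reduces.

```python
def iae(times, pv, sp):
    """Integral of |pv - sp| over sampled data by the trapezoidal rule (%sec if pv, sp are in %)."""
    if not (len(times) == len(pv) == len(sp)):
        raise ValueError("times, pv and sp must have the same length")
    total = 0.0
    for i in range(1, len(times)):
        e_prev = abs(pv[i - 1] - sp[i - 1])
        e_curr = abs(pv[i] - sp[i])
        total += 0.5 * (e_prev + e_curr) * (times[i] - times[i - 1])
    return total


def pu_iae(iae_value, step_size):
    """Per unit IAE: reported IAE divided by the load or setpoint change (result in seconds)."""
    return iae_value / abs(step_size)


if __name__ == "__main__":
    # IAE values quoted in the text for a 10% load step: 299 %sec and 550 %sec.
    print(pu_iae(299, 10), pu_iae(550, 10))  # 29.9 55.0
```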

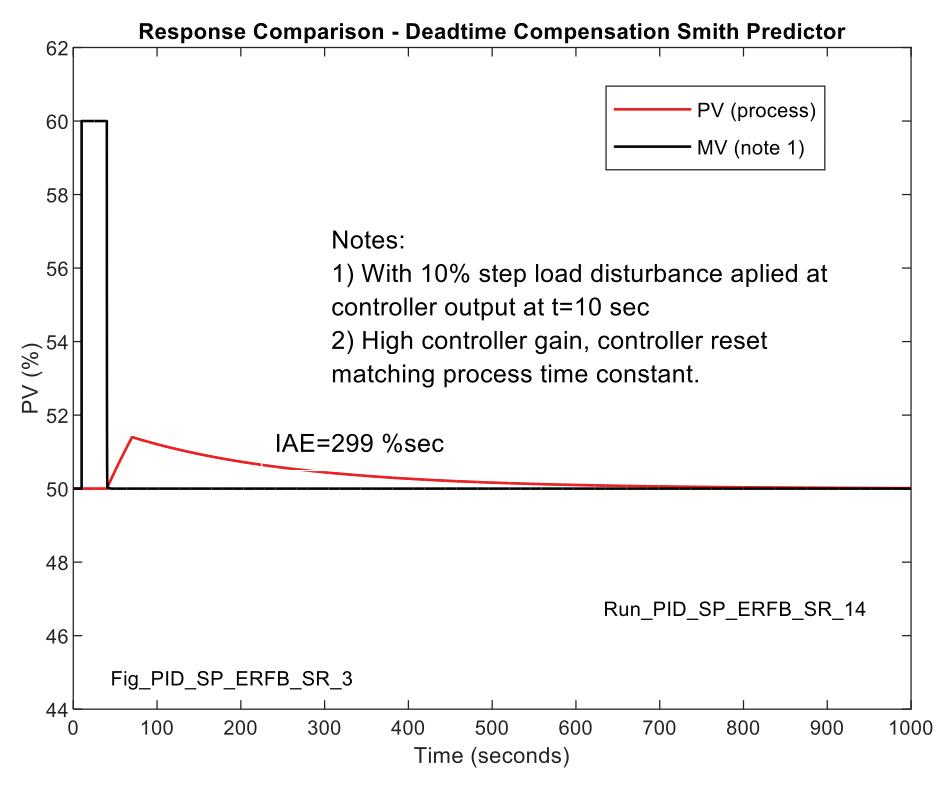

For an unmeasured load disturbance (first-order plus deadtime process approximation): The step disturbance is applied at the controller output, so the manipulated variable first increases by 10%, where it remains until corrected by the controller under the influence of the Smith Predictor.

Figure 6 illustrates the response of the Smith Predictor for a 10% increase in load. In this case, the controller gain is high, so the PU IAE is 29.9 sec (299/10), closely matching the process deadtime (30 sec) and the theoretical IAE. When gain is reduced, which is typically a practical requirement due to higher-order poles in the process, the PU IAE increases to 55 sec (550/10), as shown in Figure 7. This is 1.8 times the best achievable value.
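To see how a Smith Predictor like that in Figure 1 yields numbers of this kind, the Python sketch below simulates one under stated assumptions. The model exactly matches a first-order-plus-deadtime process (gain 1, 200 sec lag, 30 sec deadtime). The PI controller has an integral time equal to the process time constant and is implemented in series form with filtered positive feedback, in the spirit of the article's description but without derivative action. The simulation uses a 0.1 sec step and rectangle-rule IAE, and the load step is added at the controller output. The function name, the sample time and the output limits (expressed as deviations from the initial output) are hypothetical choices, not the authors' model. Because the reset filter sees the limited output, the same function also covers the setpoint and output-limit cases discussed next.

```python
import math
from collections import deque


def smith_predictor_pu_iae(kc, ti=200.0, tau=200.0, theta=30.0, kp=1.0,
                           load=0.0, sp_step=0.0, out_lo=-math.inf,
                           out_hi=math.inf, dt=0.1, t_end=3000.0):
    """Simulate a Smith Predictor around a series PI controller on a FOPDT process.

    Model and process match exactly (gain kp, lag tau, deadtime theta). All signals
    are deviations from the initial steady state. `load` is a step added at the
    controller output (process input); `sp_step` is a step in setpoint.
    Returns PU IAE = IAE / |step size|, in seconds.
    """
    n = round(theta / dt)                     # deadtime in samples
    if n < 1:
        raise ValueError("theta must be at least one sample time")
    step = abs(load) if load else abs(sp_step)
    if step == 0:
        raise ValueError("apply a nonzero load or setpoint step")
    a = math.exp(-dt / tau)                   # exact zero-order-hold pole of the lag
    ai = math.exp(-dt / ti)                   # pole of the positive-feedback reset filter
    p_hist = deque([0.0] * n)                 # process input history, oldest first
    ym_hist = deque([0.0] * n)                # undelayed model output history
    y = ym = f = 0.0                          # PV, model output, reset filter output
    iae = 0.0
    for _ in range(round(t_end / dt)):
        fb = y + ym - ym_hist[0]              # PV + (undelayed - delayed) model output
        e = sp_step - fb
        u = min(max(kc * e + f, out_lo), out_hi)  # PI: gain term plus filtered feedback
        f = ai * f + (1.0 - ai) * u           # reset filter fed the *limited* output
        iae += abs(sp_step - y) * dt          # rectangle-rule IAE on the real PV
        p_hist.append(u + load)               # process: deadtime, then first-order lag
        y = a * y + (1.0 - a) * kp * p_hist.popleft()
        ym_hist.append(ym)                    # model: first-order lag without deadtime
        ym_hist.popleft()
        ym = a * ym + (1.0 - a) * kp * u
    return iae / step


if __name__ == "__main__":
    for kc in (8, 100):
        pu = smith_predictor_pu_iae(kc, load=10.0)
        print(f"10% load step, kc={kc}: PU IAE = {pu:.1f} sec")
```

Under these assumptions, a 10% load step should give roughly 55 sec with `kc=8` and roughly 32 sec with `kc=100`, in line with 30 + 200/kc for this idealized loop. With `kc=8`, an unlimited setpoint step also lands near 55 sec, and a hypothetical upper output limit of 15 (deviation units) makes the setpoint IAE markedly larger. Increasing the gain further pushes the load result toward the 30 sec deadtime.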

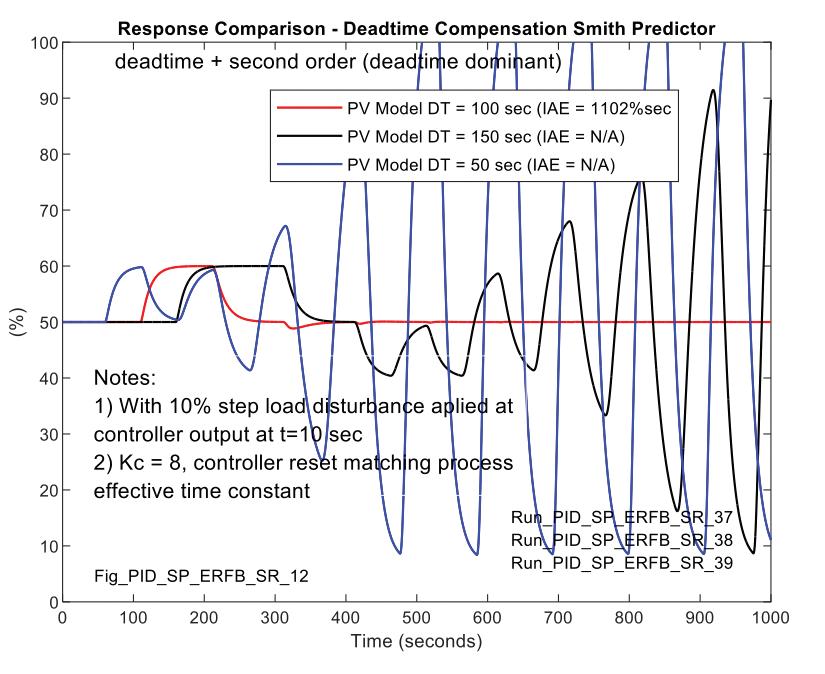

For an unmeasured load disturbance (second-order plus deadtime process approximation): In this case, the process is modeled as a deadtime equivalent to the actual process transport delay, plus second-order interactive lags to better represent a typical process, for instance, a shell/tube heat exchanger. For equivalence to the deadtime plus first-order lag approximation, the second-order process model has an actual deadtime of 16 sec and lags of 160 sec and 25 sec. Figure 8 illustrates the response for the second-order process, and includes the response for the first-order plus deadtime approximation for comparison. Note that the first-order approximation includes the apparent deadtime assessed in approximating the second-order response, so its total deadtime is larger. Notably, when the Smith Predictor uses the deadtime plus first-order lag approximation of the controlled second-order process, the IAE is close to that obtainable when the Smith Predictor model and process exactly match. It’s worth noting that with a second-order process, the theoretical lowest achievable IAE (for a first-order process) can’t be achieved by increasing the gain, and low-level oscillation occurs with high gain.

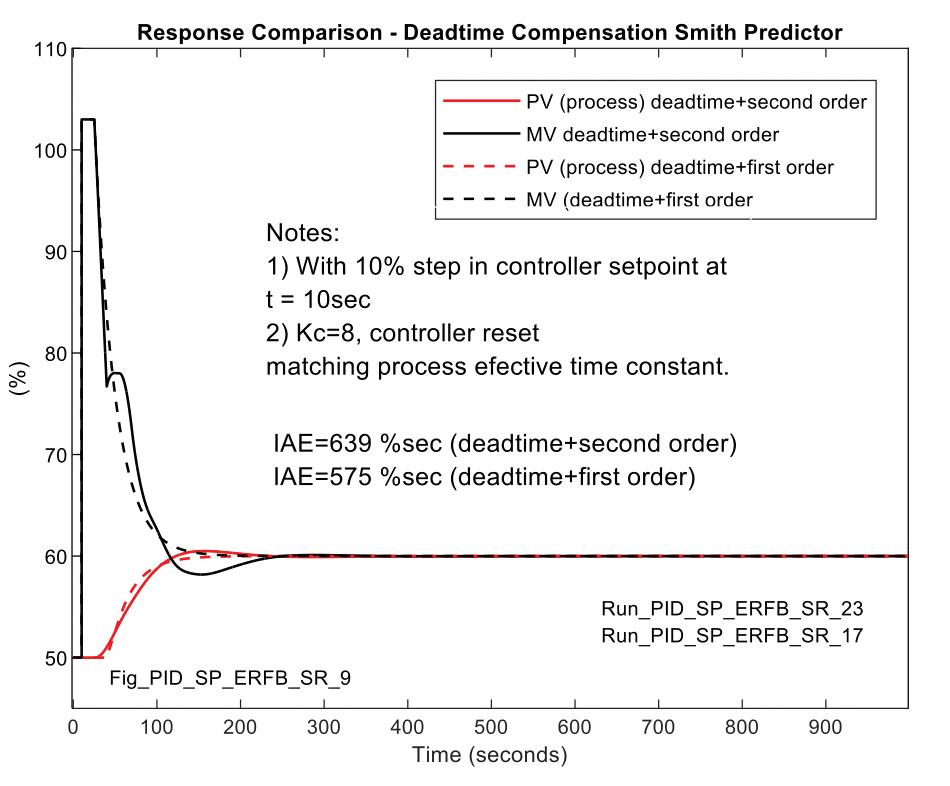

For a setpoint change (first-order plus deadtime process approximation): The theoretical best performance (minimum IAE) is only achievable if the controller output and the MV (for instance, the valve) have no limit. This is never the case in practice, but it’s allowed here to demonstrate the alignment between the model results and the theoretical behavior of the Smith Predictor. With this practicality ignored, the model predicts that the step in setpoint is followed by a step in PV one process deadtime later, and that the PU IAE is 30.2 sec (302/10), very nearly matching the process deadtime. Recognizable in Figure 9 is the proportional plus derivative action that characterizes the MV as the leading response to the setpoint change shown in Figure 4.

Figure 10 shows the response to a 10% setpoint change with the Smith Predictor's process model matching the process, but with the controller output limited (to 103%) and the gain reduced to a more practical value, in this case 8 (the same as the reference case for a load disturbance). The PU IAE increases to 57.5 sec (575/10), which is approximately the same as for the load disturbance.