Instruction list is no longer part of IEC 61131-3, but ladder diagram, structured text, sequential function chart and function block diagram are being explored in new ways


cover story

PLC programming expands its universe

Instruction list is no longer part of IEC 61131-3, but ladder diagram, structured text, sequential function chart and function block diagram are being explored in new ways

Anna Townshend, managing editor

machine input

How are PLC R&D teams affected by SDA?

Protocol and standard adaptability makes software-defined automation impervious to obsolescence

Mike Bacidore, editor in chief

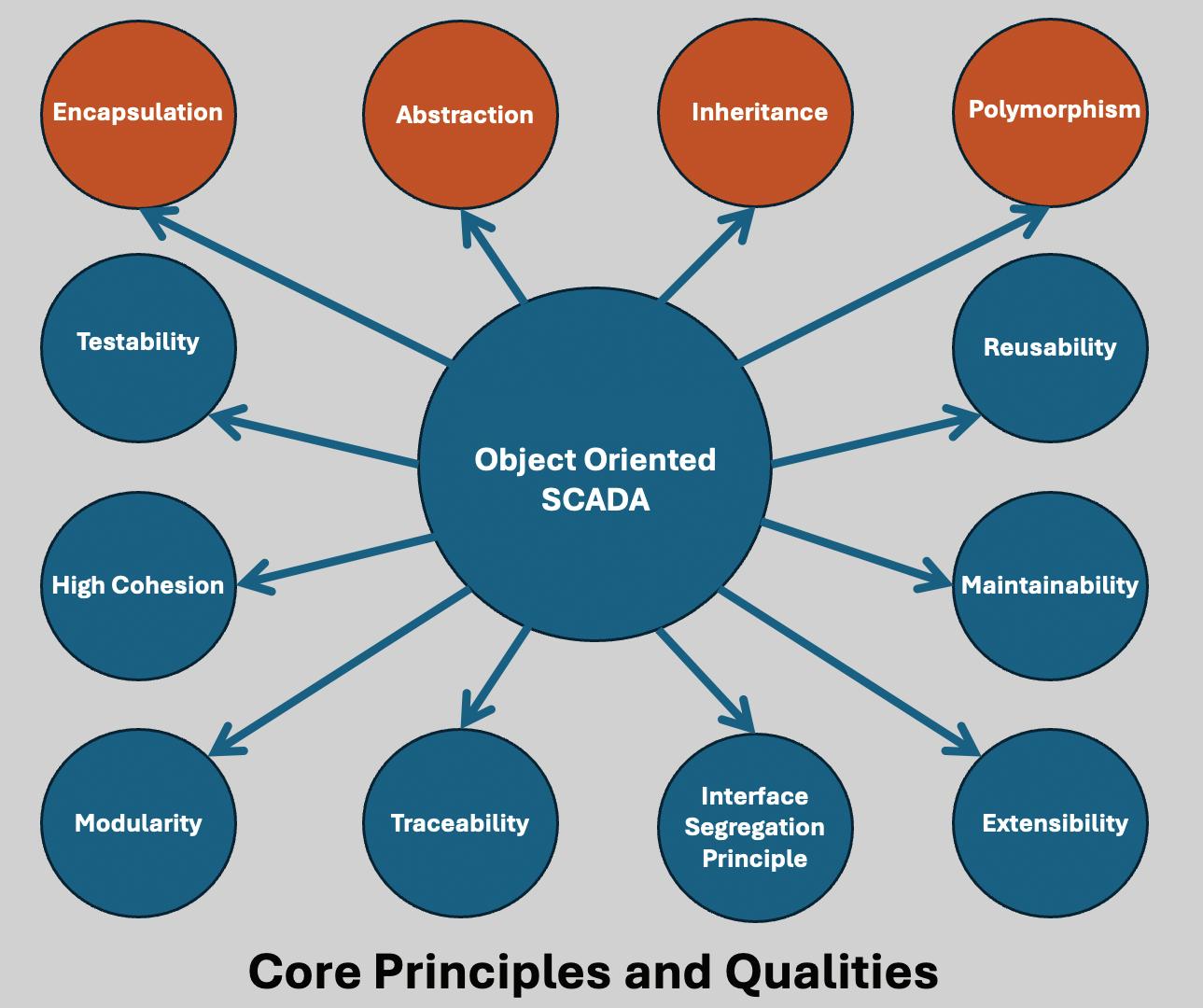

SCADA From spaghetti to a structured blueprint How object-oriented SCADA enables scalable, replicable industrial control systems

Nestor Arria, Disruptive Automation Solutions

product roundup Motors, motion, movement and more Components and devices to drive actuation

Endeavor Business Media, LLC

30 Burton Hills Blvd, Ste. 185, Nashville, TN 37215

800-547-7377

CEO

Chris Ferrell

COO

Patrick Rains

CDO

Jacquie Niemiec

CALO

Tracy Kane

CMO

Amanda Landsaw

EVP Manufacturing & Engineering Group

Lisa Paonessa

VP Corporate Content

Travis Hessman

VP Content Strategy Manufacturing & Engineering Group

Robert Schoenberger

Group Editorial Director

Keith Larson

editorial team

editor in chief

Mike Bacidore

mbacidore@endeavorb2b.com

managing editor

Anna Townshend

atownshend@endeavorb2b.com

digital editor

Madison Ratcliff

mratcliff@endeavorb2b.com

contributing editor

Rick Rice

rcrice.us@gmail.com

contributing editor

Joey Stubbs

contributing editor

Tobey Strauch

tobeylstrauch@gmail.com

contributing editor

Charles Palmer

charles101143@gmail.com

columnist

Jeremy Pollard

jpollard@tsuonline.com

design/production

production manager

Rita Fitzgerald

rfitzgerald@endeavorb2b.com

ad services manager

Jennifer George

jgeorge@endeavorb2b.com

art director

Derek Chamberlain

subscriptions

Local: 847-559-7598 • Toll free: 877-382-9187

email: ControlDesign@omeda.com

sales team

Account Manager

Greg Zamin gzamin@endeavorb2b.com

704/256-5433 Fax: 704/256-5434

Account Manager

Jeff Mylin jmylin@endeavorb2b.com

847/516-5879 Fax: 630/625-1124

Rick Rice, contributing editor

component considerations

Make smarter drive choices for equipment

Tobey Strauch, contributing editor

automation basics

Charles Palmer, contributing editor

Joey Stubbs, contributing editor


Mike Bacidore editor in chief mbacidore@endeavorb2b.com

MITSUBISHI ELECTRIC IS READY to put a ring on it. Its collaboration with Nozomi Networks, which specializes in cybersecurity for OT, Internet of Things (IoT) and cyber-physical systems, has been expanding for well over a year.

Now, it’s official. Nozomi Networks will become a wholly owned subsidiary, operating independently of Mitsubishi Electric. The acquisition is designed to strengthen Mitsubishi Electric’s Serendie-related business, which includes OT security. Serendie is a digital platform designed to foster serendipitous data, human and technology encounters. Its name is coined from the combination of serendipity and digital engineering.

“We’ll be implementing Nozomi’s business assets, such as SaaS products, cloud-service platform and AI technology to strengthen Serendie business quality,” said Satoshi Takeda, Mitsubishi Electric senior vice president, CDO, chief information officer and board member. “We will place Nozomi’s OT security technology into our components, as well. An example is in the sequencer, equipped with intrusion detection sensor, which was launched in September 2024 to enforce edge security.”

Mitsubishi Electric’s MELSEC iQ-R programmable logic controllers (PLCs), as well as the field assets these PLCs control, down to levels 1 and 0 in the Purdue Model, include Nozomi Arc Embedded, which provides real-time visibility of internal operations. The data collected by Arc Embedded enhances anomaly and threat detection.

“The biggest aim will be co-creating new services by utilizing data,” added Takeda. “Nozomi’s solution does not just strengthen security, but will enable data collection in the overall OT arena. This is an area where various components from different vendors operate in data collection. Origins or meanings of the data were often missing. There were issues at the time of analysis and utilization of data. By using Nozomi data collection technology, we’ll be able to get rich data from the site. Through our Serendie, we will fully utilize this data to create a new service, along with our customers.”

Nozomi technology is being used in 75 countries with a customer base of 1,000 companies, not just in manufacturing, but in building and infrastructure, said Takeda.

In the industrial automation area, multiple suppliers have collaborated with Nozomi. Yokogawa began offering Nozomi’s OT visibility and threat detection to its OpreX Managed Services customers in 2024, and in 2025 Nozomi announced its Arc Embedded was embedded in Schneider Electric’s SCADAPack 47xi smart remote terminal units (RTUs).

Mitsubishi Electric participated in Nozomi’s $100 million Series E funding round in March 2024, and the two companies have collaborated on innovation ever since.

“Mitsubishi Electric purchasing Nozomi Networks signals that OT/IoT capabilities are being valued in the cybersecurity arena, data services and with artificial intelligence,” said Tobey Strauch, a controls engineer and a contributing editor to Control Design who wrote about the deepening relationship between Mitsubishi Electric and Nozomi Networks more than a year ago. “When traditional hardware companies are expanding OT interface capacity, then you know the importance of data, even in the OT environment. Every machine will be connected. Companies are profiting by doing so.”

The acquisition of Nozomi Networks brings an AI-powered, cloud-first cybersecurity software business with scalability to Mitsubishi Electric.

How will the Nozomi technology in other suppliers’ components be supported, and how will the fruits of these serendipitous data encounters be shared? Stay tuned. Exciting times are ahead, as AI and cybersecurity move deeper into the manufacturing space.

Jeremy Pollard jpollard@tsuonline.com

INDUSTRIAL ETHERNET IS the same as commercial Ethernet, except for the hardware, which has been hardened for the factory floor. TCP/IP is widely used, along with specific protocols such as Ethernet/IP and Modbus/TCP.

It has become a de facto standard in plants and machines for interconnecting systems and in fact individual devices. So, what happens when it fails? What would make it fail?

I read a post on LinkedIn that went something like this: The plant warehouse management system (WMS) stopped communicating with the programmable logic controller (PLC) that had all the connected sensors attached to it. The first step in troubleshooting this issue was to check the PLC code, wrote the author.

Evidently the PLC code checked out. Everyone commented that it’s the WMS that isn’t communicating.

The next frame on the graphic shows the Ethernet connection, and funnily enough it shows a failed diagnostic light. The resulting reboot of the WMS fixed the problem.

It probably was a Windows-based computer running the WMS, which leads us to a probable option of the PLC driver going off-line. The diagnostic failure light on the switch, router or PLC indicates that there was a network failure of some sort. It wasn’t clear as to where it was.

So, a review of the systemic information should have taken minutes and not the 13 hours the author suggests it took to find and fix the issue.

What if there was no failure light? How do we troubleshoot network issues, and what tools do we have to help?

There may be helpful displays on the PLC itself, or maybe not. A network mapping tool would be helpful which would show the user what devices are successfully connected to the network and in the same subnet. The IP address range for areas of the plant and/or machine typically will be the same and typically connected to the same group of switches.

The mapping tool will tell you if a node is off-line and not responding. If the link is alive and well, then the issue would typically be a software or message-framing issue.

Step one is to confirm cabling on a network computer with the ping command using the Internet Control Message Protocol (ICMP). This tests the physical cable, as well as the integrity of both ends of the message route. A positive ping response suggests that the link is alive and responsive at both ends.

If the ping command fails, it suggests that one of the nodes is disconnected from the network. Most if not all Ethernet ports have two indicating lights: one that blinks with activity and a second LED that indicates the link connectivity and the speed at which it is connected. Note that, for 10 Mbps, that light will be off, so it’s important to know the configuration.

Ethernet modules check for situations such as jitter, which is, in a word, inconsistency. This can be due to many things such as network congestion, which can happen when an Ethernet module or card goes rogue. This typically suggests that a piece of hardware is intermittently failing.

This is not an easy find, however, and may require a deep dive into the network.
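One way to put a number on that inconsistency is to look at how much the round-trip time changes from one message to the next. The following is a minimal sketch, assuming you already have a list of round-trip times in milliseconds from a ping log or a protocol monitor. The function names, the sample values and the 2 ms limit are illustrative assumptions, not values from any particular Ethernet module.

```python
from statistics import mean


def mean_jitter_ms(rtt_samples_ms):
    """Average absolute change between consecutive round-trip times."""
    if len(rtt_samples_ms) < 2:
        return 0.0
    deltas = [abs(later - earlier)
              for earlier, later in zip(rtt_samples_ms, rtt_samples_ms[1:])]
    return mean(deltas)


def is_inconsistent(rtt_samples_ms, limit_ms=2.0):
    """Flag a link whose timing varies more than the assumed limit."""
    return mean_jitter_ms(rtt_samples_ms) > limit_ms


# Hypothetical samples: a steady link and one with an intermittent problem.
steady = [1.0, 1.1, 1.0, 1.2, 1.1]
erratic = [1.0, 9.0, 1.5, 14.0, 2.0]

print(is_inconsistent(steady))
print(is_inconsistent(erratic))
```

A link can have a perfectly acceptable average response time and still fail this check, which is why an intermittent hardware fault tends to show up as jitter before it shows up as a dropped connection.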

There have been times when a port on a switch drops the connection, and a power cycle was required to bring it back online. With a Windows computer and application, a restart is successful some of the time.

In the above case, that is what restarted the communication between the WMS and the PLC, and the user had the benefit of a diagnostic LED to aid in the troubleshooting.

In the event of not having that periscope into the network, tools such as protocol monitoring software may be needed to find a rogue node.

If the systems are connected to a managed switch, there are various interfaces that a user can use to monitor each node and view the configuration.

There are various ways one can troubleshoot a communication issue within a subnet, and activity lights and link connectivity lights would be the first step. Specific hardware device status lights may be present.
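The ping-first approach described above can be scripted so that every expected node in a subnet is checked in one pass. This is a rough sketch, assuming the operating system's own `ping` command is available and that you keep a list of the addresses that should be present. The subnet and host addresses shown are made up for illustration, and the `probe` argument exists only so the sweep can be exercised without a live network.

```python
import ipaddress
import platform
import subprocess


def ping_command(host, system=None):
    """Build a one-shot ping command for the local operating system."""
    system = system or platform.system()
    count_flag = "-n" if system == "Windows" else "-c"
    return ["ping", count_flag, "1", str(host)]


def ping(host):
    """Return True if the ping command reports success.

    Assumption: exit code 0 means a reply arrived. Some systems also
    return 0 for an "unreachable" reply, so treat this as a first check.
    """
    result = subprocess.run(ping_command(host),
                            stdout=subprocess.DEVNULL,
                            stderr=subprocess.DEVNULL)
    return result.returncode == 0


def sweep(subnet, expected_hosts, probe=ping):
    """Split the expected nodes into responding and silent lists."""
    network = ipaddress.ip_network(subnet)
    online, offline = [], []
    for host in expected_hosts:
        address = ipaddress.ip_address(host)
        if address not in network:
            raise ValueError(f"{host} is outside {subnet}")
        (online if probe(address) else offline).append(str(address))
    return online, offline


# Hypothetical machine subnet; the fake probe stands in for real pings.
fake_probe = lambda address: str(address) != "192.168.10.20"
online, offline = sweep("192.168.10.0/24",
                        ["192.168.10.10", "192.168.10.20"],
                        probe=fake_probe)
print("responding:", online)
print("silent:", offline)
```

A node that lands in the silent list points to cabling, a switch port or a powered-down device. A node that responds but still does not exchange data points toward the software or message-framing side.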

JEREMY POLLARD, CET, has been writing about technology and software issues for many years. Pollard has been involved in control system programming and training for more than 25 years.

Rick Rice contributing editor rcrice.us@gmail.com

IT’S HARD TO ADMIT IT, but perhaps we do get more complacent with time and resistant to, perhaps ignorant of, change. After nearly four decades in this compelling industry of controls and automation, a lot of knowledge and experience is crammed into this old brain of mine. I have always prided myself on keeping up with technology, and I am always scanning the myriad emails I get each week extolling the virtues of the next greatest thing from my favorite vendors.

After the scramble around the pandemic to get parts to complete controls projects, I would have to say that I am significantly more open to considering products that wouldn’t have been on my radar even months before. With all the buzz around tariffs now, I wonder if we are, once again, going to find ourselves opening up our choices to the realm of possibilities from previously unrecognized sources.

As it happens, this is a very busy time at my place of employment. We currently have four major capital projects on the go with startups scheduled for January through September of next year. These are exciting times for our controls team as the projects are new production lines and heavily automated.

Building structures are being modified. New utilities are being deployed to service the production space. Integrating all the various parts is a huge endeavor that is elevated by the fact that we have multiple projects on the go at the same time.

We are a small group, and, like many small companies or integrators out there, we rise to the challenge when a bigger project comes in. We have great successes in our past endeavors and try to do each project better than the one before. An essential part of this is to be aware of advances in technology and utilize them in our designs when they make sense.

A great example of technology helping a design came in the form of a newer generation of programmable automation controller (PAC) from our favorite hardware supplier. This new generation has been out for nearly two years now, but, aside from finding them in some vendor-supplied equipment, we really haven’t taken a close look at the product because our existing generation of PAC is still actively available, and it does everything that we’ve asked it to do.

Our interaction with the newer generation came in the form of simply having some bits to exchange back and forth with our line control panel so we could tie all the various unit ops together and do some data collection for our OEE software package.

At first glance, the newer PAC looks mostly like a cosmetic change to what I would call more of a European look with removable spring-load terminals. However, the real changes are inside the package.

Compliance with the IEC 61131-3 programming standard necessitated a change in some of the traditional ladder-logic instruction mnemonics. For newer programmers who are accustomed to clicking an icon to insert an element into a software design, this change might not even get noticed. However, for those of us who have been around for more than 20 years or so, this change is quite uncomfortable.

For example, the traditional GRT (Greater Than) is now GT. LES (Less Than) is now LT. These might seem like ridiculously insignificant changes, but for those of us who have programmed by entering text on a line, the change is like learning to speak French when all you know is English, but having to do it in a few hours.

Fortunately, or perhaps less fortunately, the vendor provides an “automated” way of upgrading a software application that was created in a previous firmware revision to this later hardware and firmware. The surprise was that the transition forward converts most of the application but flags the “old” instructions as not being recognized, instead of just going ahead and updating them.

One larger program that I recently migrated to the new firmware/hardware resulted in nearly 1,000 instances of an instruction that I had to manually navigate to and convert to the new instruction.
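For a sense of what that manual conversion amounts to, here is a minimal sketch of the renaming step, assuming the logic can be exported to plain text. Only the two renames mentioned above (GRT to GT and LES to LT) are included. The rung text format is hypothetical, and this is not the vendor's migration tool.

```python
import re

# Only the renames named in the text; a real migration would need the
# vendor's full list.
RENAMED = {"GRT": "GT", "LES": "LT"}

# Match a mnemonic only when it stands alone as a whole word, so tag
# names that happen to contain the same letters are left untouched.
_PATTERN = re.compile(r"\b(" + "|".join(RENAMED) + r")\b")


def convert_rung(rung_text):
    """Return the converted rung text and the number of replacements."""
    return _PATTERN.subn(lambda match: RENAMED[match.group(1)], rung_text)


# Hypothetical exported rung.
rung = "XIC Start GRT Level 50 LES Temp 80 OTE Pump"
converted, count = convert_rung(rung)
print(converted)
print(count, "instructions renamed")
```

Returning the replacement count alongside the text makes it easy to total the changes across a program and compare that figure with the number of instructions the upgrade tool flagged.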

Another surprise was the dropping of some traditional instructions and changing them over to function block (FB). One example is the SCL (Scale) instruction. For someone without previous experience with FB, this would be a very big surprise. Further bumps in the road happen if your software platform is a legacy version (for me, that was just 14 years ago) where FB, structured text (ST), instruction list (IL) and sequential function chart (SFC) were not part of the original hardware platform but were added in the ensuing years. That surprise resulted in having to purchase further licenses in order to include that feature in our base of installed software development laptops. This isn’t as important if you are just troubleshooting but hugely important if you are developing new code.

In our usual control design, we pick our favorite model of PAC with the favored firmware level installed and then include a safety relay in the design. The hardware vendor makes that part even nicer by having an interface module that connects the PAC to the safety relay using Ethernet/IP. It turns out most of the safety relays can communicate across an optical bus that is already built in. Just add the comms module, and you can get status from the channels and nodes on the relay plus have control over the outputs on things like door latches.

This brings us to a big feature in our newer PAC platform—the ability to include a safety processor with the logic processor. For sure, this is not that new of a feature but if you are happily building controls using the now mature generation of PAC, the thought would not even come to mind. In fact, our experiences, thus far, with the new generation of PAC did not expose us to the embedded safety controller.

During the process of working out the integration for our newest production line, we learned that several of our equipment vendors will be providing the dual-processor PAC in their designs. Curiosity, they say, kills the cat and there we were diving down the rabbit hole to see what all the fuss was about. To find out that our hardware vendor is offering safety and non-safety PACs at the same price pretty much sealed the deal for us.

The financial gain by buying a PAC with a safety controller included was significant. The comms module and safety relay are fairly expensive on their own. Taking that cost out of the design was a bonus, as was being able to use the same safety devices that we were using previously. There was no reason not to change.

When using a dual-processor PAC, one adds slices of input or output modules that are specifically for safety. They are even colored red to differentiate from normal I/O modules. The slices only come in eight-input or -output versions because the safety devices are two-channel. If desired, we can use a field-mounted interface module that communicates on Ethernet/IP with the safety controller.

Where one might use the IO-Link type safety network and nodes with the separate safety relay, the field interface module provides a means of connecting the M12 terminated safety channel directly to the module and, thus, to the safety controller in the PAC.

Programming with a safety controller is less complicated as we simply wire up each of the safety devices to a channel on the safety I/O module and use software to create the combinations of inputs that will produce an outcome on a channel on the safety output module. With the safety controller being part of the PAC, we also have a direct connection with the safety outputs that form the dual-channel Safe Torque Off (STO) feature on our servos and variable-frequency drives (VFDs).
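The idea of combining two-channel inputs into a single outcome can be illustrated in a few lines. This is only a sketch of the logic, assuming each device reports two channels that are both closed when it is safe. The device names and functions are hypothetical, and real safety logic runs in the certified safety controller and its programming environment, not in code like this.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DualChannelInput:
    """One safety device wired to two input channels."""
    name: str
    channel_a: bool
    channel_b: bool

    @property
    def healthy(self):
        # Safe only when both channels are closed.
        return self.channel_a and self.channel_b

    @property
    def discrepancy(self):
        # Channels that disagree suggest a wiring or device fault.
        return self.channel_a != self.channel_b


def drive_enable_permitted(devices):
    """Allow torque only if every device has both channels closed."""
    return all(device.healthy for device in devices)


# Hypothetical zone with an e-stop and a guard door.
zone = [
    DualChannelInput("e_stop", True, True),
    DualChannelInput("guard_door", True, False),
]

print(drive_enable_permitted(zone))
print([device.name for device in zone if device.discrepancy])
```

In this example the guard door's channels disagree, so the enable is withheld and the door is reported as the device to inspect.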

The only caveat that we found in our experience with the PAC/safety controller was that our programming software, yet again, didn’t have the safety programming module enabled on it, and, yet again, we were back to the vendor to buy licenses for the computers that will be developing code for the safety side of things.

There is a price, it seems, for hanging on to legacy hardware and software for too long, even if it is still available from the hardware vendor.

Teething issues aside, working with the PAC/safety controller has been an eye-opening experience for us. With six out of the 10 units of operation on our new production line coming with the dual processors, we decided to jump into the fray and designate one of these newfangled things for our main-line control cabinet. The ability to program both the main control algorithm, as well as the safety zones and devices of our line, with the same single point of connection and program application has us placing a whole new emphasis on this approach as a default direction for us in future projects.

With three other production lines going in within the next nine to 12 months, we anticipate some good practical experience to go with our decided path. We already love it, and we don’t even have the panel built yet. The off-line design and development of the software application is already seeing a savings in time and money.

RICK RICE is a controls engineer at Crest Foods (www.crestfoods.com), a dry-foods manufacturing and packaging company in Ashton, Illinois.

Tobey Strauch contributing editor tobeylstrauch@gmail.com

IN A WORLD OF BLACK-BOX MAINTENANCE and increased prices, where time is precious, how do you know what kind of drive to choose for your application? Choices and brands are abundant, but choosing a drive can be difficult or confusing. For the sake of simplicity, let’s consider drives supporting motors under 70 hp and simple inductive ac motors. Things to keep in mind:

1. Application requirements: How do you want to control the function of the motor? Speed, torque, precision? It’s good to know what you are moving, how fast and how much error you can tolerate.

2. Environment: Is the environment harsh, wet, oily, dry, hot? Ruggedized electronics will be needed in specific cases.

3. Safety: What features do you want the safety to have? Safe torque off is a go-to for most environments and applications.

4. Control: How many inputs can the drive take? Is there an option to program the inputs without a programmable logic controller (PLC)? How does the PLC need to interface? Do you need to buy software to program the drive, or can you do so from the PLC, or is there an interface?

5. Communication: It’s imperative to get a drive that fits the communication architecture and that can provide feedback for control. This means support for networks such as Ethernet, Profinet, Modbus and EtherCAT.

When purchasing, it’s good to understand the availability, lead times and the cost of ownership. Many maintenance departments want to standardize on a brand due to company preference and engineer/technician comfort. Familiarity sells. There can be a dependence on who the plant sees the most often, as far as field support. Industries also have their favorites based on the history of what automation brand supported that industry from its inception.

Most of the drives supporting the lower hp market are going to be similar. Things to consider at that point are price, integrability with the PLC platform, reliability and trainability. Keep in mind also that, just because one may grab the 1,000 parameters on a drive from the Ethernet, it does not mean that you should. Basic motor parameters may be stored and loaded from the PLC. This includes motor voltage, motor current, motor frequency, motor speed, motor power or kilowatt rating and motor type.

Control parameters can be tabled with acceleration time, deceleration time, minimum and maximum speed boundaries in revolutions, Hertz or some unit derived thereof, stop/start mode and control mode.

Input and output parameters are discrete for start, stop, forward, reverse, analog for speed reference and torque feedback. Relay outputs may have status signals like running or drive fault. Analog outputs include current and speed feedback to validate that the drive spun up to the command given. Communication settings might be monitored for the purpose of validating that the drive is on the network. The PLC should throw an alarm if it does not see the hardware. Network addresses could be assigned at the switch or in the PLC to allow for hot swaps without having to program the address. Protocol selection is a parameter that is important.
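The two checks described here, that the drive is visible on the network and that it actually reached the commanded speed, can be sketched as a small alarm routine. This is an illustration only, assuming the PLC already has an online flag, a fault flag and speed values in hertz. The alarm names and the 0.5 Hz tolerance are made-up placeholders.

```python
def speed_feedback_ok(command_hz, feedback_hz, tolerance_hz=0.5):
    """True when the feedback is within the assumed tolerance of the command."""
    return abs(command_hz - feedback_hz) <= tolerance_hz


def drive_alarms(online, faulted, command_hz, feedback_hz):
    """Return the list of alarms the controller should raise for one drive."""
    if not online:
        # Without communication, the other values cannot be trusted.
        return ["DRIVE_NOT_ON_NETWORK"]
    alarms = []
    if faulted:
        alarms.append("DRIVE_FAULT")
    if not speed_feedback_ok(command_hz, feedback_hz):
        alarms.append("SPEED_MISMATCH")
    return alarms


# Hypothetical readings for three situations.
print(drive_alarms(online=False, faulted=False, command_hz=60.0, feedback_hz=0.0))
print(drive_alarms(online=True, faulted=False, command_hz=60.0, feedback_hz=59.8))
print(drive_alarms(online=True, faulted=True, command_hz=60.0, feedback_hz=40.0))
```

Checking the network status first keeps stale feedback values from producing misleading speed alarms when the real problem is a lost connection.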

Overload and protection settings may be monitored by the PLC and human-machine interface (HMI) for the purpose of drive health. This would include overcurrent, temperatures and overspeed, with voltage and frequency limits.

Specialized feedback may be required for tuning or proportional–integral–derivative (PID) settings. This includes auto-tune capacity and torque boost. Specialized parameters would depend on the application and would be subject to maintenance accessibility. Sometimes customers want these on the HMI display.

Keeping a table of parameters for each drive and knowing where the drive stores them will allow for reading and writing parameters in specific modes or when a status changes so that traffic from drives may be limited. Better yet, if drives are put on their own subnet, then drive traffic can be isolated from process and not cause other issues.
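A parameter table of this kind can be as simple as one record per drive plus a comparison that writes only what has changed. The sketch below assumes a handful of the motor and control parameters listed earlier. The field names and example values are hypothetical, and the actual read/write mechanism depends on the drive and network in use.

```python
from dataclasses import asdict, dataclass, replace


@dataclass(frozen=True)
class DriveParameters:
    """A small subset of the table kept for one drive."""
    motor_voltage_v: float
    motor_current_a: float
    motor_frequency_hz: float
    motor_speed_rpm: float
    motor_power_kw: float
    accel_time_s: float
    decel_time_s: float
    min_speed_hz: float
    max_speed_hz: float


def pending_writes(stored, desired):
    """Return only the parameters whose values differ from what is stored."""
    current = asdict(stored)
    return {name: value
            for name, value in asdict(desired).items()
            if current[name] != value}


# Hypothetical nameplate and control values for one drive.
stored = DriveParameters(460.0, 14.0, 60.0, 1750.0, 7.5, 5.0, 5.0, 10.0, 60.0)
desired = replace(stored, accel_time_s=8.0)

print(pending_writes(stored, desired))
```

Sending one changed value instead of the whole table is what keeps drive traffic low, and the same table doubles as the record to reload after a hot swap.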

Tobey Strauch is an independent principal industrial controls engineer.

Charles Palmer contributing editor

THE LANDSCAPE OF INDUSTRY is undergoing a profound transformation, largely driven by the increasing integration of robotics. Once confined to the realm of science fiction, industrial robots are now an indispensable part of manufacturing and production processes worldwide. This shift is not merely about automation; it represents a paradigm change in how goods are produced, supply chains are managed and human labor interacts with advanced machinery.

Industrial robots are deployed across a vast array of applications, revolutionizing production processes in numerous industries. Their versatility allows them to perform tasks ranging from repetitive, high-volume operations to intricate, precision-demanding procedures. The manufacturing sector, particularly automotive, electronics and metal industries, has been at the forefront of adopting robotic solutions.

The choice of robot type depends on the specific application, required precision, payload capacity and reach.

The integration of industrial robots offers a multitude of benefits that significantly impact productivity, quality, safety and cost-efficiency in manufacturing and other industrial sectors. These advantages are driving the widespread adoption of robotics globally.

Robots can operate continuously without fatigue, breaks or performance degradation, leading to significantly higher throughput and production rates. They perform repetitive tasks with consistent speed and accuracy, reducing cycle times and optimizing the overall manufacturing process. This continuous operation maximizes machine utilization and streamlines workflows, resulting in increased output and improved efficiency.

Another advantage of industrial robots is their ability to perform tasks with extreme precision and repeatability. Unlike human workers, robots do not experience variations due to fatigue or distraction, ensuring uniform product quality and consistency. This leads to fewer defects, reduced rework and less material waste, ultimately improving the overall quality of manufactured goods.

Robots can handle dangerous, dull, and dirty tasks that pose risks to human workers. This includes operations involving heavy lifting, exposure to hazardous materials, extreme temperatures or repetitive motions that can lead to musculoskeletal injuries. By taking over these tasks, robots create a safer working environment.

While the initial investment in industrial robots can be substantial, the long-term cost savings are significant. Robots reduce labor costs, minimize material waste due to errors and decrease energy consumption through optimized processes. Furthermore, their consistent performance and reduced downtime contribute to a higher return on investment (ROI) over time. The falling prices of robots and increased accessibility have made automation more economically viable for a wider range of businesses, including small and medium-sized enterprises.

Industrial robots are highly flexible and can be reprogrammed and reconfigured for different tasks and product variations. This adaptability allows manufacturers to quickly respond to changing market demands, introduce new products and customize production runs without extensive retooling. This agility is particularly beneficial in industries with short product lifecycles or high customization requirements.

Robots often have a smaller footprint compared to traditional machinery or manual workstations, allowing for more efficient use of factory floor space. Their ability to work in confined areas or perform multiple tasks within a compact cell further optimizes space utilization.

Many industrial robots are equipped with sensors that collect vast amounts of data on their performance, production metrics and environmental conditions.

This data can be analyzed to identify bottlenecks, optimize processes, predict maintenance needs and make informed decisions to further enhance operational efficiency and productivity. This integration with the Internet of Things (IoT) enables predictive maintenance and smarter manufacturing processes.

Charles Palmer is a process control specialist and lecturer at Charles Palmer Consulting (CPC). Contact him at charles101143@gmail.com.

Protocol and standard adaptability makes software-defined automation impervious to obsolescence

by Mike Bacidore, editor in chief

THESE INDUSTRY VETERANS shed some light on the increasing use of software-defined automation.

Mark Collins is a senior engineer at Mazak with a bachelor of science degree in electro-mechanical engineering from Miami University. With more than 25 years of experience in a variety of roles in the manufacturing and machine tool industry, Collins has acquired a breadth of experience in robotic automation, machining process, industrial networking and security. He is looking forward to seeing how artificial intelligence, big data and the continued move toward a more connected manufacturing industry will affect the future of manufacturing.

Sarah McGhee is product owner, Simatic AX, at Siemens. Thomas Kuckhoff is product manager of core technologies at Omron Automation Americas.

Michael Kleiner is vice president (VP) of edge AI solutions at OnLogic.

Rahul Garg is vice president, industrial machinery, at Siemens Digital Industries Software.

Kurt Braun is director of automation sales engineering and development at Wago.

Ken Crawford is senior director of automation at Weidmuller USA.

Ken Crawford, senior director of automation, Weidmuller USA: The primary focus is to allow a high level of hardware integration into a software platform where you can condense and consolidate a lot of features and functionality into a single hardware platform that runs and supports a wide range of applications that define the platform’s operation and decouple the hardware dependencies from the software. An early example is when the relay logic technology back in the 1970s was replaced with programmable logic controllers (PLCs) that eliminated the hardwiring of relays that acted like a sequential logic engine to define a process. Once these rigid and nonflexible circuits were deployed, changes were very slow to make and very costly. With a programmable controller, software defines the logic and operation, making it much quicker and easier to instantiate changes. This makes the system much more flexible, scalable and adaptable for many applications.

Kurt Braun, director of automation sales engineering and development, Wago: The primary focus of software-defined automation (SDA) is to decouple control and management software from the underlying hardware. Unlike traditional platforms, which are tightly integrated and designed for specific purposes, SDA provides a more flexible and modular approach to automation.

Rahul Garg, vice president, industrial machinery, Siemens Digital Industries Software: Programmable logic controllers (PLCs) have been the backbone of industrial automation for decades, providing reliable, real-time control of physical automation equipment and processes. Hardware-centric controllers will continue to be an essential part of your automation architecture, particularly for mission-critical, time-sensitive control applications (Figure 1). At the same time, software-defined automation (SDA) leverages the flexibility and intelligence of software-based control systems, allowing you to adapt more quickly to changing production requirements, integrate advanced analytics and optimization and achieve greater IT/OT convergence.

Michael Kleiner, VP of edge AI solutions, OnLogic : The main idea with software-defined automation is to move away from having the control of your automated systems tied directly to specific, one-off pieces of hardware. Instead, you’re putting the smarts into the software. This gives you a lot more wiggle room to set things up, tweak them and manage your automation. Ultimately, it’s about being more efficient and getting different systems to play nicely together by using software as the main driver and then picking the right hardware for the job based on the particular needs of the project or the environment in which it will live.

Thomas Kuckhoff, product manager, core technologies, Omron Automation Americas: The primary focus of software-defined automation is machine performance. Powered by the advancements on the IT side of automation, software-defined automation focuses on machine performance through automation that is robust, collects holistic data and maximizes factory talent collaboration.

Mark Collins, senior engineer, Mazak : Software-defined automation (SDA) is a concept that continues to evolve, but, at its core, the primary focus of SDA is to decouple automation logic from hardware, allowing software to define, control and manage automation processes. This means that instead of being locked into rigid, hardware-centric configurations, manufacturing systems can be rapidly reconfigured, customized and optimized through software. The term “define” is crucial here because it highlights how SDA enables software to manage not only control, but also the setup, configuration and initialization of automation systems.

Sarah McGhee, product owner, Simatic AX, Siemens: The primary focus of software-defined automation (SDA) is to leverage proven methodologies from the software development space and apply them to the OT space. This focus can be broken down into several key pillars:

• Integration of IT-oriented tools: Utilizing IT-oriented tools brings software engineering practices into the automation programming space. While automation does not always allow for the “fail fast and break things” approach typical in software development, it should enable rapid iteration in a safe manner.

• Virtualized programmable logic controllers (PLCs) and controls: With the creation of virtualized PLCs, we are concretely decoupling control tasks from hardware devices and providing the ability to run them on high-performance industrial PCs or computers, increasing flexibility and scalability. This approach also facilitates easier integration with other applications.

• Data-driven production: Leveraging data collected from automation systems to make intelligent decisions or suggestions is critical. With the addition of artificial intelligence (AI) models, it is essential to utilize data effectively to enhance automation systems. For example, predictive-maintenance algorithms can analyze data to foresee equipment failures and optimize maintenance schedules.

In summary, SDA focuses on integrating IT tools, virtualizing control systems and utilizing data-driven approaches to create more flexible, scalable and intelligent automation solutions.

Mark Collins, senior engineer, Mazak : Software-defined automation’s primary benefit is time savings. In modern manufacturing, whether it is downtime, changeovers or engineering hours, the pressure is constant: deliver more, do it faster and achieve better results with fewer resources. The potential for SDA to significantly reduce the required engineering efforts to design, reconfigure and/or scale your manufacturing process can be impressive. Companies that fail to integrate SDA may find themselves with longer delays switching production lines and implementing the changes that our customers demand of all of us.

Ken Crawford, senior director of automation, Weidmuller USA : Having a software-defined platform means that the solution is abstracted from the hardware, which eliminates the need for single-source automation, and that it can run on any compute device. The definition of the control solution is left to an application or algorithm written by controls engineers that can be automatically maintained, upgraded, updated and monitored, thereby greatly increasing flexibility and decreasing costs. Software-defined platforms are infinitely easier to deploy, are adaptable to many protocols and standards and are mostly impervious to obsolescence.

Kurt Braun, director of automation sales engineering and development, Wago : Software-defined automation offers enhanced security, portability and scalability through the use of technologies like Docker containers. For instance, some controllers can implement Docker containers to improve interoperability compared with traditional closed and proprietary systems. In light of recent supply-chain disruptions, SDA’s ability to facilitate multiple vendor sourcing options has become a significant advantage.

Rahul Garg, vice president, industrial machinery, Siemens Digital Industries Software: The main benefits of software-defined automation include the increase in agility and flexibility it offers, as well as its ability to work with highly complex applications. The ultimate goal is to create a more agile and efficient automation system or systems that can rapidly adapt to changing production requirements while maintaining robust performance and reliability. Yet another benefit SDA offers is the opportunity it provides to work with a larger pool of software engineers. SDA and using artificial intelligence (AI) are two big factors that can attract more software engineers to manufacturing and facilitate innovation.

Michael Kleiner, VP of edge AI solutions, OnLogic : The big wins with software-defined automation are flexibility and being able to quickly change things when your operations evolve. It can also save you money by letting you use more standard computing hardware instead of always needing specialized devices. Plus, scaling up or down your automation becomes much easier. And a huge benefit is better teamwork between different systems, making it simpler to share data and make smarter decisions. Managing and updating everything also becomes a lot less of a headache with software-based control.

Sarah McGhee, product owner, Simatic AX, Siemens : Among the many benefits of SDA are its adaptability, its appeal to the workforce and its contribution to sustainability (Figure 2).

• Adaptability: SDA provides easy scaling up or down of control systems without the need for additional hardware or significant changes. This flexibility enables quicker responses to customer and market needs, reducing the headaches associated with hardware availability and back orders. Additionally, SDA promotes modular automation system design, where components can be operated and tested separately, enabling easier and more reliable updates by focusing on specific portions of the system.

• Workforce attractiveness: By adopting SDA principles, companies can attract a wider range of talent. SDA expands the job market for potential hires in automation positions. This cross-disciplinary approach opens opportunities for individuals with diverse skillsets to contribute to automation projects. Additionally, the efficiency gains from SDA reduce the need to fill every open position, allowing companies to maximize the productivity of their existing workforces.

• Sustainability: SDA significantly contributes to sustainability by reducing the need for hardware, which minimizes waste and lessens negative environmental impact. Additionally, data-driven production enables more efficient use of data, optimizing processes to make them more sustainable. For example, predictive-maintenance algorithms help reduce capital and human-resource consumption wrought by unnecessary prescheduled maintenance routines, in addition to environmental impact. By leveraging these capabilities, SDA creates more sustainable and environmentally friendly industrial operations. Ultimately, SDA’s flexibility, efficiency and sustainability pave the way for a more innovative and responsive automation environment.

Thomas Kuckhoff, product manager, core technologies, Omron Automation Americas : The primary benefit of software-defined automation is the deployment of new technologies quickly—eliminating the latency between R&D validation and factory-floor deployment. This not only helps operations teams to continuously find new efficiencies, but also decreases the incremental costs commonly associated with keeping up to date.

How does software-defined automation figure in the convergence of IT and OT?

Thomas Kuckhoff, product manager, core technologies, Omron Automation Americas : At the heart, software-defined automation is the connection between different technologies. Globally open industrial protocols on the OT side, such as EtherNet/IP, EtherCAT, CIP Safety, Fail Safe Over EtherCAT and IO-Link, allow teams not only to connect current machines to each other but also to create more robust data repositories on the IT side with database connectivity, such as OPC UA, MQTT and SQL.

Mark Collins, senior engineer, Mazak : Software-defined automation is a critical enabler of the ongoing convergence between information technology (IT) and operational technology (OT) in manufacturing environments. Traditionally, IT, focused on data processing, networking and security, and OT, focused on direct control of machinery and industrial processes, operated in silos. SDA breaks down this divide by making automation processes software-driven, allowing seamless communication and data exchange between the two domains. SDA allows IT systems, such as enterprise resource planning (ERP) or manufacturing execution systems (MES), to directly access real-time data from shop floor equipment. This means that production data, machine status and maintenance information can be instantly shared with IT systems for analysis, optimization and decision-making.

SDA also brings DevOps practices into the industrial environment. Traditionally, DevOps has been a set of practices, tools and a cultural philosophy aimed at automating and integrating processes between software developers and IT teams. In SDA, this philosophy will extend to OT. Software developers will work alongside OT engineers to create, test and deploy automation applications that will be the building blocks for the software-defined system.

Ken Crawford, senior director of automation, Weidmuller USA : Software makes the plant floor or remotely deployed machine infinitely available to the broader IT networks by allowing common industrial and Ethernet-based protocols to communicate with one another while allowing the industrial platform to be maintained by the broader network team. SDA makes more common and mainstream applications and computer capabilities available to traditional plant-floor devices in the OT network making them easier to maintain and administer through traditional IT resources and network administrators.

Kurt Braun, director of automation sales engineering and development, Wago : SDA leverages containerization to enable development teams to adopt best practices for continuous integration and continuous deployment (CI/CD). By storing files on secure servers rather than individual laptops, SDA enhances security and ensures reliable version control. Additionally, tools like Kubernetes and Portainer support mass deployment of these containers across operational technology (OT) environments.

Rahul Garg, vice president, industrial machinery, Siemens Digital Industries Software : IT/OT convergence is a key enabler of software-defined automation. It provides a framework that allows organizations to integrate and manage both IT and OT systems with agility, flexibility and scalability, while enhancing security, operational efficiency and decision-making capabilities. Quite often, a lot of the OT structure is very hardware-specific. Using software-defined systems allows for the ability to work in a much more common way across different platforms and simplifies the integration with IT and OT networks. Another major takeaway is the implementation of secure practices. Using SDA for IT/OT convergence provides a significant increase in cybersecurity.

Michael Kleiner, VP of edge AI solutions, OnLogic : Software-defined automation is a major component of bringing IT and OT closer together. By using software for both control and communication, it helps bridge that traditional gap. It allows your operational tech to integrate much more smoothly with your IT infrastructure, making it easier to get data flowing from the plant floor to the folks doing analysis. This means you can do things like remote monitoring, predict when maintenance is needed and just get a much better overall picture of what’s happening, which can help boost efficiency and create opportunities for innovation in your processes and products.

Which standards and protocols will be affected most or increase/decrease in use because of software-defined automation?

Michael Kleiner, VP of edge AI solutions, OnLogic : We’re expecting to see more reliance on open communication standards. Things like OPC UA, MQTT and flexible industrial Ethernet solutions are likely to become even more popular because they play well with IT networks. On the flip side, those closed-off communication methods that are tied to specific hardware might become less common as companies look for more open, software-driven ways of doing things. Also, with the need for really reliable, real-time control, standards like time-sensitive networking (TSN) will become more important.

Mark Collins, senior engineer, Mazak : Portability and interoperability are key to SDA. Open protocols such as OPC UA, which facilitate secure and reliable data exchange across different platforms, and message queuing telemetry transport (MQTT), which supports lightweight, publish/subscribe messaging, are ideal for SDA applications. Also, domain-specific semantic vocabulary standards like MTConnect will provide clear, consistent data. Real-time communication protocols like EtherNet/IP, Profinet and others will continue to provide time-sensitive communication.
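The publish/subscribe pattern mentioned above can be sketched in a few lines. This is a toy, in-memory illustration only, not a real MQTT client or broker: the `ToyBroker` class, the `topic_matches` helper and the `plant/...` topic names are all hypothetical, and the matching is a simplified version of MQTT-style wildcards (`+` for one topic level, `#` for all remaining levels).

```python
from typing import Callable, List, Tuple


def topic_matches(pattern: str, topic: str) -> bool:
    """Simplified MQTT-style match: '+' = one level, '#' = all remaining levels."""
    p_parts = pattern.split("/")
    t_parts = topic.split("/")
    for i, part in enumerate(p_parts):
        if part == "#":
            return True
        if i >= len(t_parts):
            return False
        if part != "+" and part != t_parts[i]:
            return False
    return len(p_parts) == len(t_parts)


class ToyBroker:
    """Hypothetical in-memory broker: publishers and subscribers never reference each other."""

    def __init__(self) -> None:
        self._subs: List[Tuple[str, Callable[[str, str], None]]] = []

    def subscribe(self, pattern: str, callback: Callable[[str, str], None]) -> None:
        self._subs.append((pattern, callback))

    def publish(self, topic: str, payload: str) -> None:
        # Deliver the message to every subscriber whose pattern matches the topic.
        for pattern, callback in self._subs:
            if topic_matches(pattern, topic):
                callback(topic, payload)


received = []
broker = ToyBroker()
broker.subscribe("plant/+/temperature", lambda t, p: received.append((t, p)))
broker.publish("plant/line1/temperature", "71.5")  # delivered
broker.publish("plant/line1/pressure", "2.1")      # ignored: no matching subscription
print(received)
```

The point of the pattern is that the publisher only knows a topic name, so consumers such as dashboards or historians can be added without touching the device that produces the data.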

The mandatory interoperability requirements of SDA, along with a growing library of open-source software and industry standards, will diminish the dominance of proprietary, vendor-specific protocols. Companies that rely solely on closed, vendor-specific solutions will struggle to achieve the flexibility and scalability that SDA offers.

Thomas Kuckhoff, product manager, core technologies, Omron Automation Americas : While there are plenty of open protocols that have increased in adoption, OPC UA and EtherCAT are two that are worth noting. OPC UA has seen a 9% compound annual growth rate over the past five years and EtherCAT has seen a 12% compound annual growth rate. As we look forward, we can expect both of these and MQTT to continue to gain adoption across a wide range of automation fields.
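As a rough worked example of what those growth rates imply, the sketch below compounds a 9% and a 12% annual rate over five years. The baseline index of 100 is a hypothetical starting value chosen for illustration, not a figure from the interview.

```python
def compound(start: float, annual_rate: float, years: int) -> float:
    """Value after compounding `annual_rate` once per year for `years` years."""
    return start * (1.0 + annual_rate) ** years


BASELINE = 100.0  # hypothetical adoption index five years ago

opc_ua = compound(BASELINE, 0.09, 5)
ethercat = compound(BASELINE, 0.12, 5)

print(f"OPC UA index after 5 years:   {opc_ua:.1f}")
print(f"EtherCAT index after 5 years: {ethercat:.1f}")
```

Under these assumptions, a 9% compound annual rate works out to roughly 54% cumulative growth over five years, and 12% to roughly 76%.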

Ken Crawford, senior director of automation, Weidmuller USA : The widest adoption of Internet protocol (IP) will always be found in the highest-selling segment: desktops and laptops. These computers use the most common protocol suite, transmission control protocol/Internet protocol (TCP/IP). TCP/IP can often be deployed on the same networks that utilize the more industrial protocols like Profinet and EtherNet/IP. The industrial protocols will always be deployed and used due to the specialization that dictates the use of these protocols. Motion, for instance, relies heavily on EtherCAT, which has advantages that a laptop’s TCP/IP will never provide.

Kurt Braun, director of automation sales engineering and development, Wago : SDA is likely to accelerate the adoption of modern communication protocols such as message queuing telemetry transport (MQTT) and OPC UA, which offer encryption and enhanced security. In contrast, many legacy fieldbuses, which were designed before cybersecurity became a concern, lack encryption and may see a decrease in use over time because of this. MQTT, in particular, is an effective protocol for secure communication between containerized applications.

Rahul Garg, vice president, industrial machinery, Siemens Digital Industries Software: Communication protocols such as OPC UA and MQTT will see an increase in importance with the use of software-defined automation. Industrial Ethernet protocols will also increase in importance because of software-defined automation, while network and security protocols such as secure sockets layer/transport layer security (SSL/TLS), Zero Trust security models and 5G and edge-computing protocols will see increased use in environments driven by software-defined automation. On the other hand, legacy IT and legacy industrial protocols will likely experience a decrease in use as they are not typically optimized for real-time, secure and cloud-based integrations, which are prioritized in software-defined environments.

Which components will see the biggest impact from software-defined automation?

Rahul Garg, vice president, industrial machinery, Siemens Digital Industries Software: Software-defined automation will complement PLCs. In addition, other impacts software-defined automation can have will be in human-machine interfaces (HMIs), engineering tools, data management systems and security components. The primary shift will be toward more software-centric, flexible and scalable systems that break down the traditional silos between IT and OT, enabling efficient operations, real-time decision-making and better security. As software-defined automation integrates and modernizes these components, it will drive innovation, improve efficiency and deliver more intelligent, automated environments.

Thomas Kuckhoff, product manager, core technologies, Omron Automation Americas : The programmable logic controller (PLC) will continue to see its R&D teams affected by software-defined automation user trends. Historically, PLCs have not been required to manage both logic control and data processing, so teams have deployed gateways or data collectors to bridge the gap between current automation architecture and future state designs. Larger facilities are looking to become data-centric and in doing so are requiring the PLCs to have as much OT as IT connectivity. OPC UA, MQTT and SQL are becoming standard rather than an add-on, allowing all spares in the crib and all new design specifications to have database connectivity as standard.

Michael Kleiner, VP of edge AI solutions, OnLogic : You’ll probably see the biggest changes in traditional hardware controllers, as more of their functions get virtualized in software. Industrial computing platforms and edge devices will become even more crucial because they provide the muscle and adaptability to run these software-defined control systems. Network infrastructure will also need to keep up with more data and real-time communication, pushing the adoption of technologies like TSN and software-defined networking. And, of course, software platforms for managing everything and crunching the data will become central.

Mark Collins, senior engineer, Mazak : Software-defined automation will have a transformative impact on a wide range of industrial components, but the most significant changes will occur at both ends of the automation spectrum: the largest control systems and the smallest sensing devices. Traditionally, industrial control systems like programmable logic controllers (PLCs), programmable automation controllers (PACs), and industrial PCs (IPCs) have been hardware-centric, with dedicated devices handling control logic. However, SDA is pushing these control functions into the cloud or distributing them across multiple software layers. This means that, instead of a single, fixed controller managing a process, control logic can be dynamically assigned, scaled and managed through virtualized software environments. At the opposite end, even the smallest components like sensors are undergoing a transformation. Traditional sensors were simple devices that fed raw data to controllers and up the line of distributed control systems, but, with SDA, sensors are becoming intelligent, capable of processing data locally and communicating directly with multiple higher-level software platforms. A temperature sensor can now have built-in processing power, filtering and analyzing data locally before sending it directly to a cloud dashboard; and sensors that support open protocols like OPC UA or MQTT can seamlessly connect to SDA systems without needing intermediate control devices.

Ken Crawford, senior director of automation, Weidmuller USA : Controllers/industrial PCs (IPCs) are definitely the compute engines that SDA runs on. Other devices that are not as compute-heavy as PLCs are edge devices, gateway devices and motion controllers. SDA may be deployed in smart sensors, smart actuators and smart pumps. As we lean into artificial intelligence (AI), more devices that have been traditionally stand-alone devices will be more connected, allowing for and requiring SDA to maintain service.

Kurt Braun, director of automation sales engineering and development, Wago: SDA can significantly impact all layers of advanced automation systems. For example, [some] automation products can deploy PLC runtimes as containers, alongside other workloads such as databases like InfluxDB, SCADA systems like Ignition and IIoT platforms like Node-Red. This modular approach streamlines deployment and management across diverse environments.

In what ways does software-defined automation allow machine builders more flexibility in hardware selection and management?

Kurt Braun, director of automation sales engineering and development, Wago : Containerization provides machine builders with greater flexibility by enabling software to be portable across different hardware platforms, with minimal modifications. Even when specialized hardware features are required, the adjustments needed are usually minor, allowing for a broader selection of compatible hardware.

Mark Collins, senior engineer, Mazak : Properly configured SDA systems will give machine builders greater freedom in choosing and managing hardware components. The ability to select hardware from different vendors without compatibility issues will help with scalability and customization without needing to overhaul the entire manufacturing system. A machine builder can integrate a new sensor from a different manufacturer into an existing system without significant reprogramming, thanks to SDA. The decoupled control of individual pieces of hardware will allow for quick deployment into new or updated manufacturing systems.

Rahul Garg, vice president, industrial machinery, Siemens Digital Industries Software: One of the biggest benefits software-defined automation offers machine builders is the ability to decouple the hardware and software on your controls and offer more standardized interfaces. With software-defined automation, hardware deficiencies and hardware failures are easier to address. And software-defined automation obviously promotes much easier development of software for your automation needs because now you are using more standard IT-based software capabilities, and it can be used for more IT-based technologies.

Michael Kleiner, VP of edge AI solutions, OnLogic : Software-defined automation gives machine builders a lot more freedom when it comes to picking hardware because the control software isn’t tied to specific vendors anymore. This means they can choose the hardware that best fits the job and their budget, without getting locked into one company’s ecosystem. Managing things also gets easier with software-based setup and updates. Machine builders can often handle and check on their automation systems remotely, making it simpler to scale, fix issues and adapt to new requirements without having to rip and replace a bunch of hardware.

Ken Crawford, senior director of automation, Weidmuller USA : The SDA capabilities are usually abstracted from the hardware. Any hardware platform can be selected, as long as it can support the applications running on it and meets the environmental, footprint, port and networking requirements.

How does software-defined automation build on existing IT and network infrastructure in factories and plants?

Michael Kleiner, VP of edge AI solutions, OnLogic: Software-defined automation is designed to play well with the IT and network infrastructure that’s already in place. It uses standard networking protocols and IT best practices, allowing OT systems to connect to regular Ethernet networks using things like TCP/IP. Plus, virtualization techniques that are common in IT can be used to run control software on standard industrial PCs. This means you don’t necessarily need completely separate OT networks, and you can apply IT security measures and management tools to the OT side, leading to a more unified and efficient setup.

Ken Crawford, senior director of automation, Weidmuller USA : As long as the SDA applications support the protocols running in these plants and as long as the machines have the resources and performance when supporting the added or differentiated network-traffic burdens, the systems will be interoperable.

Mark Collins, senior engineer, Mazak : Software-defined automation should be designed to leverage existing IT network infrastructure within factories, minimizing the need for extensive hardware upgrades. Integrating SDA with ERP and MES enables real-time monitoring and control of production processes without major changes to the infrastructure. While many Internet of Things (IoT) devices can generate a large amount of data, a properly developed SDA solution can introduce intelligent edge devices that function as local data processors. These edge devices filter and process high-frequency data, pushing only critical or processed information to higher network layers. This approach reduces network load, and it prevents network congestion, while also enhancing security since sensitive data can be processed locally without unnecessary exposure to the network.
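The edge-filtering idea described above can be illustrated with a minimal sketch. It assumes a hypothetical temperature stream, a fixed window size and an alarm limit; none of these values or function names come from the interview, and a real edge device would add timestamps, buffering and a transport such as MQTT or OPC UA.

```python
from statistics import mean
from typing import Dict, List


def edge_filter(readings: List[float], window_size: int, alarm_limit: float) -> List[Dict]:
    """Reduce raw readings to one summary per window and flag windows over the limit."""
    summaries = []
    for start in range(0, len(readings), window_size):
        window = readings[start:start + window_size]
        peak = max(window)
        summaries.append({
            "mean": round(mean(window), 2),
            "max": peak,
            "alarm": peak > alarm_limit,  # only this flag needs urgent upstream attention
        })
    return summaries


# Hypothetical high-frequency samples from one sensor (degrees C).
raw = [20.1, 20.3, 20.2, 20.4, 20.2, 35.0, 20.3, 20.1]
messages = edge_filter(raw, window_size=4, alarm_limit=30.0)

print(f"{len(raw)} raw samples reduced to {len(messages)} upstream messages")
for message in messages:
    print(message)
```

Here eight raw samples become two upstream messages, and the spike is still reported through the `alarm` flag, which is the trade-off the passage describes: less traffic on the network without losing the critical information.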

Kurt Braun, director of automation sales engineering and development, Wago: SDA can integrate seamlessly with existing IT and OT infrastructures by adopting a hybrid approach. Legacy systems, which can be costly and complex to replace, can coexist with containerized applications that introduce new capabilities. This phased approach allows for manageable milestones and minimizes disruption to production environments.

Thomas Kuckhoff, product manager, core technologies, Omron Automation Americas: Deploying new technology completely incompatible with existing network infrastructure puts an almost insurmountable barrier between the factory and the ability to make better production predictions. Software-defined automation acts as a bridge between where current IT and network infrastructure is today and where it would need to be for more powerful technologies to be deployed without compromising network security. Software-defined automation begins building this bridge by giving IT teams ways to manage network security through tools such as subnets on controllers, where the term subnet denotes different network segments that may use the same subnet mask.
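To make the subnet point concrete, here is a small sketch using Python’s standard `ipaddress` module. The two address ranges and the segment names are hypothetical; the example only shows that two segments can share the same subnet mask while remaining separate networks.

```python
import ipaddress

# Hypothetical addressing plan: one controller port per network segment.
machine_net = ipaddress.ip_network("192.168.10.0/24")  # machine-level devices
plant_net = ipaddress.ip_network("192.168.20.0/24")    # plant/IT-facing side

same_mask = machine_net.netmask == plant_net.netmask   # both 255.255.255.0
separate = not machine_net.overlaps(plant_net)         # no shared addresses


def segment_of(address: str) -> str:
    """Return which hypothetical segment an address belongs to."""
    ip = ipaddress.ip_address(address)
    if ip in machine_net:
        return "machine"
    if ip in plant_net:
        return "plant"
    return "unknown"


print(f"Same subnet mask: {same_mask}, separate segments: {separate}")
print(segment_of("192.168.10.15"))
print(segment_of("192.168.20.7"))
```

Because the segments do not overlap, traffic between them has to pass through a device that routes or bridges it, which gives IT teams a point at which to apply security policy.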


Rahul Garg, vice president, industrial machinery, Siemens Digital Industries Software: Software-defined automation builds on existing IT and network infrastructure in factories and plants by extending and enhancing capabilities already in place. This is where we rely on existing Ethernet infrastructure to add more IT/OT convergence capabilities, on cloud infrastructure and cloud platforms when deploying automation software, and on existing IT infrastructure for hosting virtual and existing data centers. Data analytics platforms can be added, as well as data integration enterprise platforms like enterprise resource planning (ERP) or manufacturing execution system (MES) software. Automation is a software layer atop a factory or plant’s IT and network infrastructure and provides greater flexibility and ability to leverage intelligence and minimize disruptions in those factories and plants.

Tell us about your company’s state-of-the-art product that involves software-defined automation.

Rahul Garg, vice president, industrial machinery, Siemens Digital Industries Software: Industrial Operations X, our overarching portfolio for industrial production, features our Industrial Edge devices, our MOM portfolio, Insights Hub for IT/OT convergence, Simatic AX and Opcenter X (Figure 3). Industrial Operations X is based on four pillars: software-defined automation; data-driven production; modular operations; and industrial ecosystem. Industrial Edge is a key enabler for software-defined automation.

Thomas Kuckhoff, product manager, core technologies, Omron Automation Americas : The Omron Sysmac automation platform is at the very core of Omron software-defined automation. This all-in-one automation platform uses globally open industrial protocols on controllers, such as the NX102, and integrated development environments, such as Sysmac Studio, to design, commission and scale robust automation.

Omron Sysmac Studio embodies software-defined automation through its ability to integrate with mechanical design software, such as SolidWorks, Autodesk and ePlan; its connectivity to digital twin simulation environments with universal scene description (USD) output formats; and its quick programming with globally open industrial protocols such as IO-Link, EtherCAT, Fail Safe over EtherCAT, CIP Safety, and EtherNet/IP. Omron NX502 controls logic with a quad core CPU and manages data as standard with OPC UA, MQTT and SQL connectivity out of the box.

Ken Crawford, senior director of automation, Weidmuller USA : Our latest SDA-based product is our M series of programmable automation controllers (PACs). At the heart of these multi-core controllers is an open-architecture operating system we call u-OS. U-OS is a hardened Linux-based operating system (OS) that utilizes portainer.io to support containerization, allowing users to install and run their third-party applications, including Inductive Automation’s Ignition platform. The ability to install and run your control applications alongside our core controller capability of CoDeSys allows users to customize the controller with the features they need to complete the control system.

Michael Kleiner, VP of edge AI solutions, OnLogic: The backbone of software-defined automation is really the computing infrastructure. What we bring to the table is a range of super reliable, high-performance industrial-grade computers: things like our industrial and rugged PCs, panel PCs and edge servers. These are the workhorses that run the software doing all the SDA heavy lifting.

Mark Collins, senior engineer, Mazak : Mazak has a long history of pioneering software-driven automation, starting in 1981 with the introduction of Mazatrol, one of the world’s first conversational CNC controls. This innovation allowed operators to program CNC machines using user-friendly, graphical commands rather than complex G-code, significantly enhancing efficiency on the shop floor. In 1998, Mazak continued to lead by integrating PC technology directly into our CNC controls with the launch of the Fusion CNC, bringing advanced computing capabilities to the manufacturing floor.

Mazak remains at the forefront of software-defined automation (SDA) with our industry-leading SmoothAi control. SDA refers to the concept where automation logic, monitoring and management are driven primarily by software, rather than being hard-coded into hardware. This approach provides unmatched flexibility, enabling rapid adjustments to machining parameters, seamless integration with other factory systems and remote monitoring capabilities.

Mazak’s SmoothAi control exemplifies this philosophy. Mazatrol SmoothAi is an intuitive, software-driven programming interface that accelerates setup and programming. Smooth Project Manager is a centralized software solution for managing machining programs and configurations. Smooth CamAi is an advanced CAD/CAM integration for rapid part programming. Digital twin integration enables operators to simulate and optimize machining operations virtually before execution.

Sarah McGhee, product owner, Simatic AX, Siemens: At Siemens, we offer a range of tools to support users in adopting software-defined automation principles. One of our standout products is Simatic AX, an innovative engineering tool for PLCs that reconfigures the popular Visual Studio Code environment to meet the unique needs of the automation space. By leveraging a state-of-the-art IT environment, Simatic AX enables engineers to use familiar tools and methodologies in their automation programming. This approach not only reduces the learning curve, but also fosters collaboration between software and automation engineers. Simatic AX facilitates seamless integration with existing IT systems because it was developed with these sorts of systems in mind from the outset. It provides simple source control management, a built-in unit testing framework and smooth visualization into continuous integration pipelines, enhancing the overall efficiency and effectiveness of automation projects.

by Anna Townshend, managing editor

LADDER LOGIC MAY STILL REIGN supreme in the world of programmable logic controller (PLC) programming, but the full story is far more dynamic. As manufacturing systems grow more complex and connected, and as the workforce evolves, many machine builders and system integrators are rethinking how they write controls code. While ladder diagram (LD) remains dominant for its readability, ease of troubleshooting and deep roots in electrical engineering, other IEC 61131-3 programming languages—like structured text (ST), function block diagram (FBD) and sequential function chart (SFC)—are increasingly being adopted to meet the demands of data-heavy applications, advanced motion control and scalable system design.

Why ladder logic is still PLC programming king

Ladder diagram has long held the crown as king of the IEC 61131-3 programming languages, and for good reason. Its electrical schematic-style visuals and straightforward logic have long made it the go-to language for control systems using programmable logic controllers, especially in North America.

Familiarity holds some staying power for LD, but it remains a practical choice for machine builders and system integrators who prioritize ease of maintenance.

LD is the backbone of industrial controls, but ST is a rising star, especially among younger engineers and software-savvy startups and for applications involving data manipulation, modularity and integration with modern technology and design tools. In many cases, multi-language programming strategies are the key to clarity, functionality and future-proofing.

Ladder logic forms the bulk of code for most customer software at Dynamic Motion Control (DMC). “It’s familiar and easy to troubleshoot for the majority of the industry,” says Patrick Smith, senior project engineer at DMC, a CSIA-certified system integrator, who has worked with manufacturers of all sizes since 1996.

Unless an original equipment manufacturer (OEM) plans to own the long-term maintenance of a machine or is selling its aftermarket service to end users with the machines, it’s essential to consider the skill set of the end user and the maintenance techs’ abilities.

Most maintenance teams that DMC works with are comfortable with LD, with some experience working with other languages. “DMC always provides the full source code to customers, allowing them to make their own changes and more easily troubleshoot, so taking the needs of their maintenance team into account is a key part of our programming approach,” says Smith, who notes it makes customers happy and saves middle-of-the-night phone calls if a machine stops working.

“Ladder is typically used for applications that involve discrete logic control, such as turning a valve on or off or programming a state machine that involves a sequence of operations,” says Imran Mohamed, motion control application engineer at Yaskawa America. “Automation tasks demand deterministic execution, real-time control and robust safety functions. IEC 61131-3 languages provide the clear and structured approach that is essential for these requirements. It’s not uncommon for safety logic to be written exclusively in ladder diagram for this reason.”

The visual of LD represents the electrical flow or schematic, so it’s a way to truly visualize the system. “A picture is worth a thousand words, so the ladder diagram has all the boxes and every representation of the electrical flow,” says Hoat Phung, senior application engineer at ABB. Many younger engineers come out of college with experience in languages like Python, but industrial customers still look to LD for simplicity, largely for ease of maintenance.

“That visual nature is really nice and especially good for when you start playing back recorded data, where you can start to see ‘this is normally open, and it’s closed,’ or ‘this is normally closed, and it’s open,’” says Thomas Kuckhoff, senior product manager at Omron Automation. “It can help people troubleshoot really nicely.”

The choice of programming language can depend on how the PLC is being used in the application. “You’ll see some PLCs that are locked away behind a ton of firewalls, a separate network,” explains Kuckhoff. “No one touches it for years, and it’s doing the same thing all the time. But then you see some that are not on open networks, but larger factory networks that are required to comply with strict cybersecurity requirements.” This means updates and version control are needed. With all that involved, it’s time to pause and evaluate which way is best for everyone to interact with the code.

“How does this PLC integrate into the entire ecosystem?” asks Kuckhoff. “What’s the complexity of this program? What’s this skill set of the team that’s going to be, of course, developing it, but primarily the maintenance team? And when it comes to that maintenance and those production engineers, what also are they familiar with?”

Newer programming tools and methods are also altering how engineers use LD. “LD is often considered easier to read and troubleshoot, though it may not scale as efficiently as other languages. However, modern programming features such as labels, label groups, program organization units (POUs), and function blocks make it possible to structure and scale applications effectively, even when using LD,” says Tim Hider, industry marketing manager for smart manufacturing and digital solutions at Mitsubishi Electric Automation.

The industrial workforce is also changing, as younger engineers are bringing new skill sets to controls, at the same time as industry is embracing new technology, which is often influenced by the software world and text-based languages like structured text. “It is also important to know your audience,” says Casey Taylor, software product manager at Beckhoff Automation. “Who will need to read and support your code? What will be the easiest for them to understand? Don’t assume this will always be ladder. More of the workforce has been exposed to text-based programming of various types than ever before, and this shift makes them more comfortable with a wider range of languages.”

Taylor also reminds programmers not to forget about code management. Tools for version control are often built around text-based languages. Ultimately, Beckhoff sees the human-machine interface (HMI) as the best diagnostic tool for troubleshooting and maintenance of complex systems. “This is the first and best place for maintenance teams to troubleshoot a system. If you can avoid having maintenance staff get into the code, you should. That said, the programming language inside the system will matter if changes are made in the future,” Taylor says.

“The choice of programming language also relies on the OEM’s support model,” explains Mohamed. “Some OEMs expect the end user to take full ownership of the machine’s source code and modify the program in the field as needed. However, not all OEMs or end users operate this way. In some cases, OEMs retain ownership of the source code and version control to ensure consistency across multiple plants operating in different geographic locations.”

The more complex the machine, the less the knowledge of the maintenance staff matters, Mohamed says, and less of that work may be done by maintenance crews in the future. “Even if a maintenance technician is familiar with a programming language, it doesn’t necessarily mean they understand how the software works,” Mohamed explains. “There is always a tradeoff between the risks and outcomes associated with allowing code edits on a production line. It’s the responsibility of the developer to carefully assess these risks and outcomes before committing to a particular language for a project.”

While ladder diagram reigns supreme among IEC 61131-3 languages, especially in the United States, in part due to its wide use and recognition, structured text is finding more room in industrial applications. ST is also more widely used in Germany and across Europe and Asia.

A growing number of engineers and integrators everywhere are making the case for ST, particularly for applications that demand more complex data handling and modularity at scale. LD won’t be replaced anytime soon, but ST is gaining momentum, helping engineers take on more software-centric tasks and embrace flexibility for array manipulation, loops and algorithms.

As an integrator, DMC prioritizes programming that is accessible and modifiable by its customers. “As such, we typically default to ladder logic, as it’s the industry standard in our main service area of the United States,” says Smith. “We then identify any logic that would be best served by structured text. In particular, we focus on logic that either would be excessively hard to read in ladder or is self-contained enough that it should not have to be frequently investigated as part of troubleshooting.” DMC uses ST for functionality that leverages its strengths, such as working with arrays and other complex structures.

ST shines for data handling. “It can be written compactly, which is helpful when working with a large amount of data,” explains Smith. “It has built-in support for control structures like for loops that make working with large datasets, such as arrays, much easier.” Because it is text-based, programmers can more easily use other development tools like integrated development environments (IDEs) to quickly generate large amounts of repetitive code.
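To make the point about loops over arrays concrete, here is a minimal sketch written in Python rather than structured text, chosen only so it can be run on any PC; an ST FOR loop over an ARRAY would follow the same shape. The function name scale_counts, the sample readings and the 12-bit count range are illustrative assumptions and do not come from DMC’s code.

```python
from typing import List

FULL_SCALE_COUNTS = 4095  # assumed 12-bit analog input range (hypothetical)


def scale_counts(raw_counts: List[int], low: float, high: float) -> List[float]:
    """Convert raw analog counts to engineering units, one element at a time."""
    scaled = [0.0] * len(raw_counts)
    # Similar in spirit to an ST loop: FOR i := 0 TO N-1 DO ... END_FOR
    for i in range(len(raw_counts)):
        scaled[i] = low + (high - low) * raw_counts[i] / FULL_SCALE_COUNTS
    return scaled


raw_counts = [0, 2048, 4095]  # made-up readings from three channels
pressures = scale_counts(raw_counts, 0.0, 10.0)
```

The same three lines of loop logic would serve three channels or three hundred, which is the compactness Smith describes; in ladder, each channel would typically need its own rung or a dedicated instruction.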

“Over time, structured text is seeing more usage due to how flexible it is, and how it can integrate with other tools,” Smith continues. “In particular, startup companies that are often coming from a traditional tech background usually prefer structured text, as it looks more familiar and can better integrate with tools they are experienced with.”

“Structured text is better suited for implementing loops, algorithms and other computationally demanding tasks,” agrees Yaskawa’s Mohamed. “Among the IEC 61131-3 programming languages, structured text offers the greatest flexibility for data-handling tasks, particularly when performing operations such as array manipulation and nested loops, capabilities that are essential for data parsing and to establish communication interfaces,” he explains.

An array is a powerful type of data structure, used to store and manage similar types of data, such as sensor readings or status flags. An array is a structured list of elements, all the same data type, organized by index. Think of a row of mailboxes, each one numbered and holding a specific piece of mail, or value. Arrays are efficient for data handling because they manage multiple inputs or outputs of the same data type and store a history of readings.
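The mailbox picture can be sketched in a few lines. The example below uses Python for portability, not structured text, and the class name ReadingHistory, its fields and the temperature values are all hypothetical. It shows one common way a fixed-size array can hold a rolling history of readings by overwriting the oldest slot.

```python
class ReadingHistory:
    """Fixed-size array that keeps the most recent sensor readings."""

    def __init__(self, size: int) -> None:
        self.values = [0.0] * size  # every "mailbox" holds the same data type
        self.next_index = 0         # slot that the next reading will overwrite
        self.count = 0              # how many slots hold real readings

    def record(self, reading: float) -> None:
        self.values[self.next_index] = reading
        # Wrap around to slot 0 once the last slot has been written.
        self.next_index = (self.next_index + 1) % len(self.values)
        self.count = min(self.count + 1, len(self.values))

    def average(self) -> float:
        if self.count == 0:
            return 0.0
        # Before the buffer wraps, real readings occupy slots 0..count-1;
        # after it wraps, count equals the array size and every slot is real.
        return sum(self.values[:self.count]) / self.count


history = ReadingHistory(size=4)
for temperature in [20.0, 21.0, 22.0, 23.0, 24.0]:
    history.record(temperature)
```

After five readings go into four slots, the first reading has been overwritten by the fifth, so the array always reflects the latest four values.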

“Arrays are best handled through structured text,” says Ken Crawford, senior director of automation at Weidmuller. “The least capable data-handling language would be ladder.”

In ST, arrays can simplify repetitive logic and enable more dynamic programming. The indexes can be variables, so arrays support flexible logic, which is great for test sequences. Arrays also support modular programming and can handle structured, repeatable and dynamic data.
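As a rough illustration of a variable index driving a test sequence, the sketch below again uses Python in place of structured text. The setpoints, tolerances and the run_sequence function are invented for the example and assume one measurement per test step.

```python
from typing import List

# Hypothetical test recipe: one setpoint and one tolerance per step.
setpoints = [10.0, 25.0, 50.0]
tolerances = [0.5, 1.0, 2.0]


def run_sequence(measurements: List[float]) -> List[bool]:
    """Check each measurement against the step selected by a variable index."""
    results = [False] * len(setpoints)
    step = 0  # the index is an ordinary variable, so one block of logic serves every step
    while step < len(setpoints):
        error = abs(measurements[step] - setpoints[step])
        results[step] = error <= tolerances[step]
        step += 1
    return results


results = run_sequence([10.2, 26.5, 51.0])
```

Adding a fourth test step here means appending one value to each array; the checking logic itself does not change.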

Beckhoff’s Taylor says it’s important to provide the option to program in all languages of the IEC standard, but ST is the most commonly used language among Beckhoff’s customer base. “Once a programmer learns how to create code in structured text, it typically becomes the language of choice,” Taylor says. “Other languages do have their strengths depending on the use case.”

Of the IEC 61131-3 programming languages, ladder diagram and structured text are the most popular, but some of the others still have a place in certain applications. One programming language rarely fits all, and, while some machine builders and system integrators stick to a single language for consistency, other languages might offer benefits for certain applications, and, ultimately, dynamic industrial automation might need a combination of languages to find the best code.