Plug-and-play products that comply with the Open Process Automation Standard are turbocharging testbeds and coming to supplier shelves

ADVANCING AN ENERGY DATA REVOLUTION

IMPACT OF 'EVERYWHERE HMI'

Process improvement is like a trapeze act. You need a trusted partner who lends a hand at the right moment.

Just as athletes rely on their teammates, we know that partnering with our customers brings the same level of support and dependability in the area of manufacturing productivity. Together, we can overcome challenges and achieve a shared goal, optimizing processes with regard to economic efficiency, safety, and environmental protection. Let’s improve together.

Connect

The Unlimited Platform for Total System Integration

ALL YOUR DATA

ALL YOUR DEVICES

ALL YOUR PEOPLE

ALL YOUR LOCATIONS

ALL YOUR OPERATIONS

ONE PLATFORM TO CONNECT ALL YOUR PROCESSES, PEOPLE & PROGRAMS

Learn more about Ignition: ia.io/platform

Are we better prepared?

Four years after the COVID-19 pandemic, process control solutions are tackling the warts it exposed

No time to waste

Circularity report shows effects of resource scarcity

Lost manufacturing time causes

How Syngenta uses advanced analytics software to identify production losses and increase operational efficiency

Overcoming temperature measurement uncertainty

Temperature certainty requires proper testing at operating points

Impact of 'everywhere HMI' Context is critical for process information, so mobile displays must handle the job


ARC Forum tackles AI, sustainability, etc. Also, NIST updates cybersecurity framework


The many faces of interfaces Control's monthly resource guide

Non-contacting radar takes pressure off LPG tank gauging

Solutions tackle the growing need for accurate inventory management and overfill prevention for pressurized gas storage

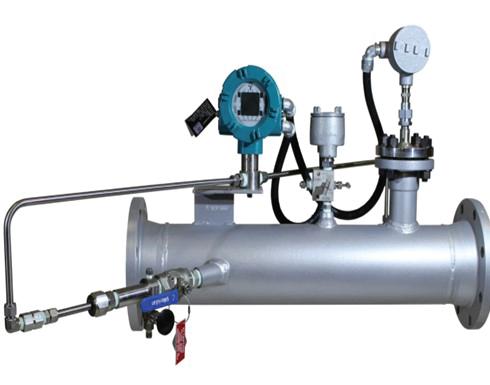

Wedge flowmeter 'seals' the deal Rosemount 9195 wedge flowmeters enhanced by standardized seal assemblies

Choosing the right flowmeter for flare gas detection

Our experts recommend ultrasonic flowmeters to get the job done right

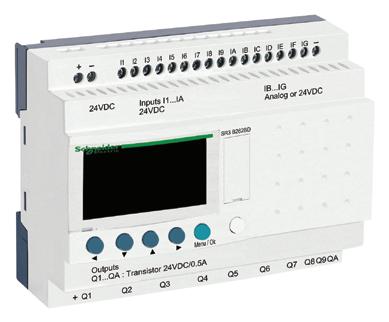

Controller/computer lines blurred by software

PLCs, PACs and IPCs digitalize, and add more I/O, ports, network links and physical protections

Achieving plantwide, multivariable control

These practical techniques help eliminate compartmentalization of multivariable control

See the forest

Prepare to look back, be patient, and explain the basics

Designed for compact installations without compromising on capability, Emerson’s Micro Motion G-Series Flow Meter delivers the benefits of Coriolis technology and is the perfect fit for general purpose applications. G-Series is notable for its lightweight design and is the most compact dual-tube Coriolis meter available. Operators can gain measurement confidence and process insight in every corner of the plant.

Learn more at www.Emerson.com/MicroMotion

Endeavor Business Media, LLC

30 Burton Hills Blvd, Ste. 185, Nashville, TN 37215

800-547-7377

EXECUTIVE TEAM

CEO Chris Ferrell

President

June Griffin

COO

Patrick Rains

CRO

Paul Andrews

Chief Digital Officer

Jacquie Niemiec

Chief Administrative and Legal Officer

Tracy Kane

EVP/Group Publisher

Mike Christian

EDITORIAL TEAM

Editor in Chief

Len Vermillion, lvermillion@endeavorb2b.com

Executive Editor

Jim Montague, jmontague@endeavorb2b.com

Digital Editor

Madison Ratcliff, mratcliff@endeavorb2b.com

Contributing Editor

John Rezabek

Columnists

Béla Lipták, Greg McMillan, Ian Verhappen

DESIGN & PRODUCTION TEAM

Art Director

Derek Chamberlain, dchamberlain@endeavorb2b.com

Production Manager

Anetta Gauthier, agauthier@endeavorb2b.com

Ad Services Manager

Rita Fitzgerald, rfitzgerald@endeavorb2b.com

Operations Manager / Subscription requests

Lori Goldberg, lgoldberg@endeavorb2b.com

PUBLISHING TEAM

Group Publisher

Keith Larson

630-625-1129, klarson@endeavorb2b.com

Group Sales Director

Amy Loria

352-873-4288, aloria@endeavorb2b.com

Account Manager

Greg Zamin

704-256-5433, gzamin@endeavorb2b.com

Account Manager

Kurt Belisle

815-549-1034, kbelisle@endeavorb2b.com

Account Manager

Jeff Mylin

847-533-9789, jmylin@endeavorb2b.com

Subscriptions

Local: 847-559-7598

Toll free: 877-382-9187 Control@omeda.com

Jesse H. Neal Award Winner & Three Time Finalist

Two Time ASBPE Magazine of the Year Finalist

Dozens of ASBPE Excellence in Graphics and Editorial Excellence Awards

Four Time Winner Ozzie Awards for Graphics Excellence

Four years after the COVID-19 pandemic, process control solutions are tackling the warts it exposed

IT’S sometimes hard to wrap my head around the fact that it’s been four years since the COVID-19 pandemic changed, well, a lot. It sure doesn’t seem that long ago. Then again, in terms of our preparation for another globally disruptive event, it seems like generations have passed.

There shouldn’t be any doubt that companies and office workers are now better set up to work remotely in a pinch. Many schools and universities don’t even have “snow days” anymore. Instead, they use the remote learning processes honed during the pandemic to replace downhill sleds with online skeds (poor kids). But, what about industry?

It’s worth pondering if the world’s plants, refineries, et al., are in a better position to handle another pandemic, or worse. Would the global supply chain suffer the same fate that (still) has many scrambling for resources? More importantly, would fewer people be put in harm's way to perform essential jobs such as power generation, food processing or pharmaceutical manufacturing?

The short answer is nothing beats experience. Thankfully, process control and automation technologies continue to refine solutions for increasing operational efficiency, remote maintenance and data security. While not specifically focused on another pandemic, the groundswell for automated process control has reached a fever pitch these days. Just attend any industry event this spring conference season and you’re certain to hear experts talk at length about the need to shore up data analytics, communicate seamlessly across products and systems, and transfer knowledge at a moment’s notice. This month, we take a look at some of these advancements throughout our coverage in Control and ControlGlobal.com.

Our cover story examines developments for open process automation (p. 38), which should enable faster technology development and deployment of the solutions that can foster more resilient operations across several industries. In addition, there's increased recognition of the need for investment in data analytics (p. 44), particularly cloud-based software that lets technicians access vital decision-making data from just about anywhere and on any device.

So, the next time we're all confined to our homes, productivity won't be stuck next to us.

LEN VERMILLION Editor-in-Chief lvermillion@endeavorb2b.com


"Thankfully, process control and automation technology continues to refine solutions for increasing operational efficiency, remote maintenance and data security."

A new report shows that 92% of industrial businesses in the U.S. have been affected by resource scarcity, which has led to increasing costs, supply chain disruptions and production slowdowns. In response, most businesses surveyed in the report support circularity regulations, and 67% say they’ll invest more over the next three years despite the lack of a standardized approach and slow adoption of key practices.

The global survey, "Circularity: no time to waste," from ABB Motion and conducted by Sapio Research in October 2023, shows industry connects improving circularity with energy efficiency, and that energy is among the biggest waste sources.

Raw materials (39%) are seen as the scarcest resource, followed by labor (35%), and electronic components (33%). Resource scarcity has led to increased costs for 39% of businesses, as well as supply chain disruptions for 39%, and slowdowns in production capacity for 29%. Despite energy being an increasingly scarce resource, more than 40% reported it’s their biggest source of waste.

The global survey gathered responses from 3,304 industrial decision-makers across 12 countries, including 400 respondents in the U.S. Respondents represented a range of industries, such as energy, chemicals, oil and gas, and utilities.

While there’s optimism about investing in circularity, the survey identified obstacles to immediate progress. For example, no single definition of "circularity" was accepted by a majority of the respondents. Also, only 14% saw circularity as a company-wide responsibility, but this group experienced the highest level of improvements across key circularity metrics, such as energy consumption, use of recycled materials and carbon emissions.

The survey also revealed limited adoption of important circular practices in the U.S., including partnering with waste management companies (41%), incorporating energy-efficient technologies (37%), and promoting circular principles in the supply chain (36%). Meanwhile, 81% are using recycled materials in their products to some extent.

Investing in circularity has already led to measurable benefits, including waste reduction (44%) and energy efficiency improvements (49%). Though some businesses express concern about upfront investment required, many anticipate long-term improvements in process efficiency and cost control.

Most respondents (84%) agree a circular economy encourages innovation and drives competitiveness. They also support increased regulation and reporting requirements (80%), and want more government support for adopting circular business practices (82%). The full report is available at bit.ly/CircularityReport

Another report ABB released recently covers the journey to fossil-free steel, and examines decarbonization challenges including cost, complexity in transitioning to lower-carbon technologies, and access to hydrogen, clean electricity, and fossil-free carbon.

Current steel production is carbon- and energy-intensive, and is classified as one of the six “hard to abate” sectors. Globally, the steel industry is responsible for an estimated 8% of the world’s energy demand, and generates 7-9% of CO2 emissions, mostly from burning fossil fuels.

To meet U.N. Paris Agreement criteria on climate change, and limit global temperature increase to less than 1.5 °C compared with pre-industrial levels, the steel industry must achieve net-zero emissions by 2050. This will require radical transformation, especially as global steel demand is projected to increase 30% by the same date.

The report spotlights fossil-free steel innovation in five steel-producing markets, and presents actions that steel producers can take to reduce carbon in the short and medium term, as well as steps to take with industry suppliers and partners to work together towards a fossil-free steel future.

The full report is located at bit.ly/Fossil-FreeSteel

SPONSORED


More than six decades of innovation as Emerson’s Rosemount™ technologies continue to transform industrial temperature measurement

6 Error-proof DESIGN

8 Simplified INSTALLATION

10 Resilient OPERATIONS

12 Lifecycle PERFORMANCE

Temperature is the most widely measured variable in industries ranging from oil & gas to chemical and food and beverage to pharma. Accurate measurements are vital to product quality as well as an operation’s safety and efficiency. But ensuring that critical measurements are delivered in a timely, reliable and trustworthy fashion can present operators with a range of challenges. For more than 60 years, Emerson’s Rosemount™ Temperature Measurement solutions have led the industry in combating such challenges through new and innovative technologies. Control recently caught up with Michael Olivier, Vice President of Temperature Measurement Instrumentation at Emerson, to learn more about how Emerson’s innovative solutions continue to help processors tackle their biggest temperature measurement challenges.

Q: Why are temperature measurement solutions vital to the success of process industries?

A: Accurate temperature measurements help ensure product and process safety, quality, and efficiency in a variety of process industries. Temperature measurement serves as a key indicator of many processes such as complete reactions for chemical producers, distillates of desired purity for oil refiners, and thorough clean-in-place cycles for the food & beverage industry, just to name a few examples. In addition, the global push for increased sustainability means inaccurate temperature measurement can lead to not only inefficient processes, but also the discharge of unnecessary greenhouse gases into the atmosphere.

Q: What distinguishes Emerson as a leader in temperature instrumentation?

A: Emerson focuses on creating innovative and sustainable solutions, producing the highest quality outcomes, and ensuring our customers’ needs are exceeded with our unmatched support and expertise. Our Rosemount Temperature Measurement solutions are no different. From transmitters to thermowells to sensors and more, we offer a complete breadth of temperature products and solutions available in fully integrated assemblies ready for out of the box installation. Our reliability, quality, expertise, and innovation set us apart from our competitors.

Q: What are the primary challenges associated with temperature measurement?

A: There are four phases in the lifecycle of temperature solutions: design, installation, operation, and maintenance. Challenges can occur in each phase. Most design challenges are associated with thermowell calculations to ensure they are designed appropriately for their application.

Temperature installations often need to be done when the process is not running and can require piping modifications to facilitate the installation of the thermowell. Unexpected shutdowns can occur when the device is operational due to failing or degrading sensors or environmental factors. Failing, degrading, or malfunctioning sensors can also cause inaccurate measurements when maintaining the device throughout its lifecycle.

It is important to consider all phases and their potential challenges when specifying the temperature solution that best fits your application. Emerson considers these potential challenges in all phases when engineering our products and tools.

Q: What solutions does Emerson offer to help combat these challenges?

A: Emerson offers free thermowell design software that significantly decreases design time and eliminates manual trial-and-error calculations. We created a more robust thermowell with a unique design that can withstand harsh process conditions that are unsuitable for traditional thermowells. In addition, we were the first to design a technology to accurately measure process temperature without a thermowell.

“Accurate temperature measurements help ensure product and process safety, quality, and efficiency in a variety of process industries.”

— Michael Olivier

Emerson also offers a full portfolio of temperature transmitters that have been recognized by our customers as the number one brand of transmitters for over 20 years in Control’s Readers’ Choice Awards. These transmitters have a full suite of advanced diagnostic capabilities to help customers do more with less by optimizing operations and simplifying maintenance.

Q: Finally, what future temperature measurement innovations can readers expect from Emerson?

A: Emerson continues to be at the forefront of temperature measurement innovation. With the next generation of temperature instrumentation, we aim to provide our customers with even higher quality devices with superior performance and usability to match. This includes improved user interfaces, advanced diagnostics, industry-leading accuracy specs and other features to make operation more intuitive for decades to come.

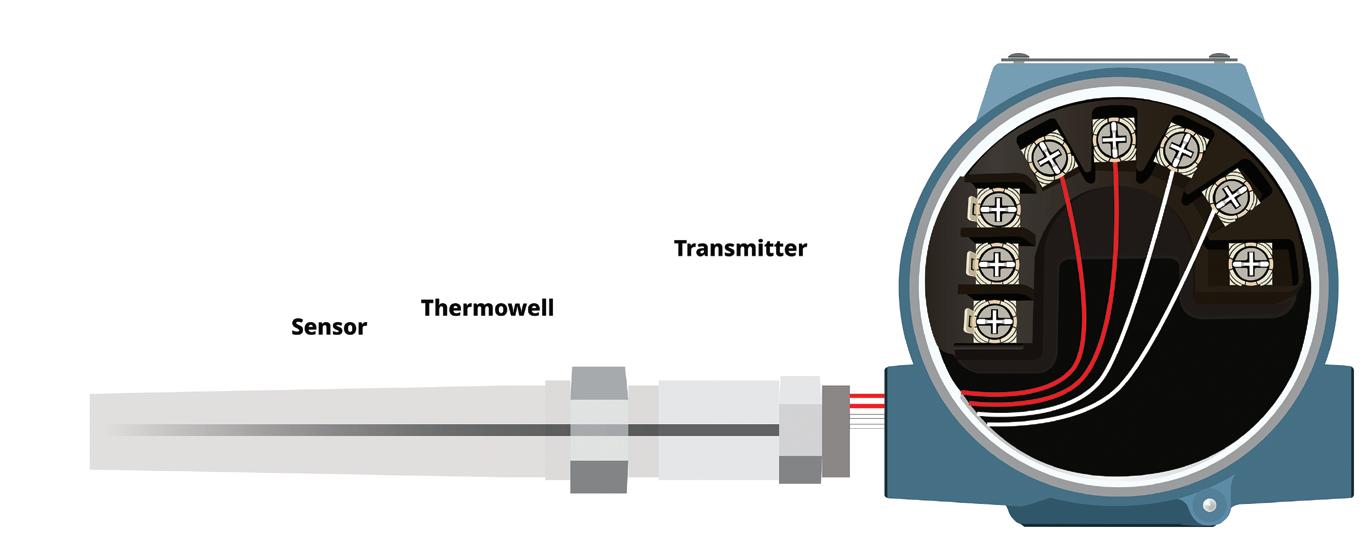

In most cases, a complete process instrumentation solution for temperature measurement consists of three components: a transmitter, a sensor and a thermowell. Traditionally, its design comes with its share of complexity and a risk of failure if not done properly. Most of the challenge lies with the design of the thermowell, a metal alloy sheath that penetrates the process piping and protects the sensor from often harsh process conditions. Think about an oil refinery’s catalytic cracker regenerator that runs in excess of 1,000 °F. Add to such high temperatures turbulent flows and fluid velocities that can flirt with the speed of sound, and one quickly appreciates the need for a well-designed thermowell.

Industry best practice is to perform thermowell wake frequency calculations, which ensure that the thermowell design will withstand the process conditions to which it is exposed. These calculations account for 75% of the overall engineering work to design and specify a complete temperature measurement point. Most of the design time is spent on the thermowell because it is the component that comes into direct contact with the process. It is also a pressure-retaining component, so a poorly designed thermowell can lead to safety concerns as well as unplanned and costly shutdowns.
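As a rough illustration of what a wake frequency check compares, the sketch below estimates the vortex shedding frequency from the Strouhal relation (frequency = Strouhal number x velocity / tip diameter) and compares it with the thermowell's natural frequency. The fixed Strouhal number of 0.22, the 0.8 frequency-ratio limit, the function names and the sample values are all simplifying assumptions for illustration. A real evaluation under the standard also covers stress and pressure checks that are not shown here.

```python
def shedding_frequency_hz(velocity_m_s: float, tip_diameter_m: float,
                          strouhal: float = 0.22) -> float:
    """Vortex shedding frequency f_s = St * V / d (St = 0.22 is an assumed constant)."""
    if tip_diameter_m <= 0:
        raise ValueError("tip diameter must be positive")
    return strouhal * velocity_m_s / tip_diameter_m


def passes_frequency_check(velocity_m_s: float, tip_diameter_m: float,
                           natural_frequency_hz: float,
                           max_ratio: float = 0.8) -> bool:
    """True when the shedding frequency stays below max_ratio * natural frequency."""
    f_s = shedding_frequency_hz(velocity_m_s, tip_diameter_m)
    return f_s < max_ratio * natural_frequency_hz


# Hypothetical tag: 10 m/s flow, 20 mm tip diameter, 400 Hz natural frequency
f_s = shedding_frequency_hz(10.0, 0.020)
ok = passes_frequency_check(10.0, 0.020, natural_frequency_hz=400.0)
print(f"shedding frequency: {f_s:.1f} Hz, passes: {ok}")
```

Raising the velocity or shrinking the tip diameter pushes the shedding frequency toward the natural frequency, which is why each change in process conditions calls for a recalculation.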

Designing a thermowell is a complex task, and there are several considerations that must be factored into both the overall design and the specifications for each application. Among those considerations are allowances for material compatibility with the process as well as the mounting types and style appropriate to the application. Other considerations may revolve around your measurement objective. Is your temperature measurement intended to be used for closed-loop control or monitoring purposes?

In addition, thermowell calculations must adhere to the most current ASME PTC-19.3 TW industry standard. Most individuals are not well-versed in the requirements of the standard and could potentially design a thermowell that fails to meet these requirements.

Historically, performing such calculations included the use of manual spreadsheets and numerous manual trial-and-error iterations. This process was tedious, time-consuming and prone to errors.

Emerson developed the Rosemount™ Thermowell Design Accelerator to ease the complexities of the thermowell design process. This free, easy-to-use and intuitive online software tool can execute thermowell calculations up to 90% faster by eliminating many of the manual tasks of the past.

For example, if a user changes process specifics, they’ll have to do another calculation to ensure the thermowell isn’t affected. In the past, that required the use of trial and error and spreadsheets, resulting in a 50-tag project typically taking about 40 hours to calculate. The Rosemount Thermowell Design Accelerator can reduce that same project design time to about two hours. It is able to upload and calculate up to 1,000 thermowell tags at once and, uniquely, includes auto revision functionality, allowing the Accelerator to continue revising the thermowell dimensions after a failed calculation until it finds a passing solution.

Rosemount Thermowell Design Accelerator can execute thermowell calculations up to 90% faster by eliminating many of the manual design tasks.

Another feature that makes the software unique is its ability to not only recalculate failed tags, but also automatically generate thermowell and sensor model numbers that are specific to the solution that meets the application’s process conditions.

In addition, to ensure the most current standards are being used, all information from the software is based on the ASME PTC-19.3 TW standard.

In situations where a traditional thermowell won’t work for the specific application, the software can recommend a different type of product to meet the needs of the application.

Among those products are the Rosemount Twisted Square™ Thermowell and Rosemount X-well™ Technology. The Rosemount Twisted Square dampens the effects of the vibrations on the thermowell, thus making it a more robust solution. This is achieved by using a unique helical-shaped stem profile that is designed to eliminate more than 90% of the dynamic stresses that a conventional thermowell would experience. This design allows for operation at higher fluid velocities.

It is also designed to improve the reliability of a thermowell and to reduce the risk of thermowell failures with changing process conditions, including start-up, shutdown or unintended events. In addition, because of its ability to withstand harsher process conditions, the Rosemount Twisted Square allows for insertion lengths that reach the middle of the pipe for highest temperature measurement accuracy. The Rosemount Twisted Square Thermowell can easily be expanded to new applications and can reduce inventory since one thermowell fits a range of requirements.

Rosemount X-well Technology is Emerson’s non-intrusive solution to accurately measure process temperatures without using a thermowell. It features a patented thermal conductivity algorithm and, with an understanding of the thermal conductive properties of the temperature measurement assembly and piping, can calculate internal process temperatures with accuracy on par with a traditional thermowell. In addition, Rosemount X-well Technology simplifies measurement point specification, installation and maintenance while reducing possible leak points.

To avoid the complexities of thermowells, or if a thermowell solution is not possible for an application, Emerson offers Rosemount X-well Technology which accurately measures process temperature without a thermowell.

The Rosemount Thermowell Design Accelerator can help turn a once time-consuming and tedious process into an efficient and accurate thermowell design solution. Whether it is recommending a traditional thermowell or an alternate approach, you can trust the Rosemount Thermowell Design Accelerator to give you the best possible temperature measurement solution for your application.

When it comes to accurately measuring the temperature of the flow inside a pipe, the thermowell has long played an essential role. It brings the sensor into close, conductive proximity with the process fluid while also protecting the sensor from often harsh conditions. But the thermowell also comes with several installation challenges.

First, installation of a thermowell into a pipe requires a shutdown of the process, directly affecting productivity. Pipe modifications, such as welding or cutting, are required to install a thermowell, and classified environments may need to be fully cleared of explosive hazards for the work to be performed.

In addition, small line sizes present a challenge. Stem conduction of ambient heat sources can impact measurement accuracy when the immersion depth is less than 10 times the thermowell tip diameter. It is often impossible to achieve this immersion depth in small line sizes without significant modifications to the pipe, such as adding a tee.
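The immersion rule of thumb above can be expressed as a one-line check. This is a minimal sketch: the function name, the millimeter units and the sample dimensions are assumptions chosen for illustration, and only the 10x ratio comes from the text.

```python
def meets_immersion_rule(immersion_depth_mm: float, tip_diameter_mm: float,
                         min_ratio: float = 10.0) -> bool:
    """True when immersion depth is at least min_ratio times the tip diameter."""
    if tip_diameter_mm <= 0:
        raise ValueError("tip diameter must be positive")
    return immersion_depth_mm >= min_ratio * tip_diameter_mm


# Hypothetical small line: only 50 mm of immersion available for a 13 mm tip
print(meets_immersion_rule(50.0, 13.0))    # 50 mm is less than the 130 mm needed
print(meets_immersion_rule(150.0, 13.0))   # 150 mm satisfies the rule
```

With a 13 mm tip the rule calls for 130 mm of immersion, which shows why small line sizes often cannot satisfy it without adding a tee or similar modification.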

Finally, installation of a temperature measurement solution becomes more challenging when components are sourced from multiple vendors and made to fit properly. Assembling components from multiple vendors complicates and lengthens the overall installation process.

With these and other thermowell-related challenges in mind, Emerson developed Rosemount X-well Technology, which measures process temperature without the need for a thermowell. This technology is non-intrusive, as the instrumentation attaches around the outside of the pipe. When installing Rosemount X-well, users don’t need to shut down the process because the instrumentation isn’t going inside the pipe, in sharp contrast to thermowell installations. Instead, the sensor contacts only the outside surface of the pipe, resulting in a 75% reduction in overall installation time.

Rosemount X-well Technology accurately measures process temperature without the use of a thermowell or process penetration, thus avoiding process shutdowns to install a new temperature measurement point.

Rosemount X-well Technology is available with either the Rosemount 3144P Wired Temperature Transmitter or the Rosemount 648 Wireless Temperature Transmitter. Using the wireless transmitter option provides additional benefits of not having to run new wires to the device for power and communications. As a result, wireless instruments are routinely commissioned in less than an hour, leading to an even greater reduction in overall installation time.

Rosemount X-well Technology uses a built-in algorithm to extrapolate the internal process temperature based on surface temperature, plus the conductive properties of the pipe (composition and thickness). This delivers temperature measurement accuracy in line with that of a sensor in a traditional thermowell. Plus, Rosemount X-well does not encounter the issues that often make thermowells inaccurate for line sizes smaller than 5 inches.

Rosemount X-well Technology is also suitable for any applications that have high-velocity, abrasive material within the process or corrosive processes that dictate an exotic material for the thermowell. It also makes sense for any application where a traditional thermowell path is too costly or too complicated.

One European chemical maker recently utilized Rosemount X-well Technology with Rosemount 648 Wireless Temperature Transmitters to further simplify the installation of 65 temperature measurement points to determine flowrates via energy balances for a heat exchanger that was particularly sensitive to erosion. The solution was commissioned in under an hour and seamlessly connected to the existing wireless infrastructure without process shutdown or production loss.

Universal Pipe Mount’s cut-to-fit banding design brings new functionality to this technology. Standardized units can be stocked in inventory, installed, moved, and reinstalled on pipes of different line sizes. This provides value to operators, as they can rapidly deploy measurement solutions from their standardized inventory in emergency situations. They can also use a single device to validate several existing insertion measurement points over time.

Overall, for those looking to avoid installation and other thermowell challenges while still obtaining accurate process temperature measurements, Rosemount X-well Technology is the go-to solution.

For those applications where a thermowell still makes sense, another Emerson approach that significantly streamlines installation time and effort is a fully integrated solution that includes sensor, transmitter and thermowell already assembled and ready to install.

This approach offers advantages from a procurement standpoint, eliminating the need for multiple quotes and purchase orders. In addition, it avoids the management of multiple shipments and lead times from separate vendors. Plus, different sources of each of those components can require time-consuming workarounds to accommodate unanticipated incompatibilities.

Sizing the thermowell and the sensor together is more complicated if you purchase them from different sources. It can increase the risk of a misfit: either the sensor’s too long and it won’t fit in the thermowell, or the sensor’s too short, which could lead to measurement inaccuracies if the tip of the sensor is not making contact with the inner wall of the thermowell.

Emerson offers fully integrated temperature assemblies that are ready to install upon arrival at your facility.

Meanwhile, each temperature transmitter must be configured to match the sensor type that’s being wired. If the user buys them pre-assembled from the same vendor, this extra step is eliminated. Separate sourcing also creates extra work when it comes to wiring in the field. Separately purchased transmitters require the sensor to be physically wired to the terminal block.

Users can achieve a higher level of performance when the transmitter and sensor are ordered together by specifying Callendar-Van Dusen (CVD) constants. These are coefficients that characterize how a specific resistance temperature detector (RTD) operates at different temperatures. Sourcing components together makes it easier to achieve transmitter-sensor matching because Emerson can preconfigure the transmitter with CVD constants for that specific RTD at the factory. There’s no manual entry of the constants into the transmitter as would be required to pair an RTD from one company with a transmitter from another.
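For readers unfamiliar with the CVD constants mentioned above, the sketch below shows the general form of the Callendar-Van Dusen equation, using the nominal IEC 60751 coefficients for a standard platinum RTD as defaults. It illustrates the published equation only and is not a representation of any transmitter's firmware. The function names are chosen for illustration. Transmitter-sensor matching amounts to replacing the nominal `r0`, `a`, `b` and `c` values with the ones measured for an individual sensor.

```python
import math

# IEC 60751 nominal coefficients for a standard platinum RTD (alpha = 0.00385)
A_NOMINAL = 3.9083e-3
B_NOMINAL = -5.775e-7
C_NOMINAL = -4.183e-12


def cvd_resistance(temp_c, r0=100.0, a=A_NOMINAL, b=B_NOMINAL, c=C_NOMINAL):
    """RTD resistance in ohms at temp_c (deg C) from the Callendar-Van Dusen equation."""
    r = r0 * (1.0 + a * temp_c + b * temp_c ** 2)
    if temp_c < 0.0:
        # The C term applies only below 0 deg C
        r += r0 * c * (temp_c - 100.0) * temp_c ** 3
    return r


def cvd_temperature(resistance, r0=100.0, a=A_NOMINAL, b=B_NOMINAL):
    """Invert the equation for temperatures at or above 0 deg C (quadratic solution)."""
    disc = a * a - 4.0 * b * (1.0 - resistance / r0)
    return (-a + math.sqrt(disc)) / (2.0 * b)


# A nominal Pt100 reads about 138.5 ohms at 100 deg C
print(round(cvd_resistance(100.0), 3))
print(round(cvd_temperature(138.5055), 3))
```

Because no two RTDs follow the nominal curve exactly, a transmitter that uses the nominal coefficients carries a small sensor-specific error, which is what the matched constants are meant to remove.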

Emerson’s complete point solutions and Rosemount X-well Technology give process industry users streamlined and non-intrusive temperature measurement solutions that help reduce the time and effort spent during the installation process.

Among the sentences that operators and plant managers don’t want to hear, “We need to shut down the plant” ranks near the top. Unplanned shutdowns can have a significant impact on the profitability of a business. Furthermore, accurate process measurements throughout an operation are critical to the quality, safety and yield of the process. That’s why continuous measurement in process control applications is essential. To operate continuously, users face two main challenges: performance optimization, ensuring sensors are working properly and electrical noise interference is minimized; and accuracy optimization, ensuring that the sensor physically accesses the most representative measurement point.

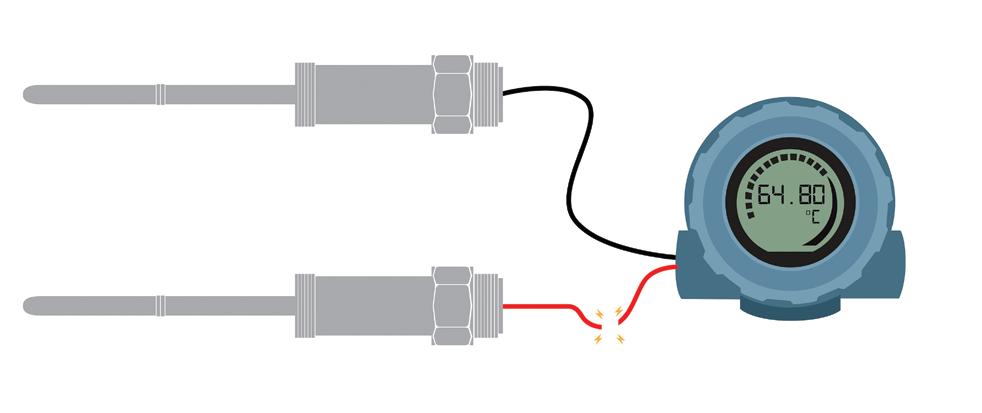

Temperature measurements can experience a multitude of issues that impact plant operation. Despite protective measures, sensors are prone to failure and degradation that can cause a loss of measurement integrity. Small voltages (known as thermal electromotive forces, or EMFs) can also build up in sensor wiring and cause inaccuracies in temperature readings through a resultant change in the measured resistance.

In addition to issues with sensor failure and degradation, environmental factors can have a significant impact on the quality of a temperature reading received by the control system. Transmitter wiring can be susceptible to electrical noise and vibrations. Suboptimal conditions are commonly found in installations near blowers, pumps and compressors. Transient events such as lightning strikes or electrical discharges can cause inaccurate readings, which lead to false alarms. Such alarms can result in a shutdown of the process and require operations personnel to perform a check on the sensor.

Critical control or custody transfer applications, such as batch reactors, lease automatic custody transfer (LACT) skids, and safety loops require a high level of temperature measurement accuracy. Often, resistance temperature detector (RTD) sensors are used to take such measurements. These sensors work by measuring resistance changes in a temperature-sensitive alloy, and the accuracy of that correlation can be affected by errors and inconsistencies introduced during the sensor’s manufacture.

Emerson’s Hot Backup Capability will automatically switch to a secondary sensor if the primary sensor fails.

Accuracy of a temperature measurement can also be impacted by the immersion length of the thermowell used to protect the sensor. The highest level of accuracy and time response in a pipe application is obtained by inserting the tip of the thermowell (along with the tip of the sensing element) into the very center of the pipe. However, this goal is often not achieved, as thermowell length sometimes must be reduced to endure harsh process conditions and vibration stresses.

Emerson offers a range of solutions to help tackle these challenges and to better ensure continuous temperature measurement and operation. These include Transmitter-Sensor Matching, the Rosemount™ Twisted Square™ Thermowell, and a range of advanced diagnostic features included in Rosemount Temperature Transmitters.

Temperature transmitters improve the performance and reliability of industrial temperature measurements. They are commonly used in the chemical, oil and gas, refining, food and beverage, life sciences and many other process industries. Rosemount Temperature Transmitters are available with a suite of sensor and environmental diagnostic features that help users proactively identify and address issues before they impact productivity or safety.

One of these features is Emerson’s Hot Backup™ Capability. This capability features a redundant, dual-input sensor configuration designed to mitigate the effects of a failed sensor. If the primary sensor fails, the transmitter will automatically switch to the secondary sensor.

The Hot Backup feature also displays an alert indicating that the primary sensor has failed so that it can be replaced. This capability is especially beneficial for critical applications where a failed sensor and subsequent lost measurement could cause safety concerns.

Emerson’s Rosemount Temperature Transmitters also offer several advanced diagnostic features to limit the impact of environmental conditions on the accuracy of a temperature measurement. One of those diagnostic features is transient filtering, which prevents intermittent transient signals (such as those resulting from an electrically noisy environment or high vibration) from affecting the measurement. The transmitter disregards apparent temperature spikes and continues to transmit the last known reliable temperature value, which prevents sensor signal interruption and avoids a potential process upset or trip condition.

Another useful diagnostic feature is Open Sensor Hold-Off. Based on calculations performed by the transmitter, this feature determines whether a high-voltage transient event (such as lightning or electrostatic discharge) or an actual open sensor event has occurred. False open sensor indications can cause unnecessary alarms. To avoid these alarms, the transmitter ignores the outlier and outputs the previously established value.
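The hold-last-good-value behavior described for transient filtering and Open Sensor Hold-Off can be illustrated with a short sketch. This is not Emerson's algorithm, which the article does not detail. The function name `hold_off_filter`, the `max_step` threshold and the `hold_limit` count are assumptions chosen for illustration, and `None` stands in for an apparent open-sensor reading.

```python
from typing import List, Optional


def hold_off_filter(
    samples: List[Optional[float]],
    max_step: float = 20.0,   # assumed: largest believable change between samples
    hold_limit: int = 3,      # assumed: how many suspect samples to ride through
) -> List[Optional[float]]:
    """Replace short-lived spikes or dropouts with the last good value.

    A sample is suspect if it is None (apparent open sensor) or if it jumps
    more than max_step from the last good value. Suspect samples are held at
    the last good value for up to hold_limit consecutive samples. If the
    condition persists longer, the raw value is passed through so that a real
    failure or a real process change is still reported.
    """
    output: List[Optional[float]] = []
    last_good: Optional[float] = None
    held = 0
    for value in samples:
        suspect = value is None or (
            last_good is not None and abs(value - last_good) > max_step
        )
        if not suspect:
            held = 0
            last_good = value
            output.append(value)
        elif held < hold_limit:
            held += 1
            output.append(last_good)
        else:
            # Condition persisted: stop holding and report what is measured.
            output.append(value)
            if value is not None:
                last_good = value
                held = 0
    return output
```

In this sketch, a single spike or a single dropout is replaced by the previous reading, while an open sensor that persists past `hold_limit` samples is eventually reported as failed.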

Additionally, Rosemount Temperature Transmitters are equipped with an electromotive force (EMF) compensation feature. This diagnostic analyzes sensor loops and compensates for thermal EMFs, resulting in more accurate temperature readings.

Apart from their diagnostic features, Rosemount Temperature Transmitters are available in a variety of form factors and housing styles to optimize their performance based on the needs of the application. Of the available form factors, the field mount is the most robust design. This solution features a dual compartment housing, meaning that the device electronics are in a separate chamber from the terminal block. This helps prevent the presence of moisture (from humidity or other sources) and subsequent corrosion of the device electronics.

Thermowells that must be shortened from their optimal length to withstand an application’s process conditions necessarily forfeit some degree of accuracy. Emerson’s innovative solution, the Rosemount Twisted Square Thermowell, features a unique helical stem profile that reduces dynamic stresses by more than 90%. This reduction in vibrational effects allows for the tip of the thermowell to rest in the center region of the pipe, allowing for the most accurate measurement possible.

In some cases, users can live with the error associated with the actual resistance curve of an RTD. In critical control and custody transfer applications, however, this error can be detrimental to the plant’s operation. Fortunately, Emerson’s temperature transmitters can be specified with a Transmitter-Sensor Matching option, which decreases the error associated with the total measurement by up to 75%.

This reduction in error is achieved by programming the four constants from the sensor’s Callendar-Van Dusen equation into the transmitter. When specified with the Transmitter-Sensor Matching option, Emerson programs the transmitter with the appropriate constants from the factory, allowing users to achieve highly accurate temperature measurements that reliably optimize operational performance.
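For readers who want to see what those constants do, the Callendar-Van Dusen equation is commonly written as R(T) = R0[1 + A·T + B·T² + C·(T − 100)·T³], with the C term applied only below 0 °C. The sketch below is an illustration, not the transmitter's firmware. It assumes the four constants are expressed as R0, A, B and C, and it uses the nominal IEC 60751 values for a standard Pt100 as defaults. A matched sensor would supply its own values from its calibration certificate.

```python
import math

# Nominal IEC 60751 constants for a standard Pt100 (assumed defaults).
R0, A, B, C = 100.0, 3.9083e-3, -5.775e-7, -4.183e-12


def cvd_resistance(t_c, r0=R0, a=A, b=B, c=C):
    """Resistance in ohms at t_c (deg C) from the Callendar-Van Dusen equation."""
    c_term = c * (t_c - 100.0) * t_c**3 if t_c < 0 else 0.0
    return r0 * (1.0 + a * t_c + b * t_c**2 + c_term)


def cvd_temperature(r_ohm, r0=R0, a=A, b=B, c=C):
    """Temperature in deg C for a measured resistance.

    At or above 0 deg C the equation is a quadratic with a closed-form root.
    Below 0 deg C the cubic term applies, so this sketch uses bisection
    between -200 and 0 deg C instead.
    """
    if r_ohm >= r0:
        return (-a + math.sqrt(a * a - 4.0 * b * (1.0 - r_ohm / r0))) / (2.0 * b)
    low, high = -200.0, 0.0
    for _ in range(60):
        mid = (low + high) / 2.0
        if cvd_resistance(mid, r0, a, b, c) < r_ohm:
            low = mid
        else:
            high = mid
    return (low + high) / 2.0
```

Calling `cvd_temperature(r_ohm)` with the defaults corresponds to using the standard table, while passing a sensor's own `r0`, `a`, `b` and `c` corresponds to the matched case.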

Once a measurement point is designed and installed correctly, the focus shifts to maintaining temperature measurement accuracy and operational integrity over the long haul. Proper maintenance of a temperature measurement point can help reduce the risk of measurement failure as well as ensure ongoing accuracy. High-quality resistance temperature detectors (RTDs) can be extremely stable, but thermocouples can begin drifting as soon as they are put into operation. All temperature sensors, even high-quality ones, can degrade over time due to harsh process and environmental conditions.

Sensor degradation can lead to an abnormal measurement condition called on-scale failure. This is the indication of a valid measurement value that appears to be within process alarm limits, when the data is, in fact, inaccurate. If personnel cannot identify atypical temperature behavior such as on-scale failure, they might be unaware of problems occurring within the process. This lack of awareness can lead to unnecessary process shutdowns and safety issues, as well as negatively impact process efficiency and quality.

Diagnostic innovations have advanced the continuous maintenance capabilities for process instrumentation and sensor health monitoring, giving users confidence in both instrument performance and measurement accuracy. Emerson’s Rosemount™ Temperature Transmitters feature advanced diagnostic capabilities that help proactively identify issues before they impact productivity and provide information to the right people at the right time, resulting in faster decision making.

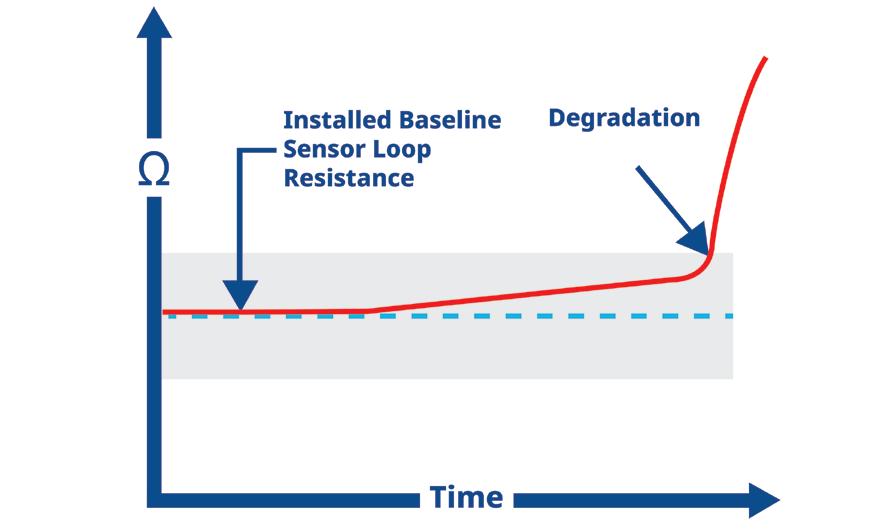

The Thermocouple Degradation Diagnostic monitors the resistance in a thermocouple sensor loop. This diagnostic notifies operators of an increase in sensor loop resistance, which can indicate that the sensor is deviating from the true temperature value and potentially failing.

T/C Degradation alerts users of a degrading sensor by detecting loop resistance.

The diagnostic lets users set a resistance limit for each unique installation. For example, if a plant’s standard installation is running at 30 ohms, the transmitter can be set to alert technicians if it hits a threshold of twice the baseline, or in this case, 60 ohms. Once the loop resistance hits the threshold, the transmitter will keep the process operating but send an alert.

Another diagnostic available in Rosemount Temperature Transmitters is Measurement Validation. It works by evaluating sensor noise. Before a sensor fails, it will exhibit signs of degradation such as increased signal noise, which will often result in inaccurate but transient on-scale readings. Measurement Validation monitors the signal noise and uses it to calculate a deviation value, indicating the magnitude of the noise, which is compared to a user-selected alert limit. If this limit is exceeded, the user is notified, allowing action to be taken.

Measurement Validation can detect increases in signal noise due to loose or corroded connections, high vibration levels or electronic interference. In addition to detecting on-scale failures as a result of these conditions, Measurement Validation also performs a rate of change calculation to differentiate abnormally fast temperature changes due to sensor failure from actual temperature swings.
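The two checks described above, a noise-based deviation value and a rate of change calculation, can be sketched as follows. The article does not say how the transmitter computes either quantity, so everything here is an assumption: the deviation is taken as the standard deviation of sample-to-sample differences (which a steady ramp does not inflate), the rate is the average change per second over the window, and `measurement_check`, `noise_limit` and `rate_limit_per_s` are hypothetical names.

```python
from statistics import mean, stdev


def measurement_check(window, sample_period_s, noise_limit, rate_limit_per_s):
    """Return (deviation, noise_alert, rate_alert) for a window of readings.

    window: at least three consecutive temperature readings.
    deviation: standard deviation of the differences between neighbors,
        used here as a simple stand-in for signal noise.
    noise_alert: True when deviation exceeds the user-selected limit.
    rate_alert: True when the average rate of change over the window is
        faster than the process is assumed to be able to move.
    """
    diffs = [later - earlier for earlier, later in zip(window, window[1:])]
    deviation = stdev(diffs)
    rate = abs(mean(diffs)) / sample_period_s
    return deviation, deviation > noise_limit, rate > rate_limit_per_s
```

With this formulation, a smooth but fast ramp trips only the rate check, while a reading that jitters around a constant value trips only the noise check.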

Sensors are sometimes prone to drift, especially when exposed to extreme process conditions. Sensor drift is a common issue found in the chemical, refining and power industries and in applications such as coker heaters, crude vacuum distillation units, furnaces and hydrocrackers. Drift can have a significant impact on the accuracy and reliability of sensor data. Gradual, subtle changes in the sensor happen over time, causing discrepancies between the true process temperature and the output of the sensor.

For transmitters with dual sensor input capability, Sensor Drift Alert is another diagnostic tool that provides insight into sensor health. Sensor Drift Alert works by measuring two sensors simultaneously and monitoring the temperature difference between them. If one starts to drift, the other can be relied upon to continue to provide accurate data until the failing sensor is replaced. Because there are two readings for the same measurement point, technicians are quickly alerted if the readings diverge.
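The dual-sensor logic described here and in the earlier Hot Backup discussion can be summarized in a few lines. This is a simplified sketch rather than the transmitter's actual behavior. `evaluate_dual_sensor`, `DualSensorStatus` and `drift_limit` are hypothetical names, and `None` represents a failed sensor.

```python
from typing import NamedTuple, Optional


class DualSensorStatus(NamedTuple):
    output: Optional[float]   # value passed on to the control system
    primary_failed: bool      # alert: primary sensor needs replacement
    drift_alert: bool         # alert: the two readings have diverged


def evaluate_dual_sensor(
    primary: Optional[float],
    secondary: Optional[float],
    drift_limit: float,
) -> DualSensorStatus:
    """Choose the reported value and raise alerts for a dual-input transmitter."""
    if primary is None:
        # Backup-style behavior: fall back to the secondary sensor and flag it.
        return DualSensorStatus(secondary, True, False)
    if secondary is None:
        # Drift cannot be judged without a second reading.
        return DualSensorStatus(primary, False, False)
    drifting = abs(primary - secondary) > drift_limit
    return DualSensorStatus(primary, False, drifting)
```

Note that a difference check alone shows that the sensors disagree, not which one has drifted; deciding which sensor to trust still takes a comparison against a reference or process knowledge.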

Another important component of maintenance of a temperature measurement point is calibration frequency. When using Rosemount Temperature Transmitters, it is possible to calculate the frequency needed for calibration, as the stability specification plays a large role in how often they must be recalibrated.

For example, the Rosemount 3144P Temperature Transmitter has a five-year stability specification. Users should take into account a transmitter’s stability and accuracy specifications in conjunction with their own onsite requirements to calculate how often units should be inspected. This approach can often extend the calibration interval, freeing maintenance personnel to do other important tasks.

Maintaining accurate instrumentation is a vital element in the productivity of a process. Temperature sensors will degrade over time, and the inability to monitor this behavior can lead to false alarms, lower product quality, energy inefficiencies or process shutdown. Emerson’s Rosemount Temperature Measurement Solutions are designed to help maintain accurate instrumentation and keep your process up and running over the long term. Advanced diagnostic capabilities also help users do more with less by enhancing overall operations, augmenting the capabilities of their front-line teams, and empowering them to direct their efforts towards the highest value-added tasks.

As people around the world demand a more sustainable way of life, process industries need innovative solutions that are proven safe, reliable, and efficient. Emerson’s Rosemount Temperature Measurement Solutions are designed to tackle the toughest process design, installation, operation and maintenance challenges to ensure industry can meet its safety and sustainability goals. In addition, the MyEmerson portal serves as a go-to source for the necessary service, education and training to make this vision a reality.

Emerson’s Rosemount™ X-well™ Technology delivers accurate and reliable process temperature measurements without a thermowell. Simplify design, installation, and maintenance, and save up to 30% on lifetime costs per temperature measurement point.

Learn more at www.Emerson.com/Rosemount-X-well

In part two of this series, agrochemicals producer Syngenta shows how it implemented Seeq software to identify production losses, increasing operational efficiency and profitability.

by Dr. Stephen Pearson and John W. Cox

UNDERSTANDING asset utilization is key to maximizing productivity in any industrial process. In manufacturing, production can be held up by mechanical breakdowns, material shortages, external delays, operator errors and equipment degradation.

In part one of this case study (Control, Oct ‘23, p. 13, bit.ly/QuantifyingManufacturingTime), we showed how advanced analytics can classify lost productivity with a basic set of assumptions, without requiring operator- or equipment-provided reason codes. This enables fine-tuned targeting to deploy limited resources where improvements are most likely and impactful.

When investigating deviations from ideal profiles, multivariate correlations between sensors must be considered in addition to individual sensor profiles (Figure 1). Frequently in industry, we find the low-hanging fruit of univariate issues have already been solved, increasing the likelihood that chronic issues are multivariate in nature.

In part two, we demonstrate how Seeq was deployed at Syngenta to benchmark operations against ideal practices, and identify operational anomalies using univariate and multivariate approaches.

Benchmarking against the best (univariate)

The fastest 25% of batches can be used to create a reference profile for each relevant process sensor, for example, those that directly impact phase duration or product quality. Slower batches in the upper percentiles can be compared to this reference profile to look for possible delay causes. Rather than targeting the entire batch profile, attention is given to phases responsible for a high proportion of losses.

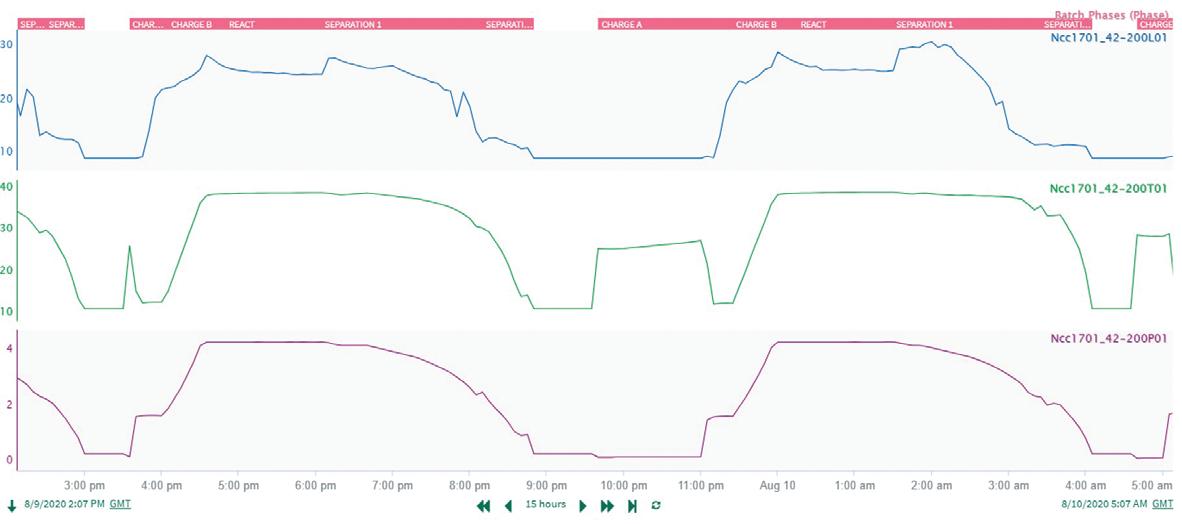

To illustrate this, focus can be applied to the combined separation phases, where characteristic temperature and pressure profiles are known to directly influence overall batch quality.

The “best separation phases,” a subset of all the “separation phases,” are identified quantitatively, based on a duration of less than or equal to the 25th percentile benchmark. These results can be easily examined by users (Figure 2).
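Outside of Seeq, the same selection can be sketched with the Python standard library. The batch numbers and durations in the usage note are made up for illustration, `best_phases` is a hypothetical helper, and the "inclusive" percentile method is an assumption, since the article does not say how the 25th percentile is interpolated.

```python
from statistics import quantiles


def best_phases(durations_by_batch):
    """Return (benchmark, batch ids at or below the 25th percentile duration).

    durations_by_batch: dict mapping batch number to phase duration,
    in any consistent time unit.
    """
    benchmark = quantiles(list(durations_by_batch.values()), n=4, method="inclusive")[0]
    best = [
        batch
        for batch, duration in durations_by_batch.items()
        if duration <= benchmark
    ]
    return benchmark, best
```

For example, with made-up durations in minutes of `{741: 50, 742: 62, 743: 48, 744: 70, 745: 55, 746: 58, 747: 90, 748: 52, 749: 66}`, the benchmark is 52.0 and batches 741, 743 and 748 qualify as the best phases.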

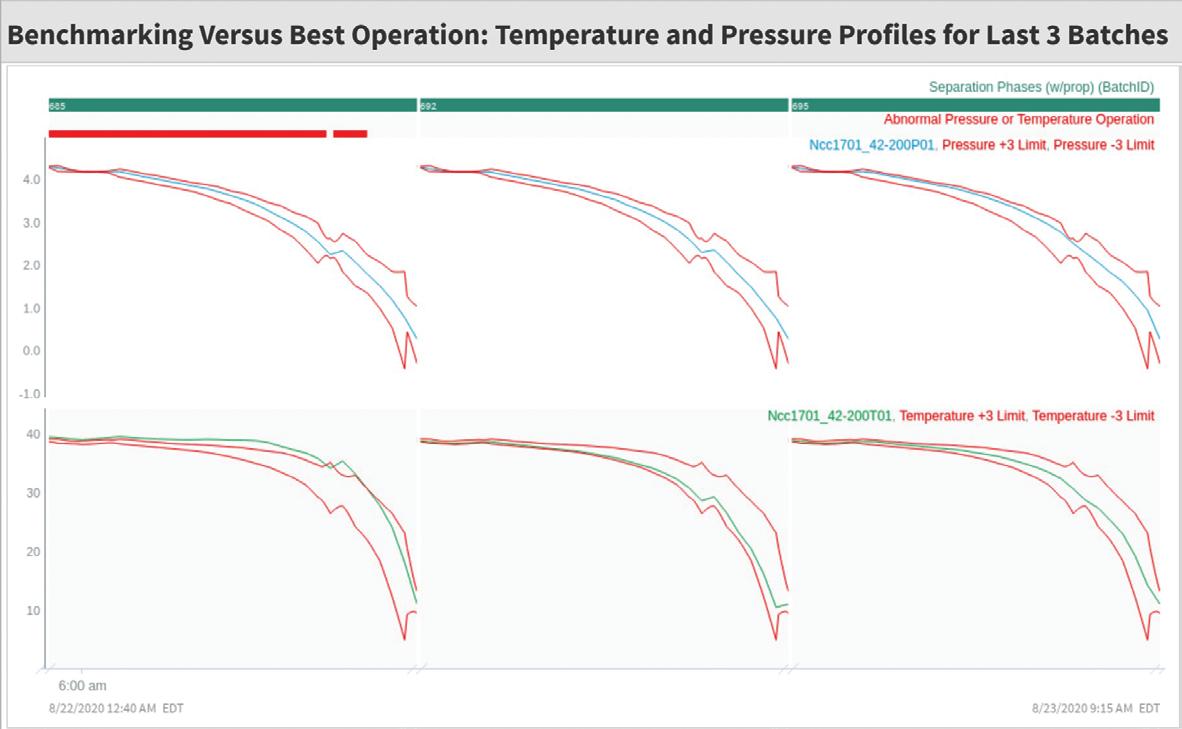

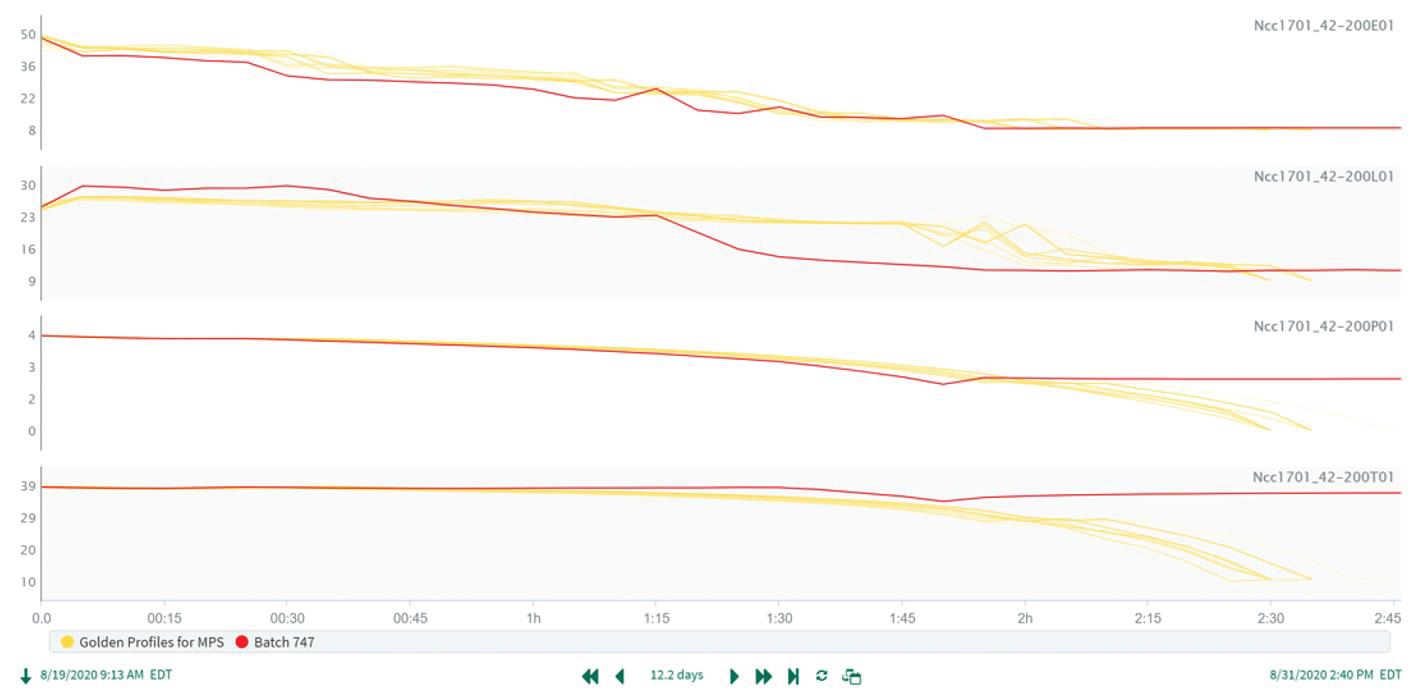

Next, “golden” or best profiles for temperature and pressure signals are calculated as the ±3 standard deviation limits, based only on data from the best separation phases (Figure 3).

With the best operation profiles developed for temperature and pressure, current operations can be benchmarked using auto-updating reports easily shared with operations teams. Here, reporting date range functionality displays the last X number of batches (where X is user-configurable), and abnormal deviations are highlighted whenever temperature or pressure falls outside of best operating limits (Figure 4).
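A minimal sketch of the limit calculation and the deviation check is shown below. It assumes each best-phase profile has already been resampled onto the same elapsed-time grid, and that the limits are centered on the mean at each time step; the article states only that they are ±3 standard deviation limits. `golden_limits` and `out_of_limits` are hypothetical names, and this is not Seeq's implementation.

```python
from statistics import mean, stdev


def golden_limits(best_profiles, n_sigma=3.0):
    """Per-time-step (lower, upper) limits from the best-phase profiles.

    best_profiles: list of equal-length lists, one per best phase, where
    index i in every list refers to the same elapsed time in the phase.
    At least two profiles are needed to compute a standard deviation.
    """
    limits = []
    for values in zip(*best_profiles):
        center, spread = mean(values), stdev(values)
        limits.append((center - n_sigma * spread, center + n_sigma * spread))
    return limits


def out_of_limits(profile, limits):
    """Indices of time steps where a batch falls outside the golden limits."""
    return [
        index
        for index, (value, (lower, upper)) in enumerate(zip(profile, limits))
        if not lower <= value <= upper
    ]
```

The indices returned by `out_of_limits` correspond to the highlighted deviations in a report, and their count multiplied by the sample period gives a simple "time outside best operation" metric.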

With this approach, any relevant signals can be monitored univariately to identify operating issues in a timely manner.

Connecting the quality result to corresponding process batch data can be challenging because this result is commonly lab-measured and reported at varying time intervals following completion of the process batch. Seeq provides a single-line, built-in formula function to join batch and quality-result time periods, based on matching batch number values (“Overall batches joined to quality results,” shown just above in the trend in Figure 5).

From this point, with quality results automatically transferred as a capsule property on the resulting condition, it’s easy to create the “Line 1 batch quality aligned to process batches” as a signal representing the quality result, which is shifted back in time by varying amounts to align with the batch operation.
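Conceptually, this join is a lookup on batch number that attaches each late-arriving lab result to the time period of the batch it belongs to. The sketch below shows the idea in plain Python. It is not the Seeq formula function, and the dictionary keys and the `join_quality` name are hypothetical.

```python
def join_quality(batches, lab_results):
    """Attach each lab result to the process batch with the same batch number.

    batches: list of dicts with "batch", "start" and "end" keys describing
        when the batch ran.
    lab_results: list of dicts with "batch" and "quality" keys, which may be
        reported at any time after the batch finished.
    Batches with no lab result yet are returned with quality None.
    """
    quality_by_batch = {
        result["batch"]: result["quality"] for result in lab_results
    }
    return [
        {**batch, "quality": quality_by_batch.get(batch["batch"])}
        for batch in batches
    ]
```

Because the quality value is carried on the batch's own start and end times, it lines up with the process data regardless of how long the lab took to report it.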

With quality results now listed, along with lost time and time outside best operation metrics in the monitoring dashboard (Table 1), users can rapidly identify which batches merit investigation, and whether the anomalies resulted in inadequate quality or waste.

This lets users distinguish lost productivity caused by abnormal operating profiles from losses due to other causes.

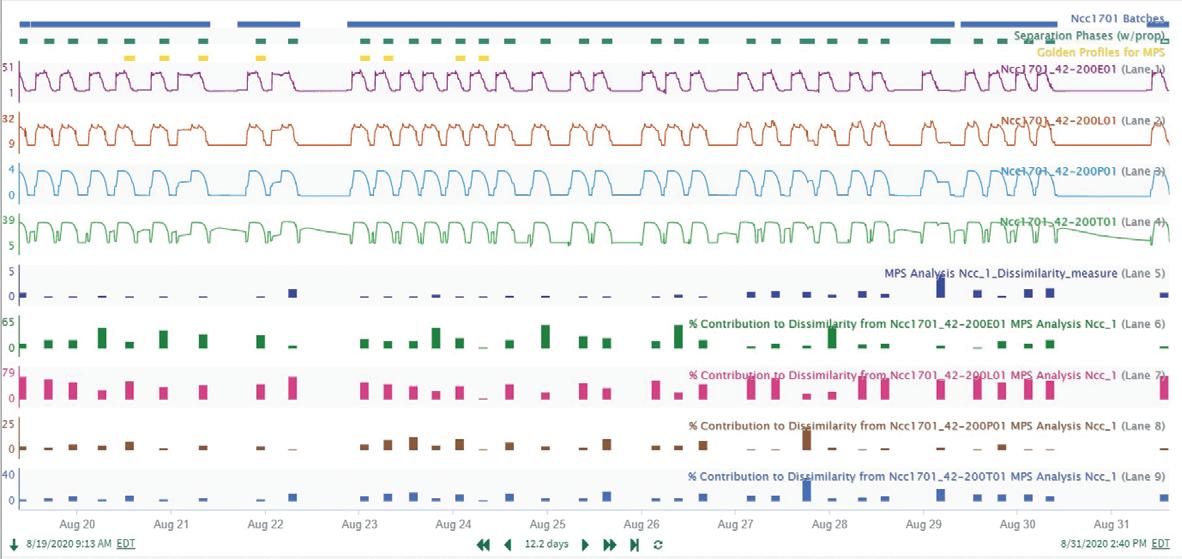

Benchmarking against the best (multivariate)

While anomalies in single process variables are often easy to detect visually, multivariate issues are typically more complex. Sometimes, profiles for various sensors may be within the individual reference boundaries, but combinations of profiles can create unexplained quality issues.

These multivariate anomalies can be difficult to detect, but they’re critical to understanding underlying causes of lost productivity and inferior quality. To identify these anomalies, Syngenta used the multivariate pattern search (MPS) function in Seeq to make multivariate comparisons of batches against a reference or “golden” set. After training on the best historical separation phases, MPS produces overall “dissimilarity” and individual signal dissimilarities for each batch (Figure 6).

The resulting MPS model can be used in near real-time to monitor dissimilarities for each of the recent separation phases. In Table 2, Batch 747 was the most dissimilar to the historical best separation phases during the time of analysis. In particular, the L01 signal for Batch 747 was the most dissimilar, and it contributed the most to anomalous operation.

These sorts of diagnostic insights enable users to investigate suboptimal operation in a targeted, streamlined manner, identifying many process improvement opportunities.

The next investigative step at Syngenta was generating a capsule view trend to compare Batch 747 data to the best operation profiles (Figure 7).

This showed signal L01 with the largest deviation from ideal. With this information, a user can apply process expertise to understand the contrast of the L01 profile with the best operational time periods, which can lead to improvements that eliminate this issue in the future.

Syngenta identified multiple areas for operational optimization by leveraging modern data analytics in Seeq, identifying operational anomalies using univariate and multivariate approaches, and benchmarking current operation against ideal profiles.

Multivariate approaches can reveal valuable insights that aren’t easily detected via visual inspections of signal trends. Also, subject matter experts can efficiently examine individual batch profiles in the same trending and visualization software environment to identify further actionable root causes from process sensors. By leveraging modern software, companies can implement process improvements to increase productivity and maximize uptime in their manufacturing environments.

Dr. Stephen Pearson is a principal data scientist at Syngenta. He helps manufacturing sites improve data management and analyses. John Cox is a principal analytics engineer at Seeq, where he works on advanced analytics use cases.

JOHN REZABEK Contributing Editor JRezabek@ashland.com



The path to temperature certainty requires proper testing at the operating point

I happened upon one of Scott Adams’ “Dilbert” comics, where his pointy-haired boss asks, “Are you sure the data you gave me is correct?” Dilbert replies, “I’ve been giving you incorrect data for years. This is the first time you’ve asked.” I can relate. What’s my answer if my boss asks the same question?

It’s generally accepted that a platinum resistance temperature detector (RTD) will achieve better accuracy and stability than a thermocouple, where two dissimilar metals are joined and produce a millivolt signal in proportion to the difference in temperature between the two junctions. Out of the box, a standard 100-ohm platinum RTD will have better than 1 degree accuracy below 100 °C, with increasing uncertainty as the measured temperature increases. Some vendors offer RTDs for specific ranges or maximum temperatures. If your process is running at 751 °F/400 °C, the path to temperature “certainty” most likely requires a test at the operating point.

Whether you have an in-house calibration capability or rely on your supplier, a temperature “bath” (an apparatus for applying a known temperature to the sensor of interest) is often used. The temperature of the bath is typically measured by a National Institute of Standards and Technology (NIST)-certified or a NIST-traceable temperature sensor. For a fee of a few hundred dollars to maybe around $20,000, NIST will characterize a given sensor against other certified sensors or against fixed points, such as the “triple points” and melting points of various substances. The triple point of water is 0.01 °C (at specific pressure conditions). NIST uses the melting points of various other substances from near absolute zero to more than 1,000 °C to “standardize the standards.”

Once your sensor is compared to the NIST-traceable sensor in the temperature bath, you might find that it deviates at some points of interest. While RTDs are inherently more accurate and linear than thermocouples, they’re not perfectly linear, and each sensor deviates from the established tables to some degree.

British physicist Hugh Longbourne Callendar labored to elevate the RTD as an accurate temperature sensor, and his equation relating resistance to temperature was later refined by NIST chemist M.S. Van Dusen. For a specific sensor, your temperature transmitter might have the capability to include the Callendar-Van Dusen coefficients, which provide a characterization of temperature to that sensor’s resistance, improving accuracy versus standard tables and linearization.

If you’ve pursued certainty, you might feel comfortable, but you’re not finished. Since you’ve established a relationship between resistance and temperatures, you must now measure that resistance at the tip of a probe that might be many feet away (some elements are more than 50 ft. long). A change of 1 °F changes a standard 100-ohm platinum RTD’s resistance less than 0.2 ohms at 750 °F. RTDs are commonly supplied in three- or four-wire varieties, permitting the transducer to subtract the resistance of the conductors and terminations between the sensor and the transmitter. Both lead wire and RTD must be measured with comparable accuracy.
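The sensitivity figure quoted above can be checked from the slope of the Callendar-Van Dusen equation. The sketch below assumes the nominal IEC 60751 constants for a standard Pt100, so a real sensor will differ slightly. It also shows why lead-wire resistance matters by converting a resistance error into an equivalent temperature error. The function names are hypothetical.

```python
# Nominal IEC 60751 constants for a standard Pt100 (assumed).
R0, A, B = 100.0, 3.9083e-3, -5.775e-7


def f_to_c(t_f):
    """Convert degrees Fahrenheit to degrees Celsius."""
    return (t_f - 32.0) * 5.0 / 9.0


def ohms_per_deg_f(t_f):
    """Slope of the resistance curve at t_f, in ohms per deg F.

    Above 0 deg C, R(T) = R0 * (1 + A*T + B*T**2), so dR/dT = R0 * (A + 2*B*T)
    in ohms per deg C. Dividing by 1.8 converts the slope to ohms per deg F.
    """
    t_c = f_to_c(t_f)
    return R0 * (A + 2.0 * B * t_c) / 1.8


def temperature_error_f(resistance_error_ohm, t_f):
    """Temperature error in deg F caused by an uncompensated resistance error."""
    return resistance_error_ohm / ohms_per_deg_f(t_f)
```

With these nominal constants, `ohms_per_deg_f(750.0)` comes out at roughly 0.19 ohms per °F, so a single ohm of uncompensated lead or termination resistance would shift the reading by about 5 °F.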

Interest in improved accuracy motivates some to locate a transmitter as close as possible to the sensor. While this is likely better than a kilometer of lead wire, the influence of ambient temperature on the transmitter might be worth a look. Unless the transmitter is digitally integrated using fieldbus, you’ll have uncertainty introduced through D/A (generating a 4-20 mA signal) and the corresponding A/D at the DCS or PLC I/O card.

When operators or my boss ask me how sure I am that their reading is correct, I can reply it’s as good as the standard to which it was calibrated on the day it was calibrated. Who knows what other vagaries of uncertainty might have crept in since then?

Context is critical for process information, so mobile displays must be able to handle the job

LOOK around. How many people do you see staring at their phones or tablet PCs? One reason is that mobile devices provide people with everything, all in one place.

Despite regulations in some jurisdictions, where work stays within working hours, the expectation for individuals to at least be able to connect from anywhere at any time now extends to information from the real-time control realm. The thin edge of this wedge is remote access to diagnostic information on complex equipment with high impacts in the event of failure, such as compressors. However, once the infrastructure for making this happen is in place, the barrier to entry is significantly lower, and we can do the same thing for motors, process analyzers, etc.

Of course, this information needs to support different form factors to satisfy our curiosity about how things are going. The same platforms allow us to reach out from wherever we are, provided we don’t require a full-size, 1,920 x 1,080 pixel display to get the level of detail required to understand the situation— or satisfy our curiosity.

Unfortunately, a cell phone isn’t the same size as an HMI. It can be used to display specific information remotely, but most HMIs are now web-based, and it’s unlikely mobile displays can present information in context, at least not all at once. Understanding the context of information is critical, particularly for process operations. For example, even “accidentally” affecting a variable while viewing diagnostic information can have unintended and potentially catastrophic consequences.

One possible solution is to develop HMIs based on human factors. The design principles may have new schematic types with better KPIs to provide what users need. Similar to the “map app,” they can zoom in on the detail needed to make correct decisions.

Having information with context is consistent with another expectation of devices that are always connected—the ability to have or find context on the same platform either through a search engine or intelligent links between different data points. To achieve this capability, an HMI is no longer a series of static displays of process information, but a view to equipment health status, manuals, performance metrics and statistics. Making something available doesn’t mean the user should have access to it, especially as awareness of OT systems as the “easy” backdoor increases in the hacker community.

Fortunately, as we learned during the COVID-19 pandemic, adding more remotely connected “bring your own devices” is simply another permutation of the work-from-home concept that was already in progress in part due to demographics prior to the pandemic. The ability to manage increased surface area by implementing practices such as zero-trust is better understood now with the tools in place to implement and support it.

Just as with any technology transition, the adoption curve also needs to be considered. One factor in adoption is the expectations of the audience. Newer workers, who have always had context-sensitive interactive displays available, are more likely to accept and expect similar (interactive) capabilities from their HMI devices. Meanwhile, workers at the other end of the work spectrum, though familiar with new display technologies, are often happier leaving well enough alone and avoiding the risks associated with change.

The dichotomy of generational expectations, potentially including future immersive environments, along with added guidance from artificial intelligence to capture and incorporate not only procedures but also the knowledge of experienced workers, will certainly impact future HMIs. One thing is certain: being tethered to a single location by a cable to access process information is no longer necessary, and for many technologies, it’s not even feasible.

IAN VERHAPPEN Solutions Architect Willowglen Systems

Ian.Verhappen@willowglensystems.com



IF the diffuse and multivariate process industries have a bellwether for what’s going on and the undercurrents they share, the ARC Industry Leadership Forum is it. That’s why close to 800 visitors attended the 28th annual event on Feb. 4-8 in Orlando, Fla., where they listened to and interacted with close to 200 speakers, who focused on cybersecurity, digitalization, artificial intelligence (AI) and sustainability.

“We’ve delivered energy and products for 140 years. Even as alternative sources of energy ramp up, oil and gas will continue to play a role in meeting energy needs for decades to come,” said Wade Maxwell, engineering VP at ExxonMobil Technology & Engineering Co. (corporate.exxonmobil.com), during his keynote address on Feb. 6 at the ARC Industry Forum in Orlando, Fla. “However, we also need innovative technologies to handle new and diverse energy sources, so we can meet society’s energy and product needs and reduce emissions.”

Maxwell reported these efforts include:

• Carbon capture and sequestration (CCS)—ExxonMobil has captured about 150 million metric tons of CO2 over the past 30 years. It’s working on accelerating CCS deployment, and establishing a CCS business together with other companies with the potential to capture and store more than 100 million metric tons annually (MTA) of CO2 on the U.S. Gulf Coast.

• Hydrogen—ExxonMobil is one of the world’s largest hydrogen players, producing and consuming more than 1 million tons each year in its refining and chemical operations. It’s announced plans for the world’s largest low-carbon hydrogen facility, with a planned startup in Baytown, which is expected to produce 1 billion cubic feet of low-carbon hydrogen per day, and capture 98% of the CO2 produced by the facility.

• Sustainability—ExxonMobil is investing in projects to develop lower-emissions fuels, such as renewably produced diesel at a new plant in Canada. This site has a capacity of 20,000 barrels per day. ExxonMobil also plans to increase plastics recycling to 1 billion pounds per year by 2026.

• Efficient operations—Consists of better methane detection, improved equipment inspections by drones and other robots, and more efficient operations using advanced process control and reduced flaring.

• Artificial intelligence (AI)—For accelerating technical development, more effective knowledge management, subsurface modeling, and concept analytics and development optimization, which uses mathematical models to identify net-zero pathways, and to optimize production and manufacturing operations.

• Standards and interoperability—Relies on open standards such as Open Process Automation (OPA) to bring value, and ExxonMobil encourages the process industries to adopt open, secure, standards-based automation systems.

Mike Carroll, innovation VP at Georgia-Pacific (www.gp.com), reported these and similar efforts will require greater “factfulness,” which means relying on strong supporting facts to avoid exaggerations and distortions that cause problems. Fortunately, even though people often aren’t good at getting their facts straight, Carroll adds that computing and AI can provide an assist in the future with more fact-based analytics and better decision-making.

“The three elements of AI are the ability to learn, predict, and reason/decide,” explained Carroll in his keynote address. “We can use our knowledge and AI to build better hypotheses and decisions based on the world as it is, and enable AI to work on our behalf to help us navigate.”

In the following panel discussion, Rashesh Mody, business strategy and realization EVP at Aveva (www.aveva.com), explained that AI can gather knowledge and learnings from three to six months of operations, and use them to serve as an improved advisory system. “AI can augment each user’s intellect and assist it,” said Mody. “You can give it complex questions, and it should be able to answer them. For example, asking ‘Why was production down eight hours last week?’ likely needs information from many sources, but AI can help trace it to vibration data or other probable causes.”

Beyond its keynotes and technical sessions, ARC forum also featured many informative press conferences and exhibits. Those presentations included:

• FDT Group (www.fdtgroup.org) announced Feb. 20 the certification of its first device-specific, device-type manager (DTM) flow-control software driver based on the latest FDT 3.0 standard supporting the HART protocol. The newly certified device is Logix 3820 Series DTM from Flowserve Corp. (www.flowserve.com), which uses its positioners that support HART 6/7 to tackle flow control problems.

• To expand production capacity in the Americas, improve lead times, and enhance service, Omron (www.omron.com) reported Feb. 8 that it’s relocating its current facility in Renton, Wa., to Greer, S.C. The company will manufacture motion controllers and drives, machine vision, barcode readers and verification systems at its new facility.

• Yokogawa Electric Corp. (www.yokogawa.com) announced Feb. 21 that it’s partnering with Tsubame BHB Co., Ltd. (tsubame-bhb.co.jp/en) on developing ammonia production solutions. Tsubame BHB is a university-based startup that invented an ammonia synthesis method using electride catalysts.

• Motion Industries Inc. (www.Motion.com) agreed Feb. 20 to acquire Perfetto Manufacturing (www.perfetto.ca) and SER Hydraulics (serhydraulics.ca). Both companies provide engineered solutions, service and equipment for hydraulic/pneumatic cylinders, complex power units and other assets.

• Newark (www.newark.com) announced Feb. 22 that it’s partnering with Auer Signal (www.auersignal.com) to provide customers with a diverse range of audible and visual signaling devices, including steady beacons, flashing beacons, strobe lights, horns, buzzers and electronic sirens.

• Safety automation provider HIMA Group (www.hima.com) reported Feb. 5 that it’s acquired the Norway-based Origo Solutions (www.origo-solutions.com), which provides safety, automation and instrumented systems for monitoring, control and protection of offshore and onshore facilities, as well as complete SCADA systems for the wind industry. For more than 20 years, Origo Solutions has also been HIMA's exclusive representative in Norway.

• ABB (www.abb.com) demonstrated its ABB Ability Connected Worker apps to enhance health and safety, increase efficiency with digitalization and standardization, improve collaboration in the field, and ensure complete, digital audit trails. ABB also demonstrated its ABB Ability PlantInsight—Operator Assist software that can provide early alert, daily support and incident mitigations.

• ARC Advisory Group (www.arcweb.com) launched its subscription-based Sustainability Data as a Service (SDaaS) that provides a use case-centric view of key opportunities related to energy transition and sustainability in the global industrial marketplace. SDaaS combines the qualitative market perspective of ARC domain experts with quantitative market data capabilities to provide unmatched insight into key trends and growth areas within process and discrete industries to uncover specific sustainability-related business opportunities.

• Honeywell (www.honeywell.com) is driving new automation capabilities into its Experion Process Knowledge System (PKS) with its Release R530, and expanding support of Experion PKS Highly Integrated Virtual Environment (HIVE). Experion PKS R530 introduces Experion Remote Gateway, which enables remote operations by providing a browser-independent method to simplify monitoring and operations. Also, its updated Ethernet Interface Module lets Experion PKS HIVE integrate smart protocols while optimizing the C300 controller’s processing load.

• Opswat (www.opswat.com) reported on advances in its MetaDefender Kiosk for securing critical environments. These include its more-portable Kiosk Mini form factor, VSA-mountable Kiosk Stand, and integration with Opswat’s MetaDefender Sandbox scanning solution and Media Firewall technologies to enable defense-in-depth for peripheral media.

For more coverage and information about the ARC forum, visit www.arcweb.com/events/arc-industry-leadership-forum-orlando

In the first major update since it was created in 2014, the National Institute of Standards and Technology (NIST) reported Feb. 26 that it’s updated the widely used Cybersecurity Framework (CSF) document. The 2.0 edition (www.nist.gov/cyberframework) of this landmark guide for reducing cybersecurity risk is designed for all audiences, industry sectors and organization types, from the smallest schools and nonprofits to the largest agencies and corporations—regardless of their degree of cybersecurity sophistication.

In response to the numerous comments received on the draft version, NIST expanded CSF 2.0’s core guidance, and developed related resources to help users get the most out of the framework. These resources are designed to provide different audiences with tailored pathways into the CSF, and make the framework easier to put into action.

“The CSF has been a vital tool for many organizations, helping them anticipate and deal with cybersecurity threats,” says Laurie Locascio, undersecretary of commerce for standards and technology and NIST’s director. “CSF 2.0 is a suite of resources that can be customized and used individually or in combination over time as an organization’s cybersecurity needs change and its capabilities evolve.”

Control’s monthly resources guide

The website maintained by the Abnormal Situation Management (ASM) Consortium includes overviews and its guidelines on alarm management, HMI design, procedural practices, change management in HMI development and operations practices, as well as sections on control room design and others. The overviews and guidelines are at process.honeywell.com/us/en/site/asm-consortium

ASM CONSORTIUM www.honeywell.com

This four-part video series starts with the 10-minute, “What is high-performance HMI?,” and continues with videos on design basics, developing an HMI philosophy, and detailed design principles. The series starts at www.youtube.com/watch?v=5GEvFF8pGlc&t=79s. There’s also an online article version at www.realpars.com/blog/hmi-design

REALPARS www.realpars.com

This feature article, “How to design effective HMIs” by Bridget Fitzpatrick, covers the ANSI/ISA-101.01 standard, “Human machine interfaces (HMI) for process automation systems,” and shows how to use questionnaires and interviews with operators to learn what HMI capabilities they require before building them. It also shows how to use storyboarding workshops and advanced methods to further refine HMIs. It’s at www.controlglobal.com/visualize/hmi/article/11306756/how-to-design-effective-hmis

CONTROL www.controlglobal.com

This online article, “Design like a pro, part 2: developing dynamic HMI/SCADA projects with speed and precision,” covers the processes for setup, layout, templates, development, startup and others. It’s at inductiveautomation.com/resources/article/design-like-a-pro-part-2. There’s also a 49-minute video/webinar version at www.youtube.com/watch?v=V8LGl7JSNLE

INDUCTIVE AUTOMATION www.inductiveautomation.com

This 14-minute video, “Better SCADA design tips: high-performance HMI,” covers analog values, color palette, animation, trends and radar charts. It’s at www.youtube.com/watch?v=UK6dRGmz8MQ

SCADA TORCH www.scadadatorch.com

This online article has two versions, “Design tips to create a more effective HMI” by Chip McDaniel and “HMI best practices for an effective HMI every time.” The first is longer and covers storyboarding, operator interviews, judicious use of color, situational awareness, limiting required access clicks, providing feedback, avoiding pop-ups, alarm and event logging, password protection, and creating a style guide. It’s at blog.isa.org/design-tips-effective-industrial-machine-process-automation-hmi. The second version is shorter, but has links to other HMI articles. It’s at library.automationdirect.com/best-practices-effective-hmi-every-time

ISA AND AUTOMATIONDIRECT www.isa.org ; www.automationdirect.com

This online article, “Leading the way in HMI design 4.0” by Manabu Kawata, covers the history of HMIs, the current state of screen design, layout options and screen structures. It also links to a six-minute video about empowering workforces with HMI-centric concepts. It’s at www.proface.com/en/solution/article/design_4

PRO-FACE BY SCHNEIDER ELECTRIC www.proface.com

This online article, “HMI design—best practices for effective HMI screens” by Vladimir Romanov, shows how to select screen sizes and colors, pushbuttons vs. touchscreens, user inputs, local vs. distributed data processing, navigation issues and design tasks. It’s at www.solisplc.com/tutorials/hmi-design

SOLIS PLC www.solisplc.com

This online article, “Integrator experts provide visualization options for clients” by Joshua Choe, SCADA engineering manager at system integrator Tesco Controls, shows how industrial HMI/SCADA design practices are transitioning from traditional graphics to situational awareness principles, which systems integrators can efficiently tailor and standardize to best meet the individual needs of their clients. It was published in Processing magazine, but it’s accessible at tescocontrols.com/2022/12/16/hmi-scada-design-benefits-from-si-expertise

TESCO CONTROLS www.tescocontrols.com

TOMAS HASSELGREN Manager, Global Business Development Emerson

LIQUEFIED petroleum gas (LPG) is a hydrocarbon gas consisting primarily of propane, butane, or a mixture of both. Because it produces lower greenhouse gas emissions than traditional solid fuels, there's increasing demand for LPG across the world. In fact, some governments have implemented policies and initiatives to promote the use of LPG to reduce air pollution and improve public health. This increased demand puts the onus on storage terminals to use their capacity as efficiently as possible, by optimizing inventory management and safety. This can be achieved by integrating non-contacting radar level gauges into tank gauging systems.

Control’s editor-in-chief, Len Vermillion, spoke with Tomas Hasselgren, manager of global business development at Emerson, about the challenges of LPG tank gauging, and the benefits that can be achieved by using non-contacting radar level gauges rather than traditional level measurement technology.

Q: What are the main challenges for tank gauging when it comes to LPG storage tanks?

A: LPG is usually in a liquid phase when it's stored, so the tanks are pressurized or in some cases cryogenic to support the liquid phase of the LPG product.

Typically, there are two types of tanks: a bullet-shaped tank or a spherical tank. The spherical tank can normally take a larger volume than the bullet tank. It's also more complicated to build those spherical tanks. Some refrigerated tanks can take a lot more volume but are more expensive to build and more difficult to handle.

In pressurized vessels and during certain conditions such as rapid depressurization or temperature changes, the level surface is affected by boiling or foam on the surface. This can also happen when filling the tank, and that makes level measurement difficult in these applications.

Also, the density can vary. When filling or emptying the tank, the technologies used for level measurement depend on the actual density of the product and are affected by it.

Q: There are the traditional mechanical methods and non-contacting radar measurement. Can you explain the differences between the two?

A: One is a fully electronic device without any moving parts, and the other is a mechanical device.

Mechanical devices have a float or displacer that goes down, touches the product surface, or sometimes goes into the surface. Non-contacting radar doesn't have any moving parts, meaning there's no need for maintenance, which the mechanical device requires. Also, mean time between failure is much longer for non-contacting radar gauges than mechanical devices.

Q: What are the specific benefits of radar for LPG tank gauging?

A: It's a benefit for all tanks independent of what product is stored in the tanks. The difference with LPG is that you can't access the tank; they're pressurized. You can't go into the tank, you can't maintain it, and you can't change the wire or the displacer easily on a mechanical device. Whatever you install on the tank, you must be sure it will work for a long time.

The maintenance of these tanks is normally done about every 10 years. It's important to have a device that doesn't require constant maintenance or repair. You don't have any recalibration needed and there's no drift. The density varies in these tanks, and radar isn't affected by density changes.

Q: We hear a lot about the 2-in-1 technology present in Emerson's radar solutions. Can you explain what that means and how it works?

A: It means there are two radars in the same metal housing, and they're galvanically separated with independent communication and independent power supply. The benefit for LPG tanks is you only need one tank opening for both level and the overfill prevention system (OPS). When it comes to LPG tanks, users don't want any more tank openings than needed.

The challenge users face when upgrading an existing LPG tank from a mechanical device to radar technology is the ease with which the new technology can be installed. If an existing LPG tank has only one opening available, the tank would need to be modified to enable two separate radar level gauges to be installed to support the tank gauging system and the OPS. Making that modification may be cost-prohibitive, as it would involve the tank being taken out of service, thereby impacting throughput and profitability.

However, this challenge is solved by the Rosemount™ 5900S 2-in-1 Radar Level Gauge from Emerson, which consists of two separate and independent electrical units and a common antenna. This enables a single device to be used for both tank gauging and separate OPS purposes, requiring only one tank opening and minimal or no modifications.

Non-contacting radar technology provides accurate and efficient level measurement of pressurized liquefied petroleum gas (LPG) storage tanks. Source: Emerson

Q: Let’s talk about some of that available technology, especially for tank gauging, in general, and specifically for LPG.

A: Everything we do, and have been doing for the last 50 years, is based on radar measurement, and then we build a system around the level because there are so many other things needed at the site.

In general, the basic requirements are to provide accurate and reliable level and temperature measurement, perform calculations for volume, mass, density, and then you need to view it somehow on the display. We provide our own software for viewing or sending data to the SCADA, DCS, PLC or similar.

As a result, non-contacting radar technology offers an efficient and maintenance-free level measurement solution that tackles the growing need for accurate inventory management and overfill prevention for pressurized gas storage.

To hear more of this discussion, including added benefits such as automatic vapor compensation and identical separation, and future developments for non-contacting radar measurement, check out the Control Amplified podcast at ControlGlobal.com/Podcasts

by Jim Montague

WHAT’S the goal of an interoperable process control standard? Plug-and-play products—and they’re almost here.

“We advocate open and standardized automation, and Open Process Automation (OPA) is a great example. We’re executing our OPA lighthouse project in Baton Rouge this year, and advancing open-asset digital twins as the foundation for achieving speed and scalability from wherever,” said Wade Maxwell, engineering VP at ExxonMobil Technology & Engineering Co. (corporate.exxonmobil.com), during his keynote address on Feb. 6 at the ARC Industry Forum in Orlando, Fla. “OPA is demonstrating its value in improved turnarounds, project planning and visual inspections. Openness and interoperability enable faster technology development and deployment at scale.”