Open industrial networks are taking root in the industry


MQTT DEFINED



Endeavor Business Media, LLC 30

COLUMNS

Luck be a robot
Mike Bacidore, editor in chief

Don’t get stuck; master e-stop recovery
Jeremy Pollard, CET

technology trends
More data, less cabinet
Rick Rice, contributing editor

component considerations
What is MQTT?
Tobey Strauch, contributing editor

automation basics
Harness vision systems for better quality
Charles Palmer, contributing editor

CEO Chris Ferrell

COO Patrick Rains

CRO Paul Andrews

CDO Jacquie Niemiec

CALO Tracy Kane

CMO Amanda Landsaw

EVP, Design & Engineering Group Tracy Smith

VP/Market Leader - Engineering Design & Automation Group Keith Larson

editorial team

editor in chief

Mike Bacidore mbacidore@endeavorb2b.com

managing editor

Anna Townshend atownshend@endeavorb2b.com

digital editor

Madison Ratcliff mratcliff@endeavorb2b.com

contributing editor

Rick Rice rcrice.us@gmail.com

contributing editor

Joey Stubbs

contributing editor

Tobey Strauch tobeylstrauch@gmail.com

contributing editor

Charles Palmer charles101143@gmail.com

columnist

Jeremy Pollard jpollard@tsuonline.com

design/production

production manager

Rita Fitzgerald rfitzgerald@endeavorb2b.com

ad services manager

Jennifer George jgeorge@endeavorb2b.com

art director

Derek Chamberlain

subscriptions

Local: 847-559-7598 • Toll free: 877-382-9187 email: ControlDesign@omeda.com

sales team

Account Manager

Greg Zamin gzamin@endeavorb2b.com 704/256-5433 Fax: 704/256-5434

Account Manager

Jeff Mylin jmylin@endeavorb2b.com 847/516-5879 Fax: 630/625-1124

Account Manager

Kurt Belisle kbelisle@endeavorb2b.com 815/549-1034

Mike Bacidore editor in chief mbacidore@endeavorb2b.com

Luck be a robot

JOE GEMMA WAS THE 2024 winner of the Joseph F. Engelberger Robotics Award for Leadership, but that was just a touchstone along a career that continues to move robotics and automation forward.

He serves on the board of directors for the Association for Advancing Automation (A3), which hosts the annual Automate Show. He’s also past president and board member of the International Federation of Robotics. He’s been the CEO of KUKA Robotics Americas and CEO of Staubli North America, and in May he was named CEO of Wauseon Machine, where he has worked since 2022. The company specializes in automation, end-forming equipment and precision machining.

Since its inception in 1977, almost 50 years ago, the Engelberger Award has been received by fewer than 150 individuals. Typical of Gemma, he accepted his award on behalf of all the people he’s worked with along the way.

His interest in robotics and automation happened almost by accident, admits Gemma. “I worked for a company right after school, designing piping systems for power plants,” he recalls. “I worked in what was then the nuclear division of that company. There was a family-owned company, three different divisions, and they were expanding from material-handling solutions to more automation technology, and they were looking for some people to add on to the team, more like a project-management type role, project engineering, dealing with the public or customers but also have an understanding of technology.”


Gemma knew somebody who worked in that business and thought he could be part of the growth. He believed he had the type of talent they were looking for. “I met with the owners,” he explains. “I didn’t really know a lot about what they wanted to do, but he shared that with me and convinced me that this could have a bright future. It was certainly the early stages of automation. I saw the light, so to speak, and I was really lucky to get into the space at that point because it was a burgeoning industry.”

At first, the job focused on the engineering side, but eventually it developed into more customer interfacing, project management, sales and other roles. “I was in the right place at the right time,” he says. “It’s worked out pretty well.”

While he will tell you that being in the right place at the right time started him on the path, it’s obvious that luck has had very little to do with where Gemma currently walks on his journey.

The Engelberger Award is an accolade received by few, but Gemma is a worthy recipient. “It certainly is an incredible honor,” he says. “It’s something that’s coveted in terms of recognition globally. I’m honored and certainly humbled, but it’s really an award of recognition of all the people that I’ve had the opportunity to work with together in this industry.”

It’s not just manufacturing, explains Gemma. “It’s automation; it’s the passion that we have and what we’re trying to do to help mankind. Robotics has helped us in so many ways, and pretty much everything you touch on a daily basis has some connection somewhere down to automation, so it’s that recognition of that passion, that effort, what we’ve done through the years.”

It’s doubtful Gemma envisioned recent robotics advancements such as autonomous mobile robots (AMRs), 3D vision and advanced sensors. But the possibility of capable, adaptable AI-driven humanoid robots sparks excitement for Gemma. “It’s fascinating: Isaac and an open humanoid robot foundation model,” he notes. “As a person growing up with the Jetsons, it’s an exciting development. It signals a significant step toward creating general-purpose humanoids. It can learn and perform a wide range of tasks.”

Jeremy Pollard jpollard@tsuonline.com

Don’t get stuck; master e-stop recovery

IN INDUSTRIAL MANUFACTURING and machinery, an emergency can take various forms, but the common thread is that either the control system, whether a safety programmable logic controller (PLC) or a safety relay, or a human must intervene to prevent or stop a process action.

What constitutes an emergency? In this case, there is no reality, only perception. What I think requires immediate action vs. what the operator thinks may be different. Let’s say there are three moments of an emergency: preemptive, in the moment and post event. Regardless of the emergency, the required action must not fail.

A preemptive situation could be a person walking into a robot cell. An in-the-moment event could be a runaway automated guided vehicle (AGV) about to hit an impediment. A post event would be after the AGV hit the impediment, when the system needs to stop what it’s doing.

The beginning of the emergency-stop (e-stop) system started with a master control relay (MCR). While fraught with issues, the MCR removed power from the control system’s outputs, stopping all devices immediately. The main issues were relay failure and troubleshooting the resulting problem to determine which device caused the stoppage.

The stoppage could be caused by wiring or by an event triggered by an external action. Keeping downtime minimal made for some creative methods to determine what caused it.

E-stop actions are not stop actions. An e-stop action cannot fail in its responsibility of terminating all movement on the leading edge of the event. Because of the potential failure of the MCR implementation, innovation created safety-rated devices and control systems.

A safety PLC or relay provides the ability to interface with various devices that can be used for those situations that require emergency actions.

Safety devices connected to a safety system can use test pulses to continuously monitor each device, so that, when the device is actuated, it will serve its purpose.

Modern e-stop buttons, whether they are interfaced with a safety subsystem or not, stay activated once activated. They typically have redundant contact blocks, which are supposed to open under any circumstances. Once the button is pressed, the user must rotate the button to release it and allow the system to recover.

This brings us to a software component of the e-stop question. Once power is removed from the machine or process and the operation is stopped, recovering from this event becomes paramount.

In most cases, when the safety system or devices physically remove power, the control system receives that status via one or more inputs, and then the system logically puts the machine or system into a mode that allows for manual or automatic recovery once the e-stop event has been dealt with. Because power is removed, the control system cannot drive the machine into any particular state; it is stopped where it sits.

Once power comes back and the e-stop condition has cleared, the process or machine needs to get back into a state to continue operation.

An application that I was involved in was programmed by an outside contractor. I was called in to evaluate the system since there was a major problem when recovering from an e-stop event. The machine was an underground drilling machine with a large hydraulic motor. The contractor used sequential function chart (SFC) to program the sequence.

The reason I was called in was that, upon recovery from the e-stop event, the hydraulic motor came on without operator intervention. A hydraulic hose flexed and struck the operator, who was standing where he was not supposed to be when the pump was on. The contractor used a retentive, or latched, output to control the motor and didn’t use proper recovery methods to reset it.

Regardless of the application, the control system must start up in a mode that allows for recovery and should not present any unexpected situation to the operator so that the operator is in charge of what happens.
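That recovery rule can be sketched as scan logic for the standard (non-safety) controller. This is a minimal Python illustration under stated assumptions, not the drilling machine’s actual program: the class `PumpLogic`, its modes and the inputs `estop_ok`, `reset_pb` and `start_pb` are hypothetical names. Removing power remains the job of the safety relay or safety PLC; the sketch only shows the run command being cleared on every e-stop, instead of being held in a retentive latch, and the pump needing both a reset and a fresh start press before it can run again.

```python
from enum import Enum, auto


class Mode(Enum):
    NEEDS_RESET = auto()  # power-up or e-stop released: operator must reset
    ESTOPPED = auto()     # safety system has removed power
    IDLE = auto()         # reset done, waiting for a deliberate start
    RUNNING = auto()


class PumpLogic:
    """Hypothetical standard-PLC logic for a hydraulic pump's run command."""

    def __init__(self) -> None:
        self.mode = Mode.NEEDS_RESET  # never come up running
        self.motor_cmd = False
        self._last_reset = False
        self._last_start = False

    def scan(self, estop_ok: bool, reset_pb: bool, start_pb: bool) -> bool:
        # Act on button presses (rising edges), not on buttons held down.
        reset_edge = reset_pb and not self._last_reset
        start_edge = start_pb and not self._last_start
        self._last_reset, self._last_start = reset_pb, start_pb

        if not estop_ok:
            # Clear the run command every time; a retentive latch here is
            # what lets a motor restart by itself when power returns.
            self.mode = Mode.ESTOPPED
            self.motor_cmd = False
        elif self.mode is Mode.ESTOPPED:
            self.mode = Mode.NEEDS_RESET
        elif self.mode is Mode.NEEDS_RESET and reset_edge:
            self.mode = Mode.IDLE
        elif self.mode is Mode.IDLE and start_edge:
            self.mode = Mode.RUNNING
            self.motor_cmd = True
        return self.motor_cmd
```

Because the pushbuttons are edge-detected, a start button that is held down, or stuck, while the e-stop is released cannot restart the pump on its own; the operator has to reset and then press start deliberately.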

JEREMY POLLARD, CET, has been writing about technology and software issues for many years. Pollard has been involved in control system programming and training for more than 25 years.

Rick Rice contributing editor rcrice.us@gmail.com

More data, less cabinet

LOOKING BACK OVER NEARLY 40 YEARS in the business, the approach to automation has changed dramatically, and the impact on how we approach a new design barely resembles its former self.

In the early 1980s, when I was just cutting my teeth in this career, electronics for automation were big and clunky. Control panels had to be large to contain all the components. Devices ran hot, and that meant even bigger enclosures to help dissipate the heat. Power and control components were usually in the same enclosure, and the general philosophy was to centralize the power/control enclosure and run all of the wires and cables out to the various parts of the machine or process.

Today, we approach packaging machinery design from a very different direction. Programmable logic controllers (PLCs) and programmable automation controllers (PACs) are module-based, meaning that you connect only as many modules to the base controller as your project needs, without being confined to predefined chassis sizes that eat up extra space in a control cabinet.


The march of time has made the job of control design much easier, and one place this has really had an impact is in packaging machinery. I’ve had the good fortune to spend most of my career in and around packaging machinery. Various positions as an OEM/integrator and the past 14 years as an end user have given me a great ride through the evolution of automation.

When I first started out, I worked for a newly formed company that was just planting roots in the industry. We had the good fortune of leveraging some established relationships in the food industry to help with that growth, and it allowed us to explore new technologies and quickly become known for our innovation. I feel like I have been riding the crest of that wave for most of my career.

Back in those days, the Allen-Bradley PLC-2 and PLC-3 were in common use in the packaging industry with the PLC-5 just starting to make an appearance. Modicon and Siemens had similar, chassis-based platforms. They were big and robust and occupied a huge footprint in the electrical enclosure, but if you wanted computing horsepower, this is what you worked with.

In those days, some of those enclosures were big enough to stand in and, for that reason, were usually mounted against a wall with myriad conduit paths winding their way over the machine to get to all the functional devices.

After years of proprietary network protocols, usually manufacturer-driven, the industry has pretty much standardized on Ethernet or a derivative of it, and this means that controls designers aren’t necessarily limited to just the brand of the controller when selecting other components that will talk on the control network.

This open architecture has led to much more competition and helps to keep pricing down. This competition has also encouraged a lot of innovation, especially on products like operator terminals, as the various hardware producers introduce new features to keep their products on the radar of prospective users.

For the programmer, this unification of network protocols has been a blessing. Not too long ago, I used to carry around a tool bag just for the various cables and interface modules that I might need to connect to the various brands of devices on my production floor.

As we have upgraded older technologies in my manufacturing environment, I am down to just an Ethernet cable and a USB-to-Ethernet dongle to connect to pretty much everything in our plant. Since wireless communication is pretty much standard now, in many cases we have connected at least part of the operations level to our plant network, and we can reach many controllers without plugging in a cable at all.

Through the use of network address translation (NAT), we can selectively add private network devices to our plant network, and therefore to wireless, during line startups or troubleshooting sessions and then remove them later to keep the operational technology (OT) out of the information technology (IT) environment.
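The NAT arrangement can be modeled as a static one-to-one translation table. The Python sketch below only models the table and does not translate packets; the subnets, addresses and the `OneToOneNat` class are hypothetical. A controller on a machine’s private OT subnet is exposed at a plant-side address for a startup or troubleshooting session and then withdrawn.

```python
import ipaddress


class OneToOneNat:
    """Model of a static 1:1 NAT table (plant-side IP -> machine-side IP)."""

    def __init__(self, plant_net: str, machine_net: str) -> None:
        self.plant_net = ipaddress.ip_network(plant_net)
        self.machine_net = ipaddress.ip_network(machine_net)
        self.table = {}

    def expose(self, plant_ip: str, machine_ip: str) -> None:
        """Publish a private machine device at a plant-network address."""
        p, m = ipaddress.ip_address(plant_ip), ipaddress.ip_address(machine_ip)
        if p not in self.plant_net or m not in self.machine_net:
            raise ValueError("address is outside its network")
        self.table[p] = m

    def withdraw(self, plant_ip: str) -> None:
        """Remove the mapping when the startup or troubleshooting is done."""
        self.table.pop(ipaddress.ip_address(plant_ip), None)

    def translate(self, plant_ip: str):
        """Machine-side address for traffic sent to plant_ip, or None."""
        m = self.table.get(ipaddress.ip_address(plant_ip))
        return None if m is None else str(m)


nat = OneToOneNat("10.20.0.0/16", "192.168.1.0/24")  # hypothetical subnets
nat.expose("10.20.5.10", "192.168.1.10")  # open for a line startup
print(nat.translate("10.20.5.10"))
nat.withdraw("10.20.5.10")                # close it again afterward
```

Keeping every machine on the same private subnet and exposing only the devices needed, only while they are needed, is what keeps OT traffic out of the IT environment the rest of the time.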


Following on the previous point, the availability of devices from the OT level on the IT level has enabled an easy and obvious way to collect data from our operations lines and use that for critical decision-making at the management level. Several software providers have leveraged this availability to introduce products that place key performance indicators (KPIs) on a dashboard that is readily available to decision makers.

We jumped on this technology fairly early in the game, starting out with just overall equipment effectiveness (OEE) mapping. This is done by defining what constitutes a good product run on a given shift and then counting products made at the subassembly level and finished cases leaving the end of the production line to determine whether we are meeting our production goal for that shift.
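OEE is conventionally computed as availability × performance × quality. The sketch below uses that textbook decomposition with hypothetical shift numbers; it is not necessarily how the author’s dashboard software calculates it, and the field names are assumptions.

```python
from dataclasses import dataclass


@dataclass
class ShiftCounts:
    planned_min: float          # planned production time for the shift, minutes
    downtime_min: float         # unplanned stoppage time, minutes
    ideal_cases_per_min: float  # rated (ideal) line speed
    total_cases: int            # all finished cases counted at end of line
    good_cases: int             # cases that passed QA


def oee(s: ShiftCounts) -> dict:
    run_min = s.planned_min - s.downtime_min
    availability = run_min / s.planned_min
    performance = s.total_cases / (s.ideal_cases_per_min * run_min)
    quality = s.good_cases / s.total_cases
    return {
        "availability": availability,
        "performance": performance,
        "quality": quality,
        "oee": availability * performance * quality,
    }


def meets_goal(s: ShiftCounts, goal_cases: int) -> bool:
    """Shift goal check: did the line produce enough good cases?"""
    return s.good_cases >= goal_cases


shift = ShiftCounts(planned_min=480, downtime_min=60,
                    ideal_cases_per_min=10, total_cases=3780, good_cases=3591)
print(oee(shift))
```

With these numbers, availability is 420/480 = 0.875, performance is 3,780/4,200 = 0.90 and quality is 3,591/3,780 = 0.95, for an OEE of about 0.748.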

For our operations, we have grown with the software developer to add quality assurance (QA) and maintenance management modules to the base solution.

Connectivity is the key element. In years past, this might be restricted to the controller and remote input/output (I/O) modules, operator stations and variable-frequency drives (VFDs) or servo drives, but the number and means of connecting to other devices has expanded greatly.

For example, VFDs and servo drives aren’t necessarily tied to the main control or power cabinet anymore. Most hardware vendors now have drives that can be mounted right on the equipment, in close proximity to the motor they control. Further, servo-drive manufacturers have refined the technology behind them, so that some drive modules can house two drives in a common package, halving the footprint required in the panel for such devices. Others have integrated the drive and motor functions such that the servo drive is right there on the back of the motor housing.

I/O implementation has had a serious boost over the past few years. Formerly, remote I/O involved a chassis or module group mounted in a junction box somewhere on the machine where a cluster of devices was located.

While this method is still commonly used, field distribution blocks (FDBs) have greatly improved both the deployment of and connectivity to field devices. Field distribution blocks are quick-connect modules that mount right on the equipment, where the devices live.

The M12 connector is a popular choice for this type of connection, and most sensors and many output devices can be purchased with M12 as a standard connector, or the traditional four- or five-wire pigtail can be field-converted to an M12 connector.

The connection from the controller to the field distribution block is often a single cable/connector combining both power and communication in a single connection or, at the most, one cable for power and another for communications.

One key development has been the configurable field distribution block. This allows the designer to define a particular port as input, output or both. Most FDBs come in either four- or eight-port versions, but those ports can also be split into two connections each, for a total of up to 16 I/O connections per FDB.

The ability to user-define the function of a port on an FDB not only helps at the design stage, but can also quickly facilitate a field change if a spare connection is available.
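A configurable FDB can be thought of as a small configuration table. The Python model below is hypothetical, not any vendor’s configuration tool: each port is split into two channels labeled "A" and "B", and each channel is set to input or output, so a port can act as input, output or both. Finding a spare channel and reassigning it is the kind of field change described above.

```python
from enum import Enum


class ChannelMode(Enum):
    UNUSED = "unused"
    INPUT = "input"
    OUTPUT = "output"


class ConfigurableFDB:
    """Hypothetical model of a configurable field distribution block.

    Each port is split into two channels ("A" and "B"), and each channel can
    be set to input or output, so one port can serve as input, output or both.
    """

    def __init__(self, ports: int = 8) -> None:
        if ports not in (4, 8):
            raise ValueError("this sketch models 4- or 8-port blocks")
        self.channels = {(p, ch): ChannelMode.UNUSED
                         for p in range(1, ports + 1) for ch in ("A", "B")}

    def capacity(self) -> int:
        return len(self.channels)

    def assign(self, port: int, ch: str, mode: ChannelMode) -> None:
        if (port, ch) not in self.channels:
            raise KeyError(f"no channel {port}{ch} on this block")
        self.channels[(port, ch)] = mode

    def spares(self) -> list:
        return [key for key, mode in self.channels.items()
                if mode is ChannelMode.UNUSED]

    def port_role(self, port: int) -> str:
        modes = {self.channels[(port, ch)] for ch in ("A", "B")} - {ChannelMode.UNUSED}
        if modes == {ChannelMode.INPUT, ChannelMode.OUTPUT}:
            return "both"
        return next(iter(modes)).value if modes else "unused"


fdb = ConfigurableFDB(8)
fdb.assign(1, "A", ChannelMode.INPUT)   # e.g., a photo-eye
fdb.assign(1, "B", ChannelMode.OUTPUT)  # e.g., a stack light
print(fdb.capacity(), fdb.port_role(1), len(fdb.spares()))
```

An eight-port block split this way gives the 16 connections mentioned above, and a field change becomes a configuration edit on a spare channel rather than new wiring to a junction box.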

Following closely on the development of the FDB is the introduction of what I call the smarter I/O system, such as IO-Link. This uses the same power and communication connection as a fieldbus device but connects it to a smart node, where additional information is also available to the control system.

These smart nodes not only map the inputs and outputs of the field devices, but can also provide information like the reliability of the signal: not just a digital off or on, but how strong that off or on decision is. Most sensors are IO-Link-ready, so the decision to include this extra level of data exchange is a fairly simple move.
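Reading that extra data usually means decoding the sensor’s process data. Layouts are device-specific and documented in each sensor’s IODD (IO Device Description), so the 2-byte layout below, with bit 0 of byte 0 as the switching output and byte 1 as a signal margin in percent, and the 30% warning threshold are purely hypothetical. The point of the sketch is that the controller receives both the on/off decision and how strong it was.

```python
def decode_process_data(pdi: bytes, warn_below_pct: int = 30) -> dict:
    """Decode a hypothetical 2-byte IO-Link process-data image."""
    if len(pdi) != 2:
        raise ValueError("expected 2 bytes of process data")
    switching = bool(pdi[0] & 0x01)   # the plain digital decision
    margin_pct = pdi[1]               # hypothetical: signal margin, 0-100 %
    if margin_pct > 100:
        raise ValueError("margin out of range")
    return {
        "on": switching,
        "margin_pct": margin_pct,
        # A weak decision (dirty lens, misalignment) can be flagged for
        # maintenance before the sensor starts missing parts.
        "needs_attention": margin_pct < warn_below_pct,
    }


print(decode_process_data(bytes([0x01, 85])))
```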

Most providers of pneumatic and hydraulic manifolds that utilize fieldbus connectivity have also broadened their product lines to include I/O functionality on that same manifold assembly. This greatly helps with control design, as we don’t have to have a separate I/O block or remote I/O junction box if there is a fieldbus manifold present in the same area of concern.

So, what does all this mean for packaging machinery design? Using remote devices and I/O blocks means that we don’t have to build these huge main control cabinets.

Cabinets can be smaller and, in most cases, can be easily designed to fit within the framework of the machine itself. Choosing remote devices and I/O blocks also means that we can reduce not only the number of conduits and cables leaving the control cabinet, but the physical size of those conductors, as well.

Going to a machine-mounted I/O system means everything is plug-and-play.

RICK RICE is a controls engineer at Crest Foods (www.crestfoods.com), a dry-foods manufacturing and packaging company in Ashton, Illinois.


component considerations

Tobey Strauch contributing editor tobeylstrauch@gmail.com

What is MQTT?

MESSAGE QUEUING TELEMETRY TRANSPORT (MQTT) is a publish/subscribe messaging protocol. It is built around a broker and clients, and it runs over TCP/IP, so it works on ordinary Ethernet networks. It was created by IBM in 1999 and was used in oil and gas, where the requirements were low bandwidth and low power. It became an open OASIS standard in 2014. How does it apply to automation and machine building?

Broker and client

First, let’s understand that MQTT simplifies communications, because it decouples publishers from subscribers. There is space decoupling, in which clients are unaware of each other and communicate only with the broker. There is time decoupling, in which clients can publish and subscribe at different times. And there is synchronization decoupling, in which clients operate asynchronously, without needing to wait on a response.
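The decoupling ideas can be shown with a toy, in-memory broker. This Python sketch is an illustration under heavy assumptions, not an MQTT implementation: there is no network, no QoS and no sessions, and callbacks run synchronously. It does follow MQTT’s topic-filter rules (`+` matches exactly one level, `#` matches the remaining levels, including the parent) and uses a retained message to show time decoupling: a subscriber that arrives later still receives the last value. The topic names are hypothetical.

```python
from collections import defaultdict
from typing import Callable

Callback = Callable[[str, str], None]


def topic_matches(topic_filter: str, topic: str) -> bool:
    """MQTT-style filter matching: '+' = exactly one level,
    '#' = this level and everything below (must be last)."""
    f_levels = topic_filter.split("/")
    t_levels = topic.split("/")
    for i, f in enumerate(f_levels):
        if f == "#":
            return True
        if i >= len(t_levels):
            return False
        if f != "+" and f != t_levels[i]:
            return False
    return len(f_levels) == len(t_levels)


class ToyBroker:
    """In-memory stand-in for a broker: clients never reference each other."""

    def __init__(self) -> None:
        self.subscriptions = defaultdict(list)  # filter -> list of callbacks
        self.retained = {}                      # topic -> last retained payload

    def subscribe(self, topic_filter: str, callback: Callback) -> None:
        self.subscriptions[topic_filter].append(callback)
        # Time decoupling: a late subscriber still gets the last retained value.
        for topic, payload in self.retained.items():
            if topic_matches(topic_filter, topic):
                callback(topic, payload)

    def publish(self, topic: str, payload: str, retain: bool = False) -> None:
        if retain:
            self.retained[topic] = payload
        # Space decoupling: the publisher only knows the topic, not who listens.
        for topic_filter, callbacks in self.subscriptions.items():
            if topic_matches(topic_filter, topic):
                for callback in callbacks:
                    callback(topic, payload)


got = []
broker = ToyBroker()
broker.publish("plant/line1/filler/state", "RUNNING", retain=True)
broker.subscribe("plant/+/filler/#", lambda t, p: got.append((t, p)))
broker.publish("plant/line2/filler/count", "42")
broker.publish("plant/line2/capper/count", "7")  # filtered out
print(got)
```

In a real deployment, the same roles are played by a broker such as Eclipse Mosquitto or HiveMQ and an MQTT client library, which add the network transport, QoS levels and session handling this sketch leaves out.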

Put in simplified terms: if you, the client, are in a crowded room, being spoken to by a broker, and you have a question, you don’t have to raise your hand or wait your turn. You just say it to the speaker, and the speaker will hear you. Or, at least, that is the idea. The broker must know you are out there, and you must stay connected.

Clients must be configured as a subscriber, publisher or both. Brokers mediate all client-to-client communications and make sure messages are routed properly. OT networks and machine builders rely on flexibility, scalability and real-time data exchange, and MQTT allows this to happen. The beautiful thing is that MQTT can be leveraged to integrate old and new platforms, if brokers are used efficiently.

Gateway connection

For example, if a factory or plant does not have a good network infrastructure, it may use a broker to get process areas connected without wiring. Gateways can bring OPC UA, OPC DA, Modbus, DNP3, EtherNet/IP, Profinet, SQL, MQTT and Representational State Transfer (REST) to a centralized data hub. There is also the capacity to run OPC UA over an MQTT broker. The gateway selected and the architecture are key. Creating an MQTT namespace configured on the server may move the data quickly.

Keys to success include mapping the MQTT payload, mapping MQTT topics and implementing transformation logic.

Network interface

What is the big deal? It’s important for integrators to understand that, when you set up a new machine alongside older machines, the chances of having to work with old protocols increase.

MQTT allows for a reliable convergence and manageable network interface in a brownfield environment, according to “Brownfield Devices in IIoT,” a white paper published by Fortiss. This means creating an intent-driven network with interactions of property affordance, action affordance and event affordance. In short, the data is merged at the network level using a namespace and multiparty synchronization. A web of things (WoT) is used to bring protocols of different devices to a central location.
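The mapping and transformation steps can be sketched as a table that turns raw values read from a legacy device into topics and payloads in an MQTT namespace. Everything here is hypothetical: the register numbers, the enterprise/site/line/machine topic hierarchy, the scaling factors and the JSON payload shape. A real gateway would also perform the protocol reads and the publish.

```python
import json
from dataclasses import dataclass


@dataclass(frozen=True)
class TagMap:
    source_register: int  # hypothetical legacy (e.g., Modbus-style) address
    topic: str            # hypothetical destination in the MQTT namespace
    scale: float          # raw counts -> engineering units
    units: str


MAPPINGS = [
    TagMap(40001, "acme/plant1/line1/mixer/temperature", 0.1, "degC"),
    TagMap(40002, "acme/plant1/line1/mixer/speed", 1.0, "rpm"),
]


def transform(raw: dict, timestamp: float) -> list:
    """Map one poll of raw legacy values to (topic, JSON payload) pairs."""
    messages = []
    for m in MAPPINGS:
        if m.source_register not in raw:
            continue  # value not read this cycle; publish nothing for it
        value = round(raw[m.source_register] * m.scale, 3)
        payload = json.dumps({"value": value, "units": m.units, "ts": timestamp})
        messages.append((m.topic, payload))
    return messages


for topic, payload in transform({40001: 253, 40002: 1200}, 1700000000.0):
    print(topic, payload)
```

Keeping the mapping in a table, rather than in per-tag code, is one way to make payload mapping, topic mapping and transformation logic reviewable as the older machines are brought into the namespace.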

Understanding that network levels can equate to time helps to support operational optimization. For instance, field devices are at Level 0 and communicate in microseconds. Communication between field devices and programmable logic controllers happens in seconds at Level 1. Level 2 is process management, where supervisory control and data acquisition (SCADA) and human-machine interface (HMI) systems require minutes. Manufacturing execution system (MES) devices track on Level 3, in hours, and serve operations and maintenance functions. Business planning is Level 4 and has a time factor of days. Using MQTT to gather and convert data gives more control, from the floor to the cloud.

HiveMQ defines the goals of the smart factory as agile software delivery, faster mean time to recovery, centralized command and control, and a consistent but flexible software architecture. MQTT is a main tool to accomplish this. Why? Bidirectional connectivity, interoperability and OT security.

Tobey Strauch is an independent principal industrial controls engineer.

Charles Palmer contributing editor

Harness vision systems for better quality

A VISION INSPECTION SYSTEM, also known as a machine vision system, uses cameras, sensors and artificial intelligence (AI) to automatically assess products for quality. These systems can help identify defects, misalignments and other issues, and they can also perform other tasks like counting items and measuring dimensions.

Using vision inspection systems ensures quality control across the board for consumers and manufacturers. Vision inspection systems catch problems early to avoid a defective product moving down the supply chain or being sold to a consumer.

There are various systems and types of technology that can be employed for vision inspection, and these inspections are performed in applications across many industries.

Inspecting for product defects and flaws is the most popular of all vision system inspections. Presence/absence inspection checks the quantity of something on a target, or whether it is there at all.

Product verification uses the vision inspection system to check that a product’s label matches the product. Vision inspection systems can read both human-readable and machine-readable codes, and barcodes and human-readable characters must be checked for accuracy.

Quality control via vision systems relies on various advanced technologies to inspect, monitor and assess products for defects or inconsistencies. These systems combine hardware and software to automate and enhance the inspection process in manufacturing and other industries. Some key technologies used in quality control via vision systems include machine vision cameras, image processing software, lighting and illumination systems, optical character recognition (OCR) and barcode scanning, artificial intelligence and machine learning (ML), 3D vision and depth sensing, sensor integration, robotic integration, edge computing and cloud processing, software for data analytics and reporting and automated decision-making and feedback.

High-resolution cameras are used to capture images of products in real time. These cameras may operate in visible light or other wavelengths like infrared (IR) or ultraviolet (UV), depending on the requirements of the inspection process. 3D cameras provide depth perception and allow for detailed, dimensional inspection, including surface irregularities or defects that might be missed by 2D cameras.

Software analyzes images for edges, contours and boundaries of objects to detect features or defects, such as cracks or misalignment. Pattern recognition allows the system to identify specific patterns, shapes or characters, which is useful for sorting, classification or defect detection. Blob analysis is used for detecting and analyzing objects based on their size, shape and position, typically for quality control tasks like counting or verifying the presence of parts. Template matching compares the captured image to a reference template to detect discrepancies in size, shape or orientation.

With structured light, a projector casts a pattern, such as a grid or stripes, onto the object being inspected. The system captures the deformed pattern to assess the object’s 3D geometry or surface defects. Backlighting is used for detecting features on opaque objects or for examining the transparency of materials. Diffuse or directed lighting helps highlight surface texture and defects by using various lighting angles or intensity levels.
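Blob analysis can be sketched as connected-component labeling on a thresholded (binary) image. This pure-Python version assumes the image has already been binarized into rows of 0/1 values and uses 4-connectivity; production systems typically rely on a vision library instead (OpenCV, for example, provides `cv2.connectedComponentsWithStats`). The `min_area` filter discards specks of noise, and the resulting count supports presence/absence and counting checks.

```python
from collections import deque


def find_blobs(img: list, min_area: int = 1) -> list:
    """Label 4-connected regions of 1-pixels in a binary image."""
    rows, cols = len(img), len(img[0])
    seen = [[False] * cols for _ in range(rows)]
    blobs = []
    for r in range(rows):
        for c in range(cols):
            if not img[r][c] or seen[r][c]:
                continue
            # Flood-fill one blob with a breadth-first search.
            queue = deque([(r, c)])
            seen[r][c] = True
            pixels = []
            while queue:
                y, x = queue.popleft()
                pixels.append((y, x))
                for dy, dx in ((1, 0), (-1, 0), (0, 1), (0, -1)):
                    ny, nx = y + dy, x + dx
                    if 0 <= ny < rows and 0 <= nx < cols and img[ny][nx] and not seen[ny][nx]:
                        seen[ny][nx] = True
                        queue.append((ny, nx))
            if len(pixels) < min_area:
                continue  # treat tiny regions as noise
            ys = [p[0] for p in pixels]
            xs = [p[1] for p in pixels]
            blobs.append({
                "area": len(pixels),
                "bbox": (min(xs), min(ys), max(xs), max(ys)),  # x0, y0, x1, y1
                "centroid": (sum(xs) / len(xs), sum(ys) / len(ys)),
            })
    return blobs


image = [
    [1, 1, 0, 0, 0],
    [1, 1, 0, 0, 1],
    [0, 0, 0, 0, 1],
    [0, 1, 0, 0, 0],
]
parts = find_blobs(image, min_area=2)
print(len(parts), [b["area"] for b in parts])  # prints: 2 [4, 2]
```

Here the single isolated pixel is rejected as noise, so a presence check expecting two parts would pass; the area, bounding box and centroid of each blob are the size and position measures that blob analysis reports.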

Optical character recognition is used to read and verify text or numbers on products, such as serial numbers or expiration dates. Barcode and QR-code scanners are often integrated into vision systems to automate the identification and tracking of parts or products.
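A basic accuracy check on a decoded barcode can be sketched with the standard GS1 check-digit calculation, which applies to GTIN-8, UPC-A (GTIN-12), EAN-13 and GTIN-14 codes, followed by a comparison against the code expected for the product being run. The function names, and the idea of an `expected` value supplied by the line’s recipe, are assumptions for illustration.

```python
def gtin_check_digit(body: str) -> int:
    """GS1 check digit for the digits that precede it.

    Working from the rightmost digit leftward, weights alternate 3, 1, 3, ...
    """
    total = sum(int(d) * (3 if i % 2 == 0 else 1)
                for i, d in enumerate(reversed(body)))
    return (10 - total % 10) % 10


def is_valid_gtin(code: str) -> bool:
    if not (code.isascii() and code.isdigit() and len(code) in (8, 12, 13, 14)):
        return False
    return gtin_check_digit(code[:-1]) == int(code[-1])


def verify_label(decoded: str, expected: str) -> bool:
    """Pass only if the read is a valid GTIN and matches this product."""
    return is_valid_gtin(decoded) and decoded == expected


print(verify_label("4006381333931", "4006381333931"))
```

A misread digit usually breaks the check digit, so `is_valid_gtin` catches bad reads, while the comparison with `expected` catches a valid barcode on the wrong product, which is the label-matches-product verification described earlier.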

Convolutional neural networks (CNNs) and other deeplearning models are used to classify and recognize complex patterns and defects that traditional algorithms might miss. These models are especially useful for tasks like defect detection, quality classification and anomaly detection. Machine-learning algorithms are trained to recognize various product types and detect misalignments or defects.

Charles Palmer is a process control specialist and lecturer at Charles Palmer Consulting (CPC). Contact him at charles101143@gmail.com.

AARON ROTHMEYER Manager, Market Product Manager, SICK

IO-Link and LiDAR rise in sensor popularity and implementation

Two technologies that have risen to prominence in industrial settings over the past few years are light detection and ranging (LiDAR) and IO-Link. The discussion, popularity and implementation of both continue to rise.

Q: For those who might be new to the technology, could you explain in simple terms what LiDAR is, what makes it a unique and powerful sensing method that transcends its origins in surveying and how it compares to other technologies like radio detection and ranging (radar) or other types of vision and sensing devices?

A: In simple terms, any LiDAR-based technology is nothing more than a collection of distance measurements obtained using light, most commonly generated using lasers. The distance measurements are calculated by bouncing several pulses of light off of a target and using the constant speed of light to calculate the distance travelled by each pulse. These distances are usually accumulated and reported in a group known as a point cloud.
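The distance calculation reduces to time of flight. Because the measured time covers the trip out to the target and back, the range is half of the speed of light multiplied by the round-trip time. The sketch below assumes a 2D scanner that reports an angle with each pulse (both inputs are hypothetical) and converts the readings into (x, y) points, the simplest form of a point cloud. It uses the vacuum speed of light and ignores the small correction for air.

```python
import math

SPEED_OF_LIGHT = 299_792_458.0  # m/s in vacuum (air is slightly slower)


def tof_range(round_trip_s: float) -> float:
    """Range to the target: the pulse goes out and back, so halve the path."""
    return SPEED_OF_LIGHT * round_trip_s / 2.0


def scan_to_points(samples: list) -> list:
    """Convert (angle in degrees, round-trip time in s) pairs from a 2D scan
    into (x, y) points in metres, in the sensor's own frame."""
    points = []
    for angle_deg, round_trip_s in samples:
        r = tof_range(round_trip_s)
        a = math.radians(angle_deg)
        points.append((r * math.cos(a), r * math.sin(a)))
    return points


# 1 ns of round-trip time is roughly 15 cm of range.
print(round(tof_range(1e-9), 4))
print(scan_to_points([(0.0, 20e-9), (90.0, 20e-9)]))
```

The 1 ns figure shows why the timing electronics matter: centimeter-level resolution depends on resolving very small fractions of a nanosecond.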

This technology was first widely employed in surveying and academic pursuits but was quickly adapted to industrial applications back in the mid-1900s. SICK actually pioneered this adaptation by using the technology to create safety-rated sensors that used LiDAR to detect people present in dangerous environments. Since then it’s really grown to become a staple in industrial automation, especially in mobile robotics but also in warehouse logistics and machine design.

Just like any other sensing technology, LiDAR has its strengths and weaknesses. In general, it has great resolution at longer distances even in nasty environments. Where cameras, for example, can be affected by ambient light, LiDAR generates its own infrared pulses so it can operate completely fine in total darkness. Radar can do this, too, but the technology is also typically lower-resolution than the “laser precision” that LiDAR provides. The downside to all this power has traditionally been cost, but that has also changed quite a lot over the last few years, enabling sensors like the TiM100 to be used in an increasing number of applications.

Q: What role does IO-Link, an open communication protocol, play in simplifying or complicating the integration of sensors? Are there specific IO-Link features that are particularly helpful?

A: The advantages of IO-Link are quite clear as the protocol enables various “smart sensing” capabilities on low-cost sensors. Because it communicates right over the standard transistor-based digital output wires, it doesn’t require any extra cabling, so both cost and complexity are minimized. As a matter of fact, I’m certain many customers are using IO-Link sensors right now and don’t even realize it! Often, these sensors can be fully configured and employed just using the buttons or display on the sensor.

However, tapping into the sensor using an IO-Link gateway or programming tool unlocks some great features that elevate a basic sensor to a smart sensor. For example, most current versions of IO-Link support some level of cloning where the configuration of a sensor can be stored remotely and loaded onto a blank sensor if anything should happen to the original. This can be very helpful for minimizing unplanned downtime.
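The cloning behavior resembles IO-Link’s data storage mechanism, in which the master keeps a copy of a device’s parameters and can write them to a replacement device of the same type. The Python model below is deliberately simplified and hypothetical: the class names, IDs and parameter names are invented, and the real mechanism is defined by the IO-Link specification, not by this logic. It shows the key check, which is that parameters are restored only when the replacement’s vendor ID and device ID match the backup.

```python
from dataclasses import dataclass, field


@dataclass
class Device:
    vendor_id: int
    device_id: int
    params: dict = field(default_factory=dict)


class MasterPort:
    """Hypothetical, simplified model of parameter backup/restore on one port."""

    def __init__(self) -> None:
        self.backup = None  # (vendor_id, device_id, params) once stored

    def store(self, dev: Device) -> None:
        """Keep a copy of the device's current configuration."""
        self.backup = (dev.vendor_id, dev.device_id, dict(dev.params))

    def on_connect(self, dev: Device) -> bool:
        """Return True if backed-up parameters were written to the device."""
        if self.backup is None:
            self.store(dev)  # first device on this port becomes the reference
            return False
        vendor_id, device_id, params = self.backup
        if (dev.vendor_id, dev.device_id) != (vendor_id, device_id):
            return False  # different device type: never push foreign settings
        dev.params = dict(params)
        return True


port = MasterPort()
port.on_connect(Device(vendor_id=1, device_id=10, params={"zone_1_far_m": 1.5}))
spare = Device(vendor_id=1, device_id=10)  # out-of-the-box replacement
print(port.on_connect(spare), spare.params)
```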

The IO-Link connection also often unlocks access to more advanced functions. For example, the TiM100 sensor uses IO-Link to allow users to configure zones or areas of interest so that an object entering those zones will trigger one of the two digital outputs.
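Zone evaluation of that kind can be sketched as a point-in-region test over one scan. This is a hypothetical illustration, not the TiM100’s internal algorithm: zones are axis-aligned rectangles in metres in the sensor’s frame, each zone drives one digital output, and a zone trips only when at least `min_hits` scan points fall inside it, which is one simple way to reject a single noisy reading.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Zone:
    """Hypothetical rectangular zone in the sensor frame, metres."""
    x_min: float
    x_max: float
    y_min: float
    y_max: float

    def contains(self, x: float, y: float) -> bool:
        return self.x_min <= x <= self.x_max and self.y_min <= y <= self.y_max


def zone_outputs(points, zones, min_hits: int = 3) -> list:
    """One boolean per zone: True when enough scan points fall inside it."""
    return [sum(z.contains(x, y) for x, y in points) >= min_hits for z in zones]


zones = [Zone(0.5, 1.0, -0.2, 0.2),   # e.g., drives output 1
         Zone(1.0, 2.0, -0.5, 0.5)]   # e.g., drives output 2
scan = [(0.6, 0.0), (0.7, 0.05), (0.8, -0.1), (1.5, 0.4)]
print(zone_outputs(scan, zones))  # prints: [True, False]
```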

IO-LINK LIDAR SENSING

The overlaid blue fields demonstrate what the sensor is seeing.

Q: Can you share some examples of use cases that have integrated IO-Link and LiDAR sensing in industrial applications? What made these integrations go smoothly?

A: As I mentioned, one of the most common use cases for IO-Link-based LiDAR sensors is logistics automation. Warehouses employ such extraordinary lengths of conveyor that there is often a need to detect boxes or other objects as they travel along it, and sometimes also to detect objects that are where they aren’t supposed to be, such as overhanging or fallen packages.

Since these sensors are often numerous and spread out over hundreds or thousands of feet, the IO-Link protocol allows users to connect several sensors to a single gateway and then run a single, usually Ethernet, cable back to their controller. This function alone really makes the integration go much smoother, but it also makes troubleshooting easier down the line. Since each sensor is addressable, it can be accessed remotely and often identified individually by, say, flashing the lights on the sensor to quickly find the one in question.
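On the controller side, a gateway that aggregates several sensors typically presents their process data as one block of bytes, with each port at a known position. The sketch below assumes a hypothetical layout of 4 bytes per port. The two switching outputs sit in the first byte and a distance value in the last two. Actual layouts come from the gateway's and sensor's documentation.

```python
import struct

PORT_BYTES = 4  # hypothetical: each master port gets a fixed 4-byte slot in the input image

def split_ports(image: bytes, n_ports: int):
    """Slice one gateway input image into per-port process data."""
    if len(image) < n_ports * PORT_BYTES:
        raise ValueError("input image shorter than expected")
    return [image[i * PORT_BYTES:(i + 1) * PORT_BYTES] for i in range(n_ports)]

def decode_lidar_port(pd: bytes):
    """Hypothetical layout: output bits in byte 0, distance in mm as big-endian uint16 in bytes 2-3."""
    (dist_mm,) = struct.unpack(">H", pd[2:4])
    return {"out1": bool(pd[0] & 0x01), "out2": bool(pd[0] & 0x02), "distance_mm": dist_mm}

# Usage: two sensors on one gateway, read through a single connection.
image = bytes([0x01, 0x00, 0x03, 0xE8, 0x02, 0x00, 0x00, 0x64])
for port, pd in enumerate(split_ports(image, 2)):
    print(port, decode_lidar_port(pd))
```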

Q: What are some of the common misconceptions surrounding the implementation of IO-Link LiDAR sensors?

A: The biggest misconception is that they don’t exist! LiDAR has a reputation of being expensive and complex. That’s not without merit; in many cases, LiDAR can be very complex to integrate and certain high-end models can cost several thousand dollars. SICK has been doing LiDAR long enough, though, to recognize that not every customer needs to have a full 3D point cloud for their application and not every customer has a software engineer standing by to work with raw Ethernet data. What we’ve done is “sensorize” LiDAR by making it smaller, lower cost and easy to integrate. IO-Link is a big part of that for all of the reasons I listed. With the TiM100, all of the strengths of LiDAR can be used for basic and advanced machine applications with minimal headaches during setup.

Q: From your experience, what are the most significant hurdles that engineers or technicians face when trying to integrate an IO-Link LiDAR sensor into a system?

A: Alignment. One of the downsides of working with infrared light is that humans are not well-equipped to see it, so, when it comes time to align the sensor on the machine or equipment, it sometimes requires some thoughtfulness and strategic hand-waving. I’ve personally spent many hours waving at what appears to be nothing in order to verify where the infrared plane of light is located.

The good news is that SICK has been working with infrared light long enough that we have noticed that alignment is a pain point and have developed a solution. In our catalog of accessories, there is a tool called the “Alignment Aid,” which is simply a bar containing an array of infrared light sensors.

For each sensor, there’s a corresponding red LED so the bar lights up red wherever the LiDAR plane hits it. These alignment aids can be of great value when it comes time to align the sensor. I was recently doing an install at a warehouse and was able to align the sensor from the top of a 30-foot pallet rack system by placing two alignment aids on the floor.
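Two alignment aids at a known spacing also make the misalignment measurable. Here is a minimal sketch. It assumes each bar reports a list of on/off LED states with a fixed pitch, and that both bars are laid perpendicular to the intended line of the light plane. The lit-LED center on each bar gives the plane's lateral position. The difference between the two positions, divided by the bar spacing, gives the angle to correct. The pitch, spacing and function names are hypothetical.

```python
import math

def lit_center(leds, pitch_mm):
    """Center of the lit LEDs, in mm from the bar's first element, or None if none are lit."""
    lit = [i for i, on in enumerate(leds) if on]
    if not lit:
        return None
    return (sum(lit) / len(lit)) * pitch_mm

def tilt_deg(offset_near_mm, offset_far_mm, spacing_mm):
    """Angle between the light plane and the reference line, from its hit points on two bars."""
    return math.degrees(math.atan2(offset_far_mm - offset_near_mm, spacing_mm))

# Usage: hypothetical bars with a 10-mm pitch, placed 2 m apart.
near = lit_center([0, 0, 1, 1, 0, 0], 10.0)
far = lit_center([0, 0, 0, 0, 1, 1], 10.0)
print(near, far, tilt_deg(near, far, 2000.0))
```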

Q: What advice would you give to someone who is considering using an IO-Link LiDAR sensor but is hesitant due to the perceived complexity? Are there any “beginner-friendly” approaches or resources you’d recommend?

A: Simple! Reach out to SICK as a resource! We’ve been working in industrial automation for over 75 years and, as members of the international IO-Link consortium, have the expertise and understanding to help with any IO-Link questions or integration. Our application engineers work with these technologies extensively, and we’re always excited to see what problems IO-Link and LiDAR can solve next.

For more information, visit www.sick.com.

Open industrial networks are taking root in the industry

by Anna Townshend, managing editor

INDUSTRIAL NETWORKS ARE generally no longer locked into a specific hardware or communication protocol choice. The vendor-specific design has likely seen its heyday, and the overwhelming majority of networks now rely on open communication protocols maintained by non-profit industry organizations.

However, when upgrading an existing production line with established legacy equipment, complete system interoperability is not always as easy as adding the latest open protocols or hardware. All the choices about devices, I/O, protocols and software will affect system interoperability and factors like ease of installation or operational maintenance down the line. The application will ultimately dictate what it needs, based on many factors. The convergence of information technology (IT) and operational technology (OT) is the final frontier for industrial networks.

The hardware choice

Hardware standardization can be a successful tactic, says Harrison Davis, team lead of mechatronics at Schunk. Standardizing robots, control systems, communication protocols and end-of-arm tooling (EOAT) as much as possible is the simplest way to ensure that a new machine is compatible with existing systems and machines. “While factories may have different lines with varying requirements, using the same robot brands and controllers across as many systems as possible will ease the learning curve for those responsible for maintaining and programming the manufacturing lines,” Davis says.

However, standardization or finding the easiest solution might not always be possible for many reasons: end user preference, product supply availability, legacy system networks or the machine design.

“We often start with a review of the customer’s existing facility, as well as any standards documents they may have,” says Rylan Pyciak, president of Cleveland Automation Systems, a CSIA member. Then the integrator will want to identify what software packages and versions are in use, communication protocols and spare parts availability. “The last thing you want to do is install a one-off system that requires more monetary spend to have the proper software or additional spare parts,” Pyciak adds.

The machine will still need to fit with the overall plant architecture and existing systems, so proper system integration will also consider factors like electrical standards and compliance and communication compatibility, says Ranjit Maharajan, head of product group, automation solutions, at Andritz Feed and Biofuel.

Andritz prefers to match protocols like EtherNet/IP, Profinet or Modbus with existing machines and other control systems. In choosing hardware components, it also tries to prioritize suppliers with reliable local support. Environmental considerations might also play a role, such as ingress-protection (IP) ratings, temperature or vibration, and cost will be the final decider in most projects, Maharajan says. Consider the project budget but also product lead times and lifecycle.

“Generally, there are different requirements that determine the machine architecture and control design,” says Martin Schütz, product manager of smart sensors at Hottinger Brüel & Kjær (HBK). “From the application perspective it is necessary to take into consideration the needed physical measurands, actuators for motion control and other peripheral systems interfacing with the machine and process. Secondly, the performance requirements for data transmission of the sensors and controllers (real-time requirements) need to be checked.” Other considerations, Schütz says, are the openness of the machine and how it will connect to other devices and equipment.

The IT department on-site also has a role to play or can help understand the data architecture, and the new machine will need to be designed to fit that system. “If sensor readings should be transferred to IT-level applications too, then the question arises at which level the sensor data needs to be transferred. Programmable logic controllers (PLCs) can send data directly into the cloud, but this means all the data needs to be sent to the PLC from sensors and/or amplifiers in the first place,” Schütz says.

Using IO-Link sensors, for example, makes it possible to send data to IT-level applications at the IO-Link master level. “If more complex monitoring tasks using higher data rates in parallel to the running PLC process are required, then edge amplifiers using dedicated Ethernet ports for IT-level applications might be considered,” Schütz adds.

Network devices and I/O

Ease of installation is paramount for any application, as downtime equals money, and the choice of network and I/O devices can affect connectivity time and cost. “Ensuring seamless communication between your devices is crucial, especially as manufacturing increasingly relies on electrically based systems,” Davis says. Even with varying production lines, the devices can all speak the same language, essentially. “Effective communication between your robot controller or PLC and your tooling can significantly enhance the ease of integration,” Davis adds.

Pyciak says Cleveland Automation Systems prefers remote I/O and I/O over Ethernet communications. “By utilizing I/O via communication protocol, this allows for much simpler and easier integration,” he adds. “We no longer have to run multiconductor cable halfway across a plant facility. We are able to run one Ethernet cable and one power cable, and that’s it.”

When connecting sensors at the field level to the PLC or the control system in the automation level, the choice of sensors and electronics can have huge implications on the ease and efficiency of installation, Schütz says.

“Using passive sensors requires adding amplifiers,” Schütz says. “These setups have their own set of advantages but require manual parametrization in order to ensure correct sensor and amplifier pairing. Using smart sensors with integrated amplifiers provides a digital signal at the sensor’s output. Depending on the digital communication protocol used, the system setup is correspondingly easier and more efficient.”

For example, a force, load or torque sensor with an IO-Link interface can behave like any other IO-Link sensor and make integration into the machine and system architectures via standardized methods more efficient. “The sensors directly output the correct physical quantities—N, Nm, kg—without any manual parametrization needed,” Schütz adds.
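The manual parametrization Schütz mentions for passive sensors is essentially a scaling step. For a strain-gauge load cell, the amplifier must know the excitation voltage, the rated output in mV/V and the nominal load before it can report newtons. A smart sensor applies its own factory values internally. Here is a minimal sketch with illustrative numbers. These are not HBK's values, and the function name is hypothetical.

```python
def bridge_to_force(signal_mv, excitation_v, rated_output_mv_per_v,
                    nominal_force_n, zero_mv_per_v=0.0):
    """Scale a passive strain-gauge bridge reading to force in newtons.

    These parameters are what must be entered by hand when a passive sensor
    is paired with its amplifier; the values used below are illustrative.
    """
    ratio = signal_mv / excitation_v  # bridge output in mV/V
    return (ratio - zero_mv_per_v) / rated_output_mv_per_v * nominal_force_n

# Usage: a 1,000-N cell rated at 2 mV/V, excited with 5 V, reading 10 mV is at full load.
print(bridge_to_force(10.0, 5.0, 2.0, 1000.0))
```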

Industrial communication protocols

For a long time, communication protocols were driven by vendor choice, with many vendor-specific protocols locking end users into a system. But that reality is no longer the case, as even vendors have mostly let go of proprietary protocols in favor of open communication standards. There still may be applications where older protocols are necessary, but they’re quickly being replaced by open standards. But each application still has individual requirements and choices to be made.

It’s more difficult to integrate devices that all use different native network protocols.

“Choosing the right industrial protocol depends on factors such as system architecture, device compatibility and integration with existing infrastructure,” Maharajan says. “In most cases, the choice is influenced by the platform in use or selected architecture, which may limit the flexibility of using proprietary protocols.” He adds that Andritz strongly prefers widely adopted open protocols, which provide simplified integration and interoperability across devices and vendors.

Pyciak says 95% of Cleveland Automation Systems’ projects use EtherNet/IP. “We like to push for the latest and greatest technologies, so often, that is some sort of Ethernet-based communication protocol. We still have customers utilizing RS-232, DeviceNet and ControlNet; however, we try to encourage our customer base to upgrade from these protocols,” he adds. With older protocols, finding readily available parts and hardware can be challenging, and fewer personnel understand these older systems.

The choice of communication protocol for the application should consider the process requirements on time synchronization and the need for real-time loop control. “As soon as highly deterministic communication for loop-control applications is needed, the current solutions are typically going to be industrial Ethernet-based fieldbus protocols. A common misconception though is the thought of needing to send all sensor readings to the central controlling unit or PLC and thereby taking up high bandwidth and cycle time requirements on the industrial communication protocol,” Schütz says. “This concept relies on the idea that a central controlling unit performs all the process control and application-specific calculations, which results in more costly PLCs to be installed. The concept of decentralized intelligence is emerging in the domain of sensors and controllers too.”
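A quick back-of-the-envelope calculation shows why decentralized intelligence relieves the network. The figures below are hypothetical: eight sensors sampled at 10 kHz, 4-byte values and a 10-ms PLC cycle. They were chosen only to compare two cases: streaming every raw sample to the PLC versus sending one locally computed result per cycle.

```python
def raw_stream_bytes_per_s(n_sensors, sample_rate_hz, bytes_per_sample):
    """Network load if every raw sample is sent to the central PLC."""
    return n_sensors * sample_rate_hz * bytes_per_sample

def result_stream_bytes_per_s(n_sensors, cycle_time_ms, bytes_per_result):
    """Network load if each sensor evaluates locally and sends one result per PLC cycle."""
    return n_sensors * bytes_per_result * 1000 / cycle_time_ms

raw = raw_stream_bytes_per_s(8, 10_000, 4)   # 320000 B/s
result = result_stream_bytes_per_s(8, 10, 4)  # 3200.0 B/s
print(raw, result, raw / result)
```

Under these assumptions, local evaluation cuts the traffic on the control network by a factor of 100, and the PLC no longer needs to do the per-sample math.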

The application will dictate if open standard protocols like IO-Link can be used for field-level to automation-level communication or if industrial Ethernet communication is needed to connect all the way down to the nodes in the field level, Schütz says.

Legacy technology and obsolescence

Designing a seamless system that uses a single communication protocol throughout isn’t always possible when combining new machines with old. “When designing, we take into consideration the architecture that supports both old and new systems. This includes choosing the right protocol converters, I/O gateways and software that supports multiple systems,” says Maharajan.
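Converters and gateways often have to re-pack values between legacy and newer formats. For example, Modbus devices expose 16-bit registers, so a 32-bit floating-point value usually spans two registers, and the word order varies by vendor. Here is a minimal sketch of that conversion. The word_swap flag stands in for whatever the device documentation specifies.

```python
import struct

def regs_to_float(hi: int, lo: int, word_swap: bool = False) -> float:
    """Combine two 16-bit Modbus registers into an IEEE 754 float32.

    Modbus does not fix the word order of 32-bit values; some devices send
    the low word first, which word_swap accounts for.
    """
    if word_swap:
        hi, lo = lo, hi
    return struct.unpack(">f", struct.pack(">HH", hi, lo))[0]

def float_to_regs(value: float):
    """Inverse mapping: split a float32 into (high word, low word) for a register pair."""
    return struct.unpack(">HH", struct.pack(">f", value))

# Usage: 1.5 is 0x3FC00000 in float32, so it occupies registers 0x3FC0 and 0x0000.
print(float_to_regs(1.5), regs_to_float(0x3FC0, 0x0000))
```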

Obsolescence is a concern for components and systems, he says, and should be considered when integrating new machines into older production lines. “We take a proactive lifecycle management approach in close collaboration with suppliers and vendors to help mitigate this risk during the design phase and through the life of the delivered systems,” he adds.

Pyciak of Cleveland Automation Systems says he is already seeing obsolescence become an issue. “Customers have legacy systems in place which have no readily available hardware or other components to keep these systems running. We like to identify the latest technologies currently on the market and implement the solutions wherever possible. We must also identify strong systems and networks that will withstand the test of time,” he adds.

The future of industrial networking

“The clear demand from the customers is for open standard solutions and a higher interoperability between existing control system solutions. The truth is that full compatibility is hard to achieve with existing solutions, which has led to new initiatives being started,” Schütz says. He points to the many open protocols in existence like IO-Link, MQTT or OPC UA.

The next level and the future of industrial networking for automation is an infrastructure with deterministic OT communication and IT-level communication over a single network. “Solutions making this possible are currently being developed in organizations and working groups pushing for open standard solutions, but we are not there yet,” Schütz says. “For the current solution landscape, we at HBK follow the path of parallel connectivity.”

“The gap between IT and OT is getting closer, leading to a stronger focus on cybersecurity and edge computing, and wireless technology is becoming more prominent,” says Maharajan.

Pyciak also predicts that industrial networking will shift toward converged IT/OT systems. “More and more systems are becoming interconnected, and companies want access to real-time production data, monitoring and statistics,” he adds.

Schütz agrees that industry is pushing for the convergence of OT/IT communication over the same network. New technology like time-sensitive networking will help that become a reality. He recommends watching closely the initiatives supporting communication protocol standards with wide industry support. “From our viewpoint the current proprietary solutions have their justification, and this will be the case for the mid-term future. In the long-term there will be a shift to open standards allowing for OT/IT communication on single networks,” he adds.

Industrial network best practices

Device management foundation: “There’s a lot of work and a lot of conversation today about the IT/OT integration,” says Steve Biegacki, strategic integration committee (SIC) chairman at FieldComm Group. “When you talk to IT companies and ask, ‘Where do you think the data for that machine comes from?’ they look at you and go, ‘It kind of comes from the controllers that are in there.’ I say, ‘Where do you think the controller information actually generates from?’ The controller only knows what it sees in the sensors and actuators on the machine. It’s not out of the ether someplace.”

Cybersecurity priority: “It’s challenging to keep pace in the ever-changing cat-and-mouse game of cybersecurity,” says Aaron Dahlen, applications engineer, DigiKey. “When is the last time you updated the virus definition on your PLC? Popular PLCs are targeted. Even the white hats learn the names of PLC families as they practice launching and defending attacks against critical infrastructure.”

Choose your PLC wisely: “When it comes to building or upgrading an automated system, the selection of network devices and I/O components plays a crucial role,” says Bill Nyback, senior application engineer at ABB. “Choosing components that integrate smoothly with your existing controllers and automation architecture can significantly reduce the time needed for setup and programming.”

Scope and scale for expansion: “Certain network topologies lend themselves better to expansion than others,” says Natalie Co, product engineer at Misumi. “Bus topology is cheap and easy to set up but has limited scalability due to data degradation as it travels down a single line. This thought process also applies to the physical hardware devices. Unless you’re willing to make semi-regular component upgrades, it may be worth investing in more capable components from the start.”

Network topology map: “Start with a network topology map to evaluate integration points,” says Felipe Costa, senior networking and cybersecurity product manager at Moxa. “Compatibility with existing industrial protocols, such as Profinet, EtherNet/IP and Modbus, and the system’s deterministic performance requirements are also critical.”

Modular software architecture: “A modular software architecture allows individual components or functions to be developed, tested and updated independently,” says Azad Jafari, I/O product manager at Beckhoff. “This not only simplifies maintenance and troubleshooting, but also makes it easier to adapt or scale the system in the future without disrupting the entire network. The software must support the same industrial communication protocols used in the existing network and be capable of interacting with devices using these protocols reliably and efficiently.”

Supply chain diversification: “If supply chain teams are all at risk for systematic disruptions, then focusing on unsystematic risks yields a more robust position,” says Thomas Kuckhoff, automation product manager at Omron. “Diversifying the number of sources of replacement sensors, light curtains or servo motors allows supply chain teams to take the risk out of one manufacturer having delivery issues.”

Expense of backward compatibility: “Availability of machines that support the latest technologies but also maintain backward compatibility with legacy systems is a goal organizations strive for, but it is rarely achieved,” says Dave Boldt, product manager lead of factory automation at Pepperl+Fuchs. “Designing new devices with backward-compatible protocols and physical interface capabilities comes at the price of significant complexity and cost.”

Addressing schema: “Any I/O needed to interconnect the machinery should be consistent,” says Joe Biondo, strategic marketing manager for OEMs and machine builders at Rockwell Automation. “The equipment designer should allocate a given number of I/O to machine interconnectivity, using the same physical addressing schema.”

Ease of total ownership: “Teams should focus on ease of total ownership,” says Aaron Crews, global director of modernization solutions at Emerson. “The more complex the integration, the more expert skillset it will be necessary to have to maintain automation technologies.”

Unity gaming engine powers digital twin

Platform allows real-time data to flow from industrial manufacturing equipment

by Cole Switzer, E Tech Group

IN THE FIFTH VOLUME of his famous series In Search of Lost Time, published in 1923, Marcel Proust wrote: “The real voyage of discovery consists not in seeking new landscapes, but in having new eyes.”

Proust wanted readers to understand that breakthroughs often arise from reimagining how existing tools and knowledge can be applied in different contexts. More than a century later, his insight remains highly relevant in industrial manufacturing, where the continued pursuit of increased operational efficiency perpetually drives innovation.

A project completed for a manufacturer recently demonstrated the merit of Proust’s principle. A remarkable advantage was obtained from an unexpected tool outside the traditional industrial toolkit for digital twin implementation. Rather than relying on industry-standard simulation software, E Tech Group selected Unity, a video game development platform known for its advanced 3D graphics, powerful physics simulation engine and cross-platform deployment capabilities.

Over six years of implementation, these Unity-based digital twins have evolved from a novel solution into a mission-critical system. They reduce downtime through simplified troubleshooting and improve operator training, and they have become so integral that operators access them as part of their regular workflow. The next iteration will introduce two-way communication with physical equipment to enhance existing line technologies. It will also incorporate machine-learning-based analytics for advanced predictive maintenance.

What is a digital twin?

A basic digital twin visualizes the behavior of the physical system it represents in real time: a virtual display of what’s happening right now with the real equipment on the manufacturing floor. From a high level, data from sensors and other equipment in live machinery is continually collected. This telemetry information is then sent to and visually interpreted by a virtual representation of each component of the system in the simulation environment. As a result, the twin’s motion and state are directly tied to that of the equipment it represents.
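The pattern in which telemetry drives the twin can be reduced to a lookup and an update. The sketch below is written in Python for brevity, although Unity scripts are normally written in C#. It assumes hypothetical JSON messages that name a component along with its position and running state. This is not E Tech Group's message format.

```python
import json
from dataclasses import dataclass

@dataclass
class TwinComponent:
    """Virtual stand-in for one physical component (hypothetical fields)."""
    name: str
    position_mm: float = 0.0
    running: bool = False

def apply_telemetry(components, message: str) -> bool:
    """Update the matching virtual component from one JSON telemetry message."""
    data = json.loads(message)
    comp = components.get(data.get("component"))
    if comp is None:
        return False  # telemetry for a component the twin does not model
    comp.position_mm = float(data.get("position_mm", comp.position_mm))
    comp.running = bool(data.get("running", comp.running))
    return True

# Usage: each incoming message moves the matching virtual part.
twin = {"conveyor2": TwinComponent("conveyor2")}
apply_telemetry(twin, '{"component": "conveyor2", "position_mm": 350, "running": true}')
print(twin["conveyor2"])
```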

More generally speaking, a digital twin can be connected to any data source that describes equipment operation. This broader capability opens the door for other off-line uses of the digital twin application:

• Operator training: Past failure events recorded in a time-series database can be replayed for training purposes. Simulated failure scenarios can be used similarly.

• Software virtual commissioning: A physics-based engine can simulate system behavior. By integrating PLC logic and other control sequences, the application can be used to virtually debug programming logic and verify system performance before deployment.

• System optimization: CAD models, physics-based simulations and performance data can be used to optimize the system layout for better efficiency and throughput.

• Predictive maintenance: Historical data can be modeled using machine learning to detect anomalies and predict upcoming system failures.

• Process control: An advanced digital twin application can incorporate two-way communication with the equipment allowing it to send control commands and dynamically adjust system parameters based on real-time data.
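The operator-training use relies on replaying recorded data with its original timing. Here is a minimal sketch. It assumes the history has already been queried from the time-series database into (timestamp, payload) pairs, and that apply is whatever updates the twin. The speed factor for fast-forwarding is an added assumption. The sleep function is injectable so the timing can be tested.

```python
import time

def replay(samples, apply, speed=1.0, sleep=time.sleep):
    """Replay (timestamp_s, payload) samples in time order, preserving their spacing.

    speed > 1 plays back faster; apply receives each payload as if it arrived live.
    """
    ordered = sorted(samples, key=lambda s: s[0])
    if not ordered:
        return
    prev_t = ordered[0][0]
    for t, payload in ordered:
        sleep(max(0.0, (t - prev_t) / speed))  # wait out the recorded gap
        apply(payload)
        prev_t = t

# Usage: play back a short recorded failure sequence at double speed.
replay([(0.0, "jam detected"), (0.5, "conveyor stopped"), (2.0, "reset")], print, speed=2.0)
```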

Unity as the core of a real-time digital twin

For a digital twin model to realistically express the state of operation and motion of a physical system, it must receive accurate datasets that describe its next state. While an active digital twin obtains this information from the physical equipment to which it’s connected, offline applications of the twin require a different source, and the accuracy of its motion depends on the quality of the predictive model or simulation engine. Unity is exceptionally well-suited for this task, as its mature physics engine can generate the next states by applying classical mechanics principles, including kinematics, dynamics and force interactions, to simulate real-world behavior. For instance, it can accurately simulate the effects of gravity, friction, collisions and inertia. The physics engine enables Unity to predict real-world behavior, which expands the digital twin’s usefulness to offline applications including development, testing and optimization.

Figure 1: This diagram shows how data flows from the production line into digital twin clients. Data from production floor devices is collected through standard industrial protocols, such as OPC UA and then structured and contextualized by the unified namespace (UNS). A message queuing telemetry transport (MQTT) broker distributes live data from the UNS to the Unity-based digital twin clients on compatible devices for accurate visualization of the production line. Time-series and SQL databases store historical data that Unity can access as needed. The supervisory control and data acquisition (SCADA) system obtains the same data as the UNS in parallel and operates on it before supplying its data to the UNS, offering additional process visibility and control. Cloud-based services like Amazon Web Services or Azure can be used to host the UNS, databases and other backend components so that Unity clients can access the digital twin securely over the internet.
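The sketch below makes the physics engine's job of generating next states concrete. It advances a box dropped onto a moving conveyor belt and uses kinetic friction to accelerate it up to belt speed. This is a deliberately simplified semi-implicit Euler integrator, not Unity's physics code; Unity's 3D physics is built on NVIDIA PhysX. The 0.02-s step mirrors Unity's default fixed timestep, and the belt speed and friction coefficient are illustrative.

```python
G = 9.81  # gravitational acceleration, m/s^2

def step(pos, vel, belt_speed, mu, dt=0.02):
    """Advance a box on a moving belt by one fixed time step (semi-implicit Euler).

    Kinetic friction (mu * g) accelerates the box toward the belt speed
    but is clamped so it never pushes the box past that speed.
    """
    slip = belt_speed - vel
    max_dv = mu * G * dt
    vel += max(-max_dv, min(max_dv, slip))
    pos += vel * dt  # position uses the updated velocity (semi-implicit Euler)
    return pos, vel

# Usage: a box dropped at rest onto a 0.5-m/s belt, simulated for 1 s (50 steps).
pos, vel = 0.0, 0.0
for _ in range(50):
    pos, vel = step(pos, vel, belt_speed=0.5, mu=0.5)
print(round(pos, 3), round(vel, 3))
```

With these numbers, friction brings the box up to belt speed within about 0.12 s, after which it simply rides the belt. The same kind of stepping, run with far richer models, is what lets a twin predict motion when no live data is available.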

As a game development platform, Unity excels at graphics rendering and scripting capabilities, providing a robust 3D environment for visualizing virtual models of real components. Within Unity, a user can maneuver freely in virtual 3D space, zooming in and out with ease around moving components. For troubleshooting purposes, this movement capability allows operators and support engineers to visually explore the state of the digital twin in real-time, aiding in the identification of production issues as they arise. Finally, Unity is platform-agnostic, allowing digital twin applications to be deployed on various devices including PCs, laptops and mobile devices.

Data flow and real-time considerations

For a Unity-based digital twin to reflect the current state of physical equipment, there must be a clear path for data to flow from the production line into Unity. Real-world production line data can either be fed directly into the model or be routed through a database or a unified namespace (UNS), where it is then accessed or pushed to Unity to drive the digital twin.

Direct data feed—live data

In some basic implementations, sensor data from the production line and programmable logic controllers (PLCs) can be sent straight to Unity without first being stored in a database. This method often uses industrial protocols like OPC UA, message queuing telemetry transport (MQTT) or EtherNet/IP to transmit the data in real time. Unity then updates its 3D simulation based on each new data packet, creating a highly responsive twin (Figure 1). However, a direct feed can be vulnerable to temporary network delays or downtime. If the network link goes down, the twin might not receive any new updates until the connection is restored.


Database-driven feeds

A typical implementation is to send sensor and control data to a high-performance database before Unity retrieves it. E Tech Group’s development team uses InfluxDB for this purpose. This near real-time approach involves time-series storage of data at configurable intervals—for example, every 100 milliseconds or 1 second. Data can either be pulled by Unity clients or pushed via an MQTT broker. E Tech Group prefers the latter and uses HiveMQ for this purpose. An MQTT broker implementation reduces network load since it does not require Unity to poll for updates. Rather, data is delivered to Unity as soon as it changes, so that the twin is fully synchronized with the current state of the real equipment.
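The push model favored here sends data only when something changes. Below is a minimal sketch of that report-by-exception logic. It assumes a publish callable that, in practice, would wrap an MQTT client's publish method, such as one connected to a HiveMQ broker. It also assumes an optional deadband so that sensor noise does not count as a change. The deadband and class name are assumptions, not details from the project.

```python
class ChangePublisher:
    """Publish a tag only when it changes by more than a deadband (report by exception)."""

    def __init__(self, publish, deadband=0.0):
        self.publish = publish    # hypothetical stand-in for an MQTT client's publish call
        self.deadband = deadband  # assumed noise threshold, in the tag's engineering units
        self.last = {}

    def update(self, topic, value) -> bool:
        prev = self.last.get(topic)
        if prev is not None and abs(value - prev) <= self.deadband:
            return False  # no meaningful change: nothing is sent, so no network load
        self.last[topic] = value
        self.publish(topic, value)
        return True

# Usage: only the first reading and the real change reach the subscribers.
pub = ChangePublisher(lambda t, v: print("publish", t, v), deadband=0.5)
for reading in (1.0, 1.2, 1.1, 1.7):
    pub.update("line3/conveyor2/speed", reading)
```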

While a database-driven approach may introduce a small delay—milliseconds to seconds—it offers significant advantages in comparison to a direct data feed implementation:

• Data buffering and reliability: If a connection to Unity is lost, the database still collects the data for historical record and picks up where it left off once Unity reconnects.

• Historical analysis: Storing data chronologically enables engineers to look back at previous events and trends.

• Scalability: Multiple Unity instances or other applications can simultaneously access the same data source, supporting enterprise deployments.
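The buffering benefit can be illustrated with a simple store-and-forward queue. This is a hedged sketch, not a description of how InfluxDB or the project works internally. It assumes a send callable that raises ConnectionError while the link is down. Samples are kept in order until delivery succeeds, and the oldest samples are dropped only if an outage outlasts the buffer size.

```python
from collections import deque

class StoreAndForward:
    """Keep samples while the consumer is offline and deliver them in order on reconnect."""

    def __init__(self, send, maxlen=10_000):
        self.send = send  # assumed to raise ConnectionError while the link is down
        self.pending = deque(maxlen=maxlen)  # oldest samples drop if an outage outlasts this

    def push(self, sample):
        self.pending.append(sample)
        self.flush()

    def flush(self) -> bool:
        while self.pending:
            try:
                self.send(self.pending[0])
            except ConnectionError:
                return False  # still offline: keep everything queued
            self.pending.popleft()  # remove only after a successful send
        return True
```

Calling flush() again after the connection returns delivers the backlog, which mirrors the "picks up where it left off" behavior described above.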

Unified namespace (UNS)

A more recent innovative connection technique involves the utilization of a unified namespace (UNS), which provides a centralized, standardized way of collecting and contextualizing data from various sources. In digital twin implementations, the UNS can serve as a structured data hub, feeding real-time data from production systems into downstream applications, including SQL, InfluxDB and the Unity-based digital twin itself.

The UNS replaces multiple direct connections, which reduces network congestion, improves data consistency and ensures the digital twin receives accurate, up-to-date system information. Additionally, it simplifies the integration of new data sources or data consumers over time. As production systems change, new machines, sensors or analytics tools can be added to the UNS, allowing the digital twin to easily maintain an accurate representation of the physical environment.
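In practice, a UNS is often organized as a hierarchy of MQTT topics, and a twin client subscribes with topic filters instead of connecting to each machine. The sketch below implements the MQTT wildcard rules so the matching is easy to see: '+' matches exactly one level, and '#' matches any remaining levels. The enterprise/site/area/line topic names are hypothetical. The special handling of topics that begin with '$' is omitted.

```python
def topic_matches(filt: str, topic: str) -> bool:
    """MQTT topic-filter match: '+' matches one level; '#' (last level only) matches the rest.

    The rule that wildcards at the first level do not match '$'-prefixed topics is omitted.
    """
    f_parts = filt.split("/")
    t_parts = topic.split("/")
    for i, f in enumerate(f_parts):
        if f == "#":
            return True  # also matches the parent level itself, e.g. 'a/#' matches 'a'
        if i >= len(t_parts):
            return False
        if f != "+" and f != t_parts[i]:
            return False
    return len(f_parts) == len(t_parts)

# Usage: one subscription covers everything on line 3, whatever area it belongs to.
print(topic_matches("acme/plant1/+/line3/#", "acme/plant1/packaging/line3/conveyor2/speed"))
```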

Regardless of which method is chosen, the goal is to keep the digital twin synchronized with the physical equipment.

In true real-time implementations, Unity reads data as soon as it becomes available, matching the physical system’s behavior with only minor latency. In near real-time systems, buffering may mean the twin is a fraction of a second behind live events, which is typically acceptable for most troubleshooting and monitoring needs.
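One common way to keep a near real-time twin moving smoothly despite buffered updates is to render slightly in the past and interpolate between the samples on either side. The article does not say the project uses this technique. The sketch below simply assumes samples arrive as sorted (time, value) pairs. It uses a 100-ms render delay to match the example storage interval mentioned earlier.

```python
from bisect import bisect_left

def sample_at(samples, t):
    """Linearly interpolate a value at time t from sorted (time_s, value) samples."""
    times = [s[0] for s in samples]
    i = bisect_left(times, t)
    if i == 0:
        return samples[0][1]
    if i == len(samples):
        return samples[-1][1]  # no newer data yet: hold the last value
    (t0, v0), (t1, v1) = samples[i - 1], samples[i]
    return v0 + (v1 - v0) * (t - t0) / (t1 - t0)

def render_value(samples, now, delay=0.1):
    """Show the state as of (now - delay), so there are usually samples on both sides to blend."""
    return sample_at(samples, now - delay)

# Usage: 100-ms samples of a conveyor position; the twin renders 100 ms behind live.
history = [(0.0, 0.0), (0.1, 10.0), (0.2, 20.0)]
print(render_value(history, 0.25))
```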

CAD models as the basis of virtual representation

To create a fully visualized digital twin, all elements of the real system must be represented within Unity’s virtual environment, including the following:

• Physical components: Robots, conveyors, machinery, safety features and general-purpose areas. The goal is to accurately represent the layout of the physical system.

• Control systems: Modeling all control and automation logic—PLCs, human-machine interfaces (HMIs) and supervisory control and data acquisition (SCADA) systems—within the digital twin will ensure it replicates the behavior of the physical control system.

A CAD file can be used as the basis of each component in the digital twin. CAD files can be imported into a Unity project, although they must first be converted into a supported file format. Unity Pixyz is a plugin tool that accomplishes this task for most proprietary CAD file formats, including SolidWorks, AutoCAD and CATIA. Within Unity, each component representation should be configured to connect to the appropriate data stream from its associated live equipment.

As an extension, virtual sensors can also be incorporated into the digital twin as an innovative means of capturing real-time data for monitoring, analysis and production optimization.
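A virtual sensor can be as simple as a test against the simulated positions of objects. Here is a minimal sketch of a virtual photo eye. It assumes boxes are described by their front edge and length along the conveyor axis, in meters. The function names and geometry are hypothetical.

```python
def photo_eye(beam_x_m, boxes):
    """Virtual photo eye: blocked if any simulated box spans the beam position.

    boxes: iterable of (front_x_m, length_m) along the conveyor axis.
    """
    return any(front - length <= beam_x_m <= front for front, length in boxes)

def count_rising_edges(states):
    """Count passing objects as False-to-True transitions of the beam state."""
    return sum(1 for prev, cur in zip(states, states[1:]) if cur and not prev)

# Usage: a 0.4-m box whose front edge is at 1.2 m blocks a beam at 1.0 m.
print(photo_eye(1.0, [(1.2, 0.4)]))
```

Logging count_rising_edges over the simulated beam states gives a virtual part count that can be compared with the real sensor's count.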

System performance requirements

The following list outlines the performance requirements for different components of the digital twin system.

• Control system: The actual equipment, including PLCs, must be connected to a reliable OT network designed for high-speed data transfer. Legacy controls may need to be upgraded to meet the processing demands necessary for running real-time control operations and transmitting data to the database.

• Simulation: Unity’s physics engine requires significant computational resources for accurate and timely simulation. This includes robust central processing unit (CPU) and graphics processing unit (GPU) capabilities to handle complex, time-sensitive calculations without lag.

• Database server: The server should be fast and reliable, optimized for handling large volumes of time-stamped data. InfluxDB is recommended for its efficiency in writing and querying large datasets, which is important for maintaining high performance in industrial settings.

• Visualizing clients: The visualizing clients can run on a wide range of laptops or devices, as long as they have sufficient graphics and processing power. Each client establishes a connection to the system to display real-time data and view the digital twin.

• Network and back-end infrastructure: Because multiple clients may connect simultaneously, the network and back-end infrastructure must be designed to support concurrent sessions without performance issues. E Tech Group uses cloud hosting solutions including Microsoft Azure and Amazon Web Services (AWS) for this purpose because they take much of the networking, security and IT maintenance responsibility off the manufacturer. This implementation also makes it easy to scale resources as demands change, ensures multiple users can connect concurrently without performance drops and allows users to securely connect to the digital twin application over the internet.
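For the database tier, InfluxDB accepts writes in its text-based line protocol: a measurement name, optional comma-separated tags, one or more fields and a timestamp (nanoseconds by default). The minimal sketch below formats one time-stamped reading as a line-protocol record. The measurement, tag and field names are hypothetical, and in practice an official InfluxDB client library would usually handle this formatting and the write itself.

```python
def _esc(s: str, chars: str) -> str:
    """Backslash-escape each character in `chars`."""
    for c in chars:
        s = s.replace(c, "\\" + c)
    return s


def _field_value(v) -> str:
    if isinstance(v, bool):        # check bool first: bool is a subclass of int
        return "true" if v else "false"
    if isinstance(v, int):
        return f"{v}i"             # integer fields carry an 'i' suffix
    if isinstance(v, float):
        return repr(v)
    s = str(v).replace("\\", "\\\\").replace('"', '\\"')
    return f'"{s}"'                # string fields are double-quoted


def to_line_protocol(measurement: str, tags: dict, fields: dict, ts_ns: int) -> str:
    # Measurement names escape commas and spaces.
    parts = [_esc(measurement, ", ")]
    # Tag keys/values escape commas, equals signs and spaces;
    # tags are sorted by key, as InfluxDB recommends for write performance.
    for k in sorted(tags):
        parts.append(f"{_esc(k, ',= ')}={_esc(str(tags[k]), ',= ')}")
    head = ",".join(parts)
    body = ",".join(f"{_esc(k, ',= ')}={_field_value(v)}" for k, v in fields.items())
    return f"{head} {body} {ts_ns}"
```

Calling `to_line_protocol("conveyor", {"line": "L1", "site": "Plant A"}, {"speed": 0.5, "count": 3}, 1700000000000000000)` yields `conveyor,line=L1,site=Plant\ A speed=0.5,count=3i 1700000000000000000`.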

Digital twin implementation for a manufacturer

The first Unity-based digital twin was introduced six years ago to visualize a manufacturer’s magnetic conveyor-based production lines and integrate with a specific PLC. Although this initial design remains in active use at multiple facilities worldwide, the latest version has been upgraded to be control-system agnostic. This means it can gather data from any source, including various PLCs, program architectures, computers, application programming interfaces (APIs) or databases. Each data stream is mapped to its corresponding component within the digital model, ensuring accurate visualization. The current revision is already running on nine major production lines, with three more in development.

Results and ongoing improvements

The combination of Unity’s 3D visualization and movement capabilities with its mature physics engine has resulted in many improvements to the manufacturer’s production processes.

Production lines are easier to understand and operate

Because the digital twin visually displays the equipment’s current state, operators and engineers alike find the line inherently easier to understand and troubleshoot. In addition, the digital twin has been programmed to clearly communicate the actions required to address an issue. As a result, operators better understand the line and can often identify and address issues themselves without additional support. Production lines with digital twins have shown efficiency improvements because they remain operational a greater percentage of the time than lines without them.

Remote monitoring and troubleshooting

A Unity-based digital twin simplifies remote monitoring and troubleshooting. Each digital twin is essentially a live feed of the current state of its line, so when an issue arises, engineers viewing the twin can quickly detect and mitigate it. In fact, this manufacturer has created a centralized control room that displays the digital twins of all its major production lines so that support engineers can monitor them from a single location. It’s not uncommon for the support engineers to notice an issue before the line operators do; when this happens, they phone the operators to alert them. The digital twins have produced substantial savings through reduced downtime and lower travel costs for support engineers.

A second server for offline applications

Once a digital twin is fully built and connected to a telemetry data source, a second copy of the twin can be implemented to function offline, fully disconnected from the live equipment. In this mode, Unity’s sophisticated physics engine generates the data inputs that determine the system’s motions, based on realistic modeling of classical mechanics. The current revision of the manufacturer’s digital twin is used for several offline applications.

• Software virtual commissioning: The manufacturer is using the offline digital twin to debug control programs before testing them on the physical equipment.

• System design and optimization: The manufacturer is using the offline digital twin for line design including optimizing the physical layout to maximize production rate, minimize overall footprint and select best-suited equipment.

• Training simulation: This manufacturer uses the offline digital twin for training purposes. By recreating failure scenarios, operators can interact with the system to learn how to address them.
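Virtual commissioning pairs the control program under test with a simulated plant, scanned in a loop: read the virtual sensors, run one controller scan, then advance the plant model. In the manufacturer’s case the plant model is the Unity physics simulation; the sketch below substitutes a simple constant-speed kinematic conveyor and a hypothetical stop-at-photoeye routine purely to show that loop structure. All names and values are assumptions.

```python
def control_logic(photoeye: bool) -> bool:
    """Hypothetical control routine under test: run the motor until the eye sees a part."""
    return not photoeye


def simulate(start_m: float, eye_m: float, speed_mps: float, dt_s: float, steps: int):
    """Step a simple kinematic conveyor model against the control logic.

    Returns (final_position_m, motor_on) after `steps` scans.
    """
    pos = start_m
    motor = False
    for _ in range(steps):
        photoeye = pos >= eye_m          # virtual sensor read from the modeled state
        motor = control_logic(photoeye)  # one controller scan
        if motor:
            pos += speed_mps * dt_s      # plant responds: constant-speed motion
    return pos, motor
```

Running `simulate(0.0, 1.0, 0.5, 0.01, 1000)` should leave the part stopped just past the eye with the motor off; a logic error, such as an inverted photoeye check, would show up here before any code reaches the physical line.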

Next-generation digital twins will support direct process control

The next revision of the digital twin, currently in development for the manufacturer, will introduce the following additional functionality:

• Two-directional communication allowing the digital twin to control the line: While the current revision of the digital twin only reads and interprets data from the line, the next revision will allow the digital twin model to send control information directly to the line, offering increased flexibility for production line control.

• Failure prevention and analysis: Machine learning algorithms can be applied to historical Internet of Things (IoT) data to analyze trends over time and train a model. Initially, this model can help identify and diagnose points of failure. As it continues to learn, the model can be set up to alert engineers before a critical failure occurs, allowing them to take immediate action. Once two-directional communication is completed in the next revision, plans call for allowing a trained model to adjust production line parameters, for instance conveyor speed, within learned acceptable ranges.
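The model type is not specified, so as a deliberately simple stand-in for a trained model, the sketch below learns an acceptable band from historical readings as the mean plus or minus k standard deviations, flags readings outside that band and clamps a proposed setpoint, such as conveyor speed, to it. A real machine learning model would replace `learn_range`; the function names and the default `k=3.0` are assumptions.

```python
from statistics import mean, stdev


def learn_range(history, k=3.0):
    """Derive an acceptable band as mean +/- k standard deviations (k is assumed)."""
    m, s = mean(history), stdev(history)
    return m - k * s, m + k * s


def is_anomalous(value, band):
    """Flag a reading that falls outside the learned band."""
    lo, hi = band
    return not (lo <= value <= hi)


def clamp_setpoint(proposed, band):
    """Keep a model-proposed setpoint (e.g., conveyor speed) inside the band."""
    lo, hi = band
    return min(max(proposed, lo), hi)
```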

Final note

As manufacturers continue seeking innovative ways to boost efficiency and reduce costs, digital twin technology is emerging as a powerful driver of industry growth. For this manufacturer, the unconventional decision to adopt Unity, a platform originally designed for video game development, has delivered significant gains in overall equipment effectiveness while opening new possibilities for future advancements. With each revision, the digital twin becomes more mission-critical to daily operations, and it is advancing toward machine learning-based production line control and predictive analytics. It shows that breakthroughs are often found not in entirely new tools, but in reimagining how existing ones can be applied in transformative ways.

Cole Switzer is lead software engineer at E Tech Group. Contact him at cswitzer@etechgroup.com.

Protect control circuits and OT data

Mitigate damage from sudden voltage increases

by Larry Stepniak, Flint Group

EARTH GROUND, HIGH-CAPACITY gas discharge tubes, clamping diodes and metal oxide varistors can keep OT data safe from voltage spikes. And programmable logic controller (PLC) outputs can be shielded from induced voltage spikes and component failure. Sudden increases in voltage can come from different causes, and there’s more than one way to mitigate the risks.

How to protect networks against transient spikes

Operational-technology (OT) networking connects the hardware that monitors or controls devices and processes in an industrial plant. This hardware can be connected in different ways, but most often it uses some form of industrial Ethernet, such as Profinet, Modbus TCP or EtherNet/IP, which carries the Common Industrial Protocol (CIP). The advantage of Ethernet-based systems is that they share the cables, routers and switches used in information-technology (IT) infrastructure, although OT-specific hardware may be ruggedized to work in harsher industrial environments.

There is plenty of information available to help protect your OT network against cyberattacks and hacking, but how do you protect it against transient spikes?

Transient spikes are very short bursts of voltage, ranging from a few volts to thousands of volts, induced on a signal cable. They last no more than a few milliseconds, yet they can damage circuits and components. Communication cables and analog signal cables are especially vulnerable.

There are several methods to discharge or dissipate transients. Most Ethernet switches have built-in transient protection, but this protection relies on a proper path to Earth ground; if the ground is not connected, the device is not protected. On an automation network, the most basic form of protection is therefore to make sure every network switch is Earth grounded. A second form of protection for the network data lines is to install data line transient suppressors. These devices are normally mounted and grounded in the cabinet near the network switch or other network device.

The suppressors are placed in series with the network cable via onboard network-in and network-out connections. They may use a high-capacity gas discharge tube, clamping diodes, metal oxide varistors (MOVs) or a combination of these devices to dissipate the voltage spike and prevent damage to connected equipment.

A gas discharge tube (GDT) consists of two electrodes in a sealed glass tube filled with a gas mixture. When a high-voltage spike appears across the device, the gas ionizes and becomes conductive, providing a controlled discharge path for the spike. For their small size, GDTs can handle a surprisingly large amount of current. They are typically used on higher-frequency signal lines, so they work well for protecting network data cables and systems.

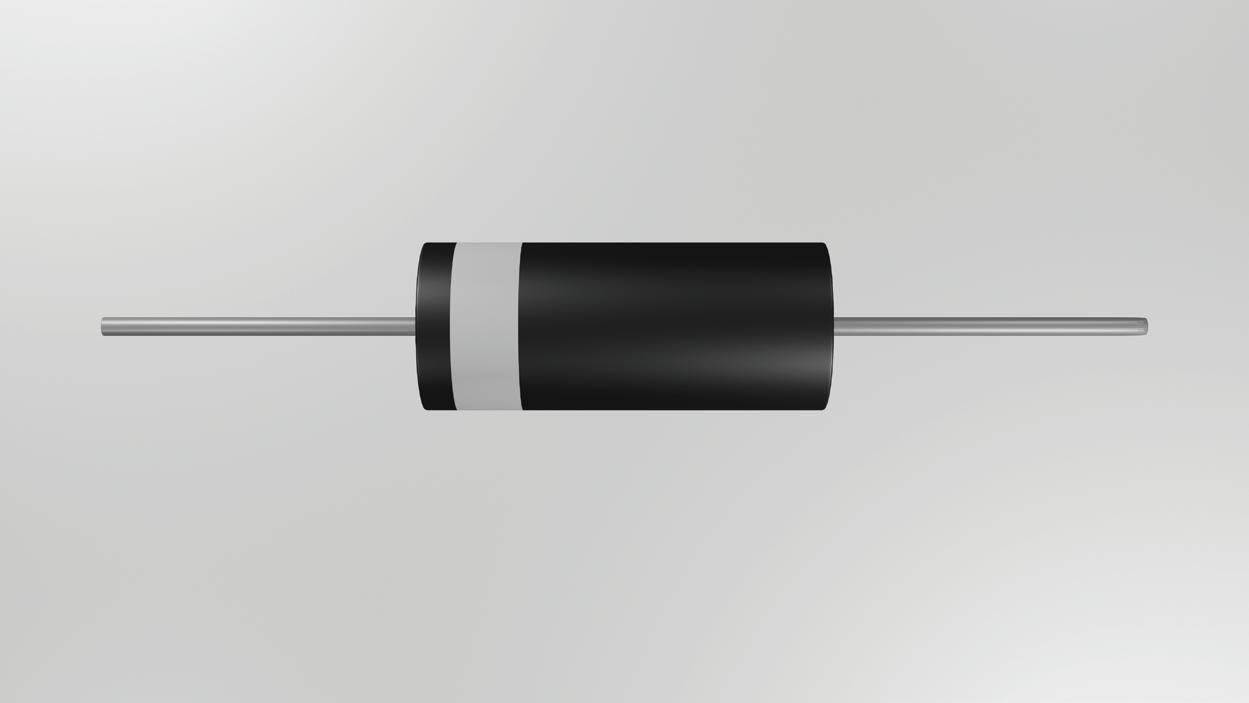

Clamping diodes, specifically Zener diodes, are used to limit voltage levels. They are particularly useful on analog signal lines, such as the 4-20 mA loops used for control or instrumentation. Two 47-V Zener diodes connected cathode-to-cathode and placed across the signal and common terminals of a PLC analog input module will protect the module and any connected devices from transient spikes. Zener diodes have a specified breakdown voltage (Vz), and the voltage across the line is “clamped” to about this level.
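With two Zeners in series and opposed, a transient of either polarity drives one diode into breakdown while the other conducts in the forward direction, so the line is limited to roughly the Zener voltage plus one forward drop. The idealized sketch below models that behavior; it ignores the diodes’ dynamic resistance and response time, and the 0.7 V forward drop is an assumed silicon value.

```python
def back_to_back_zener_clamp(v_in, vz=47.0, vf=0.7):
    """Idealized line voltage with two cathode-to-cathode Zeners across it.

    In either polarity one diode is in breakdown (vz) and the other is
    forward biased (vf), so the line is limited to about +/-(vz + vf).
    """
    limit = vz + vf
    return max(-limit, min(v_in, limit))
```

A normal 24 V loop signal passes unchanged, while a 1,000 V spike of either polarity is limited to about 47.7 V.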

A common method for Ethernet transient protection is a transient-voltage-suppression (TVS) array. These devices usually combine standard and Zener diodes, which shunt surge current to ground to protect data ports. A normal Ethernet signal is just under 2 V, so the TVS must be rated not to conduct below about 2.5 V; otherwise it would clip valid data.
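Choosing a TVS therefore means checking two limits: the part must stay non-conducting at normal signal levels, and its clamping voltage during a surge should stay below what the protected port can withstand. The sketch below filters candidate parts on both. The part names and ratings are hypothetical, and the port withstand voltage is an assumed input that would come from the port’s datasheet.

```python
from dataclasses import dataclass


@dataclass
class TvsPart:
    name: str
    standoff_v: float  # highest voltage at which the part stays non-conducting
    clamp_v: float     # voltage across the part while shunting a surge


def suitable(parts, min_standoff_v=2.5, port_max_v=None):
    """Return parts that will not clip normal signaling and, if a port
    withstand rating is given, clamp below it. Lowest clamp voltage first."""
    ok = [
        p for p in parts
        if p.standoff_v >= min_standoff_v
        and (port_max_v is None or p.clamp_v < port_max_v)
    ]
    return sorted(ok, key=lambda p: p.clamp_v)
```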

Metal oxide varistors also protect against transient voltages. The resistance of an MOV varies with the applied voltage. When the voltage is below the device’s rated voltage, the MOV has a very high resistance and behaves almost like an open circuit. When the rated voltage is exceeded, its resistance drops sharply to a very low value, so the transient current passes through the MOV instead of through the protected device. On network components, MOVs are typically used alongside gas discharge tubes; for general transient protection, an MOV is more often placed across a power supply.
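The sharp drop in resistance is often approximated with a power law, I = I_ref × (V / V_ref)^α, where V_ref is the varistor voltage at a reference current (commonly 1 mA) and α is a large nonlinearity exponent. The sketch below uses that approximation; the 100 V rating and α = 30 are assumed illustrative values rather than data for any particular part, and real MOVs deviate from a single power law at very low and very high currents.

```python
def mov_current(v, v_ref=100.0, i_ref=1e-3, alpha=30.0):
    """Power-law MOV approximation: I = i_ref * (v / v_ref) ** alpha, for v >= 0.

    v_ref is the varistor voltage at i_ref; all defaults are assumed values.
    """
    return i_ref * (v / v_ref) ** alpha


def mov_resistance(v, **kw):
    """Effective resistance V / I at voltage v (v must be > 0)."""
    return v / mov_current(v, **kw)
```

Under these assumptions the effective resistance is about 100 kΩ at the 100 V rating, above 10¹² Ω at half that voltage and below 1 Ω at 1.5 times it, which is why the surge current diverts through the MOV.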

circuit protection

How to choose a diode to mitigate back EMF

A starship moves through the neutral zone on a routine survey mission. The captain and the crew are busy performing their normal duties when, suddenly, sensors detect an enemy vessel approaching with their shields up and weapons locked. The captain quickly reacts with an order to fire an energy pulse at the ship. The lieutenant commander locks on and fires.

Lieutenant commander: “Captain, we have fired an energy pulse at the target. Their shields absorbed the pulse, and that has created a large electromagnetic field. This field has collapsed and has sent the energy pulse back at us.”

Captain: “Shields up! Prepare for impact!” The captain is, of course, correct to raise shields. A returning energy pulse can be very damaging. This is fiction, but it also describes what happens whenever a solenoid on a relay or valve is turned on and off by a controller output. Each time the coil is switched off, it generates a phenomenon called back electromotive force (EMF).

What is a back EMF? A solenoid is a coil of wire that creates a magnetic field whenever current passes through it. The magnetic field then triggers relay contacts or a valve to change state. This field is maintained as long as current flows through the coil.

When the current is switched off, the magnetic field collapses. This collapse induces a reverse polarity voltage in the coil, which is the back EMF. This voltage travels back to the source, which in many cases is the output of a programmable logic controller (PLC) and can damage an unprotected output circuit.
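The size of the spike follows from V = L × di/dt: the faster the output interrupts the coil current, the larger the induced voltage. The sketch below estimates it along with the energy stored in the coil, ½ × L × I². The 0.5 H inductance, 100 mA current and 10 µs switch-off time are assumed values for illustration, not figures for any particular solenoid; in practice the peak is limited by stray capacitance, arcing or the output’s own protection, so treat the result as an upper-bound style estimate.

```python
def back_emf_v(inductance_h, current_a, switch_off_time_s):
    """Estimate |V| = L * di/dt for a current interrupted linearly to zero."""
    return inductance_h * current_a / switch_off_time_s


def coil_energy_j(inductance_h, current_a):
    """Energy stored in the coil's magnetic field: 0.5 * L * I**2."""
    return 0.5 * inductance_h * current_a ** 2
```

With the assumed values, `back_emf_v(0.5, 0.1, 10e-6)` is about 5,000 V from a coil that stores only about 2.5 mJ, far beyond the rating of a typical 24 V DC output.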

The voltage spike from a back EMF can cause damage in several ways. If the output circuit is a set of relay contacts, it can cause these to arc. Arcs create small pits in the surface of the contacts. The pits make the arcing worse and the effect amplifies over many cycles until the contacts no longer make a good connection.

A voltage spike to a transistor output can damage the circuit components by overloading them and causing them to fail. Even if the components are not damaged, the spikes can act as transient noise forced into the circuit and can cause unpredictable behavior.

For a direct current circuit, a diode placed between the positive side of the coil and ground will dissipate the returning voltage spike. Because the voltage spike has the reverse polarity of the source, the diode should be reverse biased during normal operation: the cathode end, usually marked with a ring, is connected to the positive side of the coil, and the anode end is connected to ground. This diode is sometimes called a flyback diode or a snubber.
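When selecting a flyback diode, two checks matter: the diode blocks the supply voltage in normal operation, so its reverse voltage rating must exceed the supply, and at turn-off it briefly carries the full coil current. After turn-off, the coil current decays through the diode with a time constant of roughly L/R (ignoring the diode’s forward drop). The sketch below runs those checks; the function name, the 2× voltage margin and the example coil values are assumptions.

```python
def flyback_check(supply_v, coil_resistance_ohm, coil_inductance_h,
                  diode_vrrm_v, diode_if_a, margin=2.0):
    """Hypothetical selection check for a flyback diode on a DC coil."""
    coil_current_a = supply_v / coil_resistance_ohm
    # Current decays through the diode with tau ~ L / R (diode drop ignored).
    tau_s = coil_inductance_h / coil_resistance_ohm
    return {
        "coil_current_a": coil_current_a,
        "decay_tau_s": tau_s,
        # Diode blocks the supply in normal operation; margin is an assumption.
        "vrrm_ok": diode_vrrm_v >= margin * supply_v,
        # At turn-off the diode briefly carries the full coil current.
        "current_ok": diode_if_a >= coil_current_a,
    }
```

For an assumed 24 V, 240 Ω, 0.5 H coil, the current is 100 mA and the decay time constant is about 2 ms. That slower decay is the usual trade-off with a plain flyback diode: the relay or valve releases somewhat later than it would without one.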