The Power Supplement

Keeping data centers going

DC power in the racks

> Silicon runs on direct current, so why not DC to power racks?

Break up the battery room

> It might make sense to store energy in the racks, instead of the UPS

Abolish all resistance!

> Want no distribution losses? Use superconductors

Powering AI innovation

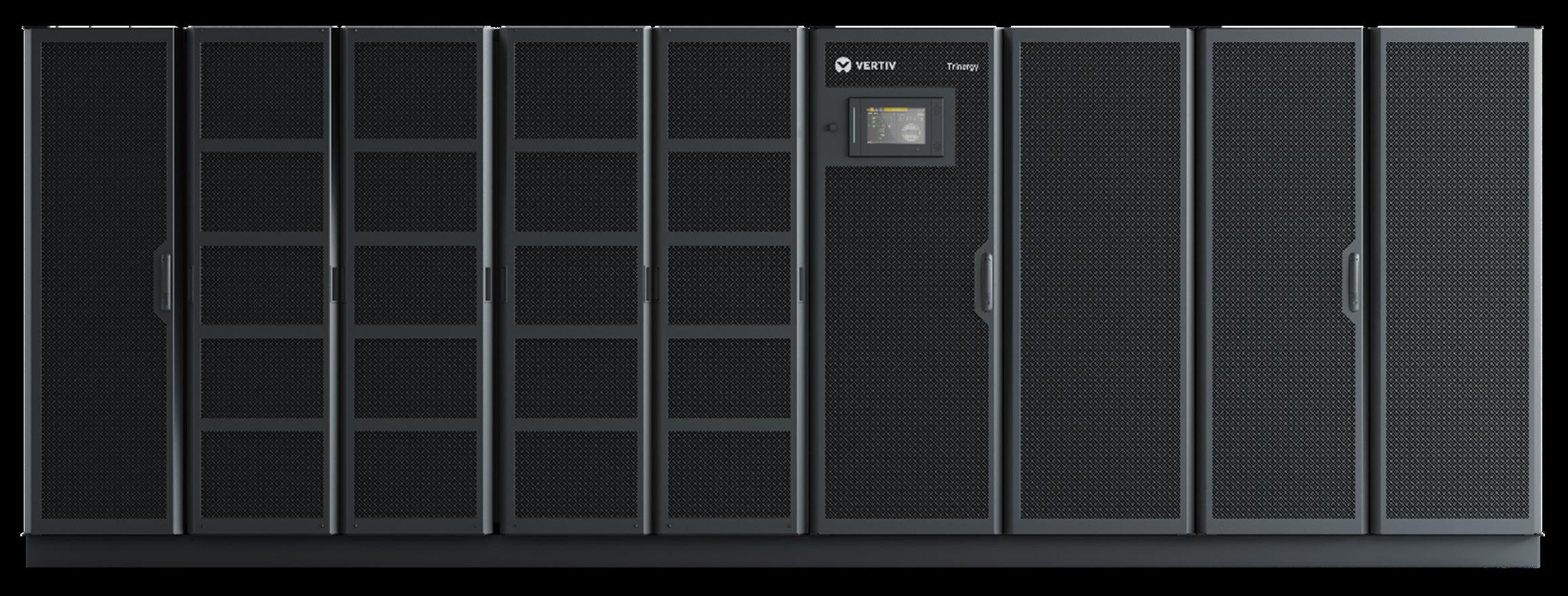

Vertiv™ Trinergy™ is not just a power solution; it is the reliable driving force behind AI workloads. Trinergy™ sets itself apart with its innovative 3-level IGBT technology to meet the volatile demands of next-generation AI systems.

Supercharge your AI capabilities.

Sponsored by

Contents

4. DC power in the racks Silicon runs on direct current, so why not DC to power racks?

7. Break up the battery room Why it might make sense to store energy in the racks, instead of in the UPS

8. Together in Electric Dreams Vertiv Trinergy brings reliable backup power to the AI future

10. DC distribution - the second coming Dismissed in the 2010s, the idea of running direct current through your building is back

13. Abolish all resistance! Why worry about distribution losses, when superconductors have no resistance at all?

War of the currents

In the 1880s, Thomas Edison and George Westinghouse engaged in the War of the Currents - one of the fiercest industrial battles in history, pitting AC against DC.

Edison backed direct current (DC) distribution, and both sides used patent suits and chicanery.

Edison said alternating current was so deadly it should be used for executions by the electric chair, and Westinghouse funded legal appeals in an attempt to prevent his alternating current powering a public execution.

As we know, AC won out. In 1889, Edison's interests merged into what became General Electric, giving the company a strong AC business, and sidelining Edison's opposition.

But DC lingered on. Consolidated Edison switched off its last DC customer in 2007.

By that date, there was already a debate in the data center sector about which system is best.

Chips want DC

The chips inside our servers all natively use DC power. Inside the box, a rectifier turns AC into the direct current which the silicon wants.

Several initiatives have been putting the rectifiers together at one point, and using DC within the rack, with a DC bus bar providing a miniature grid which would have Edison's approval.

The majority of racks in the world still have AC power strips or "power distribution units," but there's a head of steam for DC distribution.

There's still room for argument, however. Hyperscalers and enterprises have proposed both 12V and 48V (p4).

Greater simplification

When you let DC out of the rack, things get contentious.

Pioneers have claimed that DC distribution through the building can save energy that would be wasted in converting back and forth to AC.

But DC is now seen as exotic, and in this iteration of the war of the currents, it is DC that is seen as unsafe.

DC distribution experiments have not gotten far - until now.

Environmentally-conscious data centers are re-examining efficiency, and it's been noticed that a lot of new power sources such as fuel cells inherently provide DC power.

Experimental DC data centers are still running - and new ones are being created (p10).

Invention doesn't stop

But it's more than a rerun of old battles. Modern day Edisons are coming up with alternative ideas.

It's possible to do away with the inefficiencies of the battery room by simply removing it, and installing smaller batteries within the racks.

The idea has been tried out by at least two of the large providers we call "hyperscalers" - and in this supplement we talk to a company that has used the method in many sites (p7).

And beyond that, there are companies and researchers who want to get around the problems of resistance, by simply eliminating it.

Superconductors have zero resistance, at low temperatures.

We close the supplement with a glimpse of a theoretical superconducting facility (p13).

DC power in the racks

Silicon runs on direct current, so why not DC to power racks?

Hyperscalers do it. Some big enterprises do it too. But how widespread is the use of DC power within data centers?

And more importantly, why are organizations using it?

Why data centers didn’t adopt 48V DC

DC racks have a long history - and if you are not currently using DC power distribution, it is pretty certain that you have encountered it in the past, and may still be using it every day - in your phone.

Peter Judge Executive Editor

“Power infrastructure has been somewhat black magic to most organizations,” says My Truong, field CTO at Equinix. “DC power distribution has been around for a long time in telecoms. We've largely forgotten about it inside of the colocation space, but 30 to 50 years ago, telcos were very dominant, and used a ‘48V negative return’ DC design for equipment.”

Telephone exchanges or central offices - the data centers of their day - worked on DC. “A rectifier plant would take AC power, and go rectify that power into DC power that it would distribute in a negative-return style point-to-point DC distribution.”

Those central offices had lead acid batteries for backup and landlines, and the traditional plain old telephone system (POTS) is based on a network of twisted pair wiring that extends right to your home, where it uses a proportion of that DC voltage to ring your phone and carry your voice.

“We actually still see 48V negative return DC style equipment inside Equinix legacy sites and facilities,” says Truong. “We still use -48V rectifier plant to distribute to classic telecom applications inside data centers today, though we don't do much of that anymore.”

When mass electronics emerged in the 1980s, the chips were powered by DC, and tended to operate at fractions of 12V, partly as a legacy of the voltages used in telecoms, and in the automotive industry.

Data centers adopted many things from telecoms, most notably the ubiquitous 19-inch rack, which was standardized by AT&T way back in 1922. Now, those racks hold electronic systems whose guts - the chips inside the servers – fundamentally all run on DC power. But data centers distribute power by AC.

This is because the equipment going into data center racks had been previously designed to plug into AC mains, says Truong: “Commodity PCs and mainframes predominantly used either single phase or three phase power distribution,” he says.

Commodity servers and PCs were installed in data closets powered by the wiring of the office building, and they evolved into data centers: “AC distribution is a direct side effect of that data closet, operating at 120V AC in the Americas.”

Rackmount servers and switches are normally repackaged versions of equipment that contains a power supply unit (PSU), also called a switched-mode power supply (SMPS). This is essentially a rectifier that takes mains AC power and converts it to DC to power the internals of the system. In the 1990s and 2000s, that is the equipment that data centers were built around.

Around that time, telco central offices also moved away from DC, says Truong: “48V was a real telco central office standard and it worked for the time that telco had it, but in the 2000s we saw a natural sunset away from 48V DC, in telco as well. There’s some prevalence of it in telco still but, depending on who you ask, the value isn't there, because we can build reliable AC systems as well.”

AC inefficiencies

In a traditional data center, power is distributed through the building mostly as AC.

Power enters the building as higher-voltage AC, which is stepped down to a voltage that can be safely routed to the server rooms. At the same time, a large part of the power is sent to the Uninterruptible Power Supply (UPS) system where the AC power is converted to DC power to maintain the batteries.

Then it is converted back to AC, and routed to the racks and the IT equipment. Inside each individual switch and server, the PSU or rectifier converts it back to the DC that the electronics wants.

But there are drawbacks to all this. Converting electricity from one form to another always introduces power losses and inefficiency. When electricity is converted from AC to DC and vice versa, some energy is lost. Efficiencies can be as low as 73 percent, as power has to be converted to DC and back multiple times.
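The compounding effect of repeated conversions can be sketched in a few lines. Conversions in series multiply, so several individually respectable stages can still add up to a large overall loss. The stage names and per-stage efficiencies below are illustrative assumptions, not measured figures from any facility; they are chosen only to show how a chain of this kind can end up in the low 70s.

```python
from math import prod


def chain_efficiency(stage_efficiencies):
    """Overall efficiency of conversions in series: the product of the stages."""
    return prod(stage_efficiencies)


# Assumed, illustrative per-stage efficiencies (not measured values).
stages = {
    "transformer step-down": 0.98,
    "UPS rectifier (AC to DC)": 0.94,
    "UPS inverter (DC to AC)": 0.94,
    "server PSU (AC to DC)": 0.90,
    "on-board DC-DC regulators": 0.93,
}

overall = chain_efficiency(stages.values())
print(f"overall efficiency: {overall:.1%}")
```

Removing any one stage from the dictionary shows how much each conversion costs the chain as a whole.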

Also, having a PSU in every box in the rack adds a lot of components, resources, and expense to the system.

Over the years, many people have suggested ways to reduce the number of conversions - usually by some form of DC distribution. Why not convert the AC supply to DC power in one go, and then distribute DC voltages to the racks? That could eliminate inefficiencies, and remove potential points of failure where power is converted.

Efforts to distribute DC through the building have been controversial, as we’ll see in a later feature (p10). DC distribution within the racks means a significant change from traditional IT equipment, but the idea has made strong progress.

Enter the bus bar

In traditional facilities, AC reaches the racks and it is distributed to the servers and switches via bars of electrical sockets into which all the equipment is plugged. These are often referred to as power distribution units (PDUs), but they are basically full-featured versions of the power strips you use at home.

In the 2000s, large players wanted to simplify things and moved towards bus bars inside the racks. Rectifiers at the top of the racks convert the power to DC, and then feed it into single strips of metal which run down the back of the racks.

Servers, switches, and storage can connect directly to the bus bar, and run without the need for any PSU or rectifier inside the boxes, which are fed instead from the rectifiers at the top of the rack. These effectively replace the PSUs in the server boxes, and distribute DC power directly to the electronics inside, using a single strip of metal.

It’s a radical simplification, and one which is easy to do for a hyperscaler or other large user, who specifies multiple full racks of kit at the same time for a homogeneous application.

Battery backup can also be connected to the bus bar in the rack, either next to the power shelf or at the bottom of the rack.

At first, these were 12V bus bars, but that is being re-evaluated, says Truong: “Some hyperscalers started to drive the positive 48V power standard, because there was a recognition - at least, from Google specifically - that 48V power distribution does help in a number of areas.”

“48V power distribution is evolving and becoming very necessary in the ecosystem,” says Truong. “The 12-volt designs are out there but have their own set of challenges on the infrastructure side.”

There’s a basic reason for this: Power is the product of voltage and current (P = V × I), and voltage and current are in turn related through resistance by Ohm’s Law (V = I × R).

In particular, at a higher voltage, less energy is lost in the resistance of the bus bar, because less current is needed to deliver the same power. The power loss in the bus bar is proportional to the square of the current (P = I²R).
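As a rough worked example of that relationship, the sketch below compares a 12V and a 48V bus bar delivering the same power. The rack load and the bus bar resistance are assumed round numbers picked for easy arithmetic, not figures from any rack specification.

```python
def bus_bar_loss(power_w, voltage_v, resistance_ohm):
    """Return (current in amps, resistive loss in watts) for a DC bus bar."""
    current_a = power_w / voltage_v             # from P = V x I
    loss_w = current_a ** 2 * resistance_ohm    # from P = I^2 x R
    return current_a, loss_w


RACK_POWER_W = 12_000          # assumed rack load
BUS_RESISTANCE_OHM = 0.0001    # assumed bus bar resistance (0.1 milliohm)

i12, loss12 = bus_bar_loss(RACK_POWER_W, 12, BUS_RESISTANCE_OHM)
i48, loss48 = bus_bar_loss(RACK_POWER_W, 48, BUS_RESISTANCE_OHM)

print(f"12V: {i12:.0f} A, {loss12:.2f} W lost")
print(f"48V: {i48:.0f} A, {loss48:.2f} W lost")
print(f"current ratio {i12 / i48:.0f}x, loss ratio {loss12 / loss48:.0f}x")
```

Whatever values are assumed for the load and the resistance, quadrupling the voltage cuts the current by a factor of four and the resistive loss by a factor of 16.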

The Open Compute Project (OCP), set up by Facebook to share designs for large data centers between operators and their suppliers, defined rack standards. The first Open Rack designs used Facebook’s 12V DC bus bar design, but version 3 incorporated a 48V design submitted by Google.

Meanwhile, the Open19 group, which emerged from LinkedIn, produced its own rack design with DC distribution, but used point-to-point connectors - these are simplified snap-on connectors going from a power shelf to the servers but are conceptually similar to the connections within an old-school central office.

Racks go to 48V

Like OCP, Open19 started with 12V DC distribution, and then added 48V in its version 2 specification, released in 2023.

Alongside his Equinix role, Truong is chair of SSIA, the body that evolved from Open19, and develops hardware standards within the Linux Foundation. Truong explains the arrival of a 48V Open19 specification: “One of the things that we thought about heavily as we were developing the v2 spec, was the amount of amperage being supplied was maybe a little bit undersized for a lot of the types of workloads that we cared about. So we brought around 48V.”

Also, power bricks in Open19 now have 70 amps of current-carrying capability.

Both schemes put the power supplies (or rectifiers) together, in a single place in the rack, which gives a lot more flexibility: “Instead of having a single one-to-one power supply binding, you can effectively move rectifier plants inside of the rack in the ‘power shelf,’” he says. “We can have lower counts of power supplies for a larger count of IT equipment, and play games inside of the rectifier plants to improve efficiency.”

Power supply units have an efficiency rating, with Titanium being the sought-after highest value, but efficiency depends on how equipment is used. “Even a Titanium power supply is going to have an optimum curve,” says Truong. Aggregating PSUs together means they can be assigned to IT equipment flexibly to keep them in an efficient mode more of the time.
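One way to picture this flexibility is a power shelf that enables only as many rectifiers as it needs to keep each one near its most efficient load point. The sketch below is a hypothetical illustration: the function name, the 3kW rating, and the assumption that efficiency peaks around half load are all invented for the example, and real efficiency curves and redundancy policies vary by product.

```python
import math


def active_rectifiers(load_w, rating_w, installed, target_fraction=0.5):
    """Pick how many rectifiers to enable so each runs near target_fraction
    of its rating, without exceeding the number installed in the shelf."""
    if load_w > installed * rating_w:
        raise ValueError("load exceeds the capacity of the power shelf")
    wanted = math.ceil(load_w / (rating_w * target_fraction))
    return max(1, min(wanted, installed))


# Hypothetical shelf: six 3kW rectifiers shared by the whole rack.
for load_w in (600, 4_500, 17_000):
    n = active_rectifiers(load_w, rating_w=3_000, installed=6)
    per_unit = load_w / (n * 3_000)
    print(f"{load_w} W -> {n} rectifier(s), each at {per_unit:.0%} of rating")
```

With a one-to-one PSU per server, there is no equivalent choice to make: each supply runs at whatever load its own server happens to draw.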

“It gives us flexibility and opportunity to start improving overall designs - when you start pulling the power supply out of the server itself and start allowing it to be dealt with elsewhere.”

Component makers are going along with the idea. For instance, power supply firm Advanced Energy welcomed the inclusion of 48V power shelves: "Traditionally, data center racks have used 12V power shelves, but higher performance compute and storage platforms demand more power, which results in very high current. Moving from 12-volt to 48-volt power distribution reduces the current draw by a factor of four and reduces conduction losses by a factor of 16. This results in significantly better thermal performance, smaller busbars, and increased efficiency."

DC kit is available

One of the things holding back DC racks is lack of knowledge, says Vito Savino of OmniOn Power (see page 7): “One of the barriers to entry for DC in data centers is that most operators are not aware of the fact that all of the IT loads that they currently buy that are AC-fed are also available as a DC-fed option. It surprises some of our customers when we're trying to convert them from AC-fed to DC-fed. But we’ve got the data that shows that every load that they want to use is also available with a DC input at 48 volts.”

Savino says that “it's because of the telecom history that those 48V options are available,” but today’s demand is strongly coming from hyperscalers like Microsoft, Google, and Amazon.

DCD>Academy instructor Andrew Dewing notes that servers built to the hyperscalers’ demands, using the Open Compute Project standards, are now built without their own power supplies or rectifiers. Instead, these units are aggregated on a power shelf.

This allows all sorts of new options and simplifications and also exposes the historic flaws of power supply units, which Dewing calls switched-mode power supplies or SMPS: “The old SMPSs were significantly responsible for the extent of harmonics in the early days of the industry and very inefficient. OCP, I'm sure, will be provisioning power to the IT components at DC with fewer and more efficient power supplies.”

Equinix field CTO My Truong thinks this could change things: “Historically, when a power supply [i.e. a rectifier] was tightly coupled to the IT equipment, we had no way of materially driving the efficiency of power delivery. Now we can move that boundary. You can still deliver power consistently, reliably, and redundantly on a 40-year-old power design, but now we can decouple the power supplies. This gives us a lot of opportunity to think about.”

Going to higher voltage also helps within the servers and switches, where smaller conductors have the same issues with Ohmic resistance. “It is magnified inside of a system,” says Truong. “Copper traces on an edge card connector are finite. Because you have small pin pitches you are depositing a tremendous number of those pins, a tremendous amount of trace copper, to be able to move the amount of power that you need to move through that system.”

He sees 48V turning up in multiple projects, like OCP’s OAM, a pluggable module designed for accelerators that has a 48V native power rail option inside it: “I think that that could be another indicator that 48V power architectures across the industry are going to become prevalent.”

Truong predicts that even where the power supply units remain in the box, “we probably will see an evolution on the power supply side from 12V to 48V over the next few years. But that's completely hidden from anybody's visibility or view because it sits inside of a power supply.”

Break up the battery room

Why it might make sense to store energy in the racks, instead of in the UPS

Data centers traditionally have a large roomful of batteries so the IT equipment can ride out power outages until the generators can start up. These rooms necessitate lossy power conversion, so why not do away with them?

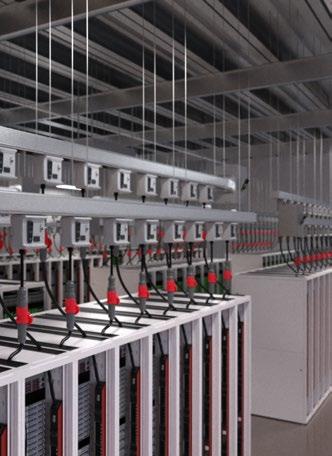

One power equipment provider, with a telco heritage, has a 48V rack system that includes lead-acid batteries within the racks instead.

“With every conversion of power, whether it's from AC to DC, or from DC to a lower voltage DC, you are losing energy,” says Vito Savino of OmniOn Power, a company which started out as a division of AT&T. Sent to Lucent at AT&T’s breakup, it has since gone through a series of owners, becoming ABB Power Conversion, before finally being spun out.

OmniOn sells 48V DC racks, somewhat like the hyperscalers’ OCP racks, linked to an AC building network, but the company eliminates the double AC/DC conversion at the UPS batteries, by shifting the batteries into the racks.

He points out that the traditional architecture, which is dominant across the world, takes AC power and converts it to DC to charge the UPS batteries, and then converts it back to AC.

“You've got all these conversions and a step-down, just so you've got some energy storage in the battery. And all that UPS is doing is giving you a little bit of holdover time, for a generator to start,” he says.

“Our approach is to take 48V AC, three-phase, all the way to in the rack, where you have your servers and storage and networking gear, and then convert it to DC in the rack. What you've got is just a single conversion.”

And each rack gets its own energy storage: “On the left side, you’ve got rectifiers mounted vertically, and batteries also mounted vertically. They are on the same bus, which goes vertically from the top of the rack to the bottom of the rack, feeding a standard 19-inch IT rack in the middle. It is a single cabinet, a few inches wider than the standard 19-inch rack.”

He rejects current fashion, sticking with traditional battery technology: “We do not use lithium because there are [safety] issues with lithium. We use VRLA [lead acid] battery packs that we have designed - and we also have nickel-metal hydride battery packs from a major supplier in Japan.”

He decries traditional UPS systems: “We've looked at the whole scope of power conversion inside the data center - and why do I need this monolithic beast that I have to pay upfront for the entire sizing of my data center? In most cases, it is not going to be generating revenue, and you're certainly not going to be using all of the capacity of that UPS for a long time. I just need some battery holdover for two minutes for the generator to start in the event that we lose grid power.”

The batteries are scaled to support up to 48kW per rack. It was “co-developed with a major service provider in the US,” he says. “We have 1,000s of these in their data centers today.”
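To give a sense of scale for in-rack storage, the sketch below estimates the usable energy needed to carry a rack through a generator start, using the 48kW and two-minute figures quoted above. It is a back-of-envelope calculation that ignores conversion losses, battery ageing, and discharge-rate derating; the function name is invented for the example, and a real design would add margin for all of those.

```python
def holdover_energy_kwh(load_kw, holdover_minutes):
    """Usable energy needed to carry a load for a given time (losses ignored)."""
    return load_kw * holdover_minutes / 60


RACK_LOAD_KW = 48        # maximum rack load quoted in the article
HOLDOVER_MINUTES = 2     # generator start window quoted in the article
BUS_VOLTAGE_V = 48       # in-rack DC bus voltage

energy_kwh = holdover_energy_kwh(RACK_LOAD_KW, HOLDOVER_MINUTES)
bus_current_a = RACK_LOAD_KW * 1000 / BUS_VOLTAGE_V   # I = P / V at full load

print(f"usable energy needed: {energy_kwh:.1f} kWh per rack")
print(f"battery current at full load: {bus_current_a:.0f} A")
```

The energy involved is modest, but the current is not, which is one reason the rectifiers and batteries sit on the same short bus inside the cabinet.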

He won’t name the customer, but with its background, OmniOn appears to work mostly for telecoms-heritage firms.

Are Microsoft and AWS doing it?

Hyperscalers have also spoken about putting batteries in their racks - but DCD is not convinced they are doing it to any great extent.

Peter Judge Executive Editor

In 2015, Microsoft announced it was using a “distributed UPS” - lithium-ion batteries inside racks instead of a large battery room. In 2020, AWS showed a similar approach in a keynote speech, claiming that distributed battery backup removed the reliability issue of powering a data center from a single large battery room.

AWS engineer and evangelist Peter DeSantis described this as a “micro-UPS” in each rack. “Now the batteries can also be removed and replaced in seconds rather than hours. And you can do this without turning off the system.”

It’s not clear how far these ideas have been implemented in production systems. Microsoft contributed its distributed UPS to the Open Compute Project (OCP) as a Local Energy Storage (LES) specification, but neither Microsoft nor OCP has said anything in public about the idea since.

Microsoft’s data centers clearly still have large battery rooms, based on recent announcements, such as its plans to share battery energy with the grid in Ireland.

Amazon returned to its micro-UPS statement in its 2022 sustainability report which said its data centers no longer have a central UPS: “In AWS, the team spent considerable resource innovating on power efficiency, removing the central Uninterruptible Power Supply from our data center design, integrating power supplies into our racks.”

DCD has not been able to confirm with AWS whether this quote is in fact “aspirational” or if the company has actually stopped buying UPSs.

Together in Electric Dreams

Vertiv Trinergy brings reliable backup power to the AI future

Not a day goes by without further evidence that the world’s power infrastructure is under severe strain. While the storm of uneven AI workloads grabs much of the attention, it has simply compounded an inconvenient truth – we need more data, which means more data centers, which require more power. This has led to restrictions from energy providers in some territories, which are only likely to increase as we come to terms with the twin pressures of increased demand and the need to embrace more sustainable ways of producing it.

With full support for the power sources of the future, Vertiv has launched its new Vertiv Trinergy UPS. This system combines modular hardware with software and monitoring capabilities to optimize power quality, backup power, and cooling performances – all backed up by service and expertise to bring the right solution to every facility.

DCD met up with Arturo Di Filippi, offering director of global large power at Vertiv, to examine industry challenges and discuss potential solutions. He tells us:

“We are witnessing the dawn of a new era. Previously, data centers grew significantly, driven mainly by cloud or colo applications, and the load was steady.

Now, with AI, the landscape has changed completely. The load is no longer steady but varies greatly depending on the AI application and scenario.”

Although the issue is often associated with AI, data center operators face similar challenges regardless of their load types. Every new data center requires power, and there often isn’t enough to meet the demand. Di Filippi adds:

“New data centers are a result of AI, but power constraints are related to availability. These constraints apply to both flexibility and AI applications, as their loads can fluctuate significantly in a short time. Some grid operators may need those megawatts at short notice. As an equipment supplier, we need to consider how we can support this because the grid needs to remain steady. One way we can do this is by using uninterruptible power supply (UPS) units that function as power centers. These units can interact with the grid for frequency balancing while supporting the volatile critical load.”

AI is a highly unpredictable commodity with workloads that constantly ebb and flow, making it challenging to quantify global demand accurately. Businesses like Vertiv must use their expertise to make informed forecasts about requirements. Simultaneously, they must prepare for worst-case scenarios, reflecting data transfer's "always on" nature.

As Di Filippi explains, the focus has broadened from solely environmental responsibility two years ago to now also prioritizing reliable power for data centers in the age of AI. This challenge has highlighted a sense of urgency: “A couple of years ago, we were only talking about reducing our carbon footprint, and how our products could set the stage for meeting decarbonization goals. The discussion has evolved to include AI and securing power for its workloads, regardless of the source. That's why we need to be good at merging both goals and support most of the power used with more eco-friendly options wherever possible.

“Vertiv unveiled its first UPS and fuel cell integration at the new Customer Experience Center in Delaware, OH, setting a benchmark for low-carbon backup power solutions. Additionally, Vertiv has formed a strategic partnership with Ballard Power Systems, a leader in PEM fuel cells. Together, they aim to offer scalable, cost-effective fuel cell backup power solutions for data centers. These solutions produce zero Green House Gas (GHG) emissions. Initial tests have shown that the zero GHG emission backup power works successfully in an uninterruptible power system.”

Simultaneously, the rapid expansion in the data center industry has forced suppliers to become more agile in production to meet demands while reducing running costs. As facilities retrofit for increased density, demand for more powerful products with a lower footprint grows ever greater, and sometimes trade-offs have to be found, as Di Filippi explains:

“There could be solutions that improve efficiency but may bring disadvantages in terms of footprint. The end goal will be to have both, but in some cases, the most demanded feature is the chance to install within a small footprint, making it even more valuable than adding efficiency. Quality, reliability, and resilience are the most important aspects, but that is baked into everything that we do as standard, so it’s barely worth mentioning – after all, we could deliver the best-performing product in the world, but it’s no good if it doesn’t work as expected – that makes no sense.”

One of Vertiv’s goals with Vertiv Trinergy is to evolve the traditional UPS into a “power center”, enabling the unit to interface with multiple AC/DC energy sources using a different type of fuel. By evolving to new battery standards, such as lithium, nickel-zinc, and other emerging energy storage technologies, they can enhance efficiency and capacity.

Di Filippi tells us: “We need to make sure that we can integrate our products with all the possible energy sources that a customer wants to deploy. Our UPS must be able to interface with all these options without any additional service or maintenance.”

Nickel–zinc batteries are currently more expensive than traditional lithium batteries, which have become more competitive for mainstream use in data centers and consumer products such as electric cars. For data center operators, nickel-zinc is considered a safe battery material for energy storage and represents a viable alternative to lithium-ion when its deployment is in question due to fire safety concerns.

“We still think that lithium is the best option for backup power applications for a combination of performances and TCO. Vertiv lithium batteries are certified, tested, and encased in such a way as to make sure that problems are limited and contained to the battery rack itself, without affecting any other equipment in the data center. Nevertheless, customers usually prefer to adopt a fire suppression system, which is an additional expense on their side. That's why we have extended our energy storage portfolio with nickel-zinc batteries. Performance is close to lithium, but their main advantage is that they don’t have the same safety risks.”

Vertiv Trinergy's modularity concept also extends to integrating energy storage devices to meet customers' individual needs – whether they're fitting out a new data center or retrofitting existing facilities. Optimizing UPS and battery integration helps them fit better in their deployment spaces. This typically results in a smaller footprint for the entire setup. The saved space can then be used for additional racks, liquid cooling, or scaling up backup power. “This concept of modularity extends to distributed batteries, meaning that in case of a fault happening, we can make sure that the fault is limited to the group of batteries connected to one core, while all the others can keep working. Distributed batteries offer improved performance under various failure modes, amplifying UPS performance and ensuring uninterrupted operations in critical power scenarios.”

Additionally, data centers can act as responsible grid citizens by utilizing energy storage systems to provide grid services and help balance the fluctuating demand for electricity. This not only benefits the environment but also provides an additional revenue stream for data centers:

“For AI, we know that load variations can occur. Sometimes we need to support the load during huge spikes, which can be managed through batteries if the customer chooses. Conversely, we offer what we call ‘grid support’. If there is a greater power absorption on the grid or a decrease in grid frequency (for instance, due to renewables not generating as expected because of weather conditions), the grid can request help.

“In that case, the UPS can intervene, disconnect partially from the grid, and use the batteries to support the load, helping to restore the grid frequency. The grid operator will be relieved since the temporary loss of power brought by the intermittent renewables is compensated and grid frequency re-established. In some countries, the data center owner can also receive some revenue from the grid for that.”
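The decision described here can be pictured as a simple threshold rule. The sketch below is a hypothetical illustration only and does not represent Vertiv's control logic: the function name, the 50Hz nominal frequency, the deadband, and the minimum state of charge held back for backup are all assumptions made for the example.

```python
def ups_mode(grid_hz, battery_soc, nominal_hz=50.0, deadband_hz=0.2, min_soc=0.5):
    """Choose an operating mode from grid frequency and battery state of charge.

    Returns "grid_support" when frequency has sagged below the deadband and
    the batteries hold more than the reserve kept for backup, else "normal".
    """
    under_frequency = grid_hz < nominal_hz - deadband_hz
    has_spare_energy = battery_soc > min_soc
    if under_frequency and has_spare_energy:
        return "grid_support"
    return "normal"


print(ups_mode(grid_hz=49.7, battery_soc=0.9))   # frequency sag, healthy battery
print(ups_mode(grid_hz=49.7, battery_soc=0.4))   # sag, but reserve must be kept
print(ups_mode(grid_hz=50.0, battery_soc=0.9))   # grid is steady
```

Holding back a reserve reflects the priority stated in the article: protecting the critical load comes first, and grid support uses only what is left over.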

Although these trade-offs have come about as a result of the constraints on the power grid, Vertiv remains committed to improving its offerings to be as efficient as possible, both for the benefit of the data center and the planet. Di Filippi explains:

“We can add a lot of themed features like dynamic online and dynamic grid support, which we call operating modes. These can lead to some levels of efficiency that we have not seen before. This is our mindset – our strategy that we also apply to products for launch in the future or extensions of this one.

“We can work in a way that boosts efficiency to 99 percent. And with dynamic grid support, we can make sure that we contribute to sustainability because we encourage the introduction of renewables. After all, the UPS can assist in providing support by the time the grid goes down as an indirect approach to aiding stability. Ultimately, if and when we can work with hydrogen, you will not produce any emissions at all.”

As Di Filippi has explained, Vertiv Trinergy has been designed to integrate with multiple energy sources. As we wrap up, we ask him to future-gaze into what he believes will become the dominant energy sources of an eco-friendlier and AI-enabled data center:

“Batteries are not prime power unless you have some other power source constantly recharging them. The first option is gas turbines. This is something that is already happening. Working with gas is adding emissions, but as a main alternative, that’s the most immediate.

“Hydrogen fuel cells show promise, but current hydrogen production levels aren’t as sufficient for continuous community operation yet. However, the progress we've seen with the UPS and fuel cell integration in the Vertiv Customer Experience Center in Delaware, OH, along with our partnerships with Ballard Power Systems, is encouraging. This indicates that we're moving in the right direction for future advancements.

“You may have also heard about SMR – small nuclear reactors, which are also feasible. You can use wind and solar in, say, a one-gig campus, in countries where there's lots of sun or wind. But for at least two to three years, maybe up to five years we will need to accept that natural gas, while not perfect, has lower emissions coupled with the same performance efficiencies.”

The big takeaway, however, is that whatever the fuels of the future turn out to be, Vertiv Trinergy is on track to becoming a Power Center. This solution will be capable of controlling multiple AC and DC energy sources, regardless of future fuel developments. Meanwhile, its primary mission remains unchanged: to protect the critical load of your data centers and ensure consistent, reliable power quality for AI and other mission-critical applications.

For more information about Vertiv Trinergy, visit the product page or explore its campaign

DC distribution - the second coming

Dismissed in the 2010s, the idea of running direct current through your building could be set to make a comeback

Early data centers were powered by masses of cables under the raised floor. To make the system easier to modify and maintain, and get rid of the skeins of cables that blocked airflow, the cables to the racks are now more usually condensed into a “bus bar” - a single solid conductor that runs overhead, carrying enough AC power to run all the equipment on a row of racks.

Racks are fed via “tap-off boxes” which simply attach to the bus bar, and connect down to the PDUs in the racks.

“AC buses are used pretty regularly for three-phase distribution,” says My Truong, field CTO at Equinix. He references Starline as “one of the most prevalent bus systems out there for three-phase power distribution in data centers.”

“Overhead bus bar systems eliminate the need for remote power panels, resulting in more usable space for server racks,” explained Marc Dawson, director for bus bars at Vertiv, in a blog. “Tap-off boxes can be placed at any point along the bus bar therefore eliminating miles of power whips.”

But what goes through the bus bar? Traditionally it has been alternating current. What if that could be replaced with DC?

DC distribution - a fad of the 2010s?

In the 2010s, a number of data centers were built which operated with DC power throughout. The goal was to eliminate the energy losses that took place when power was converted multiple times between AC for transmission, and DC for charging the UPS batteries and running the IT.

“The DC distribution ideas which first raised their head in the late noughties were based on the replacement of 'conventional' AC distribution arrangements within the building,” explains DCD Academy instructor Andrew Dewing.

Intel experimented with using 400V DC to distribute power around the data center, but the leading proponent of DC distribution was probably Validus DC Systems, a company founded by Rudy Kraus in 2006 and bought by ABB in 2011.

“Direct current is the native power resident in all power electronics,” Kraus told DCD in 2011: “Every CPU, memory chip, and disk drive consume direct current power. Alternating current was chosen as a power path based on criteria set 100 years ago, 50 years before power electronics existed.”

As Dewing explains: “The ideas that ABB tried to push utilized the idea of DC distribution from the UPS DC bus downwards to the load allowing the removal of the inverter stage of the UPS. The idea was that by distributing at DC you could remove a lot of transformers that might be used and save energy.”

Some flagship data centers around the world tried it out. “We built three centers of mention,” says Kraus: “One at Syracuse University with IBM, one in Lenzburg [near Zurich, Switzerland] for HP, and one for JPMorgan Chase in New Jersey.”

Others were reported, including one in Ishikari City, Hokkaido, Japan.

Validus distributed power at 380V, and claimed that DC distribution could produce huge savings. Validus said a 2.5MW data center using DC would use up to 25 percent less energy, saving $3 million a year at 2009 prices.

There would be big savings in the electrical distribution system, with equipment down by 50 percent, installation by 30 percent, and it would only need half the physical space. With those simplifications, it would also be more reliable, the company promised.

Other benefits include no longer having to synchronize power systems. With no cyclic variation in voltage, there are no harmonics, and no problem with devices getting out of synch.

“You could literally put anything you want that’s DC on that bus,” says Kraus. “You could do fuel cells, you could do DC UPS, you could do rectifiers, you could do batteries, you could do windmills. And all this stuff is very, very simple.”

Peter Judge Executive Editor

With all these benefits, ABB said it had bought a "breakthrough" technology in 2011, but the industry was not convinced.

Safety first

Among the naysayers, the influential Amazon engineer James Hamilton cast doubt on the claims made: “The marketing material I’ve gone through in detail compare[s] excellent HVDC designs with very poor AC designs. Predictably the savings are around 30 percent. Unfortunately, the difference between good AC and bad AC designs is also around 30 percent.”

Hamilton reckoned the efficiency improvements might be as much as five percent, but the small market for DC systems would make the equipment more expensive, along with “less equipment availability and longer delivery times, and somewhat more complex jurisdictional issues with permitting and other approvals taking longer in some regions.”

Another issue was safety.

“A big drawback with DC power distribution is that safety is much more expensive to achieve,” says Ed Wylie, of American Superconductor (AMSC).

He sees this as a general electrical principle. AC switches can be less bulky, because their current oscillates and regularly passes through zero, at which point it is easy to physically move the switch contact.

“With DC,” says Wylie, “the current might be maintained, and you burn out the switch fairly quickly. If you look at the equivalent rating of a DC switch, and an AC switch, one is monstrous, and the other is small and compact.”

In 2018, OmniOn’s Vito Savino was employed by ABB and saw Validus’ trial data center. “I went to a small data center in Syracuse University, New York, that was kind of the pilot that Validus had done,” he told DCD. “And it was appropriate for it to be in a lab environment.”

Like Wylie, Savino says DC distribution was held back by the lack of safety devices. “When you're running DC at a high voltage, over the SELV [Safety Extra Low Voltage] level of 72V, you can really hurt somebody if they happen to touch the wrong places.”

At that time, he says: “The protection devices that are supposed to kick in were not available. They weren't made yet for DC, and therefore it was an experiment, and it did not go very well. It was not well received because nobody wanted to be around that voltage. When we talk about DC voltages, 48V is a safe voltage, unless there’s a lot of current behind it.”

As Savino puts it: “When we think about it from a safety perspective, we want that 48V to be contained inside of the rack where only a technician would ever be able to see it and the closest they get to it is putting a breaker into the power distribution unit that is encapsulated in the cabinet.”

“There's a lot of human resistance,” he says, clarifying that he is talking about social reluctance to adopt the technology, rather than electrocution, and saying, “in that case, it's justified.”

Kraus says: “It's not a safety issue, in my opinion. If you look at the Navy, the Air Force, and the phone company. They are all using it.”

As it turned out, the idea also had limited application internationally, according to Dewing: “In the US electrical systems there were many AC voltage levels and, at the time, a more conservative approach to the use of isolation transformers, for example. Whilst the theory might have worked for the US it was never viable in other parts of the world where electrical distribution was much simpler with only two or three voltage levels (e.g. UK mostly has 132kV, 11kV, 400V).”

DC distribution in the building could also have struggled to deliver enough power, says Dewing: “The voltage levels of DC suggested (circa 500-700V DC) would have necessitated very large cabling requirements in a world where space was at a premium.”

The new current wars

Kraus thinks these objections were born out of simple stubbornness: “The AC guys fought it. It was back to the Edison-Westinghouse days. There was a major fight between DC and AC.”

He’s referring to the 1880s, when Edison pushed DC distribution for the grid, but ultimately lost out to Westinghouse’s AC equipment, which Edison said was unsafe.

“As time goes, we're surrounded more and more every day with DC products,” he says, citing lights, electric cars, and more. “The fact of the matter is, I think I was right. I think that DC will continue to grow.”

In the end, ABB did not develop the Validus technology. “They had a switch of CEOs at that point, and decided not to do much with it,” says Kraus.

“Honestly, I don't think ABB learned much with the Validus acquisition,” says Savino. “It kind of just went away in terms of productizing it and marketing it.”

DC distribution may have faded out of data centers after 2010, when it seemed too risky and unusual to run DC voltages through the building. But there have been some big changes since then.

Go Direct

Firstly, racks these days may be fitted with DC bus bars (see p4). Secondly, data centers may be able to draw their power from new sources like solar arrays or fuel cells, both of which deliver power in DC form.

Concerned about the reliability of the grid, or believing they can generate power better themselves, some operators are moving to microgrids with on-site power sources. These sources, including hydrogen fuel cells, and solar arrays, provide power as DC.

When data centers draw power in as DC, and use it to run DC racks, the AC stage looks like a smaller part of the system, and that’s making some people look again at the idea of skipping the AC part of the loop.

“A Bloom fuel cell is a really great example,” says Truong. “Those naturally produce 380V HVDC right out of the cell. But because we're so AC-centric in most localities, we DC-AC convert that to 480V AC three-phase, run it into the building, and then reconvert it back to DC at least once to go through UPS, and then reconvert it to AC to send it to the IT equipment!”

That 380V DC power could be sent straight to the IT with relatively little effort: “There are tricks you may not be aware of. A standard 240V power supply will probably conveniently ingest a 380V HVDC input with minimal friction. It's just not certified that way.”

He spells it out: “If we have power supplies that could naturally take 380V HVDC, there's nothing preventing us from passing that power directly out of a fuel cell, not taking that three, eight, or 10 percent conversion loss along the way, and having a relatively more efficient overall power distribution.”
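Truong's point about stacked conversions can be illustrated with a rough sketch: when stages sit in series, the overall efficiency is the product of the individual stage efficiencies. The per-stage figures below are hypothetical placeholders chosen only for illustration, not measurements from Equinix, Bloom, or any vendor.

```python
from math import prod

# Hypothetical per-stage efficiencies, for illustration only (not measured values).
AC_PATH = {
    "fuel cell DC -> 480V AC inverter": 0.97,
    "UPS rectifier (AC -> DC)": 0.97,
    "UPS inverter (DC -> AC)": 0.97,
    "server power supply (AC -> DC)": 0.95,
}
DC_PATH = {
    "server power supply (380V DC -> low-voltage DC)": 0.96,
}


def chain_efficiency(stages):
    """Overall efficiency of stages in series: the product of each stage's efficiency."""
    return prod(stages.values())


if __name__ == "__main__":
    for name, path in (("AC path", AC_PATH), ("DC path", DC_PATH)):
        eff = chain_efficiency(path)
        print(f"{name}: {eff:.1%} delivered, {1 - eff:.1%} lost across {len(path)} stage(s)")
```

With real equipment the numbers will differ, but the structure of the calculation is the same: every stage removed from the chain removes one multiplier below 1.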

It doesn’t just help energy efficiency, he says: “Bussing DC also has some nice other artifacts. Because it is a DC bus, you don't have to phase sync, you don’t have to do a bunch of AC antics to synchronize up supplies. So you could actually have a solar array feed into the bus and pick up some of the slack of the system with battery systems. There's a lot of opportunity for us to go rearchitect when we go to DC buses.”

Traditional AC power distribution simply repeats what has been done before without trying to understand the underlying principles, like a “cargo cult,” says Truong: “I like to say we cargo-cult it forward until the thing stops working for us. And I think we're at that inflection point in the industry, where we have cargo-culted AC forward as far as we can.”

It’s tough to make changes inside colocation and enterprise data centers, though. “At Equinix, we are very comfortable with AC distribution today,” says Truong. “To advocate for a DC distribution mechanism, we would have to have the customer come to us and say ‘we need 480-volt distribution, and we want to do it this way.’”

Hyperscaler providers own the design end-to-end, but others don’t have that luxury, he says: “How do we collaborate with the end user and recognize the benefit to doing this, versus our classic data center designs?”

Colocation customers won’t be willing to go to DC distribution until it looks like a safe option, backed by popular standards, and delivering those standards is what Truong aims to do in his other job as chair of the Open19 group, now known as SSIA.

“For us to break the paradigm, we have to break a bunch of things simultaneously, and get customers comfortable with breaking it,” he says. “But we need to show some value to them. And that is very hard for us right now.”

Rethinking in California

One man with the freedom to rethink things is long-time data center simplifier Yuval Bachar, the original founder of the Open19 group, now at California-based startup ECL.

ECL has built a 1MW data center powered by on-site hydrogen fuel cells and is proposing to build more for customers wherever there is a supply of hydrogen.

Each facility will be built for one customer, and that customer can choose how radical they want to be. That’s because, Bachar says, the power distribution inside the facility will be the customer’s choice.

“We can actually push DC directly to the data center, and distribution inside the data center is based on customer requirements. For us, DC is better because we save stages of conversion. But if the customer wants us to actually use AC inside then we do the conversion for the customer. Our design is capable of doing either.”

Bachar is well aware of the history of DC, and the safety concerns around it. The ECL design can include transformers for conditioning, along with DC-to-DC converters: “We can actually drive any voltage of DC, we can drive AC, and we can drive a blend of them as well. You can have half DC, half AC if you want.”

For him, the key concern is how much current the design will require in the distribution bus bars that go through the building when rack densities get high. If it is distributed at a low voltage, then the amount of current required to supply multiple racks would be “challenging,” he says.

“The reason for that is, at the amount of power that we use per rack, we're going to have to drive into distribution system 1000s of amps at low voltage. You could be close to 10,000 to 15,000 amps. That requires pretty special copper distribution.”

He’s not wrong there. That much current will require carefully designed thick copper bus bars, and resistance will be a problem. So much so that at least one group of academics has suggested using high-temperature superconductors (see feature, Abolish Resistance p13).

In the real world, with regular copper conductors, high currents mean “you're going to get heat, and you can get sparking,” says Bachar.

His answer is to distribute higher voltages, which means a more reasonable level of current, and then convert the voltage down at the racks.

“We're trying to put the voltage high enough to be safe,” he says. “For 1MW, we push at 800V, 750V, or 700V. That’s about 2000A, which is reasonable. To increase that five times or six times, that is becoming a nightmare.”
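Bachar's argument rests on the relationship between power, voltage, and current. As a rough sketch, assuming a simple DC feed where current equals power divided by voltage (and ignoring conversion losses and safety margins), the function names below are illustrative only:

```python
def bus_current_amps(power_watts, voltage_volts):
    """Current needed to deliver a given power at a given DC voltage (I = P / V)."""
    return power_watts / voltage_volts


def relative_resistive_loss(voltage_volts, reference_volts):
    """Resistive loss relative to a reference voltage, for the same power and the
    same conductor resistance. Loss is I^2 * R, so it scales with (V_ref / V)^2."""
    return (reference_volts / voltage_volts) ** 2


if __name__ == "__main__":
    power_w = 1_000_000  # the 1MW facility discussed in the text
    for volts in (48, 380, 700, 750, 800):
        amps = bus_current_amps(power_w, volts)
        loss = relative_resistive_loss(volts, reference_volts=48)
        print(f"{volts:>4}V: {amps:>9,.0f}A, resistive loss {loss:.2%} of the 48V case")
```

On these assumptions, 1MW at 800V works out to 1,250A, the same order as the round figure Bachar quotes, while the same power at 48V needs more than 20,000A.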

Pushing for standards

Pioneers like Truong and Bachar are happy to push boundaries, and sometimes things are easier than one might expect. For instance, Savino remembers that his company, OmniOn, was once part of General Electric (GE) and found that AC equipment worked on DC.

“GE is very big in AC distribution, and we learned that we could use their AC bus bar scheme,” he says. “All we had to do was get a rating for it from UL [Underwriters’ Laboratories], at a DC level, and we could use that same bus bar for carrying DC.”

However, the broader market is not going to step outside of conventional practices until DC schemes are broadly backed by UL certificates and standards.

Truong thinks consistency might persuade more people to have a look at it. “How do we bring a consistent enough standard that decouples enough of the pieces that were historically not decoupled, and allows the various organizations to really trace advantages on the sustainability factor overall?”

Persuading people to adopt something new will depend on them seeing business value, Truong says - and he sees a promising way to deliver that: “Right now, our opportunity to show business value is really on sustainability. Frankly, I don't believe that most organizations are going to be able to meet their sustainability goals without radically changing the way they think about things.”

He also knows he has to persuade OEMs to accept a standardized approach: “OEMs are semi-reluctant to adopt this because they don't see a customer base for it. We have a bit of a loop to break through there, as well as getting OEMs to recognize that interoperable standards in server designs are actually to their best benefit. I think that they still see value in customized products. They'll probably take a little while to rethink their current product value.”

Back in 2011, when Amazon data center veteran James Hamilton dismissed DC distribution, he felt it might break through in the end: “The industry as a whole continues to learn, and I think there is a good chance that high voltage DC distribution will end up becoming a more common choice in modern data centers.”

He may turn out to be right.

Kraus is in semi-retirement, but he’s ready to get back into the industry if DC distribution makes a comeback: “We proved out some pretty interesting things. And I always believed the question was, how long would it take to come around again?”

Abolish all resistance!

Peter Judge Executive Editor

Why worry about efficiency in electrical distribution, when superconductors have no resistance at all?

It’s fair to say that all avenues are being explored to get energy into data centers and distribute it around them, combatting resistance and compressing energy into ever denser racks.

A team of researchers has come up with an extreme solution: Wiring up facilities with superconductors.

Superconductivity is a phenomenon, first observed in 1911, in which some materials have zero electrical resistance. As resistance is a limiting factor in how much current a wire can carry, this sounds like a Holy Grail for electrical engineers. A superconductive wire won’t heat up, won’t waste energy in resistance, and can carry much more current in a narrow space than a conventional conductor.

The problem is that superconductivity was first discovered at temperatures close to absolute zero (0K, or -273°C), making it completely impractical for widespread use.

In the following years, scientists searched for useful materials which would be superconductive at higher temperatures. Despite years of research, there are no “room temperature” superconductors, but since 1986, “high-temperature superconductors” have been discovered.

In 1986, Johannes Bednorz and Karl Müller of IBM showed a copper-oxide compound that was superconductive at a temperature of 35K. That was enough to win them the next Nobel Prize for physics, and kick off a race. The current record for superconductivity is a temperature of around 140K.

That’s still a chilly -130°C, but it’s a temperature higher than the -200°C boiling point of liquid nitrogen. Practical superconductors have to be supercold, or they “quench” and abruptly lose their magic. Still, liquid nitrogen can be bought for around $2 per liter, so engineers have stepped in and produced superconducting wires and cables.

Superconducting electromagnets are well established - they support a much higher current than regular devices and are used in science experiments, such as the Large Hadron Collider at the European particle physics laboratory CERN, and in fusion experiments. They are also used in more widespread magnetic resonance imaging (MRI) scanners.

But electromagnets have tight coils which can be fully immersed. Actual superconducting cables have to be kept cold by a continuous flow of liquid nitrogen inside an insulated sheath along their length.

There’s a tradeoff between the cost of refrigeration and the benefits of low resistance, and a small number of commercial firms are seeking out niches where the sums come out in favor of superconductors.

Do the sums

“With superconductors, we have high current capability,” explains Ed Wylie of American Superconductor (AMSC). “The liquid nitrogen system is a cost. It's part of the losses, which are usually the same or slightly less than the normal electrical losses of traditional installations. Really, the whole thing comes down to economics.”

The cost of the liquid nitrogen itself is not a major issue, says Wylie, pointing out that it is made as a byproduct from industrial processes aiming to make liquid oxygen, so it is incredibly cheap. It’s also very safe to work with.

“It’s something like 78 percent of the air, it's non-combustible, and it's free,” says Wylie. “There’s an excess of nitrogen used in the food processing industry, so there's an infrastructure in place. With liquid nitrogen, and appropriate cryostat equipment, the cable can do the job of superconductivity.”

He continues: “The cost of the cooling is part of a larger calculation to show the economics of superconductors. It’s project by project. People get excited because they're electrical engineers, but it’s not a universal panacea.”

It works, but only in niche applications: “It’s when all these things come together. Unless the stars align, it's always a near miss. It always comes down to economics or commercial reality, sadly, because I would love it to be everywhere.”

Long distance cables

One niche could be in some urban power distribution applications. As well as higher currents and lower losses, superconductors support a higher current density, so they can be made a lot smaller.

“The amount of power that you're trying to do in a space could be the key issue - if the client has limited space, a large amount of power to get from A to B, and a problem with heat,” says Wylie.

“If you have to take a large amount of power through a city and you have a duct, but no room for conventional cables which are too bulky, or a requirement not to heat the soil around it, that's the kind of reasons that make it work,” he says.

Other factors include the cost of real estate, difficulty in getting planning permission, or adding overhead cables. “Maybe you can’t build an overhead line in a downtown area, or you open the ducts up and there's no room for conventional cables. There might be physical room, but what about thermal room?”

The Korea Electric Power Corp. (KEPCO) did just this in a semi-commercial trial in 2019.

It needed more capacity in part of its 154kV network, which would normally be provided with a new 154kV cable, at a cost of around $3 million. Instead, it ran a 23kV superconducting cable along 1km of conduit between the secondary bus bars at two substations in Shingal and Heungdeok.

The superconducting cable project was projected to cost $12 million, but KEPCO spotted savings that could be made, including eliminating a 60MVA transformer, and using an existing conduit instead of digging a new cable tunnel.

AMSC built the earliest superconducting transmission project for the Long Island Power Authority (LIPA) grid in New York. The Holbrook Project feeds a Long Island electrical substation via superconducting cable in a 600m tunnel and was paid for by the United States Department of Energy in 2008.

The European Union is funding Best Paths, a project to develop a DC superconducting cable operating at 320kV and 10kA. The project is planning to use a two-cable system to create a connection with a capacity of 6.4GW, or roughly twice the output of the UK’s new Hinkley C nuclear power station.
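The 6.4GW figure follows from the cable ratings. A minimal check, assuming each of the two cables carries the full 10kA at 320kV (the function name is illustrative):

```python
def link_capacity_gw(voltage_kv, current_ka, cables):
    """Capacity of a DC link: kV x kA gives MW per cable; divide by 1,000 for GW."""
    return voltage_kv * current_ka * cables / 1000


if __name__ == "__main__":
    print(f"{link_capacity_gw(320, 10, 2)}GW")
```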

“A conventional cable system capable of carrying this level of power would have a seven-meter-wide installation footprint,” the leader of the EU project, fiber company Nexans, said in a statement. “The superconductor cable packs the same power into a corridor just 0.8 meter wide.”

The project, with partners including CERN, will use a magnesium diboride (MgB2) superconductor.

Also in Europe, cable company NKT is leading SuperLink, a bid to build a 12km underground link in Munich, which would be the world’s longest superconducting cable. It is expected to have a power rating of 500MW, operate at 110kV, and connect two substations using existing ducts, kept cold by a cooling system with redundant backup coolers.

“We see superconducting power cables as a part of the future to ensure optimized access to clean energy in larger cities such as Munich,” says NKT’s CTO Anders Jensen, adding that such cables “make it possible to expand the power grid in critical areas without having to dig up half the city.”

Japan also tested the idea, building 500m and 1km cables in 2016 to take power from a solar plant in Ishikari City, Hokkaido. The experiment worked, proving that the cables did not deteriorate, and could be operated reliably.

That experiment has been followed up with designs for 50km and 100km cables, which could potentially find their way into large power grids.

The original Ishikari cables actually lead us to a data center. They terminated at the Sakura Internet Ishikari Data Center, an innovative DC distribution facility that operated in the 2010s.

Could superconductors find another niche inside such a building?

Into the data center

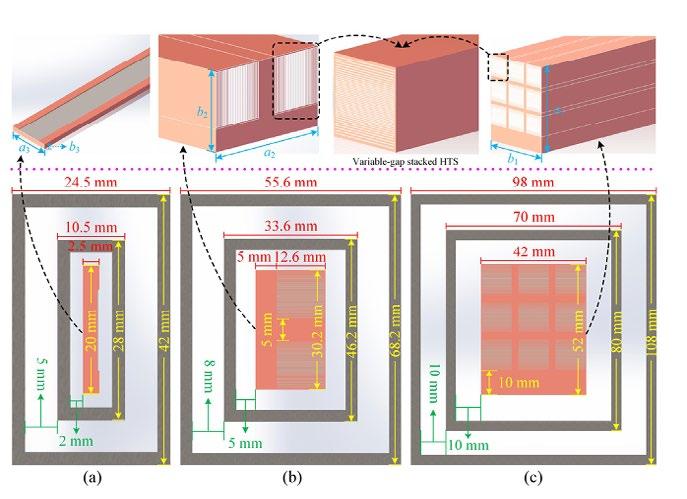

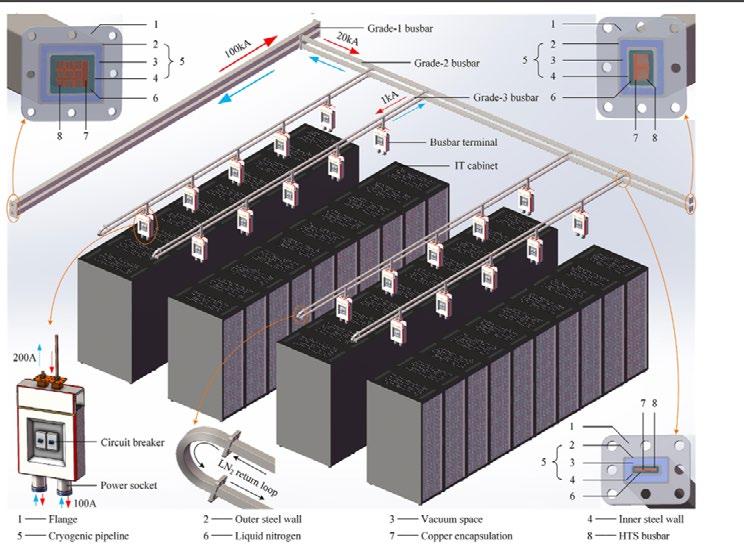

Data centers certainly have extreme demands for electrical distribution, with high power demands in small spaces. So in 2022, a team based in China and Cambridge, UK, designed a data center based on superconductors.

“In the future, it will be challenging to increase the power density for a 48V distribution system using copper cables/bus bars, particularly for a MW class data center, as the electric current in the low-voltage DC distribution system is fairly large,” say the authors, led by Xiaoyuan Chen of Sichuan Normal University and Byang Shen of Cambridge, in a paper published in the journal Energy, “A 10MW class data center with ultra-dense high-efficiency energy distribution: Design and economic evaluation of superconducting DC bus bar networks.”

They continue: “As the copper cables/bus bars have a certain amount of resistance, huge currents lead to huge ohmic losses, which will indirectly produce a considerable amount of GHG emissions. In order to transport huge currents, the sizes of copper cables/bus bars need to be increased, which will induce other problems such as insufficient space for cable installation in data centers, the high costs of large amounts of cable materials, and the bulky system.”

They aren’t the first to look at high-temperature superconductors (HTS) in data centers. Google has a previously unreported patent in China (202011180110.2) for a theoretical superconducting data center.

But Chen and Shen’s group set out to design a 10MW data center built around HTS cables and connectors, and share their findings. We’ve reached out to the team, and haven’t heard back yet, but there is plenty to look at in the paper.

The group says: “Superconducting cables are well-suited for low-voltage high-current building sectors such as data centers,” citing the advantages: zero energy loss, ultra-high current-carrying capacity, and compact size.

Their paper includes layout designs for HTS bus bars, and gives an economic analysis of the effects of switching to exotic superconducting bus bars.

Conventional bus bars have limited ability to carry large currents due to “huge ohmic loss,” said the researchers, while HTS DC bus bars could have “ultra-high current-carrying capacity and compact size.”

The superconducting bus bars would be expensive and are at this stage “conceptual,” the group reported, but the paper produced actual designs based on HTS DC bus bars and modeled the economics, trading off refrigeration costs against the benefits.

It concludes the design would pay for itself within about 17 years.

The bus bars are designed around 12mm superconducting tape from Sunam of South Korea, which can carry 960A of current. Three bus bar designs are provided, using multiple tapes to carry 10kA, 20kA, and 100kA of current. Each of these designs is surrounded by a cryogenic pipeline holding liquid nitrogen.
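As a back-of-envelope illustration of why multiple tapes are needed, the sketch below divides each bus bar rating by the 960A tape capacity and rounds up. This is a lower bound under a simplifying assumption that tapes share current evenly with no derating; the paper's actual tape counts may differ, and the `margin` parameter is a hypothetical knob, not something taken from the paper.

```python
from math import ceil

TAPE_CURRENT_A = 960  # per-tape capacity quoted for the 12mm tape


def min_tapes(bus_current_a, tape_current_a=TAPE_CURRENT_A, margin=1.0):
    """Smallest whole number of tapes whose combined rating covers the bus current.

    margin > 1.0 adds headroom (hypothetical; set to 1.0 for the bare minimum).
    """
    return ceil(bus_current_a * margin / tape_current_a)


if __name__ == "__main__":
    for rating_a in (10_000, 20_000, 100_000):
        print(f"{rating_a // 1000}kA bus bar: at least {min_tapes(rating_a)} tapes")
```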

The design takes a basic 10kW cabinet, arranged in rows of 10, in a total space of 1,700 sq m. The rows are grouped into blocks separated by hot aisles, cold aisles, “walk” aisles, and equipment transportation aisles.

Power comes from the distribution room on Grade 1 (100kA) bus bars and is passed to Grade 2 (20kA) bus bars which take it across the rows, and then to Grade 3 (10kA) bus bars extending above each row.

Attached to each row’s bus bar are five power adapters, each of which takes off power and delivers it through two power sockets to two racks.

The liquid nitrogen flows out along the branching network of bus bars, then back along a parallel return loop.
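The layout figures above can be cross-checked with some simple arithmetic. This sketch assumes the full 10MW is consumed by the 10kW cabinets, which is a simplification; the function names are illustrative.

```python
FACILITY_POWER_W = 10_000_000   # 10MW class data center
CABINET_POWER_W = 10_000        # basic 10kW cabinet
CABINETS_PER_ROW = 10
ADAPTERS_PER_ROW = 5
RACKS_PER_ADAPTER = 2           # two sockets, each feeding one rack


def cabinet_count():
    """Number of cabinets if all facility power goes to cabinets (assumption)."""
    return FACILITY_POWER_W // CABINET_POWER_W


def row_count():
    """Number of rows needed at 10 cabinets per row."""
    return cabinet_count() // CABINETS_PER_ROW


def racks_fed_per_row():
    """Racks served by one row's bus bar through its power adapters."""
    return ADAPTERS_PER_ROW * RACKS_PER_ADAPTER


def total_bus_current_a(voltage_v):
    """Total DC current needed to deliver the facility power at a given voltage."""
    return FACILITY_POWER_W / voltage_v


if __name__ == "__main__":
    print(f"{cabinet_count()} cabinets in {row_count()} rows")
    print(f"{racks_fed_per_row()} racks fed per row")
    print(f"Total current at 400V: {total_bus_current_a(400):,.0f}A")
```

Five adapters with two sockets each cover the 10 cabinets in a row, and 10MW at 400V comes to 25,000A, which lines up with the 400V / 25kA scheme the paper evaluates.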


How much does it cost?

Unsurprisingly, the researchers found that HTS bus bars would be much more expensive than conventional bus bars, which can be made out of ordinary copper, without the HTS systems’ cryogenic complexity.

The initial capital investment for superconducting bus bars in a 10MW data center depends on the voltage used. At 400V, the cost is around $5,000, rising to $22,700 at 50V, the report says. That’s a lot more than the cost of conventional copper bus bars, which come in at between $350 and $3,000.

However, the price difference could be made lower if a higher current system was used, and operational cost savings could tip the balance in favor of superconductors.

“Compared with the conventional copper bus bars, the HTS bus bars have the disadvantage of high capital investment cost,” say the researchers. “For example, for the 400V / 25kA scheme, the capital investment cost of HTS bus bars is about 15 times that of the conventional copper bus bars. Nevertheless, when the current level increases from 25kA to 100kA, the capital investment cost of HTS bus bars decreases to eight times that of the copper bus bars.”

They report: “Although HTS bus bars have high capital investment costs in the initial development stage, they have significant advantages on the annual operating cost and cross-sectional area.”

The annual operating cost of a 400V / 25kA HTS bus bar would be about 18.5 percent that of conventional bus bars, while the cross-sectional area would be 38.2 percent of a conventional system. Raise the current to 100kA, and HTS bus bars could be run for 10.9 percent of the conventional cost, and be about one-fifth of the cross-sectional area.

Reality check

This is an academic paper, and it’s pretty easy to predict the reaction of data center operators to the suggestion of adding cryogenic pipelines to their existing M&E infrastructure.

The liquid nitrogen would represent another engineering discipline required within the data center, meaning new skills would be needed. It would add to the complexity of the system.

It will also introduce another potential point of failure in a facility which must meet reliability demands. If the flow of liquid nitrogen stops, then power stops flowing, and the facility stops operating.

So it won’t happen unless there’s a really good additional benefit. Chen and Shen’s group suggest one possibility: Upgrades.

If power densities increase within a specific data center, more power has to be delivered to the racks. They point out: “The maximum allowable installation areas for bus bars cannot be enlarged, because the channel sizes of existing bus bars are fixed.” Replacing standard copper bus bars with an HTS system might allow enough extra current to be delivered to the equipment room, in the same space.

With no resistance, the bus bars won’t consume power by heating up, which the paper reckons would save power. They’d also pose less of a fire risk, as they are artificially cooled. Even if there is an interruption to the cooling, the conductors won’t suddenly heat up: the superconductor will quench, and all current will stop.

Wylie agrees that superconductors could find uses inside a data center: “If you have a major bus structure inside a building, that could utilize a superconductor. From a high-level description of the topology, we could determine whether a superconductor solution would work.”

And on a personal level, he’d be very pleased to see it happen. He joined AMSC eight years ago from the data center industry, and at the time, AMSC had done some work looking at the possibility of superconductors within facilities.

“It was one of the areas I asked about,” he told us. “They had looked at it briefly, and it didn’t come off.”

Maybe that’s about to change.

A flexible power giant

The streamlined design is powerful, giving you the highest energy density available. No more wrestling with space constraints – Vertiv™ Trinergy™ will fit in seamlessly as your enterprise expands. Discover the power of modular scalability.