EVOLUTION OF DATA PLATFORMS

with TARUSH AGGARWAL

Time Series Forecasting with Deep Learning and more.. .

USING AI TO MAP FORESTS

HOW AI IS DRIVING THE ERADICATION OF MALARIA

A GUIDE TO CAUSAL INFERENCE

ISSUE 3

There are start-ups that are trying to do direct air capture - they literally just hoover CO2 right out of the air and bury it underground or compress it.

HEIDI HURST Mathematician and Computer Scientist

It’s concerning because people hear about problematic findings potentially more than about correct findings.

ÁGNES HORVÁT

Asst Prof in Communication & Computer Science at Northwestern University

I think almost every problem that Silicon Valley is trying to solve has already been solved in the cell.

PERRY MARSHALL Scientist and Founder of the

Evolution 2.0 Science Prize

Data Science Conversation

datascienceconversations.com

PRESENTED BY Dr Philipp Diesinger and Damien Deighan

“

Tune in now to be part of the Join the industry’s top trailblazers as they explore groundbreaking new ideas and push the boundaries of what’s possible in data science and machine learning.

your knowledge Enhance your career

Expand

CONTRIBUTORS

Tarush Aggarwal

Heidi Hurst

Francesco Gadaleta

Arnon Houri-Yafin

Zain Baquar

Tamanna Haque

Philipp M. Diesinger

Graham Harrison

Patrick McQuillan

Damien Deighan

Dr Anna Litticks

George Bunn

Katherine Gregory

EDITOR

Anthony Bunn

anthony.bunn

@datasciencetalent.co.uk

+44 (0)7507 261 877

DESIGN

Imtiaz Deighan

PRINTED BY Rowtype

Stoke-on-Trent, UK +44 (0)1782 538600

sales@rowtype.co.uk

NEXT ISSUE

6TH SEPTEMBER 2023

The Data Scientist is published quarterly by Data Science Talent Ltd, Whitebridge Estate, Whitebridge Lane, Stone, Staffordshire, ST15 8LQ, UK. Access a digital copy of the magazine at datatasciencetalent.co.uk/media.

DISCLAIMER

The views and content expressed in The Data Scientist reflect the opinions of the author(s) and do not necessarily reflect the views of the magazine or its staff. All published material is done so in good faith.

All rights reserved, product, logo, brands, and any other trademarks featured within The Data Scientist magazine are the property of their respective trademark holders. No part of this publication may be reproduced, stored in a retrieval system, or transmitted in any form by means of mechanical, electronic, photocopying, recording, or otherwise without prior written permission. Data Science Talent Ltd cannot guarantee and accepts no liability for any loss or damage of any kind caused by this magazine for the accuracy of claims made by the advertisers.

COVER STORY We discuss data platforms with Tarush Aggarwa l, one of the world’s leading experts in helping organisations to leverage data for exponential growth. 05 START UP We speak to Jaguar Land Rover’s Tamanna Haque on what motivated her interest in the world of Data Science and her career to date. 09 INDUSTRY CASE STUDY Arnon Houri-Yafin , founder of Zzapp Malaria discusses how his company is using AI to drive the eradication of malaria. 12 CHATTING WITH CHAT GPT Francesco Gadaleta casts his expert eye over what everyone around the (Data Science) world is talking about. 18 TIME SERIES FORECASTING WITH DEEP LEARNING IN PYTORCH (LSTM-RNN) An in-depth focus on forecasting a univariate time series using deep learning with PyTorch by Zain Baquar 22 CAUSAL INFERENCE Graham Harrison explores what it is and why Data Leaders and Data Scientists should pay attention. 30 DATA SCIENCE CITY Our third city focus features London . We look at the UK’s capital city,

of the world’s Data Science and AI hotspots. 35 USING AI TO MAP FORESTS

satellite

39 THE VIRTUAL MILLION DOLLAR QUESTION:

INDUSTRIALISE

SCIENCE MVP? HERE’S

DECISION From

M. Diesinger helps you to determine if your Data Science MVP is ready for industrialisation. 45 THE PRINCIPLES OF AN EFFECTIVE DATA-DRIVEN ORGANISATION By Patrick McQuillan , Founder of Jericho Consulting and a Professor at Northeastern University and Boston University. 48 LARGE LANGUAGE MODELS Risk mitigation and the future of large language models in the enterprise market by Damien Deighan 52 ANNA LITTICKS Our spoof Data Scientist. Or is she? 54

one

Following up from her brilliant feature in ou r last issue, Heidi Hurst once again looks at satellite imaging. In this issue, she focuses on using

imaging to help tackle the complex problem of deforestation.

SHOULD YOU

YOUR DATA

HOW TO MAKE THE RIGHT

prototype to production, Philipp

THE DATA SCIENTIST | 03

INSIDE ISSUE #3

HELLO, AND WELCOME TO ISSUE 3 OF THE DATA SCIENTIST

Since our inaugural issue last November, we have received outstanding feedback from a wealth of Data Science professionals around the world - and we are thrilled to produce an even bigger and more varied third issue.

Our mission has remained uncomplicated: to produce a Data Science magazine that serious Data Scientists want to read, be featured in, and contribute to. We believe that the quality of contributors in each issue speaks for itself, and we hope that you find issue 3 informative, insightful, and engaging.

So what will you find in issue 3?

We feature two interviews - 5x’s Tarush Aggarwal and Pachama’s Heidi Hurst - and we also host a fascinating business case study too, as Arnon HouriYafin gives us a detailed insight into how his company is tackling malaria. We’re also very proud that issue 3 boasts a wealth of other outstanding content from those within the sector, focussing on their current work or Data Science & AI topics that they have a real interest in.

As ever, we welcome and seek your feedback, thoughts, and ideas, and we encourage you to contact the Editor to find out how you, your team, or your organisation can feature in future issues. Many Data Scientists and organisations already have. The Data Scientist is a broad and welcoming church and welcomes all who want to contribute to it or read it. We know that a magazine is only as good as its readers and contributors, and in that respect we are blessed.

And talking of contributors…

The Data Scientist goes out in print and digital formats to thousands of Data Scientists all around the world, providing curated content from a plethora of contributors. It’s a great vehicle to get you or your organisation’s work or words in front of the eyes that it deserves. Future issues are already filling up with content, and unlike other media outlets, we don’t charge a fee for appearing in our magazine.

We are really excited about the future of The Data Scientist , a magazine that reflects the ever-growing importance of our sector; aimed at those looking for great Data Science content to read. Thanks for joining us on board for the journey.

But in the here and now, we really hope that you enjoy this issue. Please share it with your colleagues or those you think may enjoy it, and let us know how we can improve it even more.

EDITORIAL 04 | THE DATA SCIENTIST

The Data Scientist Editorial Team

DATA PLATFORMS

TARUSH AGGARWAL

He graduated with a degree in computer engineering from Carnegie Mellon in 2011, before becoming the first Data Engineer on the analytics team at Salesforce. More recently, Tarush led the data function for WeWork before he left to found The 5x Company in 2020, which supports entrepreneurs in scaling their businesses.

THE DATA SCIENTIST | 05

IS ONE OF THE WORLD’S LEADING EXPERTS IN HELPING ORGANISATIONS TO LEVERAGE DATA FOR EXPONENTIAL GROWTH.

DATA PLATFORMS

How has the data infrastructure landscape developed over the last 10 years, Tarush?

When you look at the history of data infrastructure, it began with the online revolution. All of a sudden, we went from storing data on our own personal devices to storing data on the Cloud. With the advent of Facebook and Google, Cloud companies started collecting massive amounts of customer information, so the need to analyse this information is really where the big data revolution came from.

information back to users inside our products, and it allows anyone to answer any questions by slicing and dicing data. You would have a simple BI or reporting layer, which would pull this highly structured data which we’ve just created. In general, that’s the core framework of the different layers of infrastructure. As time progresses, we are introducing more and more categories such as reverse CTL, and then there’s observability, augmented analytics, machine learning and AutoML, which are all additional categories.

But the four core layers are the ones I just described.

Do you think that in the next few years companies will be able to manage their data more efficiently?

Along with starting to store information in the Cloud, the second thing which became prevalent is that we started having multiple different services to store this information. It was no longer one company which had all of this information. Today, your average start-up has got 10 different sources of data. This could be your backend databases, marketing data from Facebook Ads, Google Ads, data from your CRM, financial data from Xero or QuickBooks, or even Google sheets mixed with application data from Greenhouse and Lever. The number of different data sources has increased, so this has resulted in a need to centralise data again. We need to decentralise this and make sense of the data.

That’s a quick history of how we got to where we are today, and wh y it’s becoming more and more important for companies to have the right platform or the right infrastructure in place to make sense of all of this data.

What can companies do to tackle this problem of disparate and convoluted different data sources?

I think there are four core steps when we think about data platforms today... Step one is how do we pull this data from these different data sources into a single place to analyse? Once you have this, you want to store all of this inside a data warehouse, which is structured to store large amounts of data. Modern day warehouses are able to separate storage from computers, which makes them really cost-efficient in being able to store lots and lots of data without racking up large bills. That’s step two.

For step three, you have all of this raw data; it’s messy and it’s not been structured in a way to answer business questions. You want data modelling to create a clean business layer, which is optimised to answer business questions. We call that the data modelling layer.

Step four is where we want to surface this

I think what’s happened has been really interesting. If you look at the data space, it’s one of the most fragmented spaces out there. Every different layer of those four layers sees multiple billion dollar companies competing in them. Then, with the few additional categories which are named after, there are 10 different categories. What this really means for end consumers is actually pretty grim, because the space is becoming mainstream and every company needs to get value from data.

For example, imagine walking into Honda, and instead of selling you a Honda Civic, they sell you an engine and you have to build your own car. That’s really what the buying journey looks like today for the end consumers.

Although we’ve made progress and have flexibility in these tools, it hasn’t been easy for companies who don’t have large armies of data teams to actually go and get value from this. The short answer to ‘have things got better’ , is no. What I’m very bullish about in 2023 is a new category in the space called the managed data platform. This ensures that you can focus on the application of data instead of having to worry about setting up the infrastructure. In full transparency, I run a company which is focused on the managed data platform, but I’m trying to be as unbiased as possible.

Can you describe how a managed data platform works and what’s involved?

The goal is not just how we give businesses an end-toend platform across these initial four categories, but also all of the other categories as the company grows in scale. If you look at software engineering it’s a lot more mature.

How does software engineering solve this problem? Well, software engineering has Amazon Web Services and AWS, and if you really think about it, it’s just an umbrella for 50 different services. Amazon owns a lot of these 50 services, but it’s also got a marketplace where

06 | THE DATA SCIENTIST

PHILIPP KOEHN DATA PLATFORMS

It’s becoming more and more important for companies to have the right platform or the right infrastructure in place to make sense of all of this data.

you have external services, and the Amazon platform gives you a central place to do certain things like provisioning or setting up templates.

It makes it very easy by giving you a macro platform, which grows all the way from when you build your first product all the way till you are an enterprise, a large customer, and the entire journey in between. We partner with all of the different data vendors out there, so all of the different warehouses, and ingestion, and modelling, and all of these different categories are all inside our ecosystem. We make it very easy to go build your first platform. Initially, you could start from a template, or based on your industry, use case or size you could pick from a template. There will be a B2B template and a template for companies who have fewer SQL capabilities - so a low-code template, or a Web3 company which needs to pull on chain data will have a template.

We help to build your platform and then manage your users, give you all the tools to upgrade your platform as you become a bigger company, and have more advanced use cases and everything in between just like the Amazon web services. In short, we’re trying to be the Amazon web services of the data platform world.

Is this simply a case of you go to your managed platform and you select what you want?

Yes, I think that’s where the magic comes in. We integrate with all of these different vendors at an API

level. We provide, manage, build, user manage, and configure managed teams on behalf of these vendors, and make it very easy by removing all of that complexity and giving you a single platform where you can manage multiple vendors at the same time.

On average, it takes companies four months to build a data platform. Today, they have to sign multiple different enterprise contracts, and this involves work by finance, legal product, and billing. Building a platform on 5X today takes about four minutes. I’m not just making that number up, we’ve actually measured it. What we’re talking about is an end-to-end customer experience which is more streamlined and efficient than what exists inside the market today.

How do you think the whole data infrastructure and platform space plays out over the next five years, given where we are?

I think at a fundamental level, abstraction always goes upstream. We’ve seen time and time again that jobs get replaced by more automation. People always think that this is the end of jobs, but inevitably it creates a new category which employs more people, and things always go more upstream. For example, we don’t design chips anymore, we don’t write and see language anymore, and we don’t optimise how our database is run.

All of this happens automatically. Database administrators were replaced by data platform engineers,

THE DATA SCIENTIST | 07 DATA PLATFORMS

who are getting replaced by data engineers, who at some point will get replaced by Data Scientists, and so on. When I think about infrastructure, I think we’re at a point where it’s no longer relevant to hire data platform engineers to build your data platform. New categories in this space promise to give you all of these different things, which allows you to focus more of your time. Time on your data modelling, on building your BI on data science, on insights and recommendations; meaning less time worrying about infrastructure and platform which really wasn’t adding business value in the first place. It was just one of the building blocks.

As I see the space evolving, we’re moving away from a lot of the data infrastructure to more of the applications and data, and I think that’s a really exciting part of the journey.

What do you think that means for someone who’s a data engineer now in 5 or 10 years? What do you think they’ll be doing?

I think if you look at what data engineers were doing 5 or 10 years ago, 80% of time was spent on building pipelines and moving data from one place to another. Ingestion and Fivetran, Stitch, and Airy, and all of these different companies in this category came and replaced that. Whereas today, only a very small amount of a data engineer’s time should be spent thinking about pipelines because this should be fully automated. Instead, data engineering is evolving more into the data modelling side, where data engineers can spend most of their time.

This clean business layerwhich the data engineers really build - is ultimately what powers the data products. It gives the Data Scientists the core models they need in order to go and build the insights and recom mendations. It also powers data analysts to go deeper. Data engineering jobs have just moved higher up the abstraction level and they’re more important than they’ve ever been before.

to drive outsized returns in terms of the insights and recommendations they can provide for companies.

On the rise of data platforms and managed data platform providers - does that have any implications for how you structure a data team going forward?

I think a macro trend which we’re seeing in 2023 and moving forward is doing more with less. I think the downturn, or recession, has had a larger than normal impact on data teams. Globally, data teams have been quite affected, and I think in some ways it’s a correction. Some companies over-hire data teams with the big promise of everyone wanting to become data driven, and this is just part of a normal cost correction.

I think for Data Engineers and Data Scientists, being able to be more relevant over a few core areas, instead of having a data analyst, data engineer, a scientist, a data platform engineer, and then someone on MLOps inside every single team was a little bit too much. I think these skills will still exist individually, as I think specialisation is very necessary, but I think your average Data Engineer will be able to do more things on data platforms, more modelling and some level of data science. And vice versa, where your average Data Scientist will know how to build a stack from scratch.

I think in general, we are going to get a little bit more rounded and do a little bit more with less.

As I see the space evolving, we’re moving away from a lot of the data infrastructure to more of the applications and data, and I think that’s a really exciting part of the journey.

Will this differ depending on the size of a company?

What about Data Scientists? How do you see their role evolving?

Their role is getting more real. A lot of Data Scientists aren’t actually doing any data science, they’re focusing on all of the layers before. I think that if I look at ML, and data science, and MLOps, it’s finally this point where it’s less buzz-wordy and it’s becoming more real. The opportunity to go and join a company and actually do data science work in the next few years starts to become more tangible, so these people will be able

I think the general trend which I’m seeing in large organisations that didn’t start off as tech companies, is that they’re the ones struggling right now. By putting more and more people into this problem and creating more and more silos, things just aren’t getting better for them. I think there’s going to be a lot of consolidation in terms of end-to-end platforms going in there, and this could be things like Palantir or Databricks or other platforms. It’s making their jobs much easier because I think at the end of the day, these very large organisations are the ones I see suffering the most in this current landscape. I think there’s a lot of opportunity to rebalance and change.

08 | THE DATA SCIENTIST PHILIPP KOEHN DATA PLATFORMS

START-UP

START-UP

IN EACH ISSUE OF THE DATA SCIENTIST , WE SPEAK TO THE PEOPLE THAT MATTER IN OUR INDUSTRY AND FIND OUT JUST HOW THEY GOT STARTED IN DATA SCIENCE OR IN A PARTICULAR PART OF THE SECTOR.

LEAD DATA SCIENTIST AT JAGUAR LAND ROVER,

TAMANNA HAQUE , TALKS ABOUT HOW SHE LEAPT INTO WORKING WITH THE CARS SHE’S ALWAYS LOVED, AND HOW IT SATISFIES BOTH SIDES OF HER BRAIN.

GETTING INTO DATA SCIENCE

How did you start working in Data Science?

I completed a Maths degree at The University of Manchester, where I specialised in statistics. I didn’t do any post-graduate education and this was fine for me, I’ve always worked in fast-paced, commercial environments driven by value and delivery.

After graduating, I joined a digital fashion retailer (with a Financial Services proposition) as an Analyst initially. I learned a lot about real-life data and analytics itself, whilst developing a rounded understanding about the business and how to deal with stakeholders crossfunctionally. A great way for me to set solid foundations for what followed, and I personally feel this gives me

a lens that others who dive straight into Data Science don’t have.

I was soon attracted to Data Science because it tapped into what I learned at university and challenges you to keep learning; there’ll always be new advances in the field and things to learn. I gave up personal time when transitioning from data analytics towards Data Science.

Were there any roadblocks on your path into Data Science?

There were definitely some challenges. I had a few people tell me I couldn’t do Data Science or specifically the role at Jaguar Land Rover. I’m not sure if this was because it was happening quickly, or because I didn’t

THE DATA SCIENTIST | 09

fit the typical Data Scientist stereotype in several ways. This can instill self-doubt, which is why having and nurturing your wider network matters; I wouldn’t be here if it wasn’t for the people around me.

INITIAL CONNECTION WITH JAGUAR

How, when, and why did you start to follow the company?

I’ve been a fan of Jaguar since I was nine. I grew up with Top Gear and Formula 1, but it was Jaguar which really piqued my interest. At the time it was the Jaguar XJ, XK, S-TYPE and X-TYPE which turned my head, all attractive and luxurious cars with sporting pedigree; qualities which made Jaguar an aspirational brand to me.

Since then, I’ve ended up writing about the brand, attending previews of upcoming launches, and attending invitation-only experience days. I was a fan-turnedcustomer at 22 (very much in love with my Jaguar XF!) and I ultimately became an employee at 25. So, within my role at Jaguar Land Rover, I feel connected and personally committed when developing our products and customer experiences.

Anyone who knows me associates me with Jaguar and that’s a lovely feeling.

WORKING AT JAGUAR LAND ROVER WITH THE PRODUCTS I LOVE

Give us an overview of your current role?

I’ve been working at Jaguar Land Rover since 2019, as a Lead Data Scientist working within Product Engineering, specifically in the connected vehicle space.

My role involves using the connected car and AI to make our products and customer experiences better, whilst leading within our wide Data Science team too. The Data Science team in Manchester, UK, started with myself and one of my teammates - it’s since grown to nearly forty (cross-sites and countries) and developed into a high-performing, advanced Data Science team.

What makes us stand out is the nature of our workwe mostly use vehicle data (of participating customers), which is different to a lot of other commercial businesses or teams who’ll focus more on transactional or web data. The data we use lends itself to some pretty interesting projects, and a general futuristic feel here.

I’m particularly interested and active in enabling a more electric and modern luxury future from the use of vehicle data.

How did you land your current role?

I started here as a Senior Data Scientist in 2019, in a brand-new role and team. This move presented a lot of change, moving from retail into automotive, from an

established company to a tech-hub with a start-up feel, going through a general culture change and having a big step up in my career.

My interest in the Jaguar brand and corporate awareness made the interview easier because I didn’t have to ‘revise’ on these areas. In the hiring seat (which I’m now in) it’s also easy to know when someone genuinely has an interest in your business. Being able to demonstrate passion helps - you’re already aligning with some of the company values.

If you have an appetite to develop, joining a brandnew team can be great. Not only are there lots of opportunities, but there’s room to create many more and propel your development, which I did. I’m grateful to be working at a place where hard work and commitment is recognised and rewarded; last year I was promoted to Lead Data Scientist.

Looking back, I can see that this was a great career move for me, coming into a newly conceived Data Science team for a company which influences me. It satisfies both sides of my brain, ‘left brain logic’ and ‘right brain desire’. I read this phrase in an old Jaguar advert!

How does your passion for Jaguar Land Rover’s brands and cars improve your ability to perform in your role?

I have ever-growing commercial awareness and passion about our products, customers and business. These aren’t new qualities, but they now align with my professional interests, as well as personal. I’m always thinking about new ways I can add value to the business through the use of Data Science, keeping up with our competitors and trends in AI to support me. I can be sat in my car, visiting a dealership or at home with my customer app, appreciating the modern and luxurious experience in front of me whilst challenging myself to think of ways to improve it.

Day-to-day, this wider understanding complements my technical expertise, such as knowing when something should be questioned in data or outputs, which performance metrics to optimise for when building machine learning models or where a trade-off in model performance is appropriate.

It’s a pleasure to be able to do what I do best, for who I know best. I’ve found a keen appreciation for our other brands (Range Rover, Defender, Discovery) and I’ve been fortunate enough to get hands on with them too.

10 | THE DATA SCIENTIST

START-UP

Anyone who knows me associates me with Jaguar and that’s a lovely feeling.

DIVERSITY AND LEADERSHIP IN AI

You’re focussed on making an impact both inside and outside the business in terms of improving diversity and inclusion within AI. Can you describe some of your work here?

I’ve led outside the team by mentoring female apprentices with a tactical approach, working with them on original project ideas with many value streams.

More recently I founded a ‘Women in AI’ group at Jaguar Land Rover which provides female members across the business with technical and soft-skill development opportunities in a challenging, maledominated field. Additionally, I was chosen for a new (voluntary) role within Jaguar Land Rover’s Women in Engineering committee as its Analytics Lead, helping committee chairs and executive sponsors to become better informed towards improved gender diversity.

I’ve done a lot of work externally which has promoted my skillset and those of my audiences. I’ve delivered numerous talks to industry professionals, students, and women’s leadership and diversity groups, with the goal of promoting inclusion within AI and automotive. The other angle to my talks involves following your passions. Externally I have also been a guest lecturer at a leading UK university where I’m also supporting multiple students (who are also on placement with Jaguar Land Rover) with their final-year, industry-based projects.

Have you faced any challenges as a woman in Data Science, what many see as a typically underrepresented space?

I know some women in tech think they have to do more for the same worth. It’s definitely pushed me to give it everything here. My domain knowledge and Data Science expertise combined help to build my credibility and reputation.

You’ve recently transitioned into a leadership role within Data Science. What are some things you’ve learned, what are some of the challenges faced, and what are you enjoying more now?

I’m continually learning and adapting how I can be a good leader. If I had some qualities before, I realised I had more to learn, such as being assertive, a role model, a Data Science thought leader, comfortable in speaking, confident to be my authentic self. I’m providing support to a breadth of colleagues (in and outside the team) whilst delivering myself. I’m actively involved in setting and refining our team’s strategy and I’m enjoying leading projects which either deliver high financial impact or help set the path in terms of new tech and/or machine learning capability. I’m grateful that my manager (Dr Anthony Woolcock) values my opinion and gives me responsibility to lead (and on exciting things to come)!

Sometimes I’m time-poor so I need to manage my diary well to ensure effectiveness and work-life balance. I’m overseeing people, other projects, doing public speaking and trying to remain hands on. I sometimes block out chunks of time in my diary - I need some meeting-free time to produce quality technical work. I try to finish on time and enjoy a very busy social life with my family and friends. A flexible attitude to how we work helps to keep me happy and energised whilst I’m delivering from various angles.

CLOSING THOUGHTS

Have you achieved anything so far which you previously thought would be a dream, and is there anything you’ve still yet to achieve? And do you have any advice for others?

I’m most proud of my recent promotion from Senior to Lead Data Scientist. Also, it was exciting for my family and me when I gained an offer to join Jaguar Land Rover (I especially couldn’t wait to tell my grandparents)!

Despite my interest in Jaguar, I was nervous to join a much larger, global company and adjust to the changes I mentioned earlier, which took me out of my comfort zone considerably. But this was clearly a great choice for me, and has given me courage to continually find new limits in myself to serve my internal and external customers better.

Since joining Jaguar Land Rover, I’ve delivered many Data Science projects of varying natures, which have boosted my abilities whilst creating impact on our vehicles and customers of today and tomorrow. With Jaguar becoming an all-electric brand in 2025, it’s a huge milestone for the company and it’s thrilling to know that my current work contributes to this.

Out of hours, I’ve also experienced several amazing opportunities. To name some, I was sent to support Jaguar TCS Racing at the London E-Prix last Summer, I’ve tested top-tier cars such as the Land Rover Defender and I’m still trying to get used to the fact that I now drive a Jaguar F-TYPE!

START-UP THE DATA SCIENTIST | 11

HOW AI IS DRIVING THE ERADICATION OF MALARIA

WE SPOKE TO XPRIZEWINNING ARNON

HOURI-YAFIN. HE IS AN ISRAELI ENTREPRENEUR WHO FOUNDED ZZAPP MALARIA IN 2016, A START-UP WITH A MISSION TO ERADICATE MALARIA.

So, if we start with your personal journey Arnon, could you tell us how you ended up working in both AI and in the field of malaria eradication?

I have two answers. One is a personal story, where my friend took me to see malaria first-hand and then another one is poverty.

Back when I had to decide what to study in university, I chose economics. My goal was to become an economist in the context of developing countries, and specifically African countries. I believed that reducing poverty was the key to significantly improving the wellbeing of whole communities.

12 | THE DATA SCIENTIST INDUSTRY CASE STUDY

Just before I completed my MA, a friend called and told me that he was starting a company (Sight Diagnostics) dealing with malaria. I knew at the time that malaria was a huge problem in terms of public health, and that it’s one of the primary reasons for poverty. The disease prevents people from going to work, either because they are sick or they attend to sick family members, which reduces productivity. Malaria is also one of the primary reasons why children in Africa miss school, which obviously harms their ability to progress.

Sight Diagnostics developed a malaria diagnostics device, and one of my jobs was to test them. We ran these tests in public hospitals in Mangalore and Mumbai, India.

In India, you have some places with a lot of malaria and others without. I was in an area with a lot of malaria. Now, I’m a nervous parent - when my children have a fever, I get really stressed out. But when you see the moms with young children who are very feverish, they are scared, because it’s not just a fever that will probably be gone by tomorrow, it’s malaria. And when I saw that, the difference between malaria diagnostics and malaria elimination struck me. In many countries, Israel for example, malaria was a problem - a big problem. Here, malaria was all but eliminated in the 1920s and 1930s, after the stagnant water bodies where the Anopheles mosquito breed were thoroughly targeted. So, if we have such a big problem, but one that could be fully eliminated, why don’t we do it? This is what caused me to say, okay, diagnostics are very important, but we need something more radical. We need to recreate in modern Africa the successful malaria elimination operations of Cyprus, Egypt and many other countries.

Zzapp Malaria is about moving from malaria control to malaria elimination. This takes artificial intelligence and data.

Is that why you specifically founded Zzapp Malaria?

Exactly. Zzapp Malaria is about moving from malaria control to malaria elimination. This takes artificial intelligence and data. When people tried to treat water bodies in Africa, they did it with partial success. This is because tropical Africa has two rainy seasons and there are wide areas that must be searched for water bodies that once detected need to be treated regularly. A very high percentage of water body coverage is required, which is a difficult result to reach.

With our system, fieldworkers go into the field with a smartphone that guides them exactly to the areas they need to search, enables them to upload information

about the water bodies they find, and, once water bodies are detected, shows them which water body has already been treated, which needs to be treated again, which houses should be sprayed etc. So we really have a lot of information about the exact location of water bodies and the overall situation of the operation in terms of the treatment of houses and mosquito breeding sites.

Did the company have a concrete idea already?

Did they have a team? How did you start this up? Where were you when you started the company?

We actually started with an app for house spraying, but quickly moved into larviciding (the treatment of water bodies). Today, our system combines a lot of different features that, combined, are meant to provide the most cost-effective solution per location. The ideas and the team were built as we progressed. We have been receiving a lot of help from many people. It is nice to see that people understand the importance of malaria, and really try to do their best to fight it.

What were the backgrounds of the other members?

Zzapp has a strong and dedicated team with expertise in science, technology implementation, marketing and community engagement. We have leading software developers, PhD holders in veterinary medicine and communication, and team members with extensive experience in leading field operations, against mosquitoborne diseases and other impactful causes, in Africa and elsewhere.

You had a diverse set of skills when you started out. Did you have a very concrete idea already?

We started out with a clear goal that is still with us: eradicating malaria. We knew, almost from the start, that the key is locating and treating the water bodies. We have since improved our ability to do so, and have integrated in our operations other methods, but yes, we are still big believers in larviciding.

That sounds interesting. So, you stuck with your original ideas - more or less - to implement and to turn your idea into reality rather than to do a lot of research in 2016? Can you explain a little bit about how the company grew and how the project grew over time?

Malaria is unique in that the means for eliminating it are known. It’s not like having to develop a new medicine. So the trick is to think of the best waysthat are the most cost-effective ways - to do so under challenging conditions and with limited resources. So

INDUSTRY CASE STUDY

THE DATA SCIENTIST | 13

that is where our R&D goes. We began with a small budget from our parent company Sight Diagnostics, and a grant from the Israel Innovation Authority (IIA). Working lean, and gradually hiring more staff, we collaborated with leading scientists, for example Andy Hardy with whom we operated in Zanzibar. In that project, which was funded by IVCC through the Bill and Melinda Gates Foundation, we used drones to map water bodies.

Winning the grand prize in the IBM Watson XPRIZE AI for good provided us with $3 million, which is obviously a significant improvement in terms of our resources. We were able to hire additional staff and push our technology forward. It also helped us create connections with African governments. We have been going to malaria conferences in which we present our technology to malaria researchers and implementers. We have also been contacting government ministries of health and proposing our solution. So far we have been working in several African countries, including Ethiopia, Kenya, Ghana, Tanzania, Mozambique and São Tomé and Príncipe.

Can you explain the core idea that you had to combat the spread of malaria? If I understand correctly, it has to do with identifying stale pools of water? How do you prevent the spread of malaria?

In the past, the best solution to fight malaria was treating the stagnant water bodies. But today in Africa, most countries prefer bed nets. You distribute them and hope that people sleep under them. It’s a simple and effective tool, which indeed protects many people. However, it depends on peoples’ behaviour, which as we know from mask mandates during COVID, is unfortunately not always reliable. We want to make use of the proven method of larviciding and apply it in Africa.

To do so successfully, you must overcome two problems. One is coverage: you need to find all of the water bodies. The second problem is budget. Because you don’t have the money to scan every single square kilometer in Africa, you must prioritise. Based on satellite imagery and data on topography and rainfall, our system decides when and where to scan for water bodies. This is how we reduce operation costs. The mobile app helps coordinate the operation and the monitoring. We make sure that all the areas that were assigned for scanning were searched, and that all of the water bodies and the houses that were selected were treated.

So, you’re basically saying that using satellite data, and then also artificial intelligence, you can pinpoint people much more precisely to those water bodies that need to be treated, and by increasing the spacial distance coverage, basically you can eliminate malaria better? What was the idea initially? How do you scale this across a continent like Africa?

Yes, treating the water bodies is the idea, and scale is the challenge. Besides technology, it’s about finding good local partners, be it the government or a large NGO. We provide the technology and training, but it is the fieldworkers and the local management who have the ownership over these operations. Ultimately, they are the ones who serve their own communities.

Therefore you are bringing the technology, and the partners are bringing the people to make it scalable?

Yes. We bring technology and we also bring knowledge about which agent to put in the water. We are experienced because we did it in other countries, then we go to new countries to share our knowledge.

You already mentioned satellite data. There’s obviously a lot happening in that space at the moment. Can you talk a little bit about which

14 | THE DATA SCIENTIST

INDUSTRY CASE STUDY

kind of data you’re using and how you’re using the satellite data?

One of the challenges that we faced is resolution. If you have a large water body, you just see it from the satellite imagery. It then becomes a standard machine vision problem and you also have the near infrared, a channel where water is very distinct. But then you have water bodies that are smaller than your resolution. So it’s not about finding the water bodies themselves, but rather the areas that are suitable for water bodies. This is interesting because if you go to look at something smaller than your resolution, the context becomes very important. For example, you won’t find malaria mosquitoes in a river because they only breed in standing water. However, near that river, you’ll probably find many water bodies.

We started with a small neural network that detected only what we wanted it to detect. We then enlarged the networks so as to get better usage of the context of the area. It’s similar with topography (and this isn’t even artificial intelligence, it’s more traditional models of topography): you have water, going from a high area to a low catchment, so you can understand that it’s not only the absolute height of a specific point on the map, it’s also how it relates to other points in the area. We use both traditional models of topography and a neural network to understand where the water bodies are likely to occur. Again, if you give it more areas, it works better.

satellite imagery produced by the European Union, but also high resolution satellite imagery that one can buy. We help countries understand the pros and cons of each and make a decision. So far, we mostly focused on the low resolution part because it will be more convenient for many of our potential customers. We hope to gain more experience with high resolution satellite imagery.

Basically then, this model takes all of this input data and then predicts where water bodies could be, and that information goes in through an app to the workers in the field. Is that correct?

Yes. We have one more layer, which is locating the houses. If you have a water body in the middle of the jungle, you don’t care because malaria is transmitted only from person to person. The mosquito is merely the vector. So, we have one component that maps the water bodies and then one component that maps the houses.

The last component is about the proximity of houses to areas that potentially have many water bodies in it. Then, based on that, and based on their allocated budget, we define where to scan for water bodies. We then have one more component that takes it to the mobile app.

Malaria attacks poor communities and then prevents them from getting out of their poverty because it’s difficult to go forward. It’s a vicious cycle of poverty and disease that we want to help solve.

You mentioned you’re using conventional neural networks, basically to identify areas where there could be water bodies… what are the inputs for those models? You mentioned satellite data, but you’re not only using visible light channels, but also infrared - anything else?

We’re using satellite data, then topography, and also land use. We also use data on rain and humidity. For that, as part of our prize, IBM Watson also helped us in machine learning projects. They focused on the temporal part of it, because you need to know not only where the bodies of water are, but also when.

Obviously, at the start of the rainy season, you will find many more water bodies than after the dry season. But knowing the exact amount of rain, humidity and temperature, helps to better predict the abundance and location of water bodies.

That’s quite sophisticated modelling that you’re doing there already. For the data sources, are they open source or do we have to buy this kind of data?

This is up to the country we work with. There is free

And so these workers, what do they then do? Do they take the app, then take the information, and then they go to these locations and then they treat the stale water bodies with chemicals? Yes, they search for the water bodies. If we found water bodies from a drone or from satellite imagery, we can just direct them, but they can also report water bodies from the field. This is important because that’s how we feed the system. It’s machine learning and we need new data. That’s one thing. Then, they treat the water bodies. Treatment of the water bodies in the past involved chemicals, but this isn’t good because animals, and sometimes even people, drink from the water bodies.

The World Health Organisation has very strict regulations about which materials can be put into the water, but mostly we use a biological agent that is called Bti (bacillus thuringiensis israelensis). The good thing about Bti is that except for mosquitoes it doesn’t harm other animals. Not people, not cows, not frogs - not even other insects. Only mosquitoes and black flies (that transmit river blindness). So, it’s very environmentally friendly.

INDUSTRY CASE STUDY THE DATA SCIENTIST | 15

Can you talk about some specific user cases or field tests that you did with communities?

One interesting thing that we’ve done is with an NGO in Ghana. They fight malaria in their town and in the villages surrounding it, and they were very successful before us. They sprayed houses and did community activities with the village inhabitants, because education is also important so that people use the bed nets and go to the doctor if they have a fever.

They wanted to achieve zero cases, and so they approached us and together we implemented an operation against the water bodies. As I said, the very interesting part is that we managed to reduce more than 60% of the mosquito population in the town and the villages, which is an outstanding result.

It was a controlled trial: some areas we treated and in some not, so we were able to compare the impact. The cost was only 20 cents per person protected , which is extremely low. Other interventions cost about $5 per person protected, so it’s a very big difference. This operation spanned 100 days, but because it was so inexpensive, we could have used the budget they usually spend to run the operation year-round and scale it up to more villages in the area.

Another interesting operation was in Ethiopia, where we worked in a few villages and mapped the area. We learnt a lot from this operation because we saw how different fields are correlated with the existence of water bodies. For example, teff fields (teff is a kind of grain) did not have suitable water bodies for Anopheles mosquitoes, whereas in the grazing area where the cows were we saw hundreds.

How does the collaboration work? Do you have to be in the field when you work with these people in Ghana or Ethiopia, or is this a remote collaboration?

I’m a big fan of being in the field. It’s about user experience. It’s about science. It’s about the quality of training. It’s about understanding the specific problems. In each of our operations, even during COVID, we visited in person except for one operation in Kenya where, because of COVID, we weren’t able to make it. You learn a lot from such partners.

How do you measure how successful you are?

You have two measures. One is about mosquitoes. We catch mosquitoes. We don’t catch them to kill them, we catch them to count them - again inside the intervention area and then outside the intervention area to confirm the reduction rate of mosquitoes. And then, the most important measure is to count malaria cases to see if you reduce malaria cases.

And what are the results?

In Ghana, this is the first time we did an end-to-end trial. The results of mosquito reduction is amazing, it’s more than 60%.

INDUSTRY CASE STUDY 16 | THE DATA SCIENTIST

What timeframe is that 60% reduction over?

It’s 100 days, less than four months. Now we start our most ambitious operation, which is about malaria elimination - really elimination to zero. For that, we collaborate with the government of São Tomé and Príncipe. São Tomé and Príncipe are two islands, forming an African island nation. Because they are islands, it’s a closed system, so you don’t get incoming mosquitos and they don’t go out.

We want, in two years, to not only target the water bodies but integrate other interventions based on artificial intelligence planning, to understand where to do what, and then to eliminate the disease. If that happens, it opens up the opportunity to approach larger countries and offer them malaria elimination. Malaria elimination will save many people and will boost the economy. People understand that if you eliminate malaria from countries, the impact on the GDP and on their economy will be more than 10% in a few years. It affects agriculture, tourism, education - everything.

Obviously, there is an imminent health care cost or health care impact of malaria. But you mentioned at the beginning that it drives poverty, it has economic implications…

The sad thing about malaria, and actually about many things in our world, is that it impacts the poor more than it impacts the rich. You have more malaria in poor villages than in the wealthy neighbourhoods in the cities. For example, in India, you have more malaria in some tribes in the mountains than all of the rest of the population. Malaria attacks poor communities and then prevents them from getting out of their poverty because it’s difficult to go forward. It’s a vicious cycle of poverty and disease that we want to help solve.

What’s happening in terms of more widespread adoption across Africa, are you hoping that one day this will be across the whole of Africa, or is that a challenging thing to scale to that degree?

For us, it is not challenging. It’s software, basically. It’s not very difficult to scale. So scaling up is up to the Ministry of Health. If countries in Africa adopt the system, it will happen. If one country uses it, they will help not only themselves, but also neighbouring countries, because, as you know - mosquitoes don’t believe in borders. I really hope to see it across the world, and not only in Africa - in South America and in India where there is malaria as well. We must do our best to eliminate this disease. I think it’s very strange that in the 21st century we still have such disasters happening. We experienced 18 months of the Coronavirus pandemic and we know how difficult it is, so why do we allow a disease such as malaria to persist for decades or centuries? We must stop it.

Most of the deaths are in children. Is that right? Yes. Children under five is where most of the deaths are. And then there are also pregnant women who are greatly affected by the disease.

You see varying estimates. What would you put the estimates of the number of people per year affected by malaria?

I don’t have better estimates than the World Health Organisation, which estimated about 400,000 people last year. This figure grew by 15% or so because of COVID. With COVID, it became difficult to provide bed nets and health clinics were less available to treat malaria patients, which is what caused a surge in malaria cases.

You’ve worked a lot in this field of malaria prevention over the last couple of years. Are there any other organisations that you would want to mention that work on different approaches, who are maybe tackling the same problem?

If we improve the available vaccine it will be amazing, and some teams are trying to do so. Some tried to do this based on MRA technology, and some are trying to just take the existing vaccine, and with a few modifications, enhance its capability. Other groups try to do so by engineering mosquitoes - they want to put genes in the mosquito that means the mosquito itself will have a drug against malaria. Then the mosquito will not be infectious. This is a very innovative approach.

If it happens, it will be the first time in history where humanity has taken a species from nature and replaced it with other species with different genes. This is interesting, but we are still trying to understand how to do it in terms of technology and the safety of this method.

If people want to support you, what can they do?

Thanks. I actually prefer if people support the malaria NGOs. For example, Malaria No More and Only Nets are two good NGOs that really save many, many lives. If you buy, for example, 10 bed nets, it costs only $40 and you are potentially saving lives.

Where can people find out more about your work and about Zzapp Malaria?

Our website is Zzappmalaria.com

INDUSTRY CASE STUDY

THE DATA SCIENTIST | 17

We must do our best to eliminate this disease. I think it’s very strange that in the 21st century we still have such disasters happening.

When it comes to artificial intelligence chatbots, there is little hype around the models that are published in the public domain compared to those available from the big players in artificial intelligence. I am very much against hype and the idea that these complex models that resemble a human being are even close to what we define as artificial general intelligence. We are still not on that track - whether that’s unfortunately or fortunately, I don’t know. But that’s a fact. It would take a lot to explain what ChatGPT is, but more importantly, what should we expect from this type of model?

I’m also unsure about the enthusiasm around ChatGPT. I was never a big fan of the GPT family of models. But, I’ve slightly reconsidered my position - I won’t say I’m super excited about these models as I’ve been playing quite extensively with them in the last few weeks, as there are still things missing. The model is also behaving the way a large language model of this type is

expected to behave, regardless of what people say or the general public’s enthusiasm. It’s a very powerful model, that’s undeniable. It’s also very fun to use. I personally use it to create poems about pretty much anything that happens in my life, just for fun, or describing situations in which my friends and colleagues are involved in the form of a sonnet. That’s how I personally use ChatGPT. Of course, ChatGPT can be used for more important things, and tasks that can help you in your daily job if you use it with parsimony. That’s my advice. It’s not a silver bullet against anything or everything. You should always double check, or fact check all the answers that ChatGPT gives you. Because there is a point at which ChatGPT starts guessing things and also inventing things that probably never existed, but makes these facts look real. If you are consuming the response or the answer of a ChatGPT session without double checking, you may get into trouble if you’re using that answer for something important, for example. I mentioned the

CHATTING WITH CHAT GPT

18 | THE DATA SCIENTIST CHAT GPT

FRANCESCO GADALETA looks at the capabilities and limitations of the advanced language AI model that everyone is talking about.

word ‘guess’, and not by coincidence, because a guessing game is probably the closest exercise. This was in fact invented by Claude Elwood Shannon - and there is an amazing book about that. He created this game, which he named the ‘guessing game’.

This was essentially a way to teach computers to understand language. That was back in the days when artificial intelligence hadn’t been invented. Claude Shannon was the beginner and the pioneer of a lot of technological advances out there. These are things we take for granted, especially with communication and artificial intelligence - in particular NLP or language understanding. NLP was not even a term back at the time. Shannon invented this game in 1951, which consisted of guessing the next letter. If you know what ChatGPT does, and what all the family of GPT models do, these are doing exactly the same but on a word basis.

The models are guessing the next word given a certain context. There are several papers and a lot of tutorials out there that go into the technicalities of how ChatGPT works. But I would like to give you an explanation of what it is and what you should be expecting from a model of this type. The way ChatGPT has been trained, and how all the families of GPT models have been trained, is essentially guessing the next word given a certain context. Apparently, this is a game that gets interesting if you want to play at a human level. This is because you need to understand the context. In the case of Shannon, you need to guess the next letter. But in the case of ChatGPT, in order to generate and guess correctly the next character or word, you need to understand the context very well. This is why usually training models or building models of this type is strictly related to the fact that you are understanding language. You could not generate that letter or that word if you did not understand the context, and the context can be pretty much anything. It can be philosophy, religion, technical content, news, or politics; you name it.

The fact that a model is expected to guess the next word almost all of the time (approximately 99%), that’s not really the case. But let’s assume that happens. That would mean the model understands the context and therefore it can guess correctly the next letter or the next word, which is not the case. I mean, it is partially the case, but it’s also the case that these models are equipped with billions and billions of parameters.

Something has definitely changed with respect to when we were dealing with, for example, 60 billion parameter models (which is an amazing number of parameters) to 175 or more billion parameters. These are the models that we are dealing with today. There is a

flipping point where something is happening; something different happens from the perspective of the model.

It could also be that the model is so big that it relatively starts memorising things because it has much more bandwidth; more space in terms of a number of parameters. Meaning it has much more space to store and memorise whatever is provided from the training set. That could be the case. That was my very first conclusion about this large language model; the day they come with, let’s say, a trillion parameter model, we will have this amazing lookup table that is much more powerful than a simple lookup table, because it can look up things that are similar and not an exact match.

A lookup table allows you to search and to find some targets exactly as they are in your database or in your storage.

By using hashing or other techniques, one can do that very fast, for example in constant time or in O(log n) time, so ChatGPT looked more like a big lookup table. In fact, the family of GPT models looks like a big lookup table on steroids due to the fact that these models can consider text similarity and paragraph similarity. The concept of similarity is much more powerful than the concept of exact match (which exists since the ‘60s or ‘70s, or even before). It is powerful, but it is a mechanical thing. It’s not something that can generate the same level of enthusiasm in humans.

ChatGPT is the combination of three or more different modules that were not present in the models before. This is where I started changing my opinion about these models. When you combine three modules that I’ll go on to discuss, you get something that is much more powerful than the classic models; as the language models, we were used to until several months ago.

First of all, the GPT family of models is based on the concept of instructions. Before getting there, we have to say that when these models get trained, they get trained with a massive amount of text, and this text can come from pretty much anywhere. It can come from forums, chats, or websites. The entire Wikipedia site and Reddit have been used to train this model, so millions or billions of articles are publicly available. When it’s time to train these models, the amount of text they are exposed to is incredible.

However, despite the amount of available training data, there is something missing: a connection with the external world. Outside of that text, there is nothing. If

CHAT GPT

THE DATA SCIENTIST | 19

It’s not a silver bullet against anything or everything. You should always double check, or fact check all the answers that ChatGPT gives you.

you have some concept in textual format, like the sky is blue , or the colour blue , this might be associated with another concept that is present in the text; like a chair, a table, and so on.

There is no concept of the outside world or the scenario in which that concept is related to, or what that concept is related or refers to. That’s obvious, because the only input that these models receive is text, while human beings receive many more types of input. Humans have perceptions that come from pretty much all their senses. Moreover, we can read text, we can view, we can hear, we can touch, we can feel. That is probably the biggest limitation of machine learning models, and that’s normal because one is a model; mathematical or an algorithm. The other one is an organism or a human which is even more complex than a simple organism.

languages; for example, programming languages.

Programming languages are non-natural languages. In fact, they are formal languages. That is languages that are parsed and understood by a machine or by another algorithm to generate, for example, machine code. Java, C, C++, Rust, or Python are all programming languages and ChatGPT has been trained on programming languages too.

The amount of information that a code snippet carries can be incredible due to the presence of comments, headers or descriptions that developers augment their code with. There are even entire discussions written about code snippets. There are entire papers, with code in which the paper is describing what has been done exactly by the authors. There is enough material for a 175 billion parameter model to learn the most subtle relationships between comments and code. In summary, yet another way to bring the context out of the text.

With this said, there have been strategies used to train these models, and I refer to the entire family of the GPT models, which are trained by using instructions. Instructions are given by humans during training time in order to describe a task. For example, today you can ask ChatGPT to translate a certain text into another language. It’s because, during training, someone has instructed the model with a keyword that looks like

TRANSLATE <input text> <output text> letting the model learn that when there is a translation request of an input text into another text, it should be generating something similar to output text. The same happens for a summary or a description of a context.

If one asks ChatGPT to provide the summary of a text, that’s possible because, during training, there was someone who instructed the model with a summary; which is the instruction, the text to summarise, and the summarised text as the answer of that instruction.

The same goes for a lot more instructions that one can play with on ChatGPT. Myself included, I like playing with prompts; such as “from this story, make a poem out of it”. That’s my favourite these days.

The concept of instructions is relatively novel and powerful. It is powerful because it allows one to create that bridge between the text and the outside world. As a matter of fact, it’s an artificial way to bridge what is in the text to what is not, mitigating one of the biggest limitations of machine learning models and, more specifically language models.

The second feature that characterises ChatGPT and makes it different from the models that we have been playing with until now is dealing with non-natural

The third novel concept that is now first class citizen in ChatGPT is Reinforcement Learning with Human Feedback, RLHF.

It allows a human to always have control over the model. Controlling a 175 billion parameter model from diverging any conversation is anything but an easy task.

We know that deep learning models suffer whenever they are used in generative mode, that is when they generate data (text, images, sound), instead of performing predictions. The worst can happen when such models start generating “concepts” that were not present in the training set. We have experienced hyperacist models in the past, and chatbots impersonating Hitler. To avoid situations like those, the developers and the designers of ChatGPT have introduced a human factor that rewards the algorithm accordingly.

In my opinion, it’s the combination of these three things that makes a difference with respect to what ChatGPT or the family of GPT models, and all these large language models could provide in terms of experience and usability. However, there are limitations, and I must be critical here, especially when I read claims online that these models are approaching artificial general intelligence or that they will soon take over. I have read extensively about these models, and while they have impressive capabilities, they can also be dangerous.

The very first versions of GPT models were banned by developers, even from OpenAI, because they were considered too dangerous. In the sense that people could have abused the way, GPT models generated text and used it to create fake news or spread false information. These models are also highly biased, contain stereotypes, and do not understand language very well.

20 | THE DATA SCIENTIST CHAT GPT

The third novel concept that is now first class citizen in ChatGPT is Reinforcement Learning with Human Feedback, RLHF.

While it is important to acknowledge these limitations, I do not believe they are a significant issue. As always, it depends on how the models are used.

For example, Google’s search engine does not understand text in human terms, yet it provides accurate results most of the time. Similarly, language models like ChatGPT can be used for specific purposes without requiring a deep understanding of language. These models do not need to understand language in the way that humans do.

It is crucial to double-check or even triple-check the answers generated by these models, even if they make grammatical and semantic sense. Generated text can seem smooth and convincing, but it may contain contradictions or be logically impossible. Additionally, models of this type do not have a notion of time since they are trained on snapshots of data.

This means that, for example, if one asks who’s the president of the United States or Italy and who were the presidents before, ChatGPT cannot give you answers of this type because there’s no notion of time. Having no notion of time means there’s no way of telling which fact came first. President A and president B are both valid because they both were presidents at some point in time, just in two different timeframes. There is no notion of time, there is no notion of knowledge either. The so-called knowledge awareness is not present. The fact that what the model knows is a concept that is not there. That’s why they can make stuff up and mix it into real facts coming from the training set, plus the generated facts generated by the model itself, and they would still be mixed up and look legit. Because there is no awareness of knowledge, there is no knowledge

of knowledge. Another thing I found is that ChatGPT struggles with numbers and maths.

Mathematics is something that is not a piece of cake, except for the usual ‘two plus two’ and similar arithmetic questions. But for the rest, there is no capability that is at least human level capability for performing mathematics, and there is a reason behind that. It’s because the representation of mathematical concepts comes from text. There are much better ways to represent, for example, numbers and mathematical concepts than text. These are some of the limitations that I have in my head. There are probably many more. One, for example, is the fact that the number of parameters I see, I look at it as a big limitation because it doesn’t really help in the democratisation of these models and their availability.

We have to hope that OpenAI keeps ChatGPT available to the public. The day they will shut it down, we will have no ChatGPT and we will have to wait for the next player who has the financial capacity, and the infrastructure to provide a massive model like this one to the rest of the world. There is no democratisation in that respect. Also, there’s no democratisation in the way these models get trained. For that, one needs massive infrastructure and lots of data. These are very data hungry problems; terabytes of data is not even an exaggeration. Such requirements definitely restrict the number and type of people and organisations who can deal with and build models of this calibre.

THE DATA SCIENTIST | 21 CHAT GPT

FRANCESCO GADALETA is the Founder and Chief Engineer of Amethix Technologies and Host of the Data Science At Home podcast. datascienceathome.com

TIME SERIES I NG WITH DEEP LEARNING IN PYTORCH (LSTM-RNN)

AN IN-DEPTH FOCUS ON FORECASTING A UNIVARIATE TIME SERIES USING DEEP LEARNING WITH PYTORCH

By ZAIN BAQUAR

INTRODUCTION

Believe it or not, humans are constantly predicting things passively - even the most minuscule or seemingly trivial things. When crossing the road, we forecast where the cars will be to cross the road safely, or we try to predict exactly where a ball will be when we try to catch it. We don’t need to know the exact velocity of the car or the precise wind direction affecting the ball in order to perform these tasks - they come more or less naturally and obviously to us. These abilities are tuned by a handful of events, which over years of experience and practice allow us to navigate the unpredictable reality we live in. Where we fail in this regard, is when there are simply too many factors to take into consideration when we are actively predicting a large-scale phenomenon, like the weather or how the economy will perform one year down the line.

This is where the power of computing comes into focus - to fill the gap of our inability to take even the most seemingly random of occurrences and relate them to a future event. As we all know, computers are extremely good at doing a specific task over numerous iterations - which we can leverage in order to predict the future.

TIMES SERIES

22 | THE DATA SCIENTIST

WHAT IS A ‘TIME SERIES’?

A time series is any quantifiable metric or event that takes place over a period of time. As trivial as this sounds, almost anything can be thought of as a time series. Your average heart rate per hour over a month or the daily closing value of a stock over a year or the number of vehicle accidents in a certain city per week over a year. Recording this information over any uniform period of time is considered as a time series. The astute would note that for each of these examples, there is a frequency (daily, weekly, hourly etc) of the event and a length of time (a month, year, day etc) over which the event takes place.

For a time series, the metric is recorded with a uniform frequency throughout the length of time over which we are observing the metric. In other words, the time in between each record should be the same.

In this tutorial, we will explore how to use past data in the form of a time series to forecast what may happen in the future.

OBJECTIVE

The objective of the algorithm is to be able to take in a sequence of values, and predict the next value in the sequence. The simplest way to do this is to use an Auto-Regressive model, however, this has been covered extensively by other authors, and so we will focus on a more deep learning approach to this problem, using recurrent neural networks

DATA PREPARATION

Let’s have a look at a sample time series. The plot below shows some data on the price of oil from 2013 to 2018.

Many machine learning models perform much better on normalised data. The standard way to normalise data is to transform it such that for each column, the mean is 0 and the standard deviation is 1. The code below provides a way to do this using the scikit-learn library.

This is simply a plot of a single sequence of numbers on a date axis. The next table shows the first 10 entries of this time series. Just looking at the date column, it is apparent that we have price data at a daily frequency.

We also want to ensure that our data has a uniform frequency - in this example, we have the price of oil on each day across these five years, so this works out nicely. If, for your data, this is not the case, Pandas has a few different ways to resample your data to fit a uniform frequency.

SEQUENCING

Once this is achieved, we are going to use the time series and generate clips, or sequences of fixed length. While recording these sequences, we will also record the value that occurred right after that sequence. For example: let’s say we have a sequence: [1, 2, 3, 4, 5, 6].

By choosing a sequence length of 3, we can generate the following sequences, and their associated targets:

Another way to look at this is that we are defining how many steps back to look in order to predict the next value. We will call this value the training window and the number of values to predict, the prediction

TIME SERIES

[Sequence]: Target [1, 2, 3] → 4 [2, 3, 4] → 5 [3, 4, 5] → 6

THE DATA SCIENTIST | 23

Image by author.

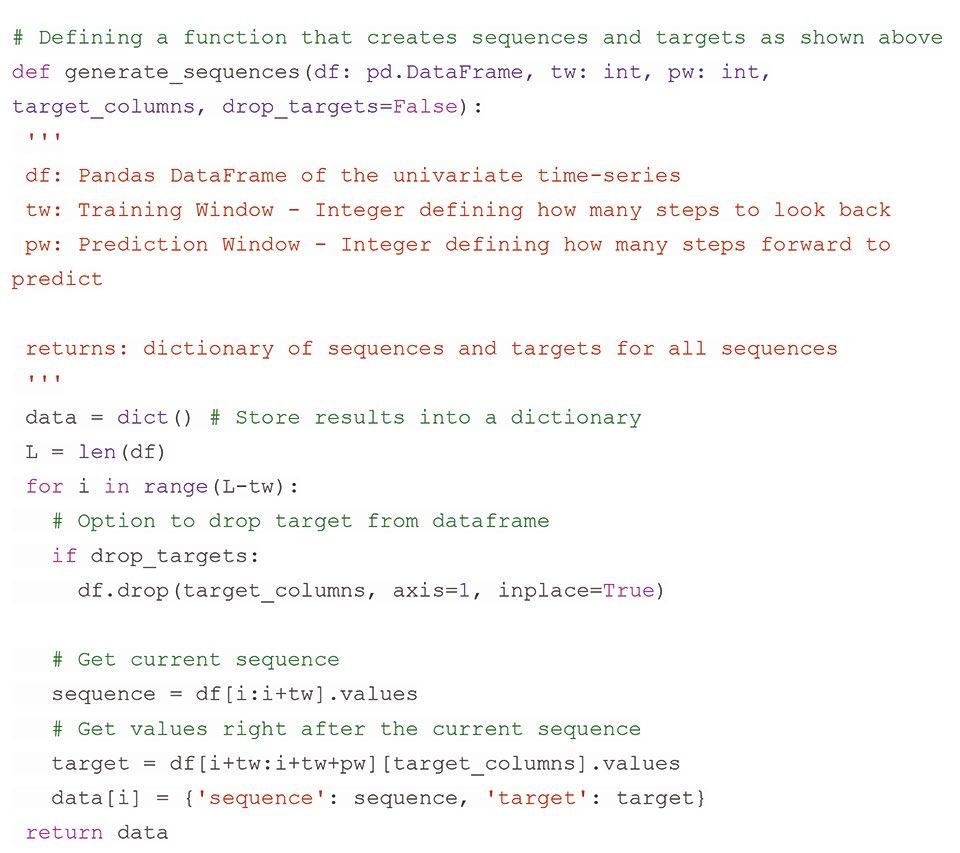

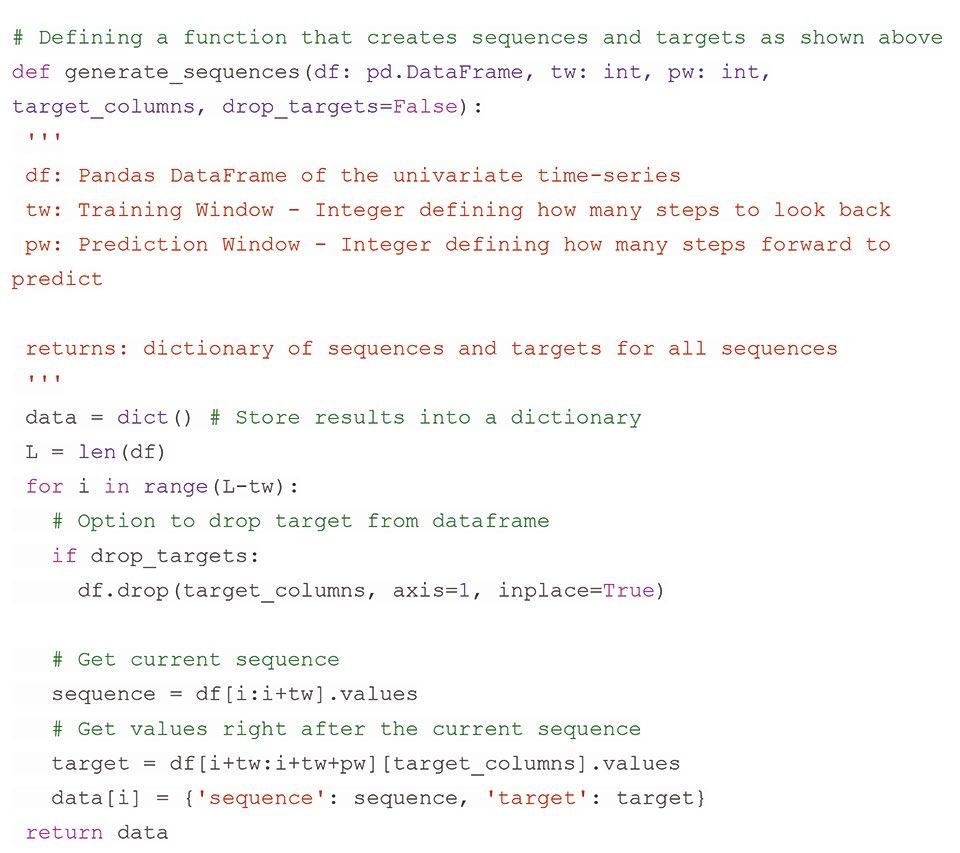

window . In this example, these are 3 and 1 respectively. The function below details how this is accomplished.

MODEL ARCHITECTURE

The class below defines this architecture in PyTorch . We’ll be using a single LSTM layer, followed by some dense layers for the regressive part of the model with dropout layers in between them. The model will output a single value for each training input.

PyTorch requires us to store our data in a Dataset class in the following way:

We can then use a PyTorch DataLoader to iterate through the data. The benefit of using a DataLoader is that it handles batching and shuffling internally, so we don’t have to worry about implementing it for ourselves.

The training batches are finally ready after the following code:

At each iteration the DataLoader will yield 16 (batch size) sequences with their associated targets which we will pass into the model.

24 | THE DATA SCIENTIST TIME SERIES

This class is a plug n’ play Python class that I built to be able to dynamically build a neural network (of this type) of any size, based on the parameters we choose - so feel free to tune the parameters n_hidden and n_deep_players to add or remove parameters from your model. More parameters means more model complexity and longer training times, so be sure to refer to your usecase for what’s best for your data.

As an arbitrary selection, let’s create a Long ShortTerm Memory model with 5 fully connected layers with 50 neurons each, ending with a single output value for each training example in each batch. Here, sequence_ len refers to the training window and nout defines how many steps to predict; setting sequence_len as 180 and nout as 1, means that the model will look at 180 days (half a year) back to predict what will happen tomorrow.

Here’s the training loop. In each training iteration, we will calculate the loss on both the training and validation sets we created earlier:

MODEL TRAINING

With our model defined, we can choose our loss function and optimiser, set our learning rate and number of epochs, and begin our training loop. Since this is a regressive problem (i.e. we are trying to predict a continuous value), a safe choice is Mean Squared Error for the loss function. This provides a robust way to calculate the error between the actual values and what the model predicts. This is given by:

The optimiser object stores and calculates all the gradients needed for back propagation.

THE DATA SCIENTIST | 25 TIME SERIES

Now that the model is trained, we can evaluate our predictions.

INFERENCE

Here we will simply call our trained model to predict on our un-shuffled data and see how different the predictions are from the true observations.

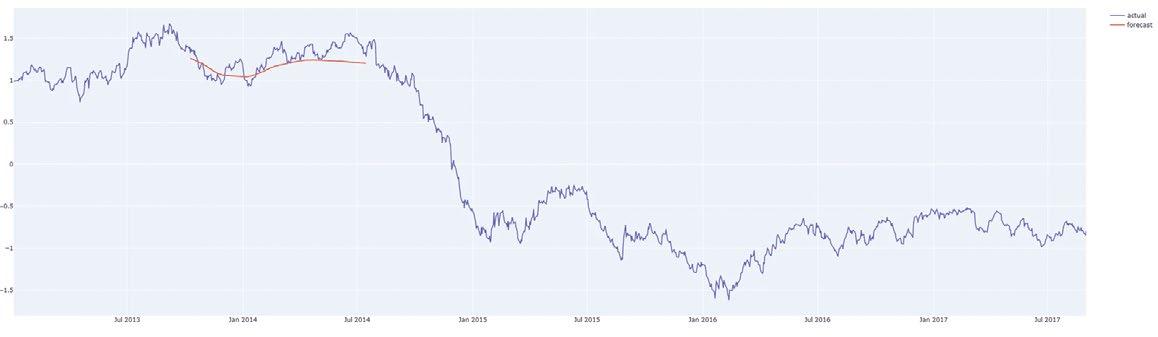

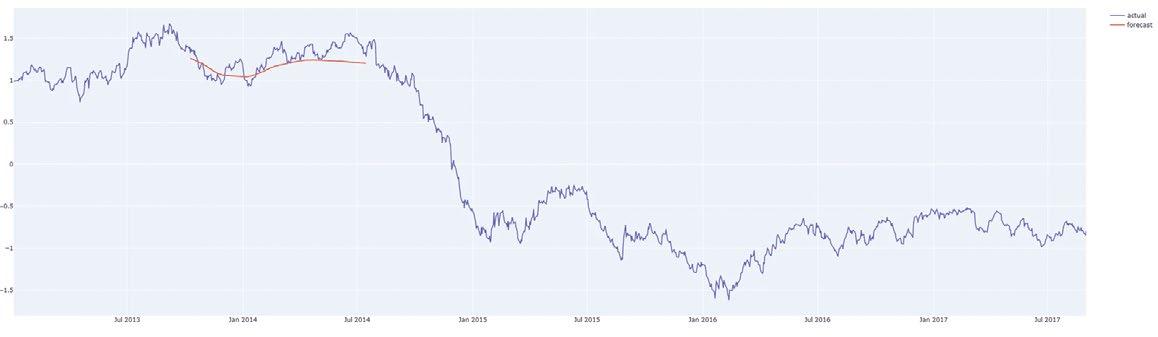

For a first try, our predictions don’t look too bad! And it helps that our validation loss is as low as our training loss, showing that we did not overfit the model and thus, the model can be considered to generalise well - which is important for any predictive system.

With a somewhat decent estimator for the price of oil with respect to time in this time period, let’s see if we can use it to forecast what lies ahead.

FORECASTING

If we define history as the series until the moment of the forecast, the algorithm is simple:

1. Get the latest valid sequence from the history (of training window length).

2. Input that latest sequence to the model and predict the next value.

3. Append the predicted value on to the history.

4. Repeat from step 1 for any number of iterations.

One caveat here is that depending on the parameters chosen upon training the model, the further out you forecast, the more the model succumbs to it’s own biases and starts to predict the mean value. So we don’t want to always predict too far ahead if unnecessary, as it takes away from the accuracy of the forecast.

This is implemented in the following functions:

Let’s try a few cases.