The Scholars' Journal has been printed on carbon balanced paper and has offset 0.036 tonnes of CO2 through Verified Carbon Standard (VCS) reduction projects thereby offsetting carbon emissions and helping to prevent climate change.

Universities are now specifically studying you – the students who have written in this journal and your peers – because you are (mostly!) Generation ‘Alpha’, a new generation soon to start applying to universities. Born between c.2009-10 and 2024, Gen Alphas are the largest single generation ever to exist: there are about 2 billion of you worldwide. You have grown up in an environment that is synonymous with technology: the year Gen Alpha started (2010) was the year the iPad was released, Instagram was launched and ‘App’ was the word of the year. You have lived through the first modern global pandemic and your worldview has forever been shaped by it. You have a heightened environmental awareness, in part because you also have the longest predicted life span of any generation so far, with some of you living into the 22nd century. You already have incredible brand and cultural influence as well as social media power and, before this decade is out, as some of your generation start at university, you will have an economic footprint that is predicted to exceed 5.5 trillion US dollars.

What will you do with that power and possibility? I think the answers can be seen clearly in the topics you have chosen to write about in this journal and which clearly matter to you: from enhancing our medical quality of life to protecting our environment; from understanding the way our brains work and influence our behaviour to how we present ourselves to the world; from how we should engage with others to what sense we can make of the geopolitics that swirl around us. There is no shortage of hope for the future in reading your thoughts, arguments, concerns and ideas, which bear witness to your fantastic curiosity about yourselves and the world around you.

The question for institutions such as mine is how we can help you on your journey by delivering the kinds of experiences, skills and knowledge you want and need in a world in which AI seems to be able to do almost anything. And for me, above all, that is about how we can help you to experience being part of a global community of learners and practitioners, a melting pot of cultures, and to develop what is often termed ‘global competency’. In an age when the world seems increasingly crazy, and in which we can all easily lose heart as to our individual power to effect change, the ability to engage successfully with people from a myriad of different backgrounds, cultures and experiences – with respect, openness and genuine interest – will be fundamental in empowering you to work together both to respond to problems and to make a difference.

As you take your next steps in your journey, with curiosity, determination, ambition, resilience and enquiry as Cokethorpe rightly encourages you to do, give some thought also to this. 2025 sees the end of Generation Alpha and the beginning of Generation Beta: those born between 2025 and 2039. And you will be fundamental in shaping the world they grow into and the people they become!

Professor Michael Scott
Professor of Classics and Ancient History, Pro-Vice-Chancellor (International)
The University of Warwick, UK
@profmscott

You do not have to go far to feel the crazy world Professor Scott refers to. Open your email; there is the keyboard-warrior’s rant. Scroll through your socials; you are engulfed by those needing to be affirmed. Turn on your TV; it’s a diet of disasters. It seems we are addicted to the easy answer; deserve to be morally outraged; feel an entitlement to be right. Can respect, openness and interest invigorate our curiosity about the uncertain and the unknown, to help us respond to problems so we can make a difference?

Yes: but a curiosity worth its name is the sum of many parts. Curiosity may lead us to enquire, but it requires sustained intellectual rigour to nurture it. Ambition is energising, but without determination in the face of failure, it may come to little. Should you be a scholar now, aspiring to be one in the future, or looking back on your past achievements, we hope this journal demonstrates the liberating wisdom of Immanuel Kant: ‘Dare to be curious’.

Miss Hutchinson and Mr Elkin-Jones
Heads of Scholars

The Legal Drinking Age: Time for a Change?

Henry O'Brien - Lower Sixth

Is Free Will an Illusion?

Will Chandler - Second Form

How Close are we to a Dystopian Future?

Immy Harris - Fourth Form

Is Farming Undervalued by Society in the UK?

Florence Nixey - Second Form

What are the Effects of Conspiracy Theories?

Katy Stiger - Fourth Form

Communism: the Worst or the Best 'ism'?

Joe Norman - Lower Sixth

Should We Limit Freedom of Speech?

Will Hansen - Lower Sixth

Appendix

Additional references

Eva Graves, Fourth Form, Gascoigne House

Allen Scholar - Scholar Supervisor: Sam Farr, Upper Sixth, Swift House

Editor: Miss Hutchinson

One in 37 people worldwide will be diagnosed in their lifetime. 50 people are diagnosed every day in the UK alone. At least 901 people die every day. Parkinson’s Disease is a neurodegenerative disease that presents itself through various symptoms. Neurology has made significant strides in understanding and treating movement disorders, particularly Parkinson’s Disease. However, this extra research has brought an understanding that many different brain disorders fall under the umbrella term the general public knows as 'Parkinson’s Disease'. Presenting through a broad spectrum of symptoms, these disorders (known as Parkinsonian diseases or disorders) include conditions known as 'primary' disorders, such as Dementia with Lewy bodies, Progressive Supranuclear Palsy, and familial Parkinsonian disorders such as Huntington’s Disease. There is also another subcategory known as 'secondary' disorders, including drug-induced Parkinson’s and toxin-induced Parkinson’s. This paper will focus on the 'primary' diseases – specifically Parkinson’s Disease, Progressive Supranuclear Palsy, Dementia with Lewy bodies and familial Parkinsonian disorders. While most of them share common features such as tremors, rigidity, and bradykinesia (impairment of voluntary motor control and slow movements or freezing), the original causes and progression of each can vary markedly. Sometimes, treating a certain symptom can even exacerbate other symptoms. This complexity means that, when seeking a diagnosis, there is no single approach to treatment. The desires of different families or patients may be driven by a whole host of personalities, priorities and symptoms.

Common similarities between Parkinsonian Diseases

Parkinsonian Diseases exhibit overlapping symptoms, especially because they are all neurodegenerative diseases. This means they are chronic conditions that progressively damage and destroy areas of the nervous system, chiefly the brain. These symptoms can be split into five categories: motor symptoms, cognitive symptoms, behavioural symptoms, sleep disturbances and autonomic dysfunctions.

Motor symptoms refer to symptoms affecting movement and balance. Bradykinesia, the slowness of movement, is one of the three main motor symptoms associated with Parkinson’s Disease, but can also be common in all Parkinsonian disorders. Along with bradykinesia, rigidity (muscle stiffness) and tremors are the other main motor symptoms of Parkinsonian diseases; tremors can, however, vary in presentation. Postural instability, or balance issues, is also very common amongst patients with Parkinsonian diseases.

Cognitive symptoms are caused by mild cognitive impairments, which are problems with a person’s ability to think, learn, remember, use judgement and make decisions (Anon, n.d.). These cognitive impairments then lead to progressive cognitive decline, which refers to the gradual loss of thinking abilities such as learning, remembering,

The patient’s experience: My grandmother’s journey towards getting an exact diagnosis

In March 2020, my grandmother started having hallucinations. This was probably her first significant Parkinson’s symptom. However, she did not tell anyone about them until around a year later.

Having struggled with her blood pressure throughout adulthood, she had some high blood pressure scares that meant she had to go to the Emergency Room (or Accident and Emergency, as it is commonly still called in the UK) a few times. Eventually, in spring 2021, she ended up in an Intensive Care Unit (ICU), not because of high blood pressure, but instead due to dangerously low sodium causing neck pain. We believe the low sodium was caused by a diuretic she was put on due to the high blood pressure. During the ICU stay, after severe hallucinations, she was diagnosed with hospital delirium. Looking back, doctors and family alike now acknowledge this was likely to have been early Parkinsonian symptoms.

My grandmother’s General Practitioner (GP) recommended going to a speech therapist after her repeated struggles to both think of and then form words. Part of the therapy included a cognitive test known as a MoCA test, in which she got an unexpectedly low score, indicating severe cognitive impairments and issues with executive function. Her therapist then recommended visiting a neurologist.

The subsequent visit to the neurology office in autumn 2022 included a battery of tests, at the end of which the doctors stated they were 90-95% sure she had Parkinson’s Disease but did not, or could not, specify which form of Parkinson’s. This highlights the challenges associated with Parkinsonian diseases, because there are very few concrete tests available during life. Everyone in our family sighed with relief when we had a diagnosis, but little did we know that what would follow would be an ongoing struggle with multiple carers, ideas from professionals and countless hours in numerous doctors’ and therapists’ offices.

She was almost immediately put onto carbidopa levodopa, one of the only available drugs used to treat primarily the motor symptoms of Parkinson’s Disease. Because dementia symptoms are also a large part of her disease, she was also put on a dementia medication called donepezil. The levodopa significantly improved her stiffness and allowed her to move more freely. After fairly positive results with the initial dose she was prescribed, the doctors decided to up her dosage to see if they could gain a little more function. However, at this point the negative side effects, such as falling asleep even as she was putting food into her mouth and extremely wobbly legs, far outweighed any positives gained.

In February 2023, my grandmother started talking strangely (making nonsense sounds and stringing words together that had no connection) and having vision problems. Because these symptoms seemed as though they could be indicative of a stroke, she was taken to hospital again. After doing some tests and noticing difficulties with eye movement, the hospital neurologist suggested that my grandmother may have Progressive Supranuclear Palsy (PSP). Following this hospital stay, she was determined to be too compromised to live on her own and began splitting her time between two of my aunts’ houses.

However, when the idea of PSP was followed up with her neurologist, he was sceptical that this was the right diagnosis, and equally sceptical that it mattered one way or another as he didn’t believe changing the diagnosis would change the treatment plan. Despite this, my grandmother was sent to a neuro-optometrist to confirm, and they agreed that it was unlikely to be PSP. Regardless of these medical opinions, she has continued to exhibit some of the hallmark symptoms of PSP. This is significant because the life span of someone with PSP is shorter than most other Parkinsonian diseases. If families are made aware that PSP is a possibility, it will not be as shocking when they find their loved one suddenly at the end of their life.

Later on, in summer 2024, she was put on another motor symptom medication called amantadine, which was originally used to treat influenza but is now often used to treat Parkinson’s patients. Despite improving her physical state significantly, this drug caused such severe confusion, dementia and other cognitive impairments that she was taken off it within a week.

In autumn 2024, my grandmother had an online appointment with a dementia specialist. After some discussion, the specialist thought that it was probable that she has Dementia with Lewy bodies (DLB) and recommended getting a DaTscan, which can reveal the loss of dopamine activity associated with Lewy body diseases, but cannot differentiate between DLB and Parkinson’s Disease (PD). Our family was sceptical it was a good idea because of her negative history with hospitals and scans, and her neurologist was again not sure if it would make a big difference to her treatment plan. However, the priority of the decision was reduced when she started having falls and her general care needs reached a level where my aunts could no longer take care of her on

Freya Vincent, Third Form, Queen Anne House

Allen Scholar - Scholar Supervisor: Ella Hogeboom, Fifth Form, Swift House

Editor: Mr Elkin-Jones

As humanity rapidly improves its societies, advances in technology arrive at an ever-brisker pace. This includes the development of artificial intelligence, quantum computing, and smart clothing, to name but three. Accompanying this is the biotechnological process of genetic enhancement and eukaryotic modification. Recently, though, the two sides – one in favour of genetic modification and the other against it – have become clearer and simpler as people educate themselves and realise what genetic modification actually entails: either ‘we should do it because it helps people have food, as with the development of Golden Rice’ (a lifesaving, highly nutritious plant that reduces sight loss in impoverished communities); or ‘we should not do it due to the risk of failed experiments wrecking the environment’, a risk said to outweigh the previous argument.

As always with humanity, the question is not if but when?

Since the days when humanity looked up to the sky and longed to fly, or looked further and set its sights on claiming the moon, we have been defying nature’s boundaries. Along with this comes the responsibility to do so in an ethical way, so as not to disrupt the delicate natural balance of the world, an area in which (considering the climate crisis as only one example) we have so far had a chequered track record, to say the least. When it comes to genetic engineering, a significant risk is that we will never truly know the impact a new species has on the environment it lives in until it is released. The basic laws of science give us an insight into the short-term effects. However, the long-term effects are more challenging to predict, with the range of risks growing as the species evolves.

Though many people claim to know what genetic modification is, most envision a Captain America-like transformation where you step into a chamber, inject the super serum and out pops a perfect specimen of the species you are modifying. In reality, it is far more labour-intensive and time-consuming, given the trial and error that must occur to make a single organism successful. By crossbreeding plants and animals, our Stone Age ancestors realised they could boost the amount of food they produced (BBC Bitesize, n.d.). Whilst it is possible, as our ancestors did, to change the traits in foods naturally with selective breeding, this process takes a long time, and breeders may not know which change led to the desirable advantage being created. Modern-day science is allowing us to improve this process. In basic terms, genetic

times more beta-carotene per plant than specimens grown in the greenhouses (LSU, 2005; uploaded online 2015). This is advantageous to the local people growing Golden Rice in their communities, as they do not need to spend extra money on buying a greenhouse or creating greenhouse conditions, yet they can grow rice with a higher vitamin content that benefits consumers who are deficient in vitamin A.

Although GMOs can clearly be beneficial to human health, the legality and ethics of the matter are challenged by many, including the campaigning organisation Greenpeace. It led campaigns against genetically modified foods, which prompted 107 Nobel laureates to write to it in 2016 asking it to abandon its campaign (Achenbach, J., 2016). As well as this, in 2024 the Philippine Court of Appeals issued a cease-and-desist order for the growth of Golden Rice in the country, citing a lack of scientific certainty regarding its health and environmental impact (Bautista, 2024).

Although its definite impact on health has not yet been fully explored, humans should be allowed to genetically modify other species. It is true that the potential environmental impact is great, but as the saying goes: 'With great power comes great responsibility'. Given the chaos that humans have already inflicted on our planet, I believe that if we take responsibility, we should be allowed to genetically modify organisms. We have successfully shown that we can modify living cells to adopt desired traits in a safe, ethical way that benefits humans and causes no detrimental harm to the planet (an example of this is Golden Rice). People claim that the environmental impact is harmful to the planet because it disrupts the natural balance that nature intended.

Unfortunately for those who stand against creating and consuming GMOs, there has been no major disaster (if any at all) that paints GMOs in a negative light. That does not mean that one could not occur, but we have rules and regulations in place to prevent such a thing happening. The benefits and rewards to be reaped greatly outweigh the needle-in-a-haystack chance of a catastrophe occurring and harming the population. No GMO has made it into the wider world and caused a large (or small) negative impact on the environment, so there should be no need for hostility towards them. Caution should be advised, and it is natural for us as humans to feel this way towards something we know rather little about, considering the many ways the genetic modification process could go wrong. Accompanying this is the human desire to be curious and learn more about our planet. This is what makes us different to other animals: the drive that pushes us to dare to dream and to make the breakthroughs in science that allow us to create things that really impact the world. Our ability to engineer beneficial foods that aid humanity and do not harm the planet should not be feared but celebrated.

So far, GMOs and the Green Revolution, the 20th century agricultural project that utilised plant genetics, fertilisers and intelligent irrigation systems to bring food to the hungry masses (Spanne, A., 2021), have helped an estimated one billion people evade starvation and secured the livelihoods and jobs of many more (Ventura, L., 2022).

Following the release of better crops that have been made drought resistant, or crops that do not require pesticides and fertilisers, farmers have not needed to buy products that aid plant growth. As a result, farmers have saved money and achieved larger profits than in previous years, when they worked with non-GMO crops. On a global scale, this has saved the world 83 trillion US dollars and spared 223 million people from returning to the developing population by giving them a reliable food source (English, C., 2021).

As of now, there are many regulations governing the genetic modification process. These have been drawn up by the UK Competent Authority (the Health and Safety Executive and the Secretary of State for the Department for Environment, Food and Rural Affairs acting jointly) to form the GMO(CU): the Genetically Modified Organisms (Contained Use) Regulations. This includes a risk assessment to be done on all GMOs and GMMs (Genetically Modified

Ollie Black, Second Form, Feilden House

Scholar Supervisor: Finn Van Landeghem, Upper Sixth, Vanbrugh House

Editor: Miss Hutchinson

When considering the rise in diagnoses of neurodiversity, it is important to establish exactly what the term means. It is now used so broadly that there are many different definitions, some more technical than others. For example, the Oxford dictionary defines it as 'the range of differences in individual brain function and behavioural traits, regarded as part of normal variation in the human population' (OED online, 2024). If we were to take this definition of ‘normal variation’, then you could argue that a diagnosis may not be needed or be useful at all. However, Harvard Medical School defines it as 'the idea that people experience and interact with the world around them in many different ways; there is no one 'right' way of thinking, learning, and behaving, and differences are not viewed as deficits' (Baumer and Frueh, 2021). What this definition does so well is acknowledge that although there may be significant differences in how people’s brains work, this is not a deficit, and if you do have a diagnosis there is nothing wrong with you; your brain just works slightly differently to what society statistically considers ‘normal’.

Using this definition as a broad umbrella term to describe ADHD, Dyslexia and Autism, we will examine to what extent there has been an increase in diagnoses. The figures reveal a startling increase: an article published on 19 August 2021 revealed that there was a 787% increase in the recorded incidence of autism diagnoses between 1998 and 2018 (ACAMH). According to The Guardian newspaper, '80 years ago, autism was thought to affect one in 2,500 children. That has gradually increased and now one in 36 children are believed to have autism spectrum disorder (ASD)'. Around one in 57 (1.76%) children in the UK is on the autistic spectrum, significantly higher than previously reported, according to a study of more than seven million children carried out by researchers from the University of Cambridge’s Department of Psychiatry, in collaboration with researchers from Newcastle University and Maastricht University.

There have been a number of different factors suggested to explain this increase. For example, Mrs Rushton, a Learning Support Teacher at Cokethorpe School, suggests that one of the leading factors is 'a greater acceptance of neurodiversity' (Rushton, 2024) throughout society, whereas health writer Julia Ries believes that air pollution may play a role in the increase (Ries, 2023).

This essay will attempt to evaluate three of the most discussed driving factors of this increase: access to and reliability of testing; a growing awareness and understanding of neurodiversity; and finally the changing physical environment. Although all three factors are undoubtedly important, this essay will demonstrate that the most significant of the three is a change in physical environment, as it is the only factor that is arguably creating new cases instead of diagnosing pre-existing cases.

Testing for neurodiversity is better than it has ever been, and more accessible than ever. We can see this in the rising demand for learning support teachers to help teach neurodiverse students. Education, Health and Care (EHC) plans increased by 9.9% in 2021 alone, with a 23% rise in initial requests for an EHC plan since 2020 (Protocol Education). According to the BDA (British Dyslexia Association), 'both specialist teachers and psychologists can diagnose dyslexia'. Inevitably, this improved access to testing and earlier diagnosis or intervention will have a significant effect on the number of diagnoses. However, the BBC claims that only a tenth of neurodivergent students receive a diagnosis, and access to testing remains an issue, especially for less privileged students (BBC, 2019).

Some people are finding that if their child is not in private education, it is quite hard to get tested or be referred to get tested. Some children can go through their entire school life needing a diagnosis but are unable to be tested or diagnosed because of lack of resources in some schools. In addition, larger schools can struggle to notice if a child is exhibiting signs of neurodivergence: 'Out of 8.7 million school children in England, the report estimated about 870,000 of them have dyslexia but fewer than 150,000 were diagnosed, according to Department for Education figures' (BBC 2019). This is not just down to accessibility but

Bing Brown, Fourth Form, Queen Anne House

Scholar Supervisor: Alex May, Fifth Form, Vanbrugh House

Editor: Miss Hutchinson

How do you think the most intricate melodies from Beethoven and Liszt relate to the first scale you will learn on an instrument? They all rely heavily on the language of maths. Although they seem far apart, the worlds of music and maths have always had a strong bond, from the Ancient Greeks, who were some of the best minds in human history, to Taylor Swift's hit song, Shake it Off. They both use mathematical patterns, whether they are aware of it or not. Numbers, sequences, and symmetry all work to create the best and most complete sound that we find so beautiful and that all people can appreciate, whether it be Chopin’s Nocturne in E flat major or, more likely, Shake it Off. However, some people believe that they are from completely different worlds. In this essay I will explore how exactly maths influences and defines the music we all listen to.

It is said that one day, Pythagoras, the man behind one of the most famous equations in the world (a² + b² = c²), was walking down the street past some blacksmiths who were hammering away on their anvils. He heard hammer A and hammer B strike together and noticed that this produced a nice sound or, in musical terms, consonance. But when hammer B and hammer C struck together, they sounded not so nice (dissonance). Hammer A and hammer C produced another nice sound, and hammer A and hammer D produced a song-like sound (Waldron, 2024). Pythagoras went to investigate and discovered that the hammers weighed 12, 9, 8 and 6 kilos, respectively. A and D were in a ratio of 2:1, which is the ratio of an octave, in which you double or halve the frequency: an octave above a 440 Hz sound would be 880 Hz and an octave below would be 220 Hz. B and C weighed 9 and 8 kg, and their ratios to D were 3:2, which is a perfect fifth, and 4:3, which is a perfect fourth (Kenneth Sylvan Guthrie, David R 1987). The dissonant harmony between B and C was a 9:8 ratio, which is a major second. Nature has a hidden natural order of things and that extends to every corner of the world. Esperanza Spalding once said that 'solving an equation in maths is like mastering a piece of music. Nothing gives the same satisfaction as the end of a piece or equation, but you can also make the same mistakes, you can hit a wrong note and create dissonance or do a wrong calculation. They both are unnoticeable until it is too late, but when they are right the outcome is a feeling like no other' (Ancient Maths and Music).
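The hammer story can be checked with a few lines of Python. This is only an illustrative sketch: the weights are the ones given in the legend above, while the names `HAMMERS`, `INTERVALS`, `interval_between`, `octave_above` and `octave_below` are made up for this example.

```python
from fractions import Fraction

# Hammer weights in kilos, as given in the story.
HAMMERS = {"A": 12, "B": 9, "C": 8, "D": 6}

# Interval names for the ratios mentioned in the text.
INTERVALS = {
    Fraction(2, 1): "octave",
    Fraction(3, 2): "perfect fifth",
    Fraction(4, 3): "perfect fourth",
    Fraction(9, 8): "major second",
}


def interval_between(first, second):
    """Return the simplified weight ratio (heavier over lighter) and its interval name."""
    heavy, light = sorted((HAMMERS[first], HAMMERS[second]), reverse=True)
    ratio = Fraction(heavy, light)  # Fraction reduces 12/6 to 2/1 automatically
    return ratio, INTERVALS.get(ratio, "unnamed")


def octave_above(freq_hz):
    """Going up an octave doubles the frequency."""
    return freq_hz * 2


def octave_below(freq_hz):
    """Going down an octave halves the frequency."""
    return freq_hz / 2


if __name__ == "__main__":
    for pair in ("AD", "AC", "AB", "BD", "CD", "BC"):
        ratio, name = interval_between(pair[0], pair[1])
        print(f"{pair[0]}:{pair[1]} = {ratio.numerator}:{ratio.denominator} ({name})")
    print(octave_above(440), octave_below(440))
```

Every pairing comes out as a simple whole-number ratio; only B against C gives 9:8, the one pairing the story describes as dissonant.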

You have all most likely heard the big names in music like Debussy, Beethoven, and Liszt. While they may not have been trying to use maths to make music, maths has been used. The most important mathematical ideas, particularly in classical music, are the golden ratio and the Fibonacci sequence. For those of you who do not know what the golden ratio is, imagine two lines, A and B, where A is longer. The golden ratio occurs if A/B = (A + B)/A; its value is always approximately 1.618 and it is everywhere in nature: in the spirals present on some animals, in humans and, most importantly, in music (Schielack, Vincent P., 1987). If a piece of music is ten minutes long, it would be safe to assume that the climax of the piece would be about six minutes through. This creates a very natural, flowing melody, and putting the emotional climax of the piece at that position makes it incredibly pleasing to the ear. One of the most famous composers in history, Debussy, completely unknowingly used the golden ratio in his most recognisable pieces (Howat Roy, 2009). This further illustrates how clever these composers were; their intuition to place everything so perfectly never ceases to amaze. Another concept closely related to the golden ratio is the Fibonacci sequence, which is important to the way the structure of a melody is arranged. For example, a musical phrase lasting three beats could be followed by a phrase of five beats and then by a phrase of eight beats, and so on. This gives the piece a natural movement and groups things nicely. It is also prevalent in time signatures: a bar of 5/8 is usually grouped into three and two, and two, three and five are all numbers in the sequence.
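The arithmetic behind the "six minutes into a ten-minute piece" claim can be sketched in Python. This is a rough illustration under simple assumptions: the climax is taken to fall at the piece's length divided by the golden ratio, and the function names `golden_climax` and `fibonacci` are invented for the example.

```python
import math

# The golden ratio: the positive solution of A/B = (A + B)/A with B = 1.
PHI = (1 + math.sqrt(5)) / 2  # approximately 1.618


def golden_climax(duration_minutes):
    """Point in the piece that splits its length in the golden ratio."""
    return duration_minutes / PHI


def fibonacci(count):
    """Return the first `count` Fibonacci numbers, starting 1, 1, 2, 3, 5, 8."""
    terms = []
    a, b = 1, 1
    for _ in range(count):
        terms.append(a)
        a, b = b, a + b
    return terms


if __name__ == "__main__":
    print(round(PHI, 3))
    print(round(golden_climax(10), 2))
    print(fibonacci(8))
```

The two ideas are linked: dividing each Fibonacci number by the one before it (8/5, 13/8, 21/13 and so on) gives values that get closer and closer to the golden ratio.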

Jazz, similarly, is all about patterns. It is some of the most complex music to play, and the reason it is so difficult is the intricate patterns and sheer mental ability needed to improvise something on the spot. A big element of jazz is syncopation. You can think of syncopation as a fraction. Think about a standard 4/4 melody: it goes 1-2-3-4. Simple, right? However, to syncopate a rhythm, you have to time everything on the 'off beat'. If we carry on in 4/4, the melody is often split into crotchets (1 beat), minims (2 beats) or quavers (½ a beat). Usually, you would emphasise the first, second, third and fourth beats. However, syncopation requires you to emphasise a different beat, so you may emphasise not the first beat but maybe the first and a half beat or, if you are feeling confident, the first and a quarter beat (Brenna Yan Tin, 2024). For example, a dotted crotchet starts on beat one and runs into beat two, and then you emphasise a short quaver; this upsets the listeners' perception of the music, making it all the more interesting. Another extremely mathematical part of jazz is improvisation, a key part of which is the jazz scales. There are fifteen jazz scales; however, for the purposes of this article I will focus on the main three. These are the major scale, Dorian mode and Mixolydian mode. Although the major scale is not a particularly complex idea, it is the basis for all harmony. It is based on semitones (like an F to an F sharp) and tones (for example F to a G). To construct a major scale, you would choose a starting note and go up in the pattern tone, tone, semitone, tone, tone, tone, and semitone. In short, it works because the placement of the semitones keeps the scale stable; rearranging the steps in any other way disrupts the natural harmony and tension, making it sound discordant and less pleasing to the ear (B.W, 2024). The second one is Dorian mode. Dorian mode uses the same notes as a major scale except that it starts a tone up. For example, in C major the Dorian scale would start on a D. The main difference is that instead of having a major third it contains a minor third. Because of that minor third it is technically minor; however, unlike the natural minor scale it has a major sixth. This gives it a very identifiable jazzy feel. In terms of intervals it goes: tone, semitone, tone, tone, tone, semitone and tone. It is an extremely important scale to those who improvise jazz, and without it everything would sound more pedestrian and boring (From Subject to Style, 1986). Finally, the Mixolydian mode is constructed on the fifth note of the major scale, so going back to C major the Mixolydian scale would start on G. Unlike Dorian mode it keeps the same major third, meaning as a whole it is major. However, it contains a flattened seventh, which gives it a bluesy and unfinished feel. The flattened seventh is exactly ten semitones above the tonic, which is ever so slightly dissonant to the tonic, giving it quite a 1920s film introduction feeling (Van der Merwe, 1989). In intervals it is: tone, tone, semitone, tone, tone, semitone and tone. Again, the placement of semitones makes the scale lighter than the minor scale but stops the resolution of the major scale (Mel Bay, 1991).
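The three interval patterns described above can be turned into a small Python sketch that builds each scale from a starting note. It is a simplified illustration: notes are spelled with sharps only, and the names `NOTES`, `PATTERNS`, `build_scale` and `semitones_above_tonic` are invented for this example.

```python
# The twelve notes of the chromatic scale (sharps only, to keep things simple).
NOTES = ["C", "C#", "D", "D#", "E", "F", "F#", "G", "G#", "A", "A#", "B"]

TONE, SEMITONE = 2, 1  # step sizes measured in semitones

# Interval patterns exactly as listed in the text.
PATTERNS = {
    "major": [TONE, TONE, SEMITONE, TONE, TONE, TONE, SEMITONE],
    "dorian": [TONE, SEMITONE, TONE, TONE, TONE, SEMITONE, TONE],
    "mixolydian": [TONE, TONE, SEMITONE, TONE, TONE, SEMITONE, TONE],
}


def build_scale(start, mode):
    """Return the seven notes of the scale starting on `start`."""
    index = NOTES.index(start)
    scale = [start]
    # The final step only returns to the starting note an octave up, so skip it.
    for step in PATTERNS[mode][:-1]:
        index = (index + step) % 12
        scale.append(NOTES[index])
    return scale


def semitones_above_tonic(mode, degree):
    """Distance in semitones from the first note to the given scale degree (1 to 7)."""
    return sum(PATTERNS[mode][: degree - 1])


if __name__ == "__main__":
    print(build_scale("C", "major"))
    print(build_scale("D", "dorian"))
    print(build_scale("G", "mixolydian"))
    print(semitones_above_tonic("mixolydian", 7))
```

Starting the Dorian pattern on D and the Mixolydian pattern on G gives exactly the notes of C major in a different order, which is why both modes are said to be built on notes of the major scale.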

However, some may argue that there are people who are incredibly musical and yet cannot do any maths. While this may be true, there is an explanation: different parts of the brain are needed for each. While music does contain lots of maths, it also requires an emotional nuance that does not depend on mathematical skills. Similarly, maths requires abstract reasoning and logic, which are not necessarily present in music. Musicians also tend to have a more developed auditory cortex and very good fine motor skills (MIC, 2014). Mathematicians, on the other hand, rely on the frontal lobe, the part of the brain associated with logic and reasoning (Dehaene, 2011). Whatever the musician’s maths ability may or may not be, one thing is clear: music requires years and years of dedication, and without that the musician will never be truly magnificent, despite possessing innate talent. This leads some people to give up, either because they are not seeing enough progress or because they just do not enjoy it. And some people simply prefer maths, so dedicate more time to it. Maths also requires more of a formal education from a teacher who really knows what they are doing; in music, teaching is also incredibly important, but musicality is innate and can be developed.

This article started with the Ancient Greeks and ended in the present day: that’s 500 BC to 2025, or roughly 2,525 years. The Greeks were the first to truly understand this complex relationship. They laid the basis for later discoveries like the Fibonacci sequence. It is through them that we are able to hear the wonderful music we have today. Equally, Debussy and other famous composers were pioneers without even knowing it; their music, which perfectly represents maths they may not even have known about, further cements my point about the connection. And finally, jazz. It is the most complex form of music and the amount of quick thinking it requires is amazing. The scales and the way players improvise are all maths, and being able to do that on the spot really does require a very clever individual who knows exactly which patterns to play at exactly the right time. Pythagoras once said, 'There is geometry in the humming of strings, there is music in the spacing of spheres.' This perfectly demonstrates the point I am making: the two co-exist, and without maths there would be no music.

References:

Berle, A (1991) Mel Bay’s Encyclopaedia of Scales, Modes, and Melodic Patterns. Missouri Pacific, Mel Bay Publications. Accessed 3 December 2024.

Dehaene, S (2011) The Number Sense: How the Mind Creates Mathematics Oxford: Oxford University Press. Accessed 5 December 2024.

Guthrie, K (1987) The Pythagorean Sourcebook and Library: An Anthology of Ancient Writings Which Relate to Pythagoras and Pythagorean Philosophy: Michigan, Grand Rapids. Accessed 2 December 2024.

MIC (2014) Science Shows How Musicians' Brains Are Different from Everybody Else’s. Accessed 5 December. Available at: https://www.mic.com/articles/96150/science-shows-how-musicians-brains-are-different-from-everybody-elses

NOVA (2015) Ancient Maths and Music. PBS Learning. Available at: https://www.pbslearningmedia.org/resource/nvmm-math-mathmusic/ancient-math-music/. Accessed 2 December 2024.

Roy, H (2009) Debussy in Proportion: A Musical Analysis. Cambridge, Cambridge University Press. Accessed 3 December 2024.

Schielack, VP (1987) The Fibonacci Sequence and the Golden Ratio. National Council of Teachers of Mathematics, Virginia, Reston. Accessed 3 December 2024.

Taruskin, R (1986) From Subject to Style: Stravinsky and the Painters. Berkeley, University of California Press. Accessed 3 December 2024.

Van der Merwe, P (1989) Origins of Popular Style: The Antecedents of Twentieth-Century Popular Music. Oxford: Oxford University Press. Accessed 5 December 2024.

Wilson, B (2024) Lesson on How to Construct a Major Scale. Taught 20 May 2024.

Waldron, A (2024) Lesson on Pythagoras’s Philosophy. Taught 14 October.

Yan Tin, B (2024) Lesson on How to Play Syncopated Rhythm. Taught 12 March 2024.

Images:

Dolo Iglesias (2017) Person playing piano. Available at: https://unsplash.com/photos/person-playing-piano-FjElUqGfbAw

and rewards can be received from many different sources, whether it is winning a football match, eating a desired chocolate bar or scrolling through TikTok. But how many of you know that these feelings are ultimately caused by a chemical called dopamine, 'a biological chemical that gets released in neurotransmitters when there is an electrical impulse which allows the dopamine to bind to specific receptors on a receiving neuron' (NeuroLaunch, 2024a)? This fascinating reaction impacts our lives both directly and indirectly every single day.

To explore how dopamine impacts our lives, we shall delve into how our attention can be affected by dopamine levels, what ‘fake’ and ‘real’ dopamine are, how dopamine affects your cognitive control, and an experiment I conducted to see if a 'human hard reset' is possible.

To begin with, we have to know about the other biological chemicals in our brains. There are five different chemicals at work in the human brain, the two most significant being dopamine and serotonin. These chemicals are remarkably similar, and both have massive impacts on our well-being.

Serotonin affects our moods. A deficiency of serotonin can lead to anxiety, depression and numerous other mental illnesses, from which around one in eight people currently suffer. Dopamine, meanwhile, affects our motivation: when there is a great imbalance of dopamine, we can notice a lack of concentration and reduced pleasure in normally enjoyable activities (Simply Psychology, 2021).

Completing a task is simple: you just need to work and stay focused on the job. However, people’s attention spans have been on the decline in recent years. Researchers measured the attention span of the average person in 2004, 2012 and 2017. In 2004 the average attention span was two and a half minutes, which is a good amount of time, but by 2012 that number had halved to one and a quarter minutes. By 2017 it had fallen further, to 47 seconds (Mark, 2023).
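
To put those figures side by side, here is a small Python sketch that works out the percentage drops. The only assumption is the conversion of the quoted times into seconds (two and a half minutes is 150 seconds, and one and a quarter minutes is 75 seconds); the function name `percent_drop` is made up for this example.

```python
# Average attention spans quoted in the text (Mark, 2023), converted to seconds.
spans = {2004: 150, 2012: 75, 2017: 47}


def percent_drop(earlier, later):
    """Percentage decrease from an earlier value to a later one."""
    return (earlier - later) / earlier * 100


print(round(percent_drop(spans[2004], spans[2012])))  # 50
print(round(percent_drop(spans[2012], spans[2017])))  # 37
print(round(percent_drop(spans[2004], spans[2017])))  # 69
```

On these figures, the average attention span fell by about 69% between 2004 and 2017.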

There are many factors which may be making it harder for us to concentrate, and people have different opinions regarding the cause. In this section of the article, we will explore one possible reason for this significant drop.

Smartphones play a significant role in our everyday lives, and most people who own a smartphone nowadays use some sort of social media, which plays a significant part in distracting us from our tasks. Eight hours of sleep a day is needed for your health. Any time lost from those eight hours is called sleep debt. If you only get seven hours of sleep a day, your sleep debt will start accumulating and your focus level will reduce. What researchers discovered was that people with sleep debt spent more time doing lightweight activities like scrolling endlessly through social media.

When we see content that we enjoy watching, dopamine gets released to make us feel good, but the brain does not stop there. According to Pauline, 'The dopamine which gets released creates a feedback loop which reinforces behaviours leading to positive experiences, encouraging us to continue' (Pauline, 2024). These positive experiences can last for minutes, making us want more.

Here is where the satisfaction of social media differs from that of a football match. Winning a football match is a wonderful achievement which an individual may have worked hard for; however, one is not guaranteed to win the match or even draw it. Social media, though, wins the game more or less every time. When Facebook was first created, all the feeds were in chronological order, making it harder to find what one liked. In 2009 Facebook changed how it displayed its feeds, using a new, improved algorithm to show users more of what they liked, so keeping them hooked on Facebook’s feeds (Social Media Today, 2015). This algorithm has the effect of increasing the amount of dopamine being released because the individual is always seeing what they want to see.

There are two ways in which dopamine is acquired: ‘fake’ dopamine and ‘real’ dopamine. Fake dopamine can be obtained by doing the easiest things, where you do not really have to work for it, like drinking alcohol, taking drugs, playing video games and watching inappropriate content. These activities are simple and easy to do; they do not require much effort and are right at our fingertips. Matson asserts that '[fake] dopamine is ruining people’s potential and creating lives filled with regret instead of a life of passion and purpose' (Matson, 2022). Real dopamine, however, is achieved through hard work, determination and even setting goals for the future. It requires us to think and stimulates our brain, keeping us mentally active.

The first time I heard about ‘fake’ and ‘real’ dopamine, I assumed that the only difference must be the way that you obtain it. But there is actually a noteworthy difference. There are two places where dopamine is received in the brain: the dopamine desire circuit (where ‘fake’ dopamine is received) and the dopamine control circuit (where ‘real’ dopamine is received) (Matson, 2022). After acquiring ‘fake’ dopamine from, let us say, eating chocolate, your dopamine control circuit is slightly deflated. There is nothing to be concerned about here. The only thing that happens is that your brain starts to want more of this easily achievable dopamine, which anybody can get. This explains why we lack motivation after receiving ‘fake’ dopamine: our brains want more of the ‘fake’ dopamine, which is easy to access.

The term ‘cognitive’ refers to our feelings, emotions and thoughts. NeuroLaunch states that if we obtain too much ‘fake’ dopamine it has the potential to impair our cognitive state; different sources of dopamine will influence our behaviours when setting and achieving goals. If our satisfaction is based on ‘fake’ dopamine, we will think along those lines. So, if an individual plays many video games, their way of thinking will be somewhat similar to the games they play. Getting rid of ‘fake’ dopamine intake altogether is impossible, and most likely would not be good for one’s mental health, but reducing it in certain cases is better for the individual’s mental health, just like giving ourselves a screen time limit and eating healthily.

We are now going to look further into how reducing ‘fake’ dopamine has the potential to improve our lifestyles, which I have done myself to try to improve my way of living.

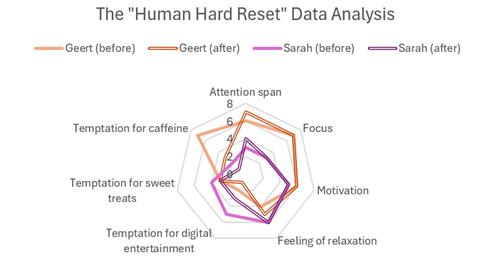

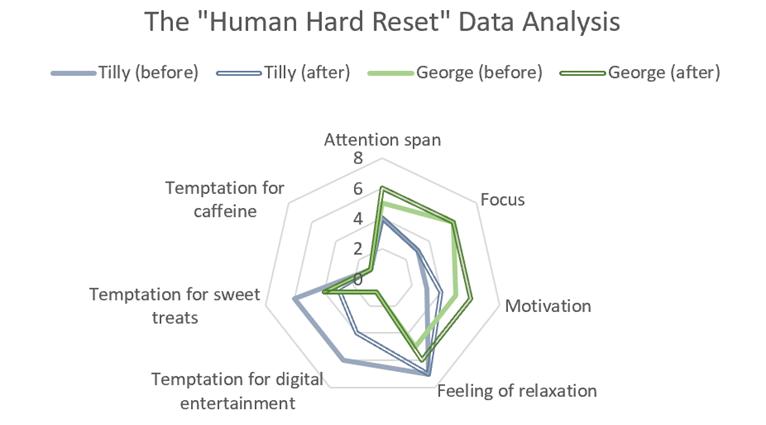

I conducted an experiment at home seeing whether, if we as a family cut back on our ‘fake’ dopamine, it would improve our motivation, cognitive control and attention.

The rules could not be more straightforward:

1. No caffeine/alcohol

2. Only healthy food

3. No digital entertainment

4. 30 minutes meditation a day.

This experiment was conducted on my family and myself and lasted for one week. Before the rules came into play, we had one week of normality in which we measured our motivation levels and attention to tasks. This was measured by asking a few questions each morning and evening, both before and after the rules were in play.

Before the experiment began, I made my predictions, which were that, on average, our attention spans would increase, the temptation for digital entertainment would decrease and the urge for caffeine would significantly decrease for the people who drink coffee.

For the first few days I must admit that it was a little bit unusual but was not difficult. As it progressed, it became more normal and incorporated into my lifestyle.

Many companies and universities have studied whether meditation can actually improve our attention spans, and in our experiment it was time to see if it actually proves to be so. The Association for Psychological Science declared that, after a long period of time, they noticed in their experiments that the task performance improved in their subjects. This was not the only source. Kurtzman (2019) in her article showed that a 'digital meditation program significantly improved attention and memory in healthy young adults' within six weeks.

Some of the results from the experiment were that:

1. There was no longer a craving for sweet treats and caffeine

2. Afterwards everyone felt more relaxed than before

3. Some people felt more focused and motivated after the experiment.

These are the intriguing results from the experiment showing that doing a little can change a lot.

Personally, I believe that the most interesting result was that the subjects no longer needed caffeine after the experiment. Caffeine is a chemical which stimulates the brain and metabolism, making you more alert. At the beginning of the experiment, the people who drank caffeine every day became mildly tired more quickly than before, but as the experiment progressed the tables turned. For a few weeks after finishing, my father didn’t drink any caffeine because he just didn’t need it anymore.

The reality is that dopamine is a very important chemical which is vital for the survival of humankind. Depending on the way you receive your dopamine, whether being ‘fake’ or ‘real’, there are potential side effects mentally and physically.

References:

Please see Appendix on page 62.

Images: Bennett, T (2018) iPhone X beside MacBook. Available at: https://unsplash.com/photos/iphone-x-beside-macbook-OwvRB-M3GwE

Editor: Mr Elkin-Jones

It is as universal as it is complex: pain is one of the most profound feelings, and it is often felt beyond words. Although universal and affecting millions worldwide, it is unique to each person: it can vary from the sting of a paper cut to the debilitating agony of chronic illness. Yet despite being universal, the phenomenon of pain has been debated intensely, dividing philosophers, neuroscientists and medical practitioners alike. Could pain be just a trick of the mind? Or is it an important biological response with a critical evolutionary purpose? Either way, it plays a critical role in human life, alerting an individual to possible danger. This essay will present a description of the unavoidable experience of pain and arguments for and against it as an illusion. Concepts from biology and psychology will highlight the complexity of pain and its significance in the human experience.

To begin this discussion on the paradox of pain, it is essential to understand the physiological mechanisms that construct this sensation. Beneath the smooth unblemished surface of our skin, which appears simple to the naked eye, is a complex web of interconnected roots and channels that holds a network of nociceptors. These nociceptors can be activated by a variety of stimuli: mechanical (a knife cut), thermal (a kettle burn) and chemical (acid). They then send signals through the peripheral nervous system to the spinal cord using nerve fibres. This produces the ‘first pain’: the quick reflex that draws you away from the danger. While this occurs, the signals are ultimately passed to the brain. Here, the signals are processed and interpreted, resulting in the perception of pain, known as ‘second pain’. These pain pathways and their intricate network of signals can vary in effect, which helps to explain differences in sensitivity, response and pain threshold between individuals (Murphy, J. 2023).

Neuroscientists have characterised pain into two categories: acute pain (which serves as protection) and chronic pain (which is more complex and not yet fully understood). Chronic pain differs from acute pain in that it persists after an injury has healed. This may change aspects of your central nervous system that influence your tolerance to pain sensations. These ramifications highlight that, while the experience of pain is real to many and necessary in everyone’s lives, it consists of layers that cannot be solely influenced by biological factors.

The sensation of pain is not entirely determined by biological processes. Psychological factors have been shown to play a significant role as well. This is demonstrated through the mind-body connection, which illustrates how someone’s emotional state can impact their experience of pain. For instance, individuals suffering from anxiety or depression often report heightened pain sensitivity, suggesting that psychological well-being is intricately linked to pain perception.

Lorimer Moseley, a clinical and research physiotherapist and Professor of Clinical Neurosciences at the University of South Australia, has dedicated the majority of his research to the brain's role in chronic pain. His theory posits that pain perception is mediated by cognitive and emotional factors, which serve as a lens through which the illusory aspect of pain can be examined. According to his perspective, pain is influenced by an individual’s expectations and past experiences. For example, a person may feel a greater level of pain when anticipating a negative incident (Psychoneuro 2016).

To illustrate: imagine you are in a park enjoying a sunny day; you trip and accidentally graze your knee on the rough grass. Your body's somatic sensory receptors send signals through nerve fibres to your spinal cord and then to your brain, indicating that something has happened to your knee. Normally, this would just result in a mild sensation of discomfort.

Now, let us change the context: Earlier that week, you had a bad fall while playing football, and you remember feeling intense pain in your knee. This memory is recent and still current in your mind. When grazing your knee this time, the sensation is amplified because your brain immediately remembers your previous injury. The nociceptors in your knee heighten your perception of pain, sending messages to your brain. The negative past experience has caused your brain to emphasise the potential threat.

Even though the impact from the graze is minor and does not cause any real injury, your brain interprets the experience through the lens of your past pain. Therefore, instead of feeling a simple discomfort, you experience sharp pain in your knee. This situation showcases how your brain's memory and context can influence your pain perception. It suggests that pain is often more about what your brain believes is happening than the actual state of your body (Psychoneuro, 2016). This theory suggests that the context and cognitive framing of pain can alter one’s perception, raising questions about the extent to which pain is 'real' versus constructed by our minds.

One of the most compelling pieces of evidence for the proposition that pain perception may be altered by psychological processes is the placebo effect. The placebo effect refers to the phenomenon where individuals experience physical improvements after receiving a treatment that has no therapeutic value, solely because they believe it might work. Research has shown that placebos can decrease pain levels across diverse conditions, from headaches to chronic pain syndromes.

The mechanisms behind the placebo effect involve complex neurobiological responses. Many clinical trials have shown that placebos can evoke genuine physiological responses that reduce pain, further suggesting that the experience of pain can be shaped by one’s expectations and beliefs. These responses include the release of endorphins and changes in the brain activity patterns related to pain perception. This exemplifies the idea that psychological factors intertwine with physiological sensations, showing that pain can be shaped by cognitive processes even when the underlying injury remains unchanged (LeWine, H. 2017).

Distraction is another key factor that can influence an individual’s perception of pain. This provides further evidence that pain may not always align with physical reality. Various forms of distraction can change pain, be they cognitive, visual or auditory, and clinical trials have shown that distraction can reduce the feeling of pain. Techniques such as going on a walk, listening to music or doing puzzles shift focus away from pain sensations and decrease their intensity.

The Gate Control Theory, proposed by Ronald Melzack and Patrick Wall in 1965, offers a perfect example of how distraction influences pain. It gives a nuanced understanding of how pain is perceived in the body (Physiopedia, 2024). The theory suggests that the spinal cord holds a 'gate' that can either allow pain signals through or block them from reaching the brain. Two distinct types of nerve fibre play a role: C fibres, which send pain signals, and A-beta fibres, which carry non-painful sensory information. When a painful stimulus occurs, C fibres are activated and open the gate, allowing pain signals to be processed. However, the simultaneous stimulation of A-beta fibres can close the gate, which limits the feeling of pain (Wendt, T. 2022).
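
The idea of the gate can be pictured with a deliberately simple toy model in Python. This is not a physiological model and is not taken from Melzack and Wall: the numbers are made-up units, and the function name and the `gate_strength` parameter are hypothetical, chosen only to show the direction of the effect.

```python
def pain_signal_reaching_brain(c_fibre_input, a_beta_input, gate_strength=0.5):
    """Toy gate: A-beta activity partly blocks the C-fibre pain signal.

    All inputs are in made-up units, and gate_strength is a hypothetical
    parameter for illustration, not a measured quantity.
    """
    blocked = gate_strength * a_beta_input
    # The signal that gets through can never be negative.
    return max(0.0, c_fibre_input - blocked)


# The same painful stimulus, without and with touch input (such as rubbing).
pain_alone = pain_signal_reaching_brain(c_fibre_input=10, a_beta_input=0)
pain_with_touch = pain_signal_reaching_brain(c_fibre_input=10, a_beta_input=8)
print(pain_alone, pain_with_touch)
```

In this sketch, the same painful input produces a smaller signal at the brain when A-beta fibres are active at the same time, which is the behaviour the theory describes.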

The 'Proof' of Pain in Action: an Albanian Anecdote

Last summer, my friend Sarah and I went hiking in Albania. About halfway up the trail, she tripped over a rock and fell, scraping her knee painfully. As expected, she winced and clutched her knee. Then something surprising happened. Instead of standing there in pain, she laughed it off and at once started to massage her knee while joking about her clumsiness.

To our astonishment, within moments, she was back on her feet and ready to continue the hike. I asked how she was feeling, bracing for a complaint about pain. 'Surprisingly good!' she said. 'I guess rubbing it helped!'

Reflecting on that moment, I realised it was a perfect illustration of Gate Control Theory in action. By rubbing her knee, Sarah activated the larger nerve fibres that blocked some of the pain signals from reaching her brain. This not only distracted her from the initial discomfort but also empowered her to move past the minor injury.

Other psychological factors such as attention and emotional state can also affect this gating mechanism. Sarah’s experience illustrates that pain is a complex experience shaped by both physiological and psychological components.

The current crisis of opioid use gives these discussions of pain management a critical urgency. Opioid drugs were the most prescribed dependency-forming medications in England in 2021/22, with 39.6 million items at a cost of £307 million (Stanford Health Care 2021). With the rise in opioid prescriptions to manage both acute and chronic pain, concerns have developed regarding over-reliance on pharmaceutical interventions. This is increasingly alarming as addiction and other negative outcomes become more common. This crisis emphasises the importance of a strong understanding of pain that goes further than masking symptoms with drugs.

Exploring alternative pain management strategies is essential to combat this global issue. Considering the evidence that pain can be influenced by psychological and cognitive factors, non-pharmaceutical approaches (including cognitive-behavioural therapy, mindfulness and lifestyle modifications) offer promising channels for reducing pain while minimising the risks associated with opioids. Researchers have also created tools that align with this pain management theory. For example, Buzzy, developed by Pain Care Labs, is a tool that uses a dual approach of high-frequency vibration and cold therapy to apply the gate control theory (Pain Relief Research. 2023). It works by stimulating the large nerve fibres into ‘closing the gate’. This product not only offers a safe, effective method of pain relief but also reduces the need for opioids. Pain Care Labs is conducting trials to assess Buzzy's effectiveness in managing various forms of pain, including low back pain, chronic pain and post-surgical recovery. The main focus of these studies is how Buzzy can enhance patient comfort during needle procedures, such as injections or blood draws. Developing technology, along with research into the Gate Control Theory, can open new doors into drug-free pain management for the future. Furthermore, acknowledging that pain is not merely a physical sensation but a complex interplay of factors allows healthcare professionals to develop more comprehensive treatment plans that address both the physiological and psychological background of pain.

In conclusion, the search for an understanding of pain as an illusion uncovers a multi-dimensional phenomenon that goes beyond a simple biological explanation. Psychological factors and cognitive processes, as well as the context of the experience itself, hugely affect one's perception of pain. They do not, however, deny its existence or value. Pain plays a critical role in human life, alerting an individual to possible danger and instigating action for self-protection. Continuing research will uncover more of the intricacies of pain perception, making it increasingly evident that a complete understanding of pain must consider both its biological and psychological dimensions. It is becoming urgent to build management strategies that address pain in its entirety.

References: Please see Appendix on page 62.

stability. Overall, forming NATO was a big promise to stick together for security. It showed a belief that peace could be maintained when countries unite and support each other. With a formal agreement, the member states pledged to help each other in times of trouble (Apps, P, 2024). They made it clear that they wouldn’t leave any country to face threats on their own.

What is NATO's purpose in the modern era?

Collective defence is one of NATO’s top priorities. It means that if one member country is attacked, the attack is treated as an attack on all member countries. This idea comes from Article 5 of the NATO treaty (NATO Online, 2019). It makes potential attackers think twice. Even though the Soviet Union is no longer a threat, we face new challenges such as cyber-attacks, terrorism and different types of warfare. NATO has changed how it deals with these new issues to keep everyone safe.

Firstly, we look at crisis management. NATO doesn’t just sit back when things go wrong. It steps in to help during wars or urgent situations. For example, NATO has supported areas like the Balkans and Afghanistan. The goal is to help fix conflict zones and restore normality. By doing this, NATO shows its commitment to maintaining order and preventing problems from spreading. Cooperative security is also important.

NATO also works with non-member countries, such as South Korea and Australia (Haglund, D.G. 2019). They join in on drills and share key information to stay safe. It’s not just about protecting NATO countries’ borders; it’s about stopping problems before they start. By working with nations in the Middle East and North Africa, NATO can tackle challenges that cross borders. This helps make the world safer for everyone.

Cyber defence is another key focus for NATO. This issue is huge in our modern world. With so much happening online, protecting against cyber threats is essential. Cyber-attacks can harm economies and major systems. NATO has started to take this seriously. They’ve created plans and set up a Cyber Defence Centre to help member countries find and respond to cyber-attacks. They also do regular training to prepare everyone for potential threats.

Counter-terrorism is another large focus for NATO. Terrorism is still a real danger (GOV.UK, 2024). NATO is dedicated to fighting it. They work on sharing information, tightening border security, and training forces to respond. By addressing the roots of terrorism, NATO aims to keep its members safe and support the global fight against this ongoing issue. Human security is also gaining importance for NATO. This idea focuses on people’s rights, equality, and protecting civilians in conflict zones. NATO wants to make sure its actions reflect these values. By prioritising human security, they hope to create lasting peace and stability in troubled areas.

Finally, NATO knows it needs to keep up with the times. The world is always changing, and so are the threats we face. To stay effective, NATO is constantly updating its strategies. This includes investing in new technologies and ensuring that member armed forces can work together. By staying current, NATO keeps its position as a key player in global security.

Is NATO still as important as it was 50 years ago?

Fifty years ago, in 1974, NATO was deeply involved in the Cold War. This was a time of strong tension between the United States and the Soviet Union. NATO’s main job was to stop Soviet attacks in Europe. The threat was real, and countries built up their military forces to prepare for any possible aggression.

After the Cold War ended and the Soviet Union collapsed in 1991, many wondered if NATO was still needed. Without a clear enemy, the alliance struggled to find its purpose. But NATO quickly shifted its focus. It started working more on crisis management and cooperative security. NATO got involved in conflicts in the Balkans, Afghanistan, and Libya. This showed that NATO could handle a variety of issues and was not just focused on country-to-country conflicts.

Today, we can see NATO differently. One key reason for its continued relevance is the rise of Russia. Russia’s actions, like taking Crimea in 2014 and invading Ukraine, have raised alarms about security in Europe. These actions make NATO important again as a way to deter Russian aggression, much like it did decades ago. The expansion of NATO to include Eastern European countries shows its commitment to collective defence.

Another important role for NATO now is dealing with non-traditional threats. Problems like cyber warfare, terrorism, and hybrid threats are more common today (GOV.UK. 2024). NATO has worked hard to develop strategies to tackle these issues. For example, they set up the Cyber Defence Centre of Excellence and are actively fighting terror globally. This shows NATO’s commitment to facing today’s security problems. NATO is also all about teamwork. It partners with countries outside the alliance and other organisations to keep the world safer. This shows that security isn’t just a national issue; it’s something we need to work on together. By cooperating with countries in the Middle East, North Africa, and Asia, NATO is helping to build a more stable environment worldwide. However, NATO has some challenges it needs to address.

There are disagreements among member countries, especially regarding how to share defence costs. Some European allies are under pressure to boost their military spending. Additionally, rising isolationist views and nationalism in some member states (notably the USA) threaten the core idea of collective defence. Despite these hurdles, NATO remains a key player in global security. It shows the ability to adapt and tackle new threats. Its focus on teamwork and the commitment to keeping peace is why NATO is still relevant today.

Conclusion

NATO is increasingly important today. It has been around for a long time and has changed with the shifting geopolitical climate. Russia’s resurgence shows how crucial NATO is for keeping countries safe. However, every member has its own internal problems to deal with, and this can lead to disputes over sharing costs and to differing priorities. Despite this, members have demonstrated that they can come together to tackle security issues and manage crises. As long as there are threats to democracy, NATO will keep working to keep world power in balance. Its job is to help keep peace and show that its members stand united. This mission matters just as much today as it did fifty years ago. Plus, NATO is all about protecting democratic values and human rights. This commitment makes it a key part of the global safety plan. In a world that keeps changing and getting more complicated, NATO’s role becomes even more necessary. It plays a big part in keeping things stable for everyone. In conclusion, NATO isn’t just a relic of the past. It is here to stay and will keep adapting to face whatever comes next.

References:

Apps, P (2024) Deterring Armageddon: A Biography of NATO. Wildfire.

GOV.UK (2024) Defending Britain from a more dangerous world. [online] Available at: https://www.gov.uk/government/speeches/defending-britain-from-a-moredangerous-world.

Haglund, D G (2019) NATO | Founders, Members, & History. In: Encyclopædia Britannica [online] Available at: https://www.britannica.com/topic/North-Atlantic-TreatyOrganization.

Minogue, K R (2000) Politics : a very short introduction. Oxford ; New York: Oxford University Press.

NATO (2018) Nato. [online] NATO. Available at: https://www.nato.int/.

Ukraine War | Latest News & Updates | BBC News (2019). World News - BBC News. [online] BBC News. Available at: https://www.bbcnews.com/world/Ukraine.

Images:

Eignatik17 (2021) Map. Available at: https://pixabay.com/photos/map-voyagecartography-travel-6513914/

Pexels (2017) Cargo plane. Available at: https://pixabay.com/photos/air-force-cargoplane-aircraft-2178863/

Tumisi (2018) Cyber security hacker. Available at: https://pixabay.com/photos/cybersecurity-hacker-security-3194286/

Every Christmas, families across the United Kingdom take time out of busy Christmas schedules to enjoy a theatrical experience that comes around once a year: pantomime. A stage show full of brilliant tunes, audience participation, a touch of slapstick, elaborate costumes and sets, and a script filled with hilarious jokes and satirical comments.

From where does this tradition originate? How far back does it date? Are the pantomimes that we see today a continuation of the tradition that was intended? This essay will endeavour to answer these questions.

Going back to between the sixteenth and eighteenth centuries, we can trace the beginnings of what we know today as pantomime, then known in Italian theatre as Commedia dell’arte. Alongside Theatre Haus (2021), Broadbent (1901) states that it is famed for its four different types of masked characters. There is first the Zanni, perhaps the most iconic, essentially the clown of the show. Most recognised is the Arlecchino (or Harlequin), known for wearing a fool’s cap, a diamond-patterned costume and for having a mischievous nature. Next, the Vecchi – a miserable old man, greedy and possessive of money, property, and women. Then the Innamorati – a pair of young, upper-class lovers, naïve and with much to learn about the world. Finally, Il Capitano. This character was arrogant and self-obsessed, often bragging about military skill and expertise, and taking any opportunity to prove it.

Nicoll (1987) asserts that Commedia dell’arte was originally performed outdoors on a platform in the Italian Piazza (Town Square) before travelling across Europe, sometimes reaching as far as Moscow.

Modern-day pantomime can be traced back even further than Commedia dell’arte, to the Atellan Farce, a genre originating in the Roman era and characterised by the disastrous, the disorganised and the absurd.

a society change over time in the same way that pantomime itself has changed.

Mayer (2003) tells us that by the early eighteen-hundreds, the performance – now called pantomime because it was an ‘exaggerated mime’ containing no dialogue due to theatre licensing issues – relied upon stories mainly adapted from European fairy tales, just as they are today. The titles were generally dual named, most commonly with 'The Harlequin' being the first name since the Harlequin was the most important character. The second name would have been the name of the other principal character. This would give the audience an idea of what to expect from the show.

As the eighteen-hundreds progressed, children began to go to the theatre a lot more during Christmas and other holidays, a tradition that continues today. They loved to watch the madness of the ‘Harlequinade’ (a part of the pantomime that included spectacular special effects, a chase, and a lot of slapstick comedy) unravel. The main premise was that the Harlequin and his lover were running away from the lover’s father, whose pursuit was slowed down by his servant clown and a 'bumbling policeman'.

In 1837, towards the end of the ‘Harlequinade’, pantomime faced a decline in popularity but was still fighting to stay alive. After 1843, when licensing changed to allow theatres to perform plays with dialogue, the importance of the silent ‘Harlequinade’ began to decrease. As is stated by an artefact in the Victoria & Albert Museum, the importance of the fairy tale element of the pantomime increased thanks to two writers, James Planché and Henry James Byron. Their use of puns and humorous word play has become a convention of modern-day pantomime. In the late eighteen-seventies, Augustus Harris, manager of Drury Lane, produced and co-wrote a series of extremely popular pantomimes that focused mainly on the spectacle of the show, rather than anything else.

By the end of the nineteenth century the ‘Harlequinade’ became just an epilogue to the pantomime involving a display of dancing and acrobatics. It lingered for a few decades but eventually fizzled out from the repertoire of pantomime. The last ‘Harlequinade’ was performed at the Lyceum Theatre in 1939.

Moving to the present day, the pantomime tradition is thriving. Between November 2024 and January 2025, the Oxford Playhouse staged Sleeping Beauty and I was

Lottie Graves, Third Form, Gascoigne House

Scholar Supervisor: Ellie Lunn, Fifth Form, Swift House

Editor: Miss Hutchinson

Imagine walking into a room full of strangers and seamlessly blending in, not just in appearance, but with your voice, your accent, the very essence of your speech, as it subtly shifts to mirror those around you. There are people who can do so, or at least films and books would have us believe so. This raises questions about which factors play into this change. These may range from the purely linguistic (the way the words leave your mouth) right down to the psychological elements of social dynamics that result from transitioning between communities. Is there a pattern to this transformation or is it unique to each individual? Consider your accent as an elastic band – as your environment changes, this band stretches and changes too. This also suggests the possibility of an accent stretching so far that it ‘deforms’ and never returns to its original shape, or perhaps even snaps in the way an elastic band may. By comparing circumstances in which an accent change may occur, and considering the multiple aspects that may cause it, we hope to uncover the forces that mould our accents to be more like those of the people around us. Can our accents really be as active as an elastic band?

How is an accent first formed?

In order to understand how an accent may change, it is important to know how this accent came about in the first place. The process of an accent developing initially starts almost as soon as a child leaves the womb. We are immediately exposed to the speech of many different people, each with a slightly different accent and, as we learn to speak, an accent will develop as well. Babies are soon able to differentiate between various inflections and intonations in their parents’ or caretakers’ voices. For example, they may laugh when their father says something that they think sounds funny or cry when their mother raises her voice because, in order to comprehend what is going on around them, they are focused in on every little thing, from the shape of your mouth when you speak to the rising and falling of your voice (The Speech and Language Department in collaboration with the Child and Family Information Group, 2016). As an expert in early childhood learning, Patricia Kuhl (2015) stated that an infant can perceive the full set of 800 or so sounds at birth.

These sounds, or phonemes, can come together to make any word from any language. The brain of a young child is constantly working on articulating and distinguishing all these different sounds at first, but as they become accustomed to the specific phonetic habits of their native language, their brains start to favour those sounds. This means that, over time, they will lose the ease with which they translate sounds that are not part of the linguistic environment they are exposed to. For instance, a child growing up learning to speak English in the UK will become more familiar and comfortable with the specific cadences of that dialect and modulate an accent accordingly. As a result, this child might not be able to pronounce a harsher ‘ar’ sound in the same way a child living in the USA would, simply because their brain has decided that information is not necessary for communication. Consequently, they will not acquire the specific set of motor patterns for that accent. This often makes it difficult to transfer to a new accent, although linguistics and phonetics specialist Dr. Nicole Whitworth (2021) makes it clear that it is not impossible.

Once infants reach around six months old, the sounds they make are much more purposeful and controlled. They might even begin saying things that we think we recognise, or that sound similar to the words being said to them or around them, such as ‘ma’ or ‘ba’. If the child likes how this sounds, they will repeat it. This stage is often referred to as ‘babbling’, or what you may know as ‘babytalk’, and is part of the process called language acquisition (Crystal, D. 2010). Despite it starting at such a young age, children do not complete the language acquisition stage until they are around six years old (Cambell, R and Wales, R. 2010). During this period, the immediate social environment the child is living in is of even more importance. The more the infant is exposed to a certain type of speech, the more they will imitate it. This is also why, even within small geographical areas, accents can be very different, with local communities developing their own distinctive linguistic features and speaking habits.

Nature versus Nurture

When considering how an accent might adapt according to the person’s environment, it is important to regard the influences of both nature and nurture during this transformation. Essentially, nature refers to the inherited, hereditary influences on an accent's adaptation, whereas nurture encompasses environmental and social causes: our upbringing, surrounding culture or relationships (Cherry, K. 2022).

The influence of genetic predispositions on the reformation of an accent generally comes from the individual’s anatomy. Certain phonetic capabilities are inborn, determined by physiological attributes such as the shape and size of the vocal cords, mouth, and nasal passages (Dengler, R. 2019). In this biological predisposition lies the very foundation of how a sound is articulated and perceived. For example, some may find that imitating different accents comes more naturally due to their heightened auditory discrimination ability, the majority of which is determined by genetics. Furthermore, up to 60% of personality traits, such as an openness to new experiences or social adaptability, are inherited genetically and may lead to an accent change (National Library of Medicine, 2022). Individuals with such traits can easily turn to a new accent or change their pronunciation in order to match the standards of speech in the new society that they are living in. This can be illustrated by the fact that children from multilingual families can often easily change their accent depending on the social situation they find themselves in throughout their lives.

Throughout their earlier years, children are more likely to pick up new accents if they are exposed to them frequently, partly because they are still in their language acquisition stage and thus have a more malleable accent. With continued exposure to other speech patterns, the pronunciations children use may still be shaped and reshaped as they grow. Nevertheless, the degree to which an accent is able to change ultimately diminishes with age. Though it can be debated whether this falls under the nurture aspect of this argument, as it is predominantly due to the stage of talking they are in, it is probably more accurate to categorise it as nature.

On the other hand, the evolution of an accent is greatly influenced by environmental causes and social interactions. To begin with, there are many psychological factors that affect a person’s attitude and willingness towards changing their accent. Primarily, this would be a desire to belong or to impress someone in the new community, especially if they have received unwanted comments regarding their previous accent or it is associated with a negative stereotype. To be excluded from a society, or to feel as if that is the case due to your accent, is difficult, and the likelihood

receptive to new sounds. As one grows older, the ability to adapt the accent diminishes but is never fully eradicated. And it is through social factors – fitting in or avoiding possibly negative stereotypes, for example – that the reasons behind why and how our accents change really become more apparent.

This, therefore, places the elasticity of any particular person's accent in his or her hands, as the product of a compendium of variables spanning nature and nurture. That intricate yet delicious recipe of nature, nurture and all the other complexities that make us who we are today ensures our accents will forever offer up the beauty, richness, and diversity within human communication.

References:

Bard, S (no date) Linguists Hear an Accent Begin, Scientific American. Available at: https://www.scientificamerican.com/podcast/episode/linguists-hear-an-accent-begin/ Accessed: 1 September 2024

Bryson, B (1991) Mother tongue. Penguin Books.