BRUNO MARSINO

COMPUTATIONAL DESIGNER SELECTED

A detail-oriented and performance-driven Computational Designer with over 5 years of experience creating immersive experiences, from physical artifacts to AI workflows and XR tools. Expert in Spatial Computing, XR Development, and Real-Time 3D Visualization, with proficiency in Generative AI Pipelines. Skilled in managing cross-functional projects and delivering high-quality, innovative solutions in immersive design and interactive technologies.

Judson Studios, Los Angeles, CA - Coordinator of Design and Innovation

November 2023 - October 2024

● Headed Judson Studios' Research and Development department, turning conventional design processes into streamlined AI products aligned with the studio's product vision.

● Collaborated directly with cross-functional partners across production and marketing, streamlining workflows for quicker project turnaround.

November 2022 – October 2023

● Developed custom AI workflows for residential design using LoRA models, accelerating the design iteration process and improving efficiency for the studio’s designers.

● Created XR workflows for immersive visualizations across web, mobile, and XR devices, integrating audio, visuals, and smell (via Arduino) to enhance client engagement and presentations.

● Built a new website using HTML, CSS, JavaScript, and Three.js, delivering a dynamic platform for showcasing interactive 3D prototypes, increasing client interaction and satisfaction.

SCI-Arc, Los Angeles, CA – VR/AR Developer & Instructor

May 2023 – September 2023

● Developed VR/AR applications for mobile and Microsoft HoloLens using Unity and C# for architectural visualization and analog fabrication workflows.

● Worked with Soomeen Hahm on immersive design projects, integrating real-time rendering and digital twin technology to improve accuracy between digital models and physical fabrication.

● Led a seminar under Maxi Spina, teaching AR development with Meta Quest, emphasizing the use of immersive technologies in architectural and product design.

Pedro Silva Studio, Santiago, Chile - Product Designer

April 2021 - October 2022

● Led the design and execution of product prototypes, utilizing VR and LiDAR for immersive, spatially accurate visualizations, reducing the product design cycle by 10%.

● Collaborated with cross-functional partners (graphic designers, architects, engineers, and artists) to conceptualize and deliver user-centered product designs, integrating client feedback and emerging market trends.

HONORS AND AWARDS

● Exhibitor at the 18th Venice Biennale, CityXVenice Virtual Pavilion

● Winner – “A Design for Marbella” Competition, Valparaíso, Chile

● Finalist – Madera 21 Sustainable Design, Santiago, Chile

Los Angeles, CA (213) 492-1513 brunomarsino@gmail.com

LinkedIn: @brunomarsino Portfolio: Link

SKILLS

Unity

Unreal Engine

Blender

Rhinoceros

Fusion 360

VR/AR Development

Spatial Computing

ARKit / ARCore

Digital Twin Technology

Real-Time Rendering

Microsoft HoloLens

Meta Quest

Arduino Integration

ComfyUI AI

Automatic1111 AI

Machine Vision (Python)

Houdini

Python

HTML

C#

CSS

JavaScript

EDUCATION

Master of Science in Architectural Technologies

SCI-Arc

Los Angeles, CA

Master in Design Sciences

Adolfo Ibáñez University

Santiago, Chile

Bachelor's in Design

Adolfo Ibáñez University

Santiago, Chile

LANGUAGES

English: Fluent

Spanish: Native

Participated as a speaker and attendee at conferences and meetups, including the Academic Congress of Design in Peru and Design Fair UAI in Chile.

Co-organized DesignLab events and lectures for students in Chile, with over 65 attendees at each event. The goal: to increase average student attendance by 50%.

Developed my teaching practice as a teaching assistant for more than 70 students across 5 courses at both SCI-Arc and UAI.

Represented both an educational institution and design teams at award ceremonies and design events.

SKETCH. is a platform that compiles site-specific insights with text-to-image models, allowing users to input their own aesthetics throughout the massing optimization process.

The platform accelerates massing decisions in the concept and schematic design phases through the integration of topographical, climate, performance, and surface articulation AI models into Rhino and Blender.

1. type your address

2. edit your massing

3. prompt

4. check your results

5. export your favorite AI sketch

project video

Simplified back-end of how SKETCH works: from the address input, through a massing generator built on custom algorithms, to a variety of generative AI volumes and textures.

Using a convolutional solver, the model generates a series of outcomes based on slope steepness, contextual elements, and sunlight exposure.
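This section does not describe how SKETCH's convolutional solver is built. The sketch below illustrates one of the inputs it names, slope steepness. It runs 3x3 central-difference kernels over a site heightmap to get a slope angle for each cell. The heightmap-as-grid representation, `cell_size`, and all function names are assumptions. The sketch does not model contextual elements or sunlight.

```python
import math
from typing import List, Optional

# Central-difference kernels for the x (column) and y (row) height gradients
KX = [[0.0, 0.0, 0.0], [-0.5, 0.0, 0.5], [0.0, 0.0, 0.0]]
KY = [[0.0, -0.5, 0.0], [0.0, 0.0, 0.0], [0.0, 0.5, 0.0]]


def apply_kernel(grid: List[List[float]], r: int, c: int, kernel: List[List[float]]) -> float:
    """Weighted sum of the 3x3 neighbourhood around interior cell (r, c).

    This is cross-correlation; true convolution would flip the kernel, which only
    changes the gradient's sign and not the slope magnitude computed below.
    """
    return sum(
        kernel[i][j] * grid[r + i - 1][c + j - 1]
        for i in range(3)
        for j in range(3)
    )


def slope_degrees(heights: List[List[float]], cell_size: float = 1.0) -> List[List[Optional[float]]]:
    """Slope angle in degrees for every interior cell; border cells stay None."""
    rows, cols = len(heights), len(heights[0])
    out: List[List[Optional[float]]] = [[None] * cols for _ in range(rows)]
    for r in range(1, rows - 1):
        for c in range(1, cols - 1):
            dzdx = apply_kernel(heights, r, c, KX) / cell_size
            dzdy = apply_kernel(heights, r, c, KY) / cell_size
            out[r][c] = math.degrees(math.atan(math.hypot(dzdx, dzdy)))
    return out


# A ramp rising one unit per cell along x should read as a 45-degree slope
ramp = [[float(c) for c in range(4)] for _ in range(4)]
print(slope_degrees(ramp)[1][1])
```

A real solver would likely use an array library and handle borders. Plain lists keep the kernel arithmetic visible.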

early prototypes

With the release of early AI text-to-video and NeRF models, a 3D workflow that merged the two was developed.

Custom buttons and prompt bar for the interface.

75% - 100% sun exposure = opaque materials = walls

25% - 74% sun exposure = translucent materials = windows + walls

0% - 24% sun exposure = transparent materials = windows

The chosen result is divided into 3 categories according to each face's percentage of sun exposure over a year.

The value of each face tells the generative model whether windows or walls are required, depending on whether the sun exposure needs to be reduced (covered) or increased.
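The function below maps a face's annual sun exposure to the material and element categories from the legend. It is a sketch, not SKETCH's implementation. The legend lists integer bands (0-24, 25-74, 75-100). Treating 25 and 75 as the lower bounds for fractional values is an assumption. `build_prompt_hints` and its text format are hypothetical, since the document does not describe how SKETCH passes face values to its generative model.

```python
from typing import Dict, List, NamedTuple


class FaceRule(NamedTuple):
    material: str
    element: str


def classify_face(sun_exposure_pct: float) -> FaceRule:
    """Map one face's annual sun exposure (0-100 %) to the legend's material and element."""
    if not 0.0 <= sun_exposure_pct <= 100.0:
        raise ValueError("sun exposure must be between 0 and 100 percent")
    if sun_exposure_pct >= 75.0:
        # Highest exposure: the face is covered to block the sun
        return FaceRule("opaque", "walls")
    if sun_exposure_pct >= 25.0:
        # Intermediate exposure: a mix of openings and solid surface
        return FaceRule("translucent", "windows + walls")
    # Lowest exposure: the face is opened up to let more sun in
    return FaceRule("transparent", "windows")


def build_prompt_hints(face_exposures: Dict[str, float]) -> List[str]:
    """Hypothetical: turn per-face exposures into text hints for a text-to-image model."""
    hints = []
    for face_id, pct in face_exposures.items():
        rule = classify_face(pct)
        hints.append(f"face {face_id}: {rule.material} materials, {rule.element}")
    return hints


print(build_prompt_hints({"north": 12.0, "south": 88.5}))
# ['face north: transparent materials, windows', 'face south: opaque materials, walls']
```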

key steps

1. market research

Developed a program with architects for active feedback on the early stages of the platform.

2. proof of concept

Developed a digital architectural project based on one of the AI outputs as a proof of concept.

3. beta version

Launched a public beta hosted on GitHub for UI testing.

project video

Beta platform

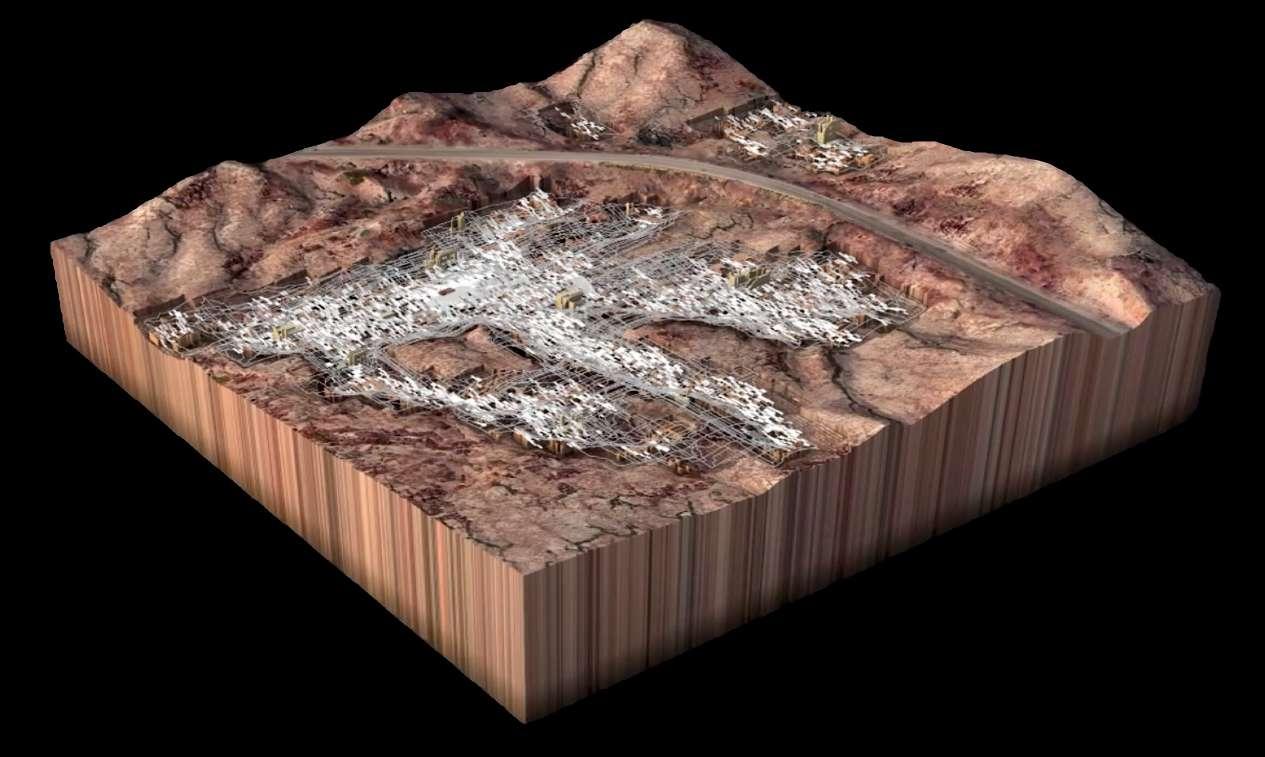

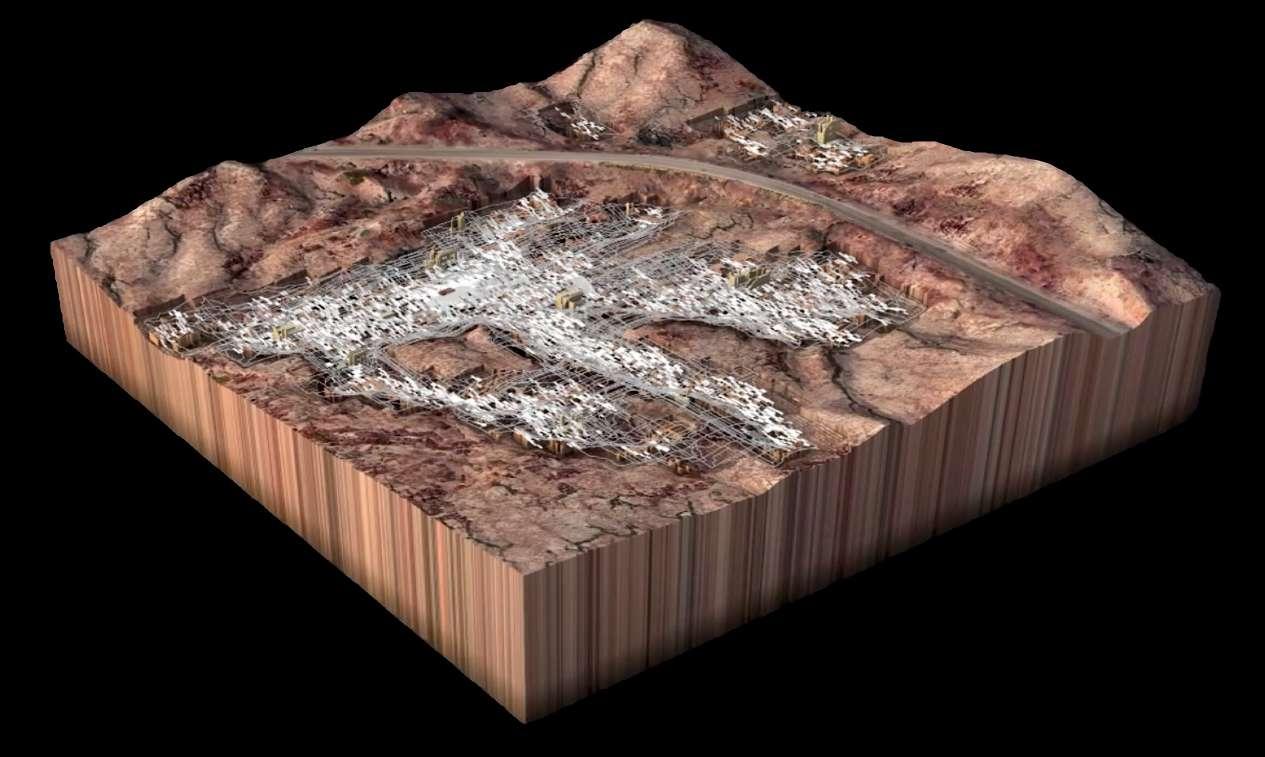

Research project focused on discovering and developing new workflows that merge LiDAR scanning and early AI tools (StyleGAN, Pix2Pix) alongside agent-based Python scripts for urban planning, presented at the 18th Venice Biennale.

Featured on:

1. Drone + LiDAR scan for a 500 x 500 m site reconstruction.

2. CycleGAN for map reinterpretation, translating the original site's aerial view into a desert.

3. Python code for site preparation: agents are placed at the lowest point of every creek, defining the excavation's location and creating flat areas that follow the topography.

4. Custom Pix2Pix AI model trained on floorplans, generating different floorplans that fit each excavated flat area.

5. Pix2Pix to enhance the detail of the floorplans, creating various options to select and adapt into 3D.

6. Java code for the extrusion of walls and ceilings based on the generated floorplans.

7. Python script and Grasshopper definition for the tiles and floors of every unit.

8. Python and Java scripts for the generation of the roof structure, determining every edge of the building's walls and excavation and growing a structure that supports over 1,000 solar panels, which provide both shade and energy to the units below.

project video
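Step 3's agent-based site preparation can be sketched as follows. The original Python agents are not published. This sketch assumes the site is a regular heightmap grid and that agents find each creek's lowest point by steepest descent over their 8 neighbours. Each low point then gets a pad cut down to its own level, so the flat areas step with the topography. `descend`, `creek_bottoms`, `excavate_pads`, and the `spacing` and `radius` parameters are hypothetical.

```python
from typing import List, Set, Tuple

Cell = Tuple[int, int]
Grid = List[List[float]]


def descend(heights: Grid, start: Cell) -> Cell:
    """Move an agent to its lowest 8-neighbour until none is lower (a local minimum)."""
    rows, cols = len(heights), len(heights[0])
    r, c = start
    while True:
        best = (r, c)
        for dr in (-1, 0, 1):
            for dc in (-1, 0, 1):
                nr, nc = r + dr, c + dc
                if 0 <= nr < rows and 0 <= nc < cols and heights[nr][nc] < heights[best[0]][best[1]]:
                    best = (nr, nc)
        if best == (r, c):
            return best  # strict "<" guarantees the walk terminates
        r, c = best


def creek_bottoms(heights: Grid, spacing: int = 2) -> Set[Cell]:
    """Seed agents on a regular grid and collect the distinct low points they settle in."""
    rows, cols = len(heights), len(heights[0])
    return {descend(heights, (r, c)) for r in range(0, rows, spacing) for c in range(0, cols, spacing)}


def excavate_pads(heights: Grid, bottoms: Set[Cell], radius: int = 1) -> Grid:
    """Copy of the terrain with cells near each low point cut down to that point's level."""
    out = [row[:] for row in heights]
    for br, bc in bottoms:
        level = heights[br][bc]
        for r in range(max(0, br - radius), min(len(out), br + radius + 1)):
            for c in range(max(0, bc - radius), min(len(out[0]), bc + radius + 1)):
                out[r][c] = min(out[r][c], level)  # only cut, never fill
    return out
```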

During the Covid-19 pandemic, an immersive VR and web experience was created, allowing users to navigate among colorful creatures for a brief escape from lockdowns.

The 3D-modeled space and creatures were made with Blender and Unreal and deployed as an interactive web tour. An AR filter was also developed for the marketing campaign.

In a challenging landscape of steep slopes and a diagonal storm drain, YK House integrates effortlessly. Designed for three families, it harmonizes well with its surroundings.

Virtual Sales Room / Socovesa Real Estate / Online.

3DVista WebXR + WebRTC platform for online sales experiences, with all the project information, 360° virtual tours, and PC/mobile video calls.

A web platform for online sales experiences, featuring a floorplan, a 360° virtual tour, and video call capabilities, all hosted on 3DVista. It provides a comprehensive and interactive way for potential buyers to explore the project and engage remotely.

Free Form examines translating freeform geometries into larger real-world contexts while maintaining precision. This exploration envisions potential applications in building enclosures, supporting conventional construction methods with AR technologies.

Co-taught and developed a Unity-based interactive mobile and Microsoft HoloLens app for AR model visualization and real-time feedback throughout the building process of a 1:1 parametric façade.

Featured on:

From early topology optimization workflows to the development of a generative script, Generative Chairs explores natural behavior through the lens of computational design, reducing weight while increasing performance.

Figure panels: stress map (sitting, low to high), pipe density (low to high), agents flocking towards the highest stress points, generative chair v.5.

Lower stress = thinner membranes

Higher stress = thicker membranes

In the final step of the project, different algorithms and programs were used to simulate similar behaviors. An early topology optimization in Fusion 360 (left) produced a cleaner output than the custom script in Houdini (right).
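The stress-to-thickness legend can be read as a simple mapping. The sketch below interpolates linearly between a thinnest and thickest membrane. The linear rule and the default bounds (1.0 to 6.0, assumed to be millimetres) are assumptions; the custom Houdini script's actual rule is not described.

```python
from typing import List


def membrane_thickness(stress: List[float], min_thickness: float = 1.0, max_thickness: float = 6.0) -> List[float]:
    """Linearly map per-point stress to membrane thickness: lower stress -> thinner, higher -> thicker."""
    if not stress:
        return []
    lo, hi = min(stress), max(stress)
    if hi == lo:
        # Uniform stress field: no gradient to follow, so use the midpoint thickness
        return [(min_thickness + max_thickness) / 2] * len(stress)
    scale = (max_thickness - min_thickness) / (hi - lo)
    return [min_thickness + (s - lo) * scale for s in stress]


print(membrane_thickness([0.0, 5.0, 10.0]))  # [1.0, 3.5, 6.0]
```

A nonlinear curve (for example, squaring the normalized stress) would concentrate material more aggressively at the highest stress points. The linear version is the simplest reading of the legend.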

In this exercise, the program recognizes multiple closely located objects and assigns a colored circle to each one. The circle changes color based on the proximity of these objects to each other.
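The circle-coloring rule from this exercise can be sketched as follows. It assumes object detection has already produced one center point per object in pixel coordinates; the detection step itself is out of scope. Each circle's color is then driven by the distance to that object's nearest neighbour. The `near` and `far` thresholds and the red-to-green palette are hypothetical choices.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float]
RGB = Tuple[int, int, int]


def nearest_distances(centers: List[Point]) -> List[float]:
    """Distance from each detected object's center to its closest other object."""
    result = []
    for i, (x1, y1) in enumerate(centers):
        others = [math.hypot(x1 - x2, y1 - y2) for j, (x2, y2) in enumerate(centers) if j != i]
        result.append(min(others) if others else math.inf)  # a lone object is "infinitely" far
    return result


def proximity_color(distance: float, near: float = 50.0, far: float = 200.0) -> RGB:
    """Blend from red (at or below `near`) to green (at or beyond `far`)."""
    t = (distance - near) / (far - near)
    t = max(0.0, min(1.0, t))  # clamp to [0, 1]
    return (round(255 * (1 - t)), round(255 * t), 0)


centers = [(0.0, 0.0), (30.0, 0.0), (500.0, 0.0)]
print([proximity_color(d) for d in nearest_distances(centers)])
# [(255, 0, 0), (255, 0, 0), (0, 255, 0)]
```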

project video. project video.

Building upon the previous exercise, the program assigns a bounding box to each object. This exercise will serve as the foundation for a generative structural project in which a digital membrane is generated based on the proximity of two or more objects.
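The bounding-box step and the proximity test that could decide where a membrane spans are sketched below. It assumes each detected object is available as a list of 2D points, such as its contour pixels. The `max_gap` threshold and the rule of pairing any two boxes within that gap are hypothetical stand-ins for "based on the proximity of two or more objects".

```python
from itertools import combinations
from typing import List, Tuple

Box = Tuple[float, float, float, float]  # (min_x, min_y, max_x, max_y)


def bounding_box(points: List[Tuple[float, float]]) -> Box:
    """Axis-aligned bounding box of one object's points."""
    xs = [p[0] for p in points]
    ys = [p[1] for p in points]
    return (min(xs), min(ys), max(xs), max(ys))


def box_gap(a: Box, b: Box) -> float:
    """Shortest distance between two axis-aligned boxes (0 if they touch or overlap)."""
    dx = max(0.0, max(a[0], b[0]) - min(a[2], b[2]))
    dy = max(0.0, max(a[1], b[1]) - min(a[3], b[3]))
    return (dx * dx + dy * dy) ** 0.5


def membrane_pairs(boxes: List[Box], max_gap: float) -> List[Tuple[int, int]]:
    """Index pairs of boxes close enough that a membrane could span between them."""
    return [
        (i, j)
        for i, j in combinations(range(len(boxes)), 2)
        if box_gap(boxes[i], boxes[j]) <= max_gap
    ]
```

Measuring the gap between box edges, rather than between centers, keeps large objects that nearly touch from being treated as far apart.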

A LiDAR camera combined with a 6-axis robot arm enabled the creation of code-generated patterns based on the depth of an object's topography. AI-generated images and colors, produced with CycleGAN, were painted onto sculptures.

A custom computer vision script was used to recognize depth in a foam sculpture. A custom algorithm generated patterns and colors based on the depth data, which were then painted in real time by the 6-axis robot arm equipped with a brush.
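The document does not describe the pattern algorithm or the robot control. The sketch below shows one simple way depth data can drive color. It bins a depth map into equal-width bands, assigns one palette color per band, and groups pixel coordinates by color as stand-ins for brush paths. The depth-map format (a 2D list of distances), `depth_bands`, `brush_strokes`, and the palette are assumptions. Converting pixel coordinates into robot arm targets is out of scope.

```python
from typing import Dict, List, Tuple

RGB = Tuple[int, int, int]


def depth_bands(depth: List[List[float]], n_bands: int) -> List[List[int]]:
    """Quantize a depth map into n_bands equal-width bands; band 0 holds the smallest depths."""
    flat = [d for row in depth for d in row]
    lo, hi = min(flat), max(flat)
    width = (hi - lo) / n_bands or 1.0  # avoid division by zero on a perfectly flat surface
    return [[min(int((d - lo) / width), n_bands - 1) for d in row] for row in depth]


def brush_strokes(depth: List[List[float]], palette: List[RGB]) -> Dict[RGB, List[Tuple[int, int]]]:
    """Group pixel coordinates by the palette color of their depth band (one list per color)."""
    bands = depth_bands(depth, len(palette))
    strokes: Dict[RGB, List[Tuple[int, int]]] = {color: [] for color in palette}
    for r, row in enumerate(bands):
        for c, band in enumerate(row):
            strokes[palette[band]].append((r, c))
    return strokes
```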