Year in review

Advancing transformational capabilities in science and technology to meet defense, security and intelligence mission needs through novel research and training experiences

2023

“ASU is a leader in national security and defense research, driving impact and new approaches toward critical technology areas such as cybersecurity, artificial intelligence, advanced communications and microelectronics. The Global Security Initiative is central to many of these efforts, convening world-class, interdisciplinary researchers and experts around mission-critical areas in support of national security.

This mission could not be more relevant or urgent, and we are proud to share samples of our progress in the following pages.”

Nadya Bliss, Executive director, ASU Global Security Initiative

01 Tomorrow’s teammates

Faculty’s collaborative research advances human-robot teamwork

The role of AI in higher education

02 Securing the cyber frontier

Google finds what they're searching for in student cybersecurity researcher

Evaluating the Biden cybersecurity strategy

Assessing critical vulnerabilities in health care software

03 Cutting through the clutter

Examining the danger of AI-generated disinformation

NDSI contributes to most detailed glimpse yet of Earth's seasonal data from past 11,000 years

04 Security problem-solving

CAOE hosts 30-hour student competition

Trust, but verify: The quest to measure our trust in AI

05 Connecting research to strategic needs

The future of microchips is flexible

Blueprinting domestic microchip manufacturing

06 By the numbers

Learn more about GSI.

01 Tomorrow’s teammates

Learn how we are leading the science of teaming.


“This year’s rapid developments in artificial intelligence, often not considering the human component or only considering humans as an afterthought, have brought to light the importance of our mission.

Researchers at the Center for Human, Artificial Intelligence, and Robot Teaming (CHART) strive to augment human intelligence rather than replace it, and see consideration of the humans as essential to an effective human-AI team.”

Nancy Cooke, Director of CHART


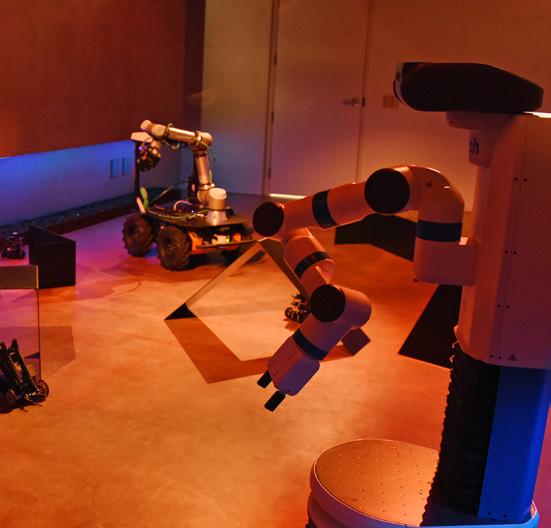

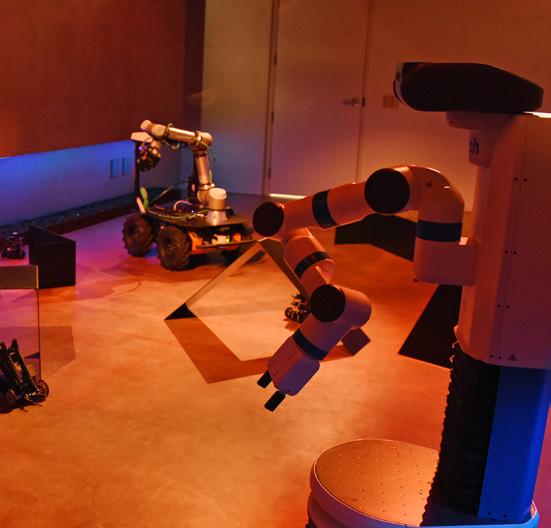

Faculty’s collaborative research advances human-robot teamwork

See the robots team up.

Redefining how robots and humans work together — from developing and deploying technologies to the management and evaluation of human, AI and robot teams — is the organizing principle behind the Center for Human, Artificial Intelligence, and Robot Teaming.

CHART’s many affiliate researchers are foundational to this mission, their interdisciplinary expertise driving the center’s impact across multiple fields, including space exploration, search and rescue and data privacy.

Cross-disciplinary research drives real-world impact

Take Wenlong Zhang, an associate professor in the School of Manufacturing Systems and Networks at the Ira A. Fulton Schools of Engineering. His research interests lie in the design, modeling and control of cyber-physical systems, with applications to health care, robotics and manufacturing.

He is one of many researchers bringing interdisciplinary thought to CHART’s General Human Operation of Systems as Teams Lab, a state-of-the-art scientific test bed and art installation. Within the GHOST Lab, researchers examine people’s ability to work with robots and AI in scenarios such as a life-threatening meteor strike on a lunar colony.

“When we started discussions of the lab, we wanted to be able to create a space that could help us prepare for future space exploration and simultaneously be a space to simulate a group of soldiers working with next-generation army combat vehicles,” Zhang said.

Zhang was instrumental in defining the GHOST Lab as a space for interdisciplinary thinking and collaboration, as well as a place to demonstrate applicable research to mission-driven funding agencies.

Providing perspective on data privacy

Privacy researcher Rakibul Hasan believes data privacy is just as important as security, an outlook he lends to CHART’s research.

“I try to understand the problem space from the user, from the actual people who will be using the system, to know their perspectives, and then identify usable fixes and implement them,” said Hasan, an assistant professor in the School of Computing and Augmented Intelligence. “Joining CHART appealed to me because we use so many techniques, from psychological and internet measurement methods to machine learning and artificial intelligence, to create real-world solutions.”

Hasan is currently working on a project at the intersection of privacy and disinformation. The project examines what drives people to believe or not believe misinformation and how media consumption habits can affect those beliefs.

“One finding we had was that people who follow online sources had different results compared to those who use more traditional sources, like print or television. The first group is more likely to believe misleading misinformation,” he said.

The Center for Human, Artificial Intelligence, and Robot Teaming (CHART) is a multidisciplinary center leading the charge to develop methods to assemble the most effective human-synthetic agent teams in support of national security.

$18 million+ active research portfolio

1,570+ hours of human subjects research

110+ students and affiliated faculty

Accelerating human-robot collaboration through algorithms

Despite impressive advances to date, one of CHART’s main focus areas, human-robot teaming, has yet to achieve the goal of general-purpose robots that act alongside humans in the real world. Introducing algorithms that will accelerate the transition of robots out of the research lab and into long-term deployment is the focus of affiliate Pooyan Fazli.

“My long-term goal is to facilitate the coordination, communication, coexistence and mutual adaptation between robots and humans in dynamic, uncertain, adversarial and multigoal environments,” said Fazli, an assistant professor at ASU's School of Arts, Media and Engineering.

“The chance to collaborate with other fellow affiliates who share these same interests is an exciting opportunity for me. I believe that together, we are stronger under CHART’s umbrella,” he said.


YuMi: a robot that can skillfully manipulate objects


Applying human-dog communication to teaming research

For many years, humans have been relying on dogs for their special capabilities, such as their ability to smell and hear things beyond human perception. However, while they may outperform humans at some things, there is the inevitable complication of interaction without natural language-based communication.

This challenge is one area of research for affiliate Heather Lum, assistant professor in human systems engineering at ASU’s Polytechnic School.

“You have to be aware of what the dog is telling you. If not, you cannot be an effective teammate,” Lum said. “We teach the dogs to have purposeful disobedience when it has information we do not yet know. This comes back to human-robot teaming — what are we going to do when a robot is disobedient for a beneficial purpose to the team?”

Lum also explores human factors psychology with an emphasis on human-technology interactions. She joined CHART from Embry-Riddle Aeronautical University with high hopes of connecting her exciting research interests to the work of other affiliates in the field of human-robot teaming.

“Being at such a prestigious university that has other faculty in similar fields is amazing. Bouncing ideas off each other and interacting with others who share similar areas of focus is wonderful,” Lum said.

The role of AI in higher education

In recent decades, the advent of machine learning and neural networks — among other key advancements in building artificial intelligence systems that mirror human capabilities — has dramatically shifted many landscapes, ushering in a new era of AI.

Today, AI’s fingerprints can be found everywhere — from voice assistants in our homes to advanced research tools in our labs. Education is no exception. In the not-so-distant future, AI-driven personalized learning platforms are set to reshape the learning experience, using personal data to tailor content based on each student's needs.

Lance Gharavi, a research affiliate with CHART, provided insight into how AI is currently shaping education across the university and beyond.

The promise of generative AI in higher education is compelling. It can bring abstract ideas to life through visual aids, enhancing teaching and learning. It can handle routine tasks, freeing educators to focus more on teaching and students' individual needs.


“I think it’s a marvelously exciting time to be a student, an educator, a researcher or an artist because we have to fundamentally look at our basic assumptions and ideas about how we interact with each other and our environment,” said Lance Gharavi, a professor in the School of Music, Dance and Theatre, part of the Herberger Institute for Design and Arts.

The challenge, Gharavi pointed out, is ensuring that we actively guide this transformative process.

“We have to ask ourselves why we’ve been doing things a certain way for decades, and maybe rethink our approach — and that is both exhilarating and anxiety producing.”

But where do we draw the line?

“There’s a problem with the limiting principle, a rule or guideline that tells us when to stop or how far to go with something,” Gharavi said. “On the one hand, we have something as simple as artificial intelligence like spell check, and on the other, we have something as sophisticated as ChatGPT; it’s a complicated conversation. Because if we’re not acknowledging the messiness, we’re not being honest.”

But Gharavi acknowledged AI’s potential to enhance teaching and learning, as long as we keep an eye on the ethical implications.

“We find ourselves in a time that is both exhilarating and frightening. Change is scary and it's exciting,” Gharavi said. “I think the potential to radically transform higher education is really there, and we have to make sure that we are working vigorously to steer how that change happens.”


02 Securing the cyber frontier

Learn how we are addressing cybersecurity challenges.


“We’re seeing increasing acknowledgment of the impact that cybersecurity breaches have on society. The strategy introduced by the White House stands as a framework to strengthen the country's resilience to cyber threats.

At the core of this strategy lies education, encompassing fundamental cybersecurity knowledge for all, inclusive and adaptable education initiatives, and enhanced training to take our workforce to a higher level of expertise. The Center for Cybersecurity and Trusted Foundations (CTF) is proud to be a part of advancing these objectives.”

Adam Doupé, Director of CTF


Students hack security camera in a snap

Months of work and experimentation paid off for student security researchers Mitchell Zakocs and Justin Miller when they successfully hacked a Wyze security camera in one minute and 44 seconds at Pwn2Own, held October 2023 in Toronto. As the only student team at the event, the pair represented CTF’s research lab, led by Director Adam Doupé. The rapid hack secured the lab a $6,750 cash prize.

“We find and report these zero-day vulnerabilities to the vendors so they can patch the vulnerability, as otherwise they can be discovered and used by bad actors — which is especially dangerous in something like a home surveillance camera,” said Zakocs.

Held twice a year, Pwn2Own is a security conference and hacking contest that highlights the vulnerability of widely used devices and software. Simply by scanning a QR code and restarting the device, Zakocs and Miller were able to hack the camera and play music through it, demonstrating the vulnerability of such consumer products.

Google finds what they're searching for in student cybersecurity researcher

In October 2023, Google announced that Kyle Zeng was the recipient of the prestigious Google PhD Fellowship for his innovative solutions to cybersecurity attacks.

Zeng, the first ASU student to receive the coveted award, is a research assistant in CTF’s lab and a PhD student in the School of Computing and Augmented Intelligence.

“I was thrilled when I knew I got the award,” said Zeng. “This award means a lot to me.”

The Google PhD Fellowship is awarded globally to exemplary students in computer science and related fields, acknowledges students' contributions to their areas of specialty, and provides funding for their education and research. The fellowship also pairs recipients with a research mentor at Google.

“Even a big tech company like Google recognizes my research and is willing to sponsor me,” said Zeng. “Winning this award motivates me to dive further into meaningful research and bring better security to all users.”

Zeng’s research addresses the real dangers of vulnerabilities in the world of cybersecurity.

Undiscovered exploitable vulnerabilities in systems allow attackers to fully control a victim's mobile phone, laptop and all other electronic devices, Zeng said.

“Attackers can compromise a victim's system without them doing anything, not even clicking a link. It allows attackers to steal critical information, (like) bank accounts, from a victim, track their locations, record their conversations and more,” he said.

Zeng’s advisor, Tiffany Bao, said she is proud of his accomplishments. Bao is the associate director of research acceleration at the Center for Cybersecurity and Trusted Foundations.

“He is doing a lot of amazing and impactful research,” said Bao, an assistant professor in the School of Computing and Augmented Intelligence. “Zeng’s recognition means ASU is not only creating research but is making a social impact on cybersecurity to industry.”

The Center for Cybersecurity and Trusted Foundations (CTF) focuses on the cybersecurity pipeline, providing opportunities for learners across ages and skill levels.

$54.5 million+ in submitted proposals in 2023

7,316 global users on the pwn.college training platform

14 research publications in top-tier security conferences and journals


Evaluating the Biden cybersecurity strategy

In March 2023, the Biden administration outlined its vision for a more secure cyberspace with its release of a National Cybersecurity Strategy, which places more responsibility on software developers and other institutions to have safeguards in place that ensure their systems cannot be hacked.

The administration also announced it is proposing legislation that would establish liability for software developers that fail to take reasonable precautions to secure their products. Additionally, the administration wants to incentivize businesses and developers to invest long-term in cybersecurity.

Nadya Bliss, executive director of the Global Security Initiative, weighed in on the administration’s plan following its announcement.

Editor's note: The following interview has been lightly edited for length and clarity.

Question: What are your general takeaways regarding the new strategy?

Answer: The emphasis on incentives is incredibly positive. One of the biggest challenges with cybersecurity is that generally we design everything with capability first in mind and security second. If you think about how the market functions, everybody wants the next best thing. As a result, we have this system that is really not designed for security.

Second, I think it’s positive that the strategy has focused on prioritizing the burden for cybersecurity on sectors and companies that can bear it. Right now, too much responsibility falls on the individual.

Finally, the strategy is looking to the future of cybersecurity. Things like post-quantum encryption systems, artificial intelligence, biotechnology, clean energy, all of those have significant cybersecurity aspects. Sometimes they’re positive for cybersecurity, sometimes they increase the attack surface. These outlined research initiatives are another important aspect of the strategy.

Q: How much of a difference do you think offering incentives could make?

A: I think there is a significant benefit to elevating this to a core element of the strategy. I am the current vice chair of the Computing Community Consortium. We have a white paper on designing secure ecosystems that involves the notion of incentives. I think without having that as a top-level federal strategy, no progress is going to be made. The fact that it’s stated is very important. Whether or not it’s actually going to affect the security of our system is going to depend on specific domains and specific policies and how it is implemented.

Q: How much of this strategy do you think will actually be implemented?

A: I am feeling reasonably positive that this is going to be a high priority, as it is also aligned with a number of economic priorities and a number of other policy priorities, such as the CHIPS and Science Act, and all of the policy priorities around the new energy future. Cybersecurity is incredibly important in context of the changing climate.

Q: Final question. Why have products or software been designed with more capability in mind than security?

A: Think about what you’re looking for when you are buying something like a phone. Does it have a really nice camera? Is it fast? Very few people go to a store to buy a piece of technology and say, “Can you tell me what the security features are?”

I’m a computer scientist, and I ask those questions all the time, and usually the feedback I get from the person in the store is that no one ever asks this.

But I will tell you the focus is shifting. People are increasingly worried about their identity being stolen. They’re aware of data breaches. People worry about the resilience of infrastructure. People are thinking about these things a lot more and when it’s at the forefront at a national level, from policy issued by the White House, I think it’s a very positive focus.

Assessing critical vulnerabilities in health care software

Many health care systems and devices run on outdated infrastructures and code with known vulnerabilities and without software patches, putting much of the industry at great risk.

To address this, the Center for Cybersecurity and Trusted Foundations was awarded $10 million from the Advanced Research Projects Agency for Health in September 2023 to develop a cyber reasoning system called RxCRS to assess vulnerabilities in existing health care-related software.

The system will be compatible with legacy programs, search for vulnerabilities automatically and generate patches that meet the needs of the health care field.

CTF is assembling an interdisciplinary team from across ASU to develop RxCRS, drawing expertise from the Ira A. Fulton Schools of Engineering, Biodesign Institute and the School of Life Sciences.


03 Cutting through the clutter

Learn how we are defending against information-related threats.


“Information warfare is increasingly undermining the very fabric of our society by fostering division, eroding our collective reality, and weakening trust in the pillars of democracy.

At the Center on Narrative, Disinformation and Strategic Influence (NDSI), we understand that effectively countering information warfare is not only critical but a fundamentally sociotechnical problem that demands interdisciplinary solutions. NDSI is committed to empowering the public with tools to discern and withstand the challenges of living in a manipulated information environment.”

Joshua Garland, Interim Director of NDSI


Examining the danger of AI-generated disinformation

GSI’s Center on Narrative, Disinformation and Strategic Influence conducts interdisciplinary research that develops tools and insights for decision-makers, policymakers, civil society groups and communities in order to support holistic defenses against information-related threats to global democracy and the rules-based order.

23 students contributed to research

21 scientific articles published

13 affiliate faculty across 9 departments

In many ways, 2023 was a breakout year for artificial intelligence, with explosive advancements and the adoption of generative AI in particular becoming more widespread. Since the debut of generative AI tools such as ChatGPT, a litany of alarms has sounded about the threats it poses to reputations, jobs and privacy. GSI experts say another threat looms large but often escapes our notice: how artificial intelligence tools can be used for disinformation and its associated security implications.

The holy grail of disinformation

“The holy grail of disinformation research is to not only detect manipulation, but also intent. It’s at the heart of a lot of national security questions,” says Joshua Garland, interim director at ASU’s Center on Narrative, Disinformation and Strategic Influence.

Detecting disinformation is a focus of Semantic Information Defender (SID), a federal contract with software company Kitware Inc. in which ASU is participating. Funded by the U.S. Defense Advanced Research Projects Agency (DARPA), SID aims to produce new falsified-media detection technology. The multialgorithm system will ingest significant amounts of media data, detect falsified media, attribute where it came from and characterize malicious disinformation.

“Disinformation is a direct threat to U.S. democracy because it creates polarization and a lack of shared reality between citizens. This will most likely be severely exacerbated by generative AI technologies like large language models,” said Garland.

The disinformation and polarization surrounding the topic of climate change could also worsen.

“The Department of Defense has recognized climate change as a national security threat,” he said. “So, if you have AI producing false information and exacerbating misperceptions about climate policy, that’s a threat to national security.”

The promise (and pitfalls) of rapid adoption

“Right now, we are seeing rapid adoption of an incredibly sophisticated technology, and there’s a significant disconnect between the people who have developed this technology and the people who are using it. Whenever this sort of thing happens, there are usually substantial security implications,” said Nadya Bliss, executive director of ASU’s Global Security Initiative, who also serves as chair of the DARPA Information Science and Technology Study Group.

She said ChatGPT could be exploited to craft phishing emails and messages, targeting unsuspecting victims and tricking them into revealing sensitive information or installing malware. The technology can produce a high volume of these emails that are harder to detect.

“There’s the potential to accelerate and at the same time reduce the cost of rather sophisticated phishing attacks,” Bliss said.

Garland added that the technology’s climate impact goes beyond disinformation. Programs like ChatGPT are energy intensive, requiring massive server farms to provide enough data to train the powerful programs. Cooling those data centers consumes vast amounts of water, as well. Given the chatbot’s unprecedented popularity, researchers like Garland fear it could take a troubling toll on water supplies amid historic droughts in the U.S.

ChatGPT also poses a cybersecurity threat through its ability to rapidly generate malicious code that could enable attackers to create and deploy new threats faster, outpacing the development of security countermeasures. The generated code could be rapidly updated to dodge detection by traditional antivirus software and signature-based detection mechanisms.


NDSI contributes to most detailed glimpse yet of Earth's seasonal data from past 11,000 years

Joshua Garland, interim director of NDSI, is part of an international team of scientists that has completed the most detailed look yet at the planet’s recent climatic history, including seasonal temperatures dating back 11,000 years to the beginning of what is known as the Holocene period.

The study, published January 2023 in Nature, is the very first seasonal temperature record of its kind, from anywhere in the world.

“The goal of the research team was to push the boundaries of what is possible with past climate interpretations, and for us that meant trying to understand climate at the shortest timescales, in this case seasonally, from summer to winter, year-by-year, for many thousands of years,” said Tyler Jones, lead author on the study and assistant research professor and fellow at the Institute of Arctic and Alpine Research, or INSTAAR.

Jones teamed up with Garland to utilize his expertise in information theory to reexamine ice cores from the West Antarctic ice sheet.

“Using information theory, we can target ice and resample it, using new technologies,” said Garland. “We're able to get a much clearer picture of what was happening in the past, which is great. That means we maximized the value of the ice that we had.”

Their highly detailed data on long-term climate patterns of the past provide an important baseline for scientists who study the impacts of human-caused greenhouse gas emissions on our present and future climate. By knowing which planetary cycles occur naturally and why, researchers can better identify the human influence on climate change and its impacts on global temperatures.


Scott Ruston departs ASU to apply research expertise to new Navy initiative

Since entering the U.S. Navy reserves, Rear Adm. Scott Ruston has balanced dual careers as a Naval officer and an academic researcher and founding director of the Global Security Initiative’s Center on Narrative, Disinformation and Strategic Influence.

In May 2023, he departed GSI to apply his expertise in strategic communication and narrative analysis to a new Navy initiative called Get Real, Get Better, overseeing its rollout to more than 340,000 active-duty sailors and 100,000 reservists. The new initiative provides a framework for Navy teams, leaders and personnel to identify strengths and shortcomings and use that analysis to improve performance. Ruston believes his experience in critical analysis will help him shift the culture of the Navy.

“In my opinion, what defines our organizational culture is not a high degree of pause, step back, take a look from a different angle and analyze,” Ruston said. “But that's exactly what we do in research and scholarship. So, bringing that perspective and helping imbue that into the culture is an advantage I bring to the job.”


04 Security problem-solving

Learn about our tools and technologies that enhance homeland security operations.


“The Center for Accelerating Operational Efficiency (CAOE) stands at the forefront of homeland security innovation, channeling research toward innovative technologies and collaborative strategies.

As a recognized Center of Excellence, CAOE's impact extends from defending against cyber threats to optimizing responses to natural disasters, ensuring the Department of Homeland Security's operational efficiency and preparedness.”

Ross Maciejewski, Director of CAOE


CAOE hosts 30-hour student competition

Guarding ‘soft’ locations — places of worship, museums, schools, stadiums and other public places — from terror attacks presents a complex challenge for those protecting them. To obtain some fresh eyes and new perspectives on these challenges, the Center for Accelerating Operational Efficiency hosted a two-day student competition in February 2023.

The Designing Actionable Solutions for a Secure Homeland Hackathon, or DASSH, drew more than 20 teams from 11 colleges across the country to design effective responses to soft location threats for the Department of Homeland Security. The weekend-long student-engaged design challenge paired students with academic and industry mentors to solve problem scenarios.

The winning team was made up of five computer science students from ASU’s Ira A. Fulton Schools of Engineering: Nathan McAvoy, Fawwaz Firdaus, Kalyanam Priyam Dewri, Camelia Ariana Binti Ahmad Nasri and Rui Heng Foo.

Their design, titled Live Emergency Response System, secured them a $10,000 prize. It uses artificial intelligence in closed-circuit television cameras to detect firearms on a potential terrorist, and radar mapping tracks the target’s movements and provides first responders with information, including the suspect’s location.

“Our national security depends on the continuous innovation of strategies and the cultivation of a skilled workforce to foresee and prevent emerging threats,” said Ron Askin, executive director, CAOE. “DASSH provides an important opportunity to engage a new cadre of students in sharing their ideas and talents and, hopefully, to pursue Homeland Security Enterprise careers, ultimately minimizing the potential sources and consequences of disasters.”


The Center for Accelerating Operational Efficiency (CAOE), led by Arizona State University, develops and applies advanced analytical tools and technologies to enhance planning, information sharing and real-time decision-making in homeland security operations.

$21 million+ total research expenditures

170+ students

150+ affiliated research faculty from 60 institutions


Trust, but verify: The quest to measure our trust in AI

Artificial intelligence is a growing presence in our lives and has great potential for good — but people working with these systems may have low trust in them. One research team at the Center for Accelerating Operational Efficiency is working to address that concern by testing a U.S. Department of Homeland Security-funded tool that could help government and industry identify and develop trustworthy AI technology.

“Things that would lead to low trust are if the technology’s purpose, its process or its performance were not aligned with your expectations,” said CAOE researcher Erin Chiou, who is also an associate professor of human systems engineering in The Polytechnic School, part of the Ira A. Fulton Schools of Engineering.

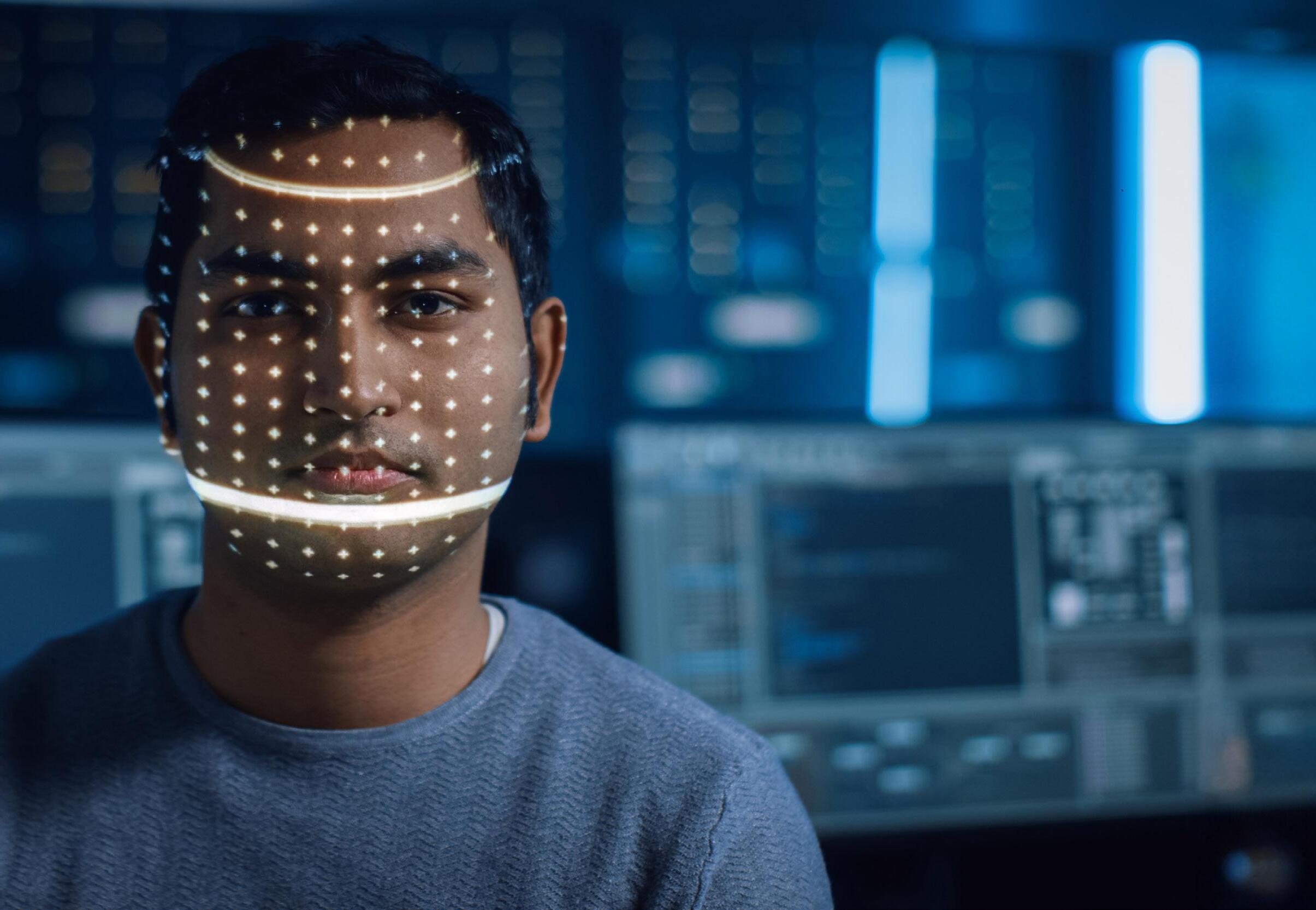

The tool, called the Multisource AI Scorecard Table (MAST), is aimed at aligning those expectations. It’s based on a set of standards originally developed to evaluate the trustworthiness of human-written intelligence reports. It uses a set of nine criteria, including describing the credibility of sources, communicating uncertainties, making logical arguments and giving accurate assessments.

To test whether MAST can effectively measure the trustworthiness of AI systems, volunteer groups of transportation security officers interacted with one of two simulated AI systems that the ASU team created.

One version of the simulated AI system was built to rate highly on the MAST criteria. A second version was built to rate low on the MAST criteria. After completing their tasks with one of these systems, the officers completed a survey that included questions on how trustworthy they consider AI.

The Transportation Security Administration’s federal security directors from Phoenix Sky Harbor International Airport, San Diego International Airport and Las Vegas Harry Reid International Airport each organized volunteer officers to participate in the study.

CAOE has a history of working with the TSA. Previously, Chiou’s team participated in piloting a new AI-powered technology at one of Sky Harbor’s terminals. The new screening machine uses facial recognition to help a human document checker verify whether a person in the security line matches their ID photo. That study showed that the technology increased accuracy in screening. The current project is testing whether new, MAST-informed features will affect officers’ trust perceptions and performance with the technology.

“The Transportation Security Administration team in Arizona has had the privilege to partner with ASU and CAOE for the past several years. We are particularly excited to have the opportunity to partner with them on this project involving artificial intelligence,” said Brian W. Towle, the assistant federal security director of TSA Arizona. “With the use of AI rapidly growing across government and private sector organizations around the globe, there is significant value in increasing public awareness and confidence in this technology.”

For the fieldwork phase of the current project, the officers viewed images of people and ID photos as if they were in the document checker position. The simulated AI system gave them recommendations for whether the images and ID photos matched. The officer makes the final decision about whether to let a person through the line, which is why their trust in the AI recommendation matters.

AI-powered face matching technology is already in place in at least one terminal of Sky Harbor, Chiou says, and similar systems operate in airports in the United Kingdom and Canada. While it may take a while for airports nationwide to acquire this technology, we are likely to encounter it more and more in the future as we travel.

If the ASU team is able to show that the MAST tool is useful for assessing AI trustworthiness, it will help in building and buying systems that people can rely on, paving the way for AI’s smooth integration into critical sectors, protecting national security and multiplying its power for positive impact.


05 Connecting research to strategic needs


In the 1990s, the United States produced approximately 37% of the global microchip supply. Today, when microchips are more important than ever, that figure sits at 12%. Chips and advanced semiconductors underpin how we work, travel, stay healthy and defend the nation. They are vital components of computers, cellphones, transportation infrastructure, MRI machines and even fighter aircraft.

Our dependence on these devices and their overseas supply is cause for concern at the highest levels of the U.S. government, but the Defense Advanced Research Projects Agency, or DARPA, an agency of the U.S. Department of Defense, is working to stay ahead of the issue.

With the Electronics Resurgence Initiative, DARPA is endeavoring to secure U.S. leadership in cross-functional, next-generation microelectronics research, development and manufacturing. The initiative serves both national security and commercial economic interests, enhancing competitiveness with a range of forward-looking programs and research awards.

The Global Security Initiative is aiding this effort by connecting researchers with funding opportunities to advance the research and domestic production of next-generation microelectronics.


The future of microchips is flexible

Professor Dan Bliss, who heads the Center for Wireless Information Systems and Computational Architectures, or WISCA, received a $17 million DARPA grant to support research into flexible chip architectures.

Computational architecture has become more complex in design and manufacture, triggering an escalation in power constraints. Bliss and his team have been working to create a new platform for complex, high-performance processors that are more power efficient and easier to use, with a new kind of chip that provides an alternative processing pathway.

WISCA is developing radio chips that can mix and filter signals using software instead of hardware, allowing more devices to transmit and receive signals without interference — potentially improving mobile and satellite communications.

“Typically, you must pick either rigid, efficient processing or flexible processing that is 100 times less efficient,” said Bliss, a faculty member of the School of Electrical, Computer and Energy Engineering in the Ira A. Fulton Schools of Engineering. “With our approach, we simultaneously enable both characteristics by integrating advanced accelerators; dynamic, intelligent resource management; and sophisticated runtime software. Our advances have the potential to dramatically improve systems for everything from your cellphone or WiFi router to sophisticated DOD satellites.”

The project also has implications for retooling U.S. electronics manufacturing to be more competitive on the world stage and to abate challenges to electronics security.


Blueprinting domestic microchip manufacturing

Next-Generation Microelectronics Manufacturing, or NGMM, is a DARPA program to develop systems for domestically building 3D heterogeneously integrated, or 3DHI, microelectronics. 3DHI microelectronics use a new architecture that can improve chip efficiency compared to current 2D and 3D designs. The program is part of DARPA’s larger Electronics Resurgence Initiative.

Hongbin Yu, a professor of electrical engineering, leads a team funded by a $1.5 million grant through the NGMM program.

“We’re thinking about how we can make the electrical connections reliable and cost effective,” said Yu, a faculty member in the School of Electrical, Computer and Energy Engineering, part of the Fulton Schools. “We’re also looking at aspects of how to simplify the manufacturing process. We have to do it cheaply; if you spend $1 billion to make one 3DHI microsystem device, that doesn’t work.”

DARPA’s ultimate goal is to create a manufacturing facility, or multiple facilities if needed, that will enable industry, government and academia to create prototypes of 3DHI devices on a small scale across the U.S., with the additional aim of improving national security.


06 By the numbers

Through our expertise, public leadership and decision-maker engagement, GSI is driving advances in critical technology areas key to national security. Our research expenditures have more than tripled over the past nine years.

In fiscal year 2023, GSI research and programming centers generated:

93 proposals submitted in FY23

$28.8M+ total research expenditure

$210M+ in total research expenditures since 2015

69 active grants across the portfolio in FY23

© 2024 ABOR. All rights reserved. 1/2024

[Chart: fiscal years FY15 through FY23 on the horizontal axis; dollar amounts from $5M to $30M on the vertical axis]

globalsecurity.asu.edu

Global Security Initiative is a unit of ASU Knowledge Enterprise. Produced by ASU Knowledge Enterprise. ©2024 Arizona Board of Regents. All rights reserved. GSI year in review 5/2024.