The Future Regulation of Artificial Intelligence is Taking Shape

The European Commission (‘EC’) published its White Paper on Artificial Intelligence in February 2020 and, in April 2021, published its proposal for a Regulation Laying Down Harmonised Rules on Artificial Intelligence (Artificial Intelligence Act) (the ‘AI Act’). With the AI Act, the European Union (‘EU’) was the first out of the gate globally with a proposal for a comprehensive framework for regulating AI, but as discussed below, the UK and others have proposals of their own. An initial consultation period elicited hundreds of responses from across industry, governmental and public bodies, and civil society, which highlight some of the key areas that will shape the legislative debate as the AI Act progresses toward adoption.

A human-centred focus and a wide scope of application

The Explanatory Memorandum accompanying the official text of the AI Act frames the EC’s aim for the new law: AI should be a tool for people and be a force for good in society with the ultimate aim of increasing human wellbeing. Rules for AI available in the Union market or otherwise affecting people in the Union should therefore be human centric, so that people can trust that the technology is used in a way that is safe and compliant with the law, including respect for fundamental rights.

The AI Act runs to 85 articles and 9 annexes, setting out the proposed rules for placing on the market, putting into service and use of AI systems in the EU. Like the General Data Protection Regulation (‘GDPR’), the AI Act will have extra-territorial effect where the ‘output’ of an AI system is used in the EU.

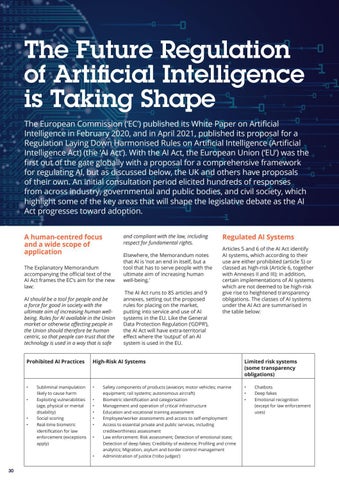

Articles 5 and 6 of the AI Act identify AI systems which, according to their use, are either prohibited (Article 5) or classed as high-risk (Article 6, together with Annexes II and III). In addition, certain implementations of AI systems which are not deemed to be high-risk give rise to heightened transparency obligations. The classes of AI systems under the AI Act are summarised in the table below:

Regulated AI Systems

Prohibited AI Practices

• Subliminal manipulation likely to cause harm
• Exploiting vulnerabilities (age, physical or mental disability)
• Social scoring
• Real-time biometric identification for law enforcement (exceptions apply)

High-Risk AI Systems

• Safety components of products (aviation; motor vehicles; marine equipment; rail systems; autonomous aircraft)
• Biometric identification and categorisation
• Management and operation of critical infrastructure
• Education and vocational training assessment
• Employee/worker assessments and access to self-employment
• Access to essential private and public services, including creditworthiness assessment
• Law enforcement: risk assessment; detection of emotional state; detection of deep fakes; credibility of evidence; profiling and crime analytics
• Migration, asylum and border control management
• Administration of justice (‘robo judges’)

Limited risk systems (some transparency obligations)

• Chatbots
• Deep fakes
• Emotion recognition (except for law enforcement uses)

Elsewhere, the Memorandum notes that AI is ‘not an end in itself, but a tool that has to serve people with the ultimate aim of increasing human well-being.’