Authored by: Raghabendra Rout

Introduction

Memory is the backbone of modern computing systems, and the shift from DDR4 to DDR5 represents one of the most significant generational leaps in recent years. DDR5 DRAM products provide low latency, high performance, almost unlimited access endurance, and low power consumption, which makes them suitable for applications ranging from complex system deployments to hand-held devices. DDR5 memory devices support higher data rates than DDR4 at a lower I/O voltage. DDR5 DRAMs and dual in-line memory modules (DIMMs) based on DDR5 DRAMs are industry-standard products defined by the Joint Electron Device Engineering Council (JEDEC).

DDR5 Features and Applications

The initial DDR5 specification defines data rates of up to 6400 MT/s at a 1.1 V I/O voltage, addressing the needs of several applications, such as:

• Networking

• Cloud Computing

• PCs and Servers

• Embedded Computing

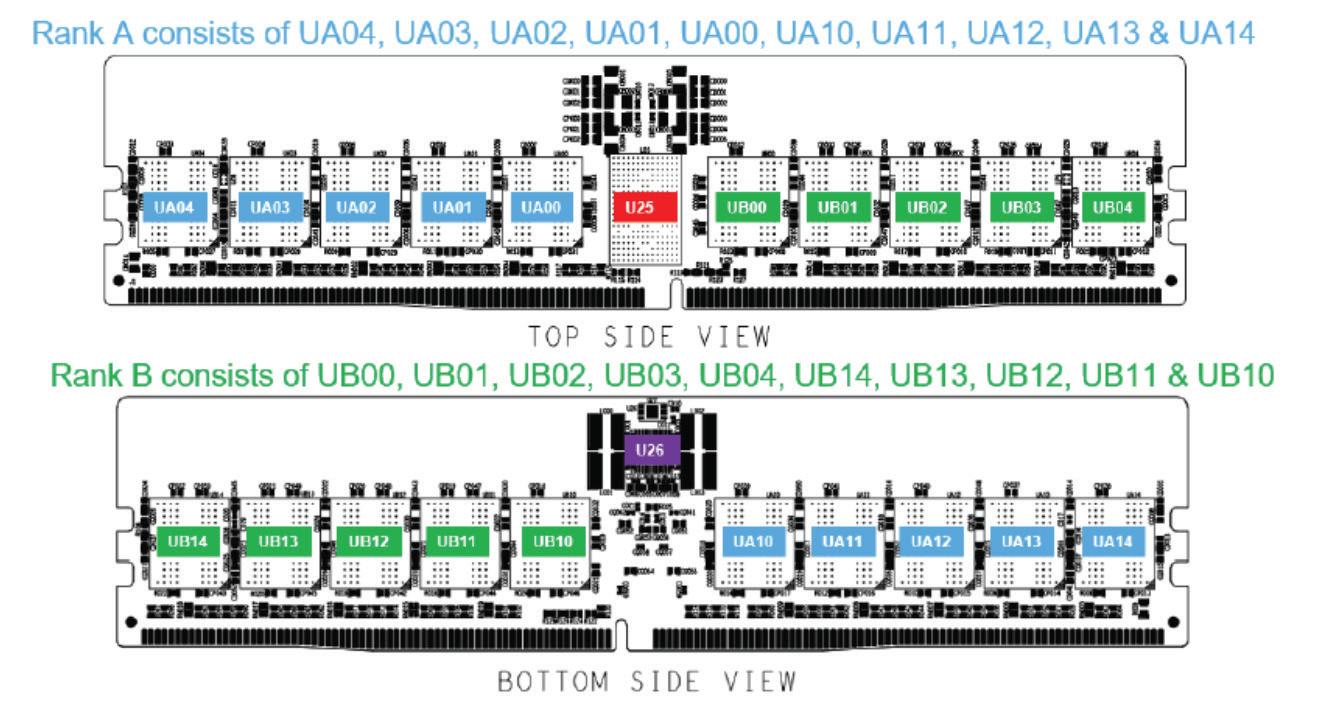

DIMMs are built using x4, x8, or x16 DRAMs, which suit applications with different priorities. Some compute-intensive applications can implement their memory subsystem using x80 DIMMs built from more cost-effective x8 or x16 DRAMs. Such applications can also leverage the enhanced Reliability, Availability, and Serviceability (RAS) features of DDR5-based DIMMs to minimize downtime during memory-related failures.
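To illustrate how device width sets the DRAM count on an x80 DIMM, the sketch below assumes the usual DDR5 ECC DIMM layout of two independent 40-bit sub-channels, each carrying 32 data bits plus 8 ECC bits. The helper `devices_per_rank` is hypothetical. It only handles widths that tile both the data and ECC portions evenly, so it rejects x16; real x16-based designs use arrangements this simplified model does not cover.

```python
# Sketch: DRAM devices per rank on a DDR5 x80 (ECC) DIMM.
# Assumption: 2 independent sub-channels, each 32 data bits + 8 ECC bits.
SUBCHANNELS_PER_DIMM = 2
DATA_BITS_PER_SUBCHANNEL = 32
ECC_BITS_PER_SUBCHANNEL = 8


def devices_per_rank(device_width: int) -> dict:
    """Return data/ECC device counts per rank (hypothetical helper)."""
    if DATA_BITS_PER_SUBCHANNEL % device_width or ECC_BITS_PER_SUBCHANNEL % device_width:
        # e.g. x16: 8 ECC bits cannot be covered by whole x16 devices in this model
        raise ValueError(f"x{device_width} devices do not tile a 32+8-bit sub-channel")
    data = DATA_BITS_PER_SUBCHANNEL // device_width * SUBCHANNELS_PER_DIMM
    ecc = ECC_BITS_PER_SUBCHANNEL // device_width * SUBCHANNELS_PER_DIMM
    bus_bits = (DATA_BITS_PER_SUBCHANNEL + ECC_BITS_PER_SUBCHANNEL) * SUBCHANNELS_PER_DIMM
    return {"data": data, "ecc": ecc, "total": data + ecc, "bus_bits": bus_bits}


for width in (4, 8):
    print(f"x{width}:", devices_per_rank(width))
```

Under these assumptions, a rank of x4 devices needs 20 DRAMs and a rank of x8 devices needs 10, both forming an 80-bit bus.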

DDR5 DIMMs are a natural progression from DDR4 and earlier DIMMs and give system designers an easy path to upgrade their product offerings. DDR5 DIMMs launched at 4800 MT/s (DDR5-4800), followed by higher speed grades (DDR5-5600, DDR5-6400). New DDR5 features that enable higher performance include a burst length of 16 beats (BL16), improved refresh and precharge schemes, a sub-channel DIMM architecture that improves channel utilization, on-DIMM voltage regulation, more bank groups (8 versus 4 in DDR4 for x4 and x8 devices), and Command/Address on-die termination (ODT).
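One way to see why BL16 pairs with the sub-channel architecture: a 32-bit DDR5 sub-channel running a 16-beat burst moves the same 64 bytes as a 64-bit DDR4 channel running BL8. The sketch below assumes a 64-byte CPU cache line (typical, but not universal) and ignores ECC bits; a DDR5 DIMM then has two such sub-channels that can serve independent requests.

```python
# Sketch: data delivered per burst, DDR4 channel vs. DDR5 sub-channel.
# Assumptions: DDR4 DIMM = one 64-bit channel with BL8;
# DDR5 DIMM = two independent 32-bit sub-channels with BL16 (ECC bits excluded).

def bytes_per_burst(bus_width_bits: int, burst_length: int) -> int:
    """Bytes transferred by one burst on one (sub-)channel."""
    return bus_width_bits * burst_length // 8


ddr4 = {"channels": 1, "width": 64, "bl": 8}
ddr5 = {"channels": 2, "width": 32, "bl": 16}

for name, cfg in (("DDR4", ddr4), ("DDR5", ddr5)):
    per_burst = bytes_per_burst(cfg["width"], cfg["bl"])
    print(f"{name}: {cfg['channels']} independent channel(s), "
          f"{per_burst} bytes per burst each")
```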

How DDR5 Differs from DDR4

• DDR5 was developed to provide significantly higher memory bandwidth, addressing the growing demand for more memory bandwidth per CPU core. To achieve this, DDR5 deliberately accepts higher access latency than DDR4, giving up some access time in exchange for greater throughput. Latency-sensitive workloads that do not need DDR5's additional bandwidth may continue to benefit from DDR4 memory.

• In addition to higher bandwidth, DDR5 incorporates several reliability, availability, and serviceability (RAS) features to maintain signal integrity and channel robustness at elevated speeds. Key enhancements include the Duty Cycle Adjuster (DCA), on-die error correction code (ECC), DRAM receive I/O equalization, Cyclic Redundancy Check (CRC) for both read and write operations, and internal DQS delay monitoring, all of which contribute to improved channel stability and error resilience at high data rates.

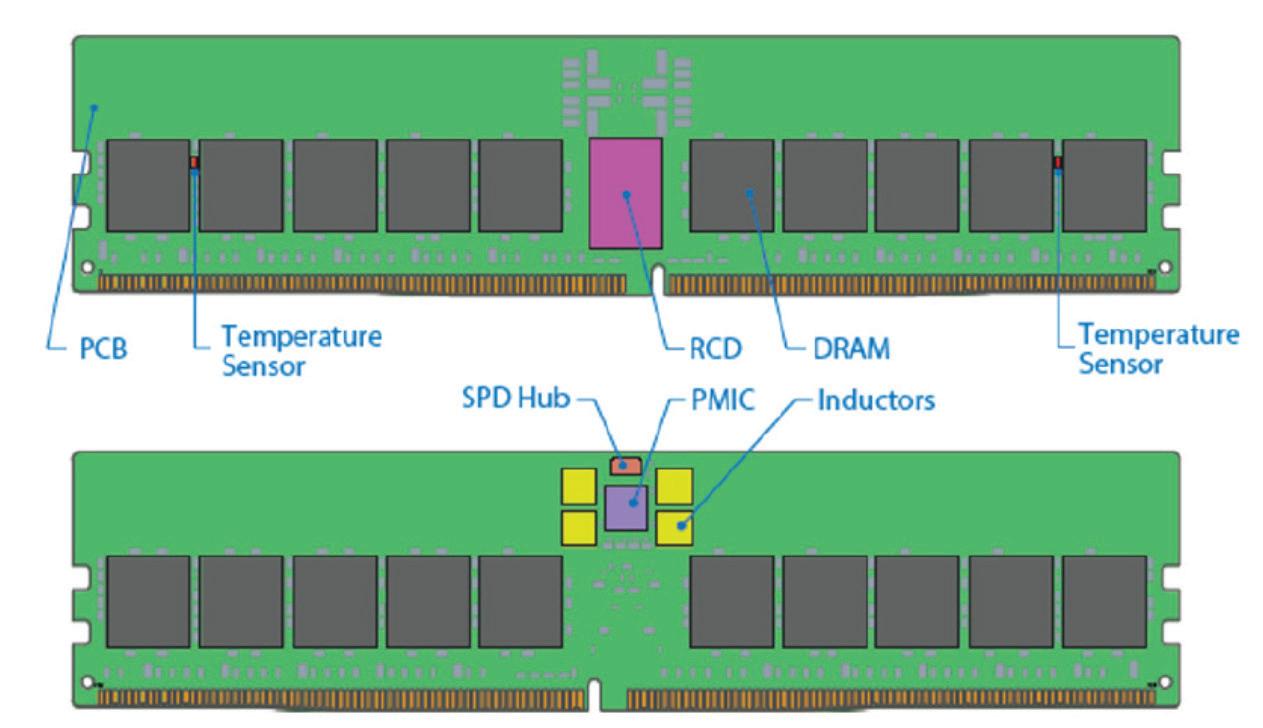

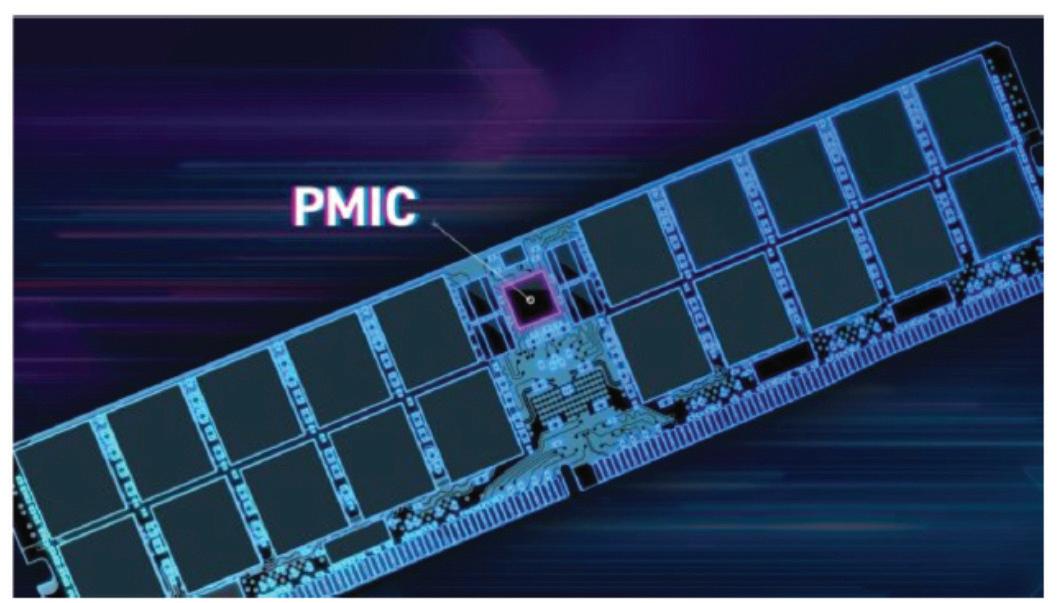

• A critical distinction between DDR4 and DDR5 memory modules lies in the redesigned power architecture, where the Power Management IC (PMIC) has been relocated from the motherboard to the DIMM itself. This on-module voltage regulation enables more precise power delivery, improves overall voltage stability, and enhances signal integrity across the memory module, supporting higher data rates and greater system reliability.

Figure 3: Power architecture of DDR4-based (left) and DDR5-based (right) systems
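On-die ECC in DDR5 is commonly described as single-error correction (SEC) over 128 data bits using 8 check bits. The sketch below uses a textbook Hamming SEC code to show why 8 check bits suffice for 128 data bits and how a single flipped bit is located and corrected. The actual parity-check matrix inside a DDR5 die is vendor-specific and is not modeled; `random` is used only to generate test data.

```python
# Sketch: textbook Hamming single-error-correcting (SEC) code.
# Assumption: 128 data bits protected by 8 check bits, as commonly described
# for DDR5 on-die ECC; the real on-die code layout is not modeled here.
import random


def check_bits_needed(k: int) -> int:
    """Smallest r with 2**r >= k + r + 1 (Hamming bound for SEC)."""
    r = 0
    while (1 << r) < k + r + 1:
        r += 1
    return r


def encode(data: list[int]) -> list[int]:
    """Place data at non-power-of-two positions, parity at powers of two."""
    r = check_bits_needed(len(data))
    n = len(data) + r
    code = [0] * (n + 1)            # 1-indexed; index 0 unused
    bits = iter(data)
    for pos in range(1, n + 1):
        if pos & (pos - 1):         # not a power of two -> data bit
            code[pos] = next(bits)
    for i in range(r):
        p = 1 << i
        # Parity bit p makes the XOR over all positions containing bit p zero.
        for pos in range(1, n + 1):
            if pos & p and pos != p:
                code[p] ^= code[pos]
    return code[1:]


def syndrome(codeword: list[int]) -> int:
    """XOR of the 1-based positions of all set bits; 0 means no error."""
    s = 0
    for pos, bit in enumerate(codeword, start=1):
        if bit:
            s ^= pos
    return s


def decode(codeword: list[int]) -> tuple[list[int], int]:
    """Correct a single-bit error (if any) and return (data, syndrome)."""
    s = syndrome(codeword)
    fixed = list(codeword)
    if 0 < s <= len(fixed):
        fixed[s - 1] ^= 1           # flip the bit the syndrome points at
    data = [b for pos, b in enumerate(fixed, start=1) if pos & (pos - 1)]
    return data, s


random.seed(0)
data = [random.randint(0, 1) for _ in range(128)]
word = encode(data)
print(len(word), check_bits_needed(128))   # 136 bits, 8 of them check bits

word[50] ^= 1                               # inject a single-bit error
recovered, s = decode(word)
print(s, recovered == data)
```

The syndrome equals the position of the flipped bit, which is what lets the decoder correct it without storing a second copy of the data.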

DDR4 vs. DDR5 at a glance: DDR4 offers a simpler power architecture, lower latency, and moderate bandwidth for standard workloads, whereas DDR5 provides on-DIMM power management, higher bandwidth, advanced RAS features, and support for high-density DIMMs, making it well suited to high-performance, data-intensive systems.
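The latency-for-bandwidth trade-off can be made concrete by converting CAS latency from clock cycles to nanoseconds. The sketch below assumes example speed bins of DDR4-3200 at CL22 and DDR5-4800 at CL40 (actual modules vary) and 64 data bits per DIMM for peak bandwidth.

```python
# Sketch: absolute CAS latency vs. peak bandwidth, DDR4 vs. DDR5.
# Assumed CL values: DDR4-3200 at CL22, DDR5-4800 at CL40 (example bins only).
# Peak bandwidth assumes 64 data bits per DIMM (ECC bits excluded).

def cas_latency_ns(cl_cycles: int, data_rate_mts: int) -> float:
    """CL is counted in clock cycles; the clock runs at half the data rate."""
    clock_mhz = data_rate_mts / 2
    return cl_cycles / clock_mhz * 1000   # cycles / MHz = microseconds -> ns


def peak_bandwidth_gbs(data_rate_mts: int, data_bits: int = 64) -> float:
    """Peak transfer rate times bus width, in GB/s (10^9 bytes/s)."""
    return data_rate_mts * data_bits / 8 / 1000


for name, rate, cl in (("DDR4-3200", 3200, 22), ("DDR5-4800", 4800, 40)):
    print(f"{name}: {cas_latency_ns(cl, rate):.2f} ns CAS, "
          f"{peak_bandwidth_gbs(rate):.1f} GB/s peak")
```

With these assumed bins, DDR5 adds a few nanoseconds of CAS latency while raising peak bandwidth by 50%, which is the trade-off described above.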

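Table 1: Key feature differences between DDR4 and DDR5

| Feature | DDR4 | DDR5 | Impact |
|---|---|---|---|
| Power architecture | Voltage regulation on the motherboard | PMIC on the DIMM | On-DIMM voltage regulation improves power distribution, stability, and signal integrity |
| Data rate | Up to 3200 MT/s | 4800 MT/s and above (up to 8400 MT/s+) | More memory throughput per CPU core |
| Latency | Lower | Higher (deliberate trade-off) | Latency compensated by increased bandwidth |
| RAS features | Basic | Advanced (DCA, on-die ECC, DRAM I/O equalization, CRC for RD/WR, internal DQS monitoring) | Channel robustness and error resilience at high speeds |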

DDR DRAM Speed Migration Path

The evolution of DRAM speeds illustrates the industry’s ongoing effort to balance performance scaling with system constraints.

• The move from DDR4-3200 to DDR5-4800 delivered roughly a 50% increase in peak data rate at launch, with slightly higher latency but significantly higher throughput per core. Later DDR5 speed grades, such as DDR5-6400, introduced Electrically Induced Physical Damage (EIPD) protection and support for high-density 64 Gb DRAMs, offering lower-cost alternatives to stacked designs based on through-silicon vias (TSVs).

• High-speed DDR5-8000 RDIMMs target 1 DIMM-per-channel (1DPC) configurations to maximize bandwidth, trading off some capacity flexibility. Looking ahead, DDR5 RDIMMs are projected to reach around 9.2 GT/s by end of life (EOL), while multiplexed-rank DIMMs (MRDIMMs) could extend to 14.4–17 GT/s.

• DDR6 Gen1, expected at 16 GT/s, would be roughly a 74% uplift over the projected 9.2 GT/s DDR5 RDIMM EOL rate, aligning with CPU roadmap requirements. This speed migration path highlights both opportunity and challenge: each step increases memory performance but also drives higher power consumption and greater system design complexity.
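Each step in this migration path is a simple ratio of peak transfer rates. The sketch below recomputes the uplift for the transitions mentioned above, using only the rates quoted in the text (several are projections, not measurements). Peak transfer rate stands in for bandwidth here, not for application-level performance.

```python
# Sketch: generation-to-generation uplift as a ratio of peak transfer rates.
# Rates in MT/s, taken from the text; EOL and DDR6 figures are projections.
steps = [
    ("DDR4-3200 -> DDR5-4800", 3200, 4800),
    ("DDR5-4800 -> DDR5-6400", 4800, 6400),
    ("DDR5 RDIMM EOL -> DDR6 Gen1", 9200, 16000),
]


def uplift_percent(old_mts: int, new_mts: int) -> float:
    """Percentage increase in peak transfer rate from old to new."""
    return (new_mts / old_mts - 1) * 100


for label, old, new in steps:
    print(f"{label}: {uplift_percent(old, new):.0f}% ({new / old:.2f}x)")
```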

Integral Role of PMIC in DDR5 DIMM Design

• In DDR5, integrating the Power Management IC (PMIC) on the module marks a major shift in memory architecture, moving voltage regulation from the motherboard to the DIMM itself. This change is critical because DDR5 operates at a lower voltage (1.1 V vs. 1.2 V in DDR4) but with higher current demands, requiring stable and efficient local power delivery to sustain data rates of several gigatransfers per second. Because the PMIC tracks its output currents, the DIMM's power consumption can also be calculated more accurately across the module's various operating modes.

• The PMIC improves signal integrity by reducing voltage ripple and noise, enables fine-grained per-DIMM power regulation, and enhances efficiency by tailoring supply levels to workload conditions. It also manages power sequencing, transient response, and telemetry, ensuring reliable operation even at high densities where capacities can exceed 256 GB per DIMM.

• For system architects, this decentralization simplifies motherboard VRM (Voltage Regulator Module) design but shifts thermal and monitoring considerations onto the module. Overall, the PMIC is a cornerstone innovation in DDR5, enabling higher bandwidth, better energy efficiency, and scalability to meet the demands of modern AI, cloud, and high-performance computing systems.
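Because the PMIC sits on the DIMM, module power can be estimated from its per-rail output readings. The sketch below sums V × I over the output rails and divides by a conversion efficiency to estimate the PMIC input power. The rail names and voltages follow the nominal DDR5 supplies (VDD and VDDQ at 1.1 V, VPP at 1.8 V), but the currents and the 90% efficiency are hypothetical placeholders, not datasheet or measured values.

```python
# Sketch: estimating DDR5 DIMM power from PMIC output-rail readings.
# Nominal DDR5 rail voltages; currents and efficiency are HYPOTHETICAL placeholders.
from dataclasses import dataclass


@dataclass
class Rail:
    name: str
    volts: float
    amps: float   # would come from PMIC telemetry; placeholder values below

    @property
    def watts(self) -> float:
        return self.volts * self.amps


def dimm_input_power(rails: list[Rail], efficiency: float) -> float:
    """PMIC input power = total output power / conversion efficiency."""
    if not 0 < efficiency <= 1:
        raise ValueError("efficiency must be in (0, 1]")
    return sum(r.watts for r in rails) / efficiency


rails = [
    Rail("VDD", 1.1, 4.0),    # hypothetical current
    Rail("VDDQ", 1.1, 2.0),   # hypothetical current
    Rail("VPP", 1.8, 0.5),    # hypothetical current
]
out_w = sum(r.watts for r in rails)
in_w = dimm_input_power(rails, efficiency=0.90)   # assumed 90% efficiency
print(f"output {out_w:.2f} W, input {in_w:.2f} W, PMIC loss {in_w - out_w:.2f} W")
```

The difference between input and output power is the regulation loss that now appears on the module rather than on the motherboard, which is why thermal considerations shift onto the DIMM.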

From Motherboard VRMs to On-DIMM PMICs: How DDR5 Redefines Idd

As outlined earlier, the key difference between DDR4 and DDR5 lies in where voltage regulation occurs: it has shifted from the motherboard to the DIMM itself. This architectural change makes Idd values less relevant at the module level, since they are no longer the primary basis for estimating power consumption.

In DDR4 and earlier generations, Idd values were essential for module-level design because voltage regulation was handled on the motherboard. With DDR5, however, PMICs mounted on the module manage voltage regulation directly on the DIMM. This changes the methodology:

• Module datasheets specify “PMIC input current”, not DRAM Idd currents.

• Power must be calculated across the “PMIC-managed rails”, not by summing Idd.

• Idd still exists for individual DRAM chips but is used mainly by DRAM vendors, not system designers.
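The two methodologies can be sketched side by side. In the DDR4-style estimate, module power for one operating mode is built up from a per-device Idd value times VDD times the device count; in the DDR5-style estimate, it is the PMIC input current from the module datasheet times the PMIC input voltage. All currents below are hypothetical placeholders, the DDR4 model ignores VPP and register power, and the 12 V input assumes a server RDIMM (client DDR5 modules typically use a 5 V input).

```python
# Sketch: two ways to estimate module power for one operating mode.
# All currents are HYPOTHETICAL placeholders, not datasheet values.

def ddr4_module_power(idd_ma: float, vdd: float, devices: int) -> float:
    """DDR4-style: per-device Idd (mA) summed across the module, times VDD.
    Simplified: ignores IPP/VPP and register/buffer power."""
    return devices * (idd_ma / 1000) * vdd


def ddr5_module_power(pmic_input_a: float, input_volts: float = 12.0) -> float:
    """DDR5-style: PMIC input current times input voltage (12 V assumed)."""
    return pmic_input_a * input_volts


# 18 devices, e.g. one rank of x4 DRAMs on a 72-bit DDR4 ECC DIMM
print(f"DDR4 estimate: {ddr4_module_power(idd_ma=150, vdd=1.2, devices=18):.2f} W")
print(f"DDR5 estimate: {ddr5_module_power(pmic_input_a=0.7):.2f} W")
```

The two numbers describe unrelated hypothetical modules and are not a comparison; the point is that the DDR5 estimate needs no knowledge of individual DRAM currents.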

Why Higher Speed DDR5 DIMMs Draw More Power

1. Increased Switching Activity

• Dynamic power in CMOS circuits scales approximately as P = αCV²f: linearly with switching frequency and with the square of the supply voltage. DDR5 operates at 4800–8400 MT/s, significantly higher than DDR4 (2133–3200 MT/s), so more frequent transistor toggling leads directly to higher dynamic power consumption, only partly offset by DDR5's lower supply voltage.

2. Higher Density DRAM Cells

• DDR5 supports higher per-chip densities than DDR4, commonly 16 Gb to 24 Gb and up to 64 Gb. Larger banks and arrays require more refresh operations and background current, increasing standby power.

3. Additional On-Die Features

• On-die ECC and advanced error detection add extra logic circuits, raising both static and dynamic power.

4. Power Management IC (PMIC) Overhead

• DDR5 DIMMs integrate a PMIC for local voltage regulation. While beneficial for signal integrity and fine-grained control, the PMIC itself introduces a small efficiency penalty.
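First-order CMOS dynamic power follows P = αCV²f, which allows a back-of-the-envelope comparison. The sketch below assumes that the switching activity α and capacitance C stay the same, that frequency scales with data rate, and that dynamic switching power dominates. It scales power from DDR4-3200 at 1.2 V to DDR5-6400 at 1.1 V and compares the result with the bandwidth ratio. Real module power also includes refresh, background current, on-die ECC logic, and PMIC losses, none of which are modeled.

```python
# Sketch: first-order dynamic-power scaling, P = alpha * C * V^2 * f.
# Assumptions: alpha and C unchanged between generations; frequency scales with
# data rate; only dynamic switching power is modeled.

def relative_dynamic_power(v_old: float, f_old: float,
                           v_new: float, f_new: float) -> float:
    """Ratio P_new / P_old when alpha and C are held constant."""
    return (v_new / v_old) ** 2 * (f_new / f_old)


power_ratio = relative_dynamic_power(1.2, 3200, 1.1, 6400)   # DDR4-3200 -> DDR5-6400
bandwidth_ratio = 6400 / 3200
print(f"power x{power_ratio:.2f}, bandwidth x{bandwidth_ratio:.2f}, "
      f"bandwidth per watt x{bandwidth_ratio / power_ratio:.2f}")
```

Under these simplifying assumptions, doubling the data rate raises dynamic power by about 1.68× rather than 2×, because the voltage drop from 1.2 V to 1.1 V enters squared. Bandwidth per watt therefore improves even though absolute power rises.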

Why This Trend is Still Beneficial for the Memory Industry

1. Performance per Watt Gains

• Although absolute power increases, bandwidth per watt improves compared to DDR4. For example, moving from DDR4-3200 to DDR5-6400 nearly doubles bandwidth while power rises by a smaller factor.

2. Support for Data-Intensive Workloads

• AI, machine learning, high-performance computing (HPC), and cloud workloads demand massive memory throughput. DDR5 lets these markets scale, which in turn expands opportunities for DRAM vendors.

3. Market Differentiation and Innovation

• The shift to DDR5 allows memory manufacturers to introduce new low-power variants, RDIMMs, and server-focused DIMMs, creating segmentation and revenue opportunities.

4. Foundation for DDR6 and Beyond

• DDR5 establishes an architecture with local PMICs and advanced signaling, laying the groundwork for future generations that will demand even more bandwidth and energy efficiency.