
Factfulness Meets Superforecasting

By Ed McKinley

Two movements aim to nudge society toward a more data-driven search for truth and probability

The theater reverberated with laughter and applause as a Swedish physician put a humorous spin on a profound message. Paradoxically, he was capturing the crowd’s imagination and winning their affection by revealing their ignorance.

“In the last 20 years, how did the percentage of people in the world who live in extreme poverty change?” the late Hans Rosling asked the audience at one of his TED Talks. He defined extreme poverty as “not having enough food for the day.”

His listeners entered their estimates on handheld devices offering three options: extreme poverty had doubled, had stayed about the same or had been cut in half.

While their choices were being tabulated, Rosling announced that only 5% of Americans had responded correctly in a previous iteration of the test. Yes, 95% didn’t know the percentage of the population in extreme poverty had fallen by 50% in just two decades.

But the audience at this particular TED Talk had done much better, he told the group. Thirty-two percent got it right, nearly equaling the 33⅓% a group of chimpanzees would score by picking answers at random.
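The chimpanzee benchmark is simple expected-value arithmetic: with three options and one right answer, a random guesser is right one time in three. A minimal Python sketch, assuming every option is equally likely to be picked, checks that by simulation:

```python
import random

def random_guess_accuracy(n_options: int, trials: int, seed: int = 0) -> float:
    """Share of questions answered correctly by picking one of n_options uniformly at random."""
    rng = random.Random(seed)  # fixed seed so the run is repeatable
    correct = 0
    for _ in range(trials):
        # Treat option 0 as the right answer; the guesser picks any option with equal odds.
        if rng.randrange(n_options) == 0:
            correct += 1
    return correct / trials

expected = 1 / 3  # exact expectation with three options
simulated = random_guess_accuracy(n_options=3, trials=100_000)
print(f"expected {expected:.1%}, simulated {simulated:.1%}")
```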

This was their introduction to what Rosling called “Factfulness,” a crusade to combat false notions of how the world works.

Accurate forecasting begins with reliable data. That’s why this special section of Luckbox combines two of our favorite movements: Factfulness and Superforecasting.

Think of Factfulness as the quest to banish wrong-headed but commonly held misconceptions about the world and replace them with the truth. It wipes the slate clean for making useful predictions.

Superforecasting begins with a foundation of truth and applies scientifically proven techniques to establish the probability of certain outcomes—instead of guessing what may happen. Factfulness can be a good place to start.

Readers who want to hone their prognostication skills can pursue Factfulness and Superforecasting in the pages of two books. One is called Factfulness: Ten Reasons We’re Wrong About the World – and Why Things Are Better Than You Think, and the other is titled Superforecasting: The Art and Science of Prediction.

Both books are family affairs.

ALL IN THE FAMILIES

The late Hans Rosling, a professor of international health at Stockholm’s Karolinska Institute, cowrote Factfulness with his son, Ola Rosling, and daughter-in-law, Anna Rosling Rönnlund.

In a different time and place, Philip Tetlock, a professor at The Wharton School of the University of Pennsylvania, wrote Superforecasting with journalist Dan Gardner. They based the book on research Tetlock performed with his wife, Barbara Mellers, who’s also a Wharton professor.

Both books have earned praise in high places. Billionaire Bill Gates called Factfulness “one of the most important books I’ve ever read.” The Wall Street Journal lauded Superforecasting as “the most important book on decision-making since Daniel Kahneman’s Thinking, Fast and Slow.”

So, exactly how did these scholarly families develop their approaches to wisdom in discussions around dinner tables 4,000 miles apart?

ORIGIN STORIES

The idea of Factfulness was hatched to address a vexing problem Hans Rosling was facing. His daughter-in-law, Rönnlund, described its genesis to Luckbox.

“He was working in global health and trying to teach the students a bigger picture of global trends and proportions, so they would understand what the world was like,” she said. “He was always frustrated that he didn’t manage to give them the proper overview.”

Meanwhile, on another continent, Superforecasting grew out of an academic undertaking called the Good Judgment Project. It competed in a series of forecasting tournaments sponsored by the Intelligence Advanced Research Projects Activity (IARPA), a research arm of the U.S. intelligence community, which pitted research teams against each other to see which could arrive at the most accurate forecasts.

Although the two movements began with differing goals, they arrived at some similar conclusions. Both rediscovered a venerable piece of wisdom—the critical importance of humility.

AVOIDING OVERCONFIDENCE

Discouraging people from thinking they know more than they do became one of the chief goals of the Factfulness book and of the Gapminder Foundation, which the family formed to gather and share the data that informs Factfulness, Rönnlund said.

“The whole book,” she declared, “is about becoming more humble about what we know about the world and make us realize that we need to spend a little time actually checking the numbers before we trust our gut feeling when we make decisions.”

Overconfidence in a set of facts can also undermine group decision-making, noted Mellers, who’s been instrumental in the foundational and ongoing research that underlies Superforecasting.

“When you don’t know the people you’re dealing with, you use the confidence they express in their answer as a cue to knowledge,” she said. “If that confidence is uncorrelated to accuracy, it can take you south.”

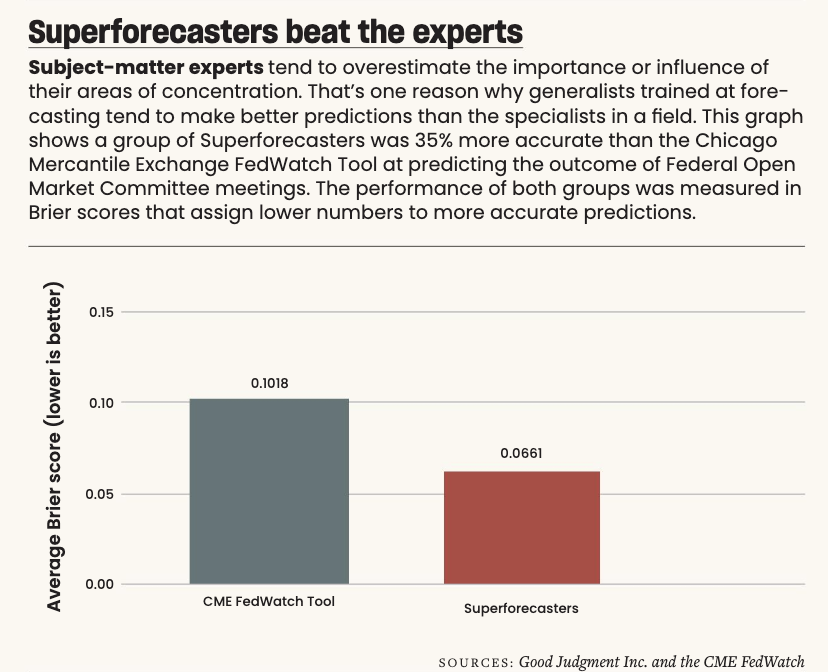

Research in both the Factfulness and Superforecasting camps also indicates experts exhibit a strong tendency to overestimate the importance of whatever is happening in their areas of specialization. Nuclear physicists, for example, tend to overstate the probability of nuclear war.

So, let’s keep humility in mind as we look more closely at how the two movements have addressed interrelated problems.

FACTFULNESS

The Rosling family identified 10 “instincts” that prevent us from seeing the world as it really exists. They called the two most important the “gap instinct” and the “negativity instinct.”

“When we’re talking about the gap instinct, we have the tendency to always talk about the extremes and the outliers and we forget the majority,” Rönnlund said.

Most people, and most countries, fall somewhere along a continuum between the poles, not at the ends of the spectrum. That fact bears directly upon the outdated view so many cling to about the distribution of wealth in the world.

Fifty years ago, most of the planet’s population lived in either extreme poverty or relative wealth. Plotted on a chart, people tended to fall into either the rich group at one end or the poor group at the other—with a wide gap in between.

These days, the chart looks more like a continuous spread, with most people somewhere in the middle instead of at the extremes. It changed because of great strides in many parts of the world.

Luckbox views the gap instinct as a liability in financial commentary. Pundits issue endless predictions of bear or bull markets, even though conditions fall between those extremes 90% of the time.

Perceiving such gaps where they don’t exist hampers decision-making, Rönnlund cautioned. “If we’re going to be more data-driven, then we need to focus on the majority, rather than the few outliers,” she asserted.
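Spotting a false gap mostly means counting who actually sits where. A minimal sketch, using hypothetical population counts (in billions) for four income brackets ordered from poorest to richest, reports the share of people who fall between the two extremes:

```python
def middle_share(counts: list[float]) -> float:
    """Fraction of the total that falls outside the first (poorest) and last (richest) brackets."""
    if len(counts) < 3:
        raise ValueError("need at least three brackets to have a middle")
    return sum(counts[1:-1]) / sum(counts)

# Hypothetical counts in billions of people, ordered from poorest to richest bracket.
brackets = [1.0, 3.0, 2.0, 1.0]
print(f"{middle_share(brackets):.0%} of people sit between the two extremes")
```

With these made-up numbers, the majority lives in the middle brackets, which is exactly the group the gap instinct tends to overlook.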

Negativity, another instinct that impairs decision-making, tends to come naturally to many of us because of what constitutes “news,” Rönnlund said.

“Even though we might have a positive trend overall, the only thing people hear about will be the dips,” she noted. “Something may be on the rise or getting better and better, and we think that it is getting worse and worse.”

Besides the negativity instinct and the previously mentioned gap instinct, five of the instincts have more or less self-explanatory names: fear, size, generalization, blame and urgency.

The remaining three might require definitions. They are “straight line,” which conveys the false impression that a trend will continue unchecked forever; “destiny,” which implies an unalterable fate; and “single perspective,” which results from relying on just one source of information.
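The straight-line instinct is easiest to see by extrapolating a trend that eventually levels off. A minimal sketch, assuming a hypothetical logistic (S-shaped) curve stands in for the real trend, extends a line through two points taken during the curve's steepest stretch and compares it with where the curve actually goes:

```python
import math

def logistic(t: float, ceiling: float = 100.0, rate: float = 0.5, midpoint: float = 10.0) -> float:
    """Hypothetical S-shaped trend that rises fastest at `midpoint` and levels off at `ceiling`."""
    return ceiling / (1 + math.exp(-rate * (t - midpoint)))

def straight_line_forecast(t0: float, t1: float, target: float) -> float:
    """Extend the line through the trend's values at t0 and t1 out to time `target`."""
    y0, y1 = logistic(t0), logistic(t1)
    slope = (y1 - y0) / (t1 - t0)
    return y1 + slope * (target - t1)

# Fit the line during the steep middle of the curve, then look 10 steps ahead.
line = straight_line_forecast(9.0, 10.0, 20.0)
actual = logistic(20.0)
print(f"straight line says {line:.1f}, the S-curve says {actual:.1f}")
```

The line sails past the curve's ceiling, while the trend itself flattens out just below it.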

Does subduing those instincts promote accuracy? Editors at The Washington Post apparently think it does, as evidenced by the paper’s decision to work with the Gapminder Foundation to use Factfulness to refine its newsgathering.

Plus, it’s our contention at Luckbox that keeping those 10 instincts at bay to achieve a state of Factfulness could serve as a basis for becoming a lot more like a Superforecaster.

SUPERFORECASTING

The Good Judgment Project, which began in 2011, has attracted tens of thousands of volunteers eager to hone their forecasting skills. The top 2% displayed the prowess to earn the Superforecaster designation, and tests show they’re 30% better than government intelligence experts at making accurate predictions.

These days, an extension of the project is continuing to take the guesswork out of predictions, thanks to the collaboration of four professors working on something they call the BIN model of forecasting. The model improves predictions by helping would-be prophets identify and deal with three components of forecasting: bias, information and noise. Hence, the B-I-N designation.

Let’s examine each of those factors as understood through research led by Ville A. Satopää, a professor at INSEAD, a graduate business school with campuses in several countries. He has worked on the research with Tetlock, Mellers and Marat Salikhov, a professor at the New Economic School in Moscow.

Information: The more facts we have, the better our forecasting. With no information, it’s best to predict a 50% chance an event will occur, meaning we simply don’t know. But as intel flows in and indicates a certain event will or will not happen, we can place ever-greater probability on the outcome.

The more evidence we gather that something will occur, the closer we are to assigning a probability of 100%. But if the information we’re compiling makes it more and more likely an event won’t take place, we can assign a probability closer to 0%.
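How far should each new fact move a forecast? One standard tool, swapped in here for illustration rather than taken from the BIN research, is Bayes’ rule in odds form. A minimal sketch, assuming each piece of evidence can be summarized by a hypothetical likelihood ratio (how much more likely the evidence is if the event will happen than if it won’t):

```python
import math

def update(prob: float, likelihood_ratios: list[float]) -> float:
    """Bayes' rule in odds form: posterior odds = prior odds times each likelihood ratio."""
    if not 0 < prob < 1:
        raise ValueError("prior must be strictly between 0 and 1")
    log_odds = math.log(prob / (1 - prob))
    for lr in likelihood_ratios:
        log_odds += math.log(lr)  # lr > 1 favors the event, lr < 1 argues against it
    return 1 / (1 + math.exp(-log_odds))

start = 0.5  # no information: even odds
print(f"three clues for the event:     {update(start, [2.0, 2.0, 2.0]):.0%}")
print(f"three clues against the event: {update(start, [0.5, 0.5, 0.5]):.0%}")
```

Each clue here doubles or halves the odds, so the forecast moves toward 100% or 0% without ever reaching either; certainty would take overwhelming evidence.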

So, information is the stuff of good predictions. But what interferes with good forecasting?

Bias: Forecasting errors that occur in a systematic way are called bias. Positive bias makes us forecast too high a probability of something happening, while negative bias pushes us to assign a probability too low.

As mentioned earlier, experts often show signs of bias in their forecasts because they place too high a value on the significance of their fields of study.
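Because bias is systematic, it shows up in averages. A minimal sketch, a simple calibration check rather than the BIN model’s own estimator, compares a forecaster’s average probability with how often the events actually occurred (1 = happened, 0 = didn’t), using hypothetical numbers. A positive result points to positive bias; a negative one, to negative bias:

```python
def mean_bias(forecasts: list[float], outcomes: list[int]) -> float:
    """Average forecast probability minus the observed frequency of the events."""
    if len(forecasts) != len(outcomes) or not forecasts:
        raise ValueError("need equal-length, non-empty lists")
    avg_forecast = sum(forecasts) / len(forecasts)
    hit_rate = sum(outcomes) / len(outcomes)
    return avg_forecast - hit_rate

# Hypothetical expert who says 80% on five questions in their field; only two events occurred.
print(f"bias: {mean_bias([0.8] * 5, [1, 0, 1, 0, 0]):+.2f}")
```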

Noise: The other impediment to good forecasting is noise. It differs from bias because it’s not systematic. Instead, it occurs at random.

If three financial analysts review the same accurate report and come away with three different conclusions, that’s an example of noise.
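Noise, by contrast, shows up as disagreement among forecasts built on the same information. A minimal sketch using Python’s standard `statistics` module and three hypothetical analysts’ probability estimates for the same event measures it as the standard deviation of their answers:

```python
import statistics

def noise(estimates: list[float]) -> float:
    """Population standard deviation of estimates made from the same information."""
    return statistics.pstdev(estimates)

# Hypothetical: three analysts read the same report about the same event.
analysts = [0.30, 0.50, 0.70]
print(f"average {statistics.mean(analysts):.2f}, spread {noise(analysts):.3f}")
```

The average alone would hide the disagreement, which is why noise has to be measured separately from bias.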

THE BIN MODEL

The four professors working on the BIN model studied what separates Superforecasters from the rest of us.

They found that the elite group’s edge in making accurate predictions breaks down this way: 50% of their advantage comes from eliminating noise, 25% from setting aside bias and 25% from having more information.

Three so-called “interventions” can enhance the good factors and minimize the bad.

First, almost any forecaster can be taught to think more probabilistically, avoid overconfidence and seek information from reliable sources—like prediction markets and scientific polls.

Second, Superforecasters can be brought together to tap into the “wisdom of the crowd,” which the professors liken to putting them on intellectual steroids.

Third, forecasts can be tracked as an event draws nearer. Over time, bias decreases, information increases and noise remains about the same.
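How would anyone know whether these interventions pay off? Tetlock’s research scores forecasts with the Brier score, the mean squared gap between a probability and what actually happened (1 or 0), where lower is better. A minimal sketch, using the common binary form of that score and hypothetical numbers throughout, touches each intervention in turn: overconfidence raises the score; a crowd forecast is an average that can optionally be “extremized” by scaling its log-odds with an assumed exponent `a` (an approach Good Judgment Project researchers have studied); and a question can be scored every day it stays open, assuming a forecast stands until it is revised:

```python
import math

def brier(forecasts: list[float], outcomes: list[int]) -> float:
    """Binary Brier score: mean squared gap between probabilities and 0/1 outcomes (lower is better)."""
    if len(forecasts) != len(outcomes) or not outcomes:
        raise ValueError("need equal-length, non-empty lists")
    return sum((f - o) ** 2 for f, o in zip(forecasts, outcomes)) / len(outcomes)

def crowd_forecast(probs: list[float], a: float = 1.0) -> float:
    """Average the crowd, then extremize by scaling the log-odds by `a` (a = 1 means no change)."""
    mean = sum(probs) / len(probs)
    if mean in (0.0, 1.0):
        return mean  # log-odds are undefined at the extremes
    log_odds = math.log(mean / (1 - mean))
    return 1 / (1 + math.exp(-a * log_odds))

def mean_daily_brier(updates: list[tuple[int, float]], open_days: int, outcome: int) -> float:
    """Score every day a question is open, carrying each forecast forward until it is revised.

    `updates` holds (day, probability) pairs sorted by day, starting on day 0.
    """
    if not updates or updates[0][0] != 0:
        raise ValueError("need a forecast on day 0")
    total, current, i = 0.0, updates[0][1], 0
    for day in range(open_days):
        while i < len(updates) and updates[i][0] == day:
            current = updates[i][1]  # a revision made on this day replaces the old forecast
            i += 1
        total += (current - outcome) ** 2
    return total / open_days

# 1. Overconfidence costs points: ten hypothetical events, seven of which happened.
outcomes = [1] * 7 + [0] * 3
print(f"says 95% every time: {brier([0.95] * 10, outcomes):.4f}")
print(f"says 70% every time: {brier([0.70] * 10, outcomes):.4f}")

# 2. Hypothetical team forecasts for one question, averaged and then extremized.
team = [0.6, 0.7, 0.65, 0.75, 0.8]
print(f"crowd {crowd_forecast(team):.3f}, extremized {crowd_forecast(team, a=2.0):.3f}")

# 3. A forecaster who updates as the event nears (it did happen, so outcome = 1).
history = [(0, 0.5), (5, 0.7), (8, 0.9)]
print(f"mean daily Brier {mean_daily_brier(history, open_days=10, outcome=1):.3f}")
```

The calibrated forecaster who says 70% beats the one who says 95% on the same seven-in-ten record, and the forecaster who updates as information arrives scores better than one who sits at 50% the whole time.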

But then there’s another approach altogether.

HUMANS OR MACHINES

Relying on an algorithm instead of human Superforecasters eliminates the problems of headaches, moods and all sorts of inconsistencies.

But the AI approach also has drawbacks, Mellers noted.

“How would you feel if an algorithm decided if you should be charged with a crime?” Mellers asked rhetorically. That nightmare of legal jeopardy illustrates why the future of forecasting belongs to a hybrid of human and machine, she maintains.

While awaiting that symbiosis, we can take individual or group action to improve our forecasting. And organizations based on Factfulness and Superforecasting are there to help.

IMPROVE YOUR FORECASTS

Individually, those seeking to make better predictions can avail themselves of the wisdom between the covers of the Factfulness and Superforecasting books.

Or they can take the additional step of contacting the organizations created by the movements. Factfulness is promoted by the Gapminder Foundation, and Superforecasting’s results and methods are spread by Good Judgment Inc.

The nonprofit Gapminder Foundation has been amassing reliable data and pushing aside falsity since its inception in 2005. A year later, Hans Rosling began sharing its optimistic but realistic vision in TED Talks. The foundation’s website is brimming with innovative visual aids that make its facts easier to understand, remember and apply. The foundation also makes Factfulness speakers available to groups and companies.

The Good Judgment Project at Wharton spun off a commercial enterprise called Good Judgment Inc. to offer forecasts and forecasting training. It provides daily updates on the future of political, economic and social issues. Partners and clients include corporations and government agencies. Luckbox recommends its two-day forecasting course.

Factfulness and Superforecasting both aim to create a better world by convincing the population to think mathematically and keep their facts straight. But will it ever happen?

“It will be many slow steps before we have people being totally data-literate,” said Rönnlund. “We need to find a way where most people realize or learn in school that some basic statistical thinking or data literacy is more or less necessary to make proper decisions in life and at work.”

Facts Banish Folk Tales

We’re often wrong about the world simply because we don’t keep up with the times, and these two graphics from the Factfulness book illustrate the problem.

Many of us divide the planet into two camps—the developed world and the developing world. Let’s analyze that viewpoint by considering two propositions we know to be true.

Families in poorer countries tend to have lots of children but many die early, while people in richer nations have fewer children and most live to reach adulthood or even old age.

The illustration below that’s marked 1965 reflects data from that year, a time when the perception of a bifurcated planet made sense. The circles represent countries, and their size corresponds with their population. Clearly, the distribution of the circles shows that when it came to childbearing and child survival, most countries in those days were plainly either developed or developing.

But much has changed since then. People in most places are giving birth to fewer babies these days, and those children are more often growing up and surviving to a ripe old age.

That’s why the illustration marked 2017, which reflects data from that year, shows that most countries now qualify as developed or have progressed toward that status.
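Reading the charts comes down to a population-weighted count, since each circle’s size reflects how many people live there. A minimal sketch, assuming hypothetical country records and hypothetical cutoffs for the “developing” corner of the chart (many babies per woman, low child survival; these are placeholders, not the book’s definitions), reports what share of people live in that corner:

```python
from dataclasses import dataclass

@dataclass
class Country:
    name: str
    population_millions: float
    babies_per_woman: float
    child_survival_pct: float  # assumed measure: % of children surviving to age 5

def developing_box_share(countries: list[Country], min_babies: float = 5.0,
                         max_survival_pct: float = 95.0) -> float:
    """Population-weighted share of countries with many births and low child survival.

    Both cutoffs are hypothetical placeholders, not the book's definitions.
    """
    total = sum(c.population_millions for c in countries)
    in_box = sum(
        c.population_millions
        for c in countries
        if c.babies_per_woman > min_babies and c.child_survival_pct < max_survival_pct
    )
    return in_box / total

# Hypothetical countries; population plays the role of the chart's circle sizes.
world = [
    Country("A", 100.0, 6.0, 80.0),
    Country("B", 300.0, 2.0, 99.0),
    Country("C", 100.0, 3.0, 96.0),
]
print(f"{developing_box_share(world):.0%} of people live in the 'developing' box")
```

Running the same count on each year’s data is one way to see how the share in that corner has shrunk.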

So, the recent data discredits the outmoded idea of a world neatly divided into two groups—one of richer countries and another of poorer ones. What’s more, the seemingly insurmountable gap between the two categories has disappeared.

The numbers also dispel the cynical notion of a planet in decline. Contrary to out-of-date beliefs, the inhabitants of Planet Earth are becoming richer, healthier and better educated.