
PEMAC CERTIFICATIONS

Congratulations

The following professionals have received PEMAC recognition between August 1, 2020 and July 31, 2021.

Maintenance Management Professional (MMP) Certification

Abbas Zanjani Alexander Inglis Alexandre Crépeau Alkesh Kotia Andy Jones Anthonio Nader Anthony N. Mwangi Barry Dellaire Benedicto Malaso Benjamin Bandola Brendon Binns Bruce MacLennan Chris Cooper Christine Abejo Christine Sumbler-Brasz Cliff Williams Cody Munford Curtis Hornsby Daniel Pellan Darcy Moore Darren Jones Daryl Du Preez Edgar Trimm Erik Willihnganz Foued Boulghobra Francis Jobin Francois Renaud Garth R. James Gary Anderson Geoffrey Harris George Giroux Gino Talbot Greg Cromwell Greg Dewar Greg Paxman Guillermo Bolivar Harold Bishop Harry Schummer Himanshu Shah Hormoz Shoghi Houssem Chouaibi Jackson Valentine Harare Jagjit Kainth James Cawthorpe James Reyes-Picknell Jason Spears Jean-Ludovic Sossou Jean-Philippe Asselin Jean-Pierre Pascoli Jeanne Hunter Jeff Down Jeff Lyons Jeffrey Tuma Jian Wang John Groff John Jones John Lindberg Kenneth Preston Kevin Johnson Khaleel Mohammed Kim Heuchert Kumar Mani Kyle Korner Larry Chandler Mark Delorey Mark Dieno Mark Villeneuve Matt Marx Matthew H. Pegg Md Manjurl Karim Michael Acorn Michael Brown Michael Minckler Mohamed Elsarraf Muhammad Rizwan Natasha Hanna Nicholas Findlay Norman Kerswell Olusegun Ige Oluseyi Paul Ayedero Oreva Oboghor Pascal Tremblay Paul Purchase Quinton. J. North Ramsey Aitkenhead Raouf Aloui Raymond Palmquist Renato Peña Richard Andrews Rocky DeCoste Ross Wert Russell Ketcheson Ryan Fraser Ryan Trafiak Said El Hamdi Said Irsalane Sajeevan Jeevakaran Shannon Mercier Shemroy Manning Spencer de Klerk Stephen Farrell Steve Bartlett Steve Fréchette Steven Leacock Tanner Kittler Taylor Christie Ted Branscombe Terrance Mohammed Tracy Hatch Trevor Dalheim Tyler Rae Victor Nguyen Viktor Goas Vipin Kumar Vishal Vardhan Wayne Halverson William Brook William Cutler Zubair Kharadi

Certified Asset Management Professional (CAMP) Certification

Amanda Aasen Andrea J Loveday Ania Orlowska Benjamin Gibas Carole Gillingham Christopher B Keehn Christopher Kulagowski Emma Petch Fernando Landeros Geoffrey Bragg Heather Miller Ian Michael Flood Laszlo Takacs Marc Richard Maytinee Vatanakul Mehran Teymour Beigi Zargham Nimesh Arun Patel Richard Paquet Robert Gillis Robert Lash Ryan Spark Stanley Sawchuk Stephanie Agar Taylor Schmidt Virginia Liu William Mintern Zdravko Djuric

Maintenance Work Management (MWM) Certificate

Adam Young Alexander Stekolin Andrew Nalle Persaud Anthony N Mwangi Barry McCambridge Brad Wheeler Bryce McMahon Chris Melendy Christine Sumbler-Brasz Daniel Fallbacher Daniel Macdonald David Daly David Shaw Doug Niemi Eric Martel Francis Ogamba Gary Wicentowich Geoffrey Harris Greg Eljoke Jackson Valentine Harare Jammie Duquette Jeffrey Millar Joerg Baader Kevin McNutt Kevin Mitchell Kyle Irvine Kyle Sprague Lionel Weseen Mark Hirsch Mark Mendonsa Mark Stasiuk Michael Buckingham Nicholas Whitson Nives Bernard Norman Kerswell Oreva Oboghor Patrick Boden Pratik Patel Quinton North Richard Mercredi Ripal Shah Robert Banzon Robert Martin Ryan Berg Shara Selvaratnam Shawn St.Amand Shehzad Khan Steven Ringma Sudarsha Brahma Rajolu Vincent Okojie Vishal Vardan Wyatt Hughes Zachary Baker

On My Bookshelf

Developing Performance Indicators for Managing Maintenance and Benchmarking Best Practices in Maintenance Management by Terry Wireman

By Leonard G Middleton

This is another discussion about two books that have earned their place on my bookshelf. As you can tell by the extra-long title, this is a “twofer.” The books in question are by Terry Wireman, and I consider them closely related.

As is sometimes the case, the books I have aren’t the most recent editions; later editions of both exist. I owned, read, and got value from the books well before the later editions were published. For me, there’s limited value in purchasing the books again to get any additional insight the revisions might provide.

I purchased Performance Indicators first, as it was published before Maintenance Management. In addition, I could find no other books dedicated, and directly related, to maintenance performance measures.

As a result of my work experience, I’m a great believer in the effectiveness of the use of performance measures to drive behaviour. However, I’m also a cynic, having observed when these measures haven’t driven the behaviours desired by the organization. The focus needs to be on improving performance, not just on improving the numbers being measured.

The book starts by providing context through a chapter called “Developing the Maintenance Function,” followed by a chapter called “Developing Maintenance Functions.” It then provides chapters on performance indicators for a number of maintenance-related processes, including preventive maintenance, predictive maintenance, supply chain, workflow, CMMS, training, operational involvement, and total productive maintenance, to name a few.

The chapters on performance indicators are similarly structured with a short description of the function and a listing of related performance measures. Partway through the chapter is a list of potential problems, followed by indicators to identify and measure the magnitude of those problems.

Even the indicators themselves are structured in a pattern, with an overview of the indicator and the calculation; where the values come from; a discussion of potential target values; and then relative strengths and weaknesses.

To get the most value from this book, you should read the first two chapters to understand the context, then flip to those areas where improved performance is critical and find which indicators would best measure your organization’s relative performance. It’s unnecessary to read the book from cover to cover if your focus is to address current issues in your organization.

To accompany your efforts in applying performance measures to drive behaviours, you might also want to review this article from the PEMAC Shared Learning Library, entitled “Effectively Communicating Performance Measures”.

Turning to Benchmarking Best Practices in Maintenance Management, I’ll state my bias around the title to start. I cringe whenever I hear the term best practices. To me, it implies that there’s some expectation for a common set of practices that could be applied to all organizations and in all circumstances, where all those practices would be best. That has not been the case in my professional experience. Maybe, having spent considerable time in the auto industry, I’m more of a YMMV (your mileage may vary) kind of guy, and think that context is important.

There are few books on the benchmarking process, and even fewer on benchmarking maintenance management that also include recent numbers or trends. This book was of interest to me, as I purchased it sometime after managing and doing the analysis for a couple of major benchmarking projects (project management, electrical and instrumentation maintenance management); analyzing data on a couple of others; and evaluating the benchmarking methodology used by a client on another.

There are few publicly available databases or analyses from benchmarking. Benchmarking requires a high level of effort and a long duration, so it is a large investment for the organization managing it, and that organization has little incentive to share the outcomes with actual or potential competitors. That raises another issue: how to obtain industry information from competing organizations within an industry. It can be done, and to maintain the confidentiality of the source of the information, one can potentially use an independent industry association. Another approach is to hire a trusted third party to perform the benchmarking to maintain the confidentiality of the data and its source; that was one of my roles, as noted above.

Data comparability can be a problem, due to differences in context, industry or organizational conventions, or accounting rules, for example. The definitions of the data, and the understanding that those definitions may vary between participants, therefore affect the final analysis. Even with all the limitations mentioned here, the data and information provided from benchmarking are useful. You just need to understand the limitations of the information provided, their potential impact on your analysis, and what impact they can have on the decisions that will result from them.

Like Performance Indicators, this book provides context at the beginning with a chapter on analyzing maintenance management (including the questions and their scoring), followed by a chapter on benchmarking fundamentals. Not all of the questions are quantitative; many are qualitative.

A discussion of the results from the questionnaire follows, divided into chapters on maintenance organizations; training; work order systems; planning and scheduling; preventive maintenance; inventory and purchasing; reporting and analysis; world-class maintenance management; and integration of maintenance management.

This book is extremely useful to better understand the alternate approaches taken and their relative value. It can help provide some insight on how to achieve better performance. However, like Performance Indicators, the focus needs to be on improving performance, not on improving the numbers. I’ve heard horror stories where organizations have found that higher performers had a certain level of staffing. Those organizations would then mandate a reduction in their staffing levels without understanding what changes would need to be made to effectively operate at those staffing levels without significantly increasing their risk.

If you’re a maintenance or reliability specialist, both of these books deserve a place on your bookshelf. They certainly earned their place on mine.

PEMAC Member Spotlight - Tracy Hatch

Tracy’s career journey proves that careers in maintenance, reliability and asset management are for people who aren’t afraid to take on a challenge and learn as they go.

By Cindy Snedden, PEMAC Executive Director

Tracy Hatch was five years into building a logging business in northern Manitoba with her then-husband when both personal and professional aspects of the partnership began to unravel. Given that they had two small children at the time, Tracy decided it would be prudent to channel her talents and energy into a career path that would allow her to be self-supporting in the future.

In addition to the business skills she’d gained running a small business, Tracy had a strong post-secondary foundation, including a two-year diploma from an Electronic Repair Technician program and a Chemical Engineering Technologist diploma (Honours). So she responded with high hopes when she saw the requirements for an Aboriginal preplacement program that Manitoba Hydro was sponsoring that could lead to a lineman apprenticeship. During the qualifying process, however, she found the pole-climbing requirement challenging, managing only one out of the five required climbs before deciding that the lineman trade might not be for her. She was promptly referred to a similar program for the electrical and millwright trades, where she passed the entry requirements and went on to complete the nine-month preplacement program. She was subsequently hired by Manitoba Hydro as an Apprentice and then progressed to achieve Journeyman status as a Power Electrician in 2009.

Tracy’s first permanent position with Manitoba Hydro was at the Pointe du Bois Generating Station on the Winnipeg River. It is Manitoba’s earliest generating station, and many of the electrical, civil, and mechanical systems have been in place since it was first built in the early 1900s. “It was old-school cool,” says Tracy. “I really liked working there. We had to fix things, not just replace components as we often do in the digital age.”

From there, she went into the electrical construction side at Manitoba Hydro, working on upgrades to generating units at various plants over the next several years. Sometimes she would be attached to one plant for a longer project. The last of these was the Wuskwatim Generating Station, during its commissioning phase. “I got there in 2012 as it was transitioning to operations. I took a position as the Electrical Engineering Technician and then moved into the Maintenance Planner role.”

Tracy worked as a Maintenance Planner with Manitoba Hydro for the next seven years and undertook continuing professional development courses with the support and encouragement of her employer. She achieved a Certificate in Management: Utilities Management (a joint course between Manitoba Hydro and the University of Manitoba), which led to a CiM professional designation. She also took PEMAC’s Maintenance Management Professional (MMP) program, completing it in 2020.

With MMP certification in hand—and with children grown and positioned to take care of themselves in northern Manitoba (her daughter as a welding apprentice and her son as a lineman)—Tracy headed to the market to see what opportunities might be out there. Her search resulted in more than one offer and she opted for adventure, again, when she took a position a little outside her comfort zone (in a new province and a new industry) as an Electrical Planner with Resolute Forest Products, a pulp and paper company in Thunder Bay, Ont., in March 2020, just as major pandemic precautions were hitting.

“This has been a big learning curve. It was a new industry, and just the terminology difference blew my mind. But also, I had no connections here and didn’t know the area. I had no idea where I would live. I just came. But it has worked out well for me. I had been working eight days on and six days off and at camp jobs at Manitoba Hydro. Now I have a Monday-to-Friday job and live relatively close to work. I have my evenings and weekends and lots of places in the Thunder Bay area to explore and enjoy.”

Within five months, Tracy interviewed for, and was offered, Operations Maintenance Coordinator at Resolute. In this role, she is a key liaison between operations and maintenance, so the job can be intense. “I have to function at a high level all day and ensure my ducks are in a row, or else there can be miscommunication or no communication and work doesn’t get done,” she explains. “It’s an advantage that I’m coming from a Crown corporation where the systems were very well defined and formal. Here, I’m actively participating in building and refining the systems and procedures—and, quite often, I’m leading the change. I have to set boundaries and insist on things being done a certain way with specific people signing off to be sure they’re in the loop. I’ve built a number of different Gantt charts and have continually refined them through five or six planned shutdowns. The superintendents are starting to proactively share the information I need because they understand the processes I’m building. There’s a sense you’re always competing with production priorities, but I’m making the case that the work management system is an asset to this company—it’s just as important as the paper machine.”

As for skilled trades as a career path, Tracy says there’s a shortage of skilled labour. “I think it’s caused by a combination of things: an overemphasis on university education, but also so many young people are growing up in this digital age in urban environments where hands-on skills aren’t required. I’ve seen apprentices who don’t have knot-tying skills, for example, who maybe feel foolish for [not knowing how to do] that. But it’s not their fault. When did they have the chance to learn the importance of a good knot? My kids obtained dual high school diplomas (combined trades and academic programs). They were raised with an outdoors lifestyle and had opportunities to try out various trades and work placements in high school,” she says.

“I’d encourage young people to combine study with undertaking work that gets their hands dirty, and to target their next opportunity and prepare for it as they go along. This is what my kids have done, and I hope I’ve influenced them. I can see they’ll do well no matter what they choose to do in life.”

Tracy is grateful for the combination of experience and education that has prepared her for her current role. And, she observes that there are personal characteristics, too, that contribute to success in maintenance planning and scheduling. “You have to have a certain personality to be a planner/scheduler. If you don’t have a natural tendency to make a list and plan things out, you’re going to struggle. But then, you also have to be able to go with the flow if something changes. If we stray from the schedule because something unexpected happens, I can handle that, no problem. Having the plan serves you whether or not something unexpected happens.”

By James Kovacevic, MMP, CMRP, CAMA, Principal Instructor Eruditio LLC

Unreliability, a term I first read about from the late Paul Barringer, can be defined as “the inability to be relied upon or trusted.” Initially, Paul used the term to define the process for determining the costs associated with plant failures. While I’m sure he used it in other ways as well, I’ve found it helpful to describe all of the issues we want to address and prevent when improving plant performance. Reducing unreliability involves addressing all of the different ways assets prove to be unreliable—such as wear-out, or random failures—but I believe an often-overlooked area of opportunity is human error.

Human error is defined as “the failure to perform a task (or the performance of a forbidden action) that could lead to the disruption of scheduled operations or damage to property and equipment.”1 In other words, when an action is performed incorrectly and affects plant operations, it’s a human error. Thinking about it this way, one might realize that there’s a tremendous opportunity out on the plant floor to address unreliability by preventing human error.

Exploring human error a bit more deeply, John Moubray, the originator of RCM II, identified a type of human error as psychological. Psychological errors are related to the causes of the mistakes made, and they are divided into two types: unintended and intended. An unintended error occurs when someone does a task incorrectly. An intended error occurs when someone deliberately sets out to do something, but what they do is inappropriate. An intended error can be either a mistake or a violation. A mistake is a misapplication of a good rule, an application of a bad rule, or an inappropriate response to an abnormal situation. A violation is when someone knowingly and deliberately commits an error.2

Now that we understand human error, what can we do about it? Frankly, a lot of different things can be used to address psychological errors within our plants. Of the different types of psychological errors, we can address three, but not the violation. To understand what we can do to reduce human errors, we can learn from the nuclear navy and aviation. If we can understand what the U.S. Navy did with SUBSAFE and what aviation did to address the infant mortality failure curve (from the Nowlan and Heap study), we can apply those techniques to our operations.

First, we need to focus on procedures. You must be thinking, Why procedures? We only hire qualified technicians. But even qualified, experienced technicians may not have worked with the same type of assets used in your facility. In addition, what may have been acceptable in terms of wear or degradation at their past employers may not be acceptable in your facility. For example, a PM routine that has an inspection step of “inspect pump” may be interpreted as a quick visual inspection by one mechanic and a detailed inspection by another. With procedures, the expectations can be clearly defined in terms of what is, and isn’t, acceptable.

Continuing with procedures, we can leverage them to ensure critical steps aren’t missed. You may also be wondering how technicians can miss critical steps. Unfortunately, it happens more often than you think. The best way to explain it is through an analogy. You may have driven to work or from work and arrived at your destination, without remembering the drive. This occurs because you may have had important things on your mind, and your mind went on autopilot to get you to your destination. The same thing happens to experienced staff members while performing repairs or PM. They can reference procedures to ensure critical steps or specifications are achieved. But procedures alone won’t eliminate all of the errors that drive unreliability.

It’s often not enough just to develop procedures and make them available to staff. Staff members must be trained on the procedures and qualified on them. This would involve walking the technician through a specific procedure and maybe having them perform it under the guidance of an experienced technician. Once the technician can perform the activity without issues, then they may be assigned the work in the future. Now, to ensure the work is performed to the requirements outlined in the procedure, the organization may choose to implement work audits or another method of verification.

Another tool used to address unreliability (often used in conjunction with procedures and training) is the checklist. Now, I’m not referring to any old checklist with 50 checkboxes, but instead, a well-thought-out checklist adhering to the principles identified in The Checklist Manifesto. In The Checklist Manifesto, author Atul Gawande outlines how checklists should be short and easy to complete and, more importantly, only contain items that are critical or often overlooked. Not everything goes on a checklist; nor is everything a training tool. A checklist should be used by experienced, trained staff to reduce the chance of overlooking critical steps and prevent severe consequences.

By combining these three tools, any organization can significantly reduce the number of human errors that occur. Think about the military and its assets. Often you will find a 21- or 22-year-old working on and maintaining nuclear propulsion systems. Not only does the military have severe consequences if the assets do not work, but it also has to deal with a continuous turnover of staff. It addresses this issue by focusing on training, procedures, adherence to procedures, and checklists.

Most of the organizations where we work may not have the same level of consequences as the military if there’s a human error, but we have consequences nevertheless. As a result, organizations can scale procedures, training, verifications, and checklists to their needs. For example, an organization may realize it has significant issues with shaft alignment or bearing installation, but not with belt or chain installation. In this case, procedures, training, and a checklist can be developed for shaft alignment and bearing installation, but not the other tasks. The training may also focus on just the actual task of aligning the shaft and not every job plan or procedure that includes it.

These same techniques can also be applied to operations and how our equipment is operated. Many of the failures experienced in the plant can be traced to improper operation or set-up of the asset, so why not address those through the same human error reduction techniques?

Addressing human error is critical to reducing the unreliability of equipment and often doesn’t come with a high cost. It doesn’t require a large amount of capital funding or a huge team to implement. Start small and address the most common issues, and slowly build up the procedures, training, and checklists over time. Remember, planners should be developing procedures every day, so make sure you leverage that work. If you start to address human error, you’ll find longer-lasting equipment and fewer infant mortality failures, and you’ll slowly become less reactive.

1 Hansen, Frederick D. Human Error: A Concept Analysis. Journal of Air Transportation, Vol. 11, No. 3, 2006.

2 Moubray, John. RCM II. Industrial Press Inc., 1997.

By Dr. Agwu E. Agwu, PhD, CMRP, PMP

Have you had situations where the project team couldn’t wait to move on before handover? Where the maintenance team had no input into the project design and equipment selection? Where the project team noticed that the project was going over budget and falling behind schedule and had to sacrifice maintenance on the altar of time and cost constraints? What about a case where the project was handed over with no or incomplete CMMS and asset register, with inadequate maintenance staffing, generic maintenance plans and task lists (if any), incomplete (if any) bills of material, undefined maintenance strategies, inadequate equipment criticality and a myriad of supply chain and material availability issues? If you answered yes to any of these questions, rest assured that you are not alone.

It is hard to believe that, with almost a quarter of the 21st century gone, maintenance teams still encounter a very stressful first three years after project handover. Instead of “enjoying our new car” with full warranty for the first four years, we spend the first three years modifying the plans, fixing project errors with high CM/PM ratios, and managing unplanned downtime and equipment failures. We spend time searching for and optimizing project and equipment documentation, right-sizing manpower, developing and facilitating training programs, developing the CMMS, building up asset registers, equipment hierarchies and bills of material, and generally fighting fires. These are avoidable high-stress activities. In some maintenance circles, the perception is that maintenance people age faster than others – perhaps the reason is not far-fetched!

Imagine with me a $350M project with a projected 18-month schedule and 98% projected availability over a 25-year life cycle. Imagine that you receive the project as scheduled and on budget. Imagine also that you achieve the nameplate production target at start up and sustain nameplate production at the planned maintenance cost within the first three months. Let’s call this Project A. This may seem too simplistically utopian a scenario, but is it? What if the project is built to scope with the right stakeholders involved from initiation? What if the project is handed over with a fully integrated CMMS and reliability management program at start up? What if you have an adequate, fully trained maintenance staff who were part of the project from the planning stage, spotting and fixing maintainability issues before handover? What if you have an adequate equipment criticality and materials management process, an 80% PM/CM ratio and a synergy between operations and maintenance teams? What if...? Perhaps our imaginations and the reality may not be too far apart. You can now understand the stressors and the immense stress levels maintenance teams are often exposed to from project handover.

Very often startup times are prolonged due to maintainability issues discovered during mechanical completions (if maintenance personnel are part of the mechanical completions). In most cases, these operability and maintainability issues are discovered long after completions, with resultant interruptions to ramp up. Maintenance teams have been found to spend the first three years after handover on modifications, breakdown repairs, staffing, skills upgrades, the CMMS and associated asset register, equipment hierarchy, development of maintenance plans and task lists, and implementation of documentation processes. Experts are quick to give this period a name in the maintenance and reliability bathtub curve, “infant mortality”, but must the “infants” always die? Maintenance readiness in projects could become a very effective process to reduce this “infant mortality”, optimize maintenance cost and asset life cycle, and more importantly, reduce the very high stress levels among maintenance persons within this window. To understand this concept better, we must first get into the minds of project managers and see project management from their perspective.

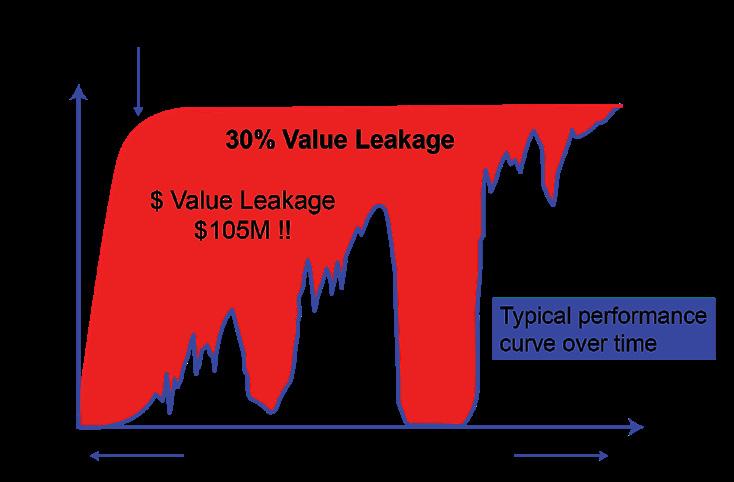

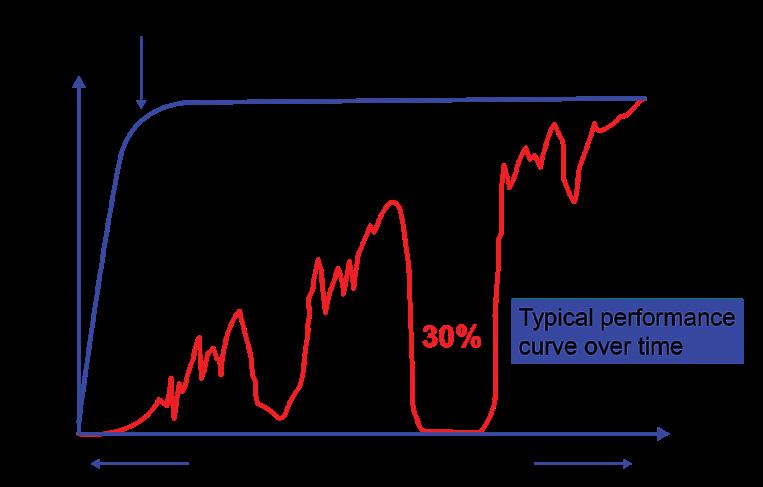

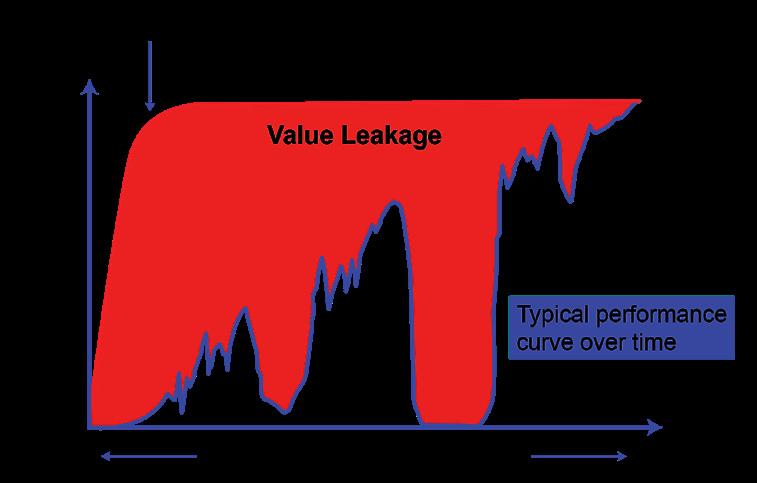

Fig 1: A curve of project performance over time, showing value leakage as a percentage of project cost

Fig 2: A curve of project performance over time, showing sources of value leakage

Fig 3: A curve of project performance over time, showing value leakage

Project Managers’ Perspectives

With the increasing complexity of projects, project managers are struggling more than ever with diverse and often conflicting stakeholder objectives. Traditionally, especially in the Americas, Middle East, Asia, and Africa, projects are generally seen from the perspective of the triple constraints. According to the Project Management Body of Knowledge (PMBoK), a project is “a temporary endeavor undertaken to create a unique product, service, or result,” with consideration given to completing the scope to the defined quality, within schedule and within budget. By this definition, the project is considered a success if these constraints are met. With deliverables defined at the start of the project, the project team remains focused only on these defined deliverables. They view scope changes as irritations, and “it is not in the scope” becomes their mantra when confronted with potential changes, regardless of whether these could impact the asset life cycle – maintenance should deal with everything else after handover! Operations and maintenance see this from a different perspective.

Asset Owners’ Perspectives

Operations and maintenance are interested in the asset life cycle performance. They align more with the perspective of UK OGC’s Projects in Controlled Environments (PRINCE2) that there is life after the project. The users define not what they want done, but what they hope to achieve. The project focus and success are defined not by the triple constraints, but by the organizational perspective of continued business justification throughout the asset life cycle.

The inclination of project teams to quickly “toss projects across the fence” and move on to the next project creates a potential for value leakage. This value leakage includes all costs incurred within the first year after commissioning to achieve nameplate production, excluding the planned operations and maintenance costs. Research puts this leakage at about 30% of the project cost! Consider Project B, with expectations similar to those of Project A above. A 30% value leakage on the $350M project cost results in a value loss of about $105M by the end of the first year of operation!

When you factor in production losses during unstable ramp up, unplanned and often extended downtimes and frequent equipment breakdown, the total cost increases.
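The Project B arithmetic above can be reproduced with a few lines of Python. This is only a minimal sketch: the function name `value_leakage` and the optional `production_losses` parameter are hypothetical, introduced here for illustration, and the only figures used are the $350M cost and 30% rate quoted in the text.

```python
def value_leakage(project_cost: float, leakage_rate: float,
                  production_losses: float = 0.0) -> float:
    """Estimate first-year value loss for a project.

    project_cost      -- total project cost in dollars
    leakage_rate      -- leakage as a fraction of project cost (0.30 = 30%)
    production_losses -- hypothetical extra term for ramp-up and downtime
                         losses; the article gives no figure, so it defaults to 0
    """
    if not 0.0 <= leakage_rate <= 1.0:
        raise ValueError("leakage_rate must be a fraction between 0 and 1")
    if production_losses < 0:
        raise ValueError("production_losses cannot be negative")
    return project_cost * leakage_rate + production_losses


# Project B from the text: $350M project cost, 30% value leakage.
loss = value_leakage(350_000_000, 0.30)
print(f"Estimated value leakage: ${loss / 1e6:.0f}M")
```

Any production losses passed in are simply added on top of the leakage figure, which mirrors the point that the total cost only grows once unstable ramp up and unplanned downtime are counted.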

You can therefore begin to imagine the pressure and high stress levels within the maintenance teams at this point. This stress is exacerbated by inadequately resourced maintenance staff with minimal training specific to the new equipment, frequent re-engineering and management of change, and inconsistencies in documentation transfer. The need to mitigate this was the reason for Operational Readiness.

Operational Readiness

Operational Readiness (OR) is a focused, proactive, and systematic process to ensure a successful and seamless commissioning, startup, and operation of a new project. The key deliverables of OR include timely asset start up, flawless startup, ramp up to steady state, sustainable life cycle performance, and ultimately, the shrinking of value leakage.

OR is structured in three stages. Stage one considers the readiness of the asset for handover. It covers asset functionality, operability, health and safety, documentation, organizational and assurance plans. Stage two considers the readiness of the asset for flawless commissioning and start up. Stage three considers the readiness of the asset owner for asset take over. It covers elements such as embedding operators into the project, training, management systems, life cycle operational expenditures, asset integrity, and operational philosophies. Despite the best intentions of OR, value leakage still persists within the first year(s) of project delivery, and most of this has been attributed to maintenance-related issues. Perhaps it is time to rejig OR and make a separate case for maintenance readiness in projects.

Maintenance Readiness

Research has identified key reasons for the value leakages. These include the late engagement of maintenance staff, inadequate asset register, incomplete maintenance management systems, inadequate maintenance plans and task instructions, inadequate training of maintenance personnel, and inadequate project information documentation. In one case study post start up, the maintenance personnel were unaware of specific maintenance requirements for equipment, and the maintenance cost was 35% above budget. In that same case study, there was a considerable amount of rework and re-engineering, and it took the plant nine months to achieve 50% of nameplate production. In addition, the maintenance team had to rebuild the maintenance plans and task lists within 2 years, with an additional overall cost overrun of 32%. Furthermore, there were multiple high severity unplanned shutdowns in addition to related health, safety, environmental and reputational issues.

This is one of the most common sources of stress among many maintenance teams. Maintenance is often considered an “add-on” rather than an integral and critical success factor in a project. Most often, the asset is “tossed over” to the maintenance team post commissioning and the team begins the long and arduous journey to put things right. The organization then inadvertently transfers the pressure of fixing project errors to maintenance. We are expected to “perform” and are usually blamed for subsequent equipment failures and downtimes. Another key stressor is project documentation that is still unavailable months after handover! When the documents eventually become available, they are voluminous, sometimes in hard copy without proper indexing, and usually not mapped for usability. This makes it especially difficult both to search for specific data and to transfer it into the CMMS. One of the most common stressors occurs when the equipment selection is made with project cost control in mind, instead of life cycle maintenance cost, spare parts availability, and general maintainability. Again, maintenance is made to pick up the slack with very stressful first year(s) of operation and maintenance. We spend most of the first year(s) relying on past experiences, learning on the fly, “fixing” project errors and, with our usual big heart, taking the blame for the consequences of most of those errors with expansive smiles on our faces! This cannot continue. Maintenance Readiness must be implemented across organizations to save lives.

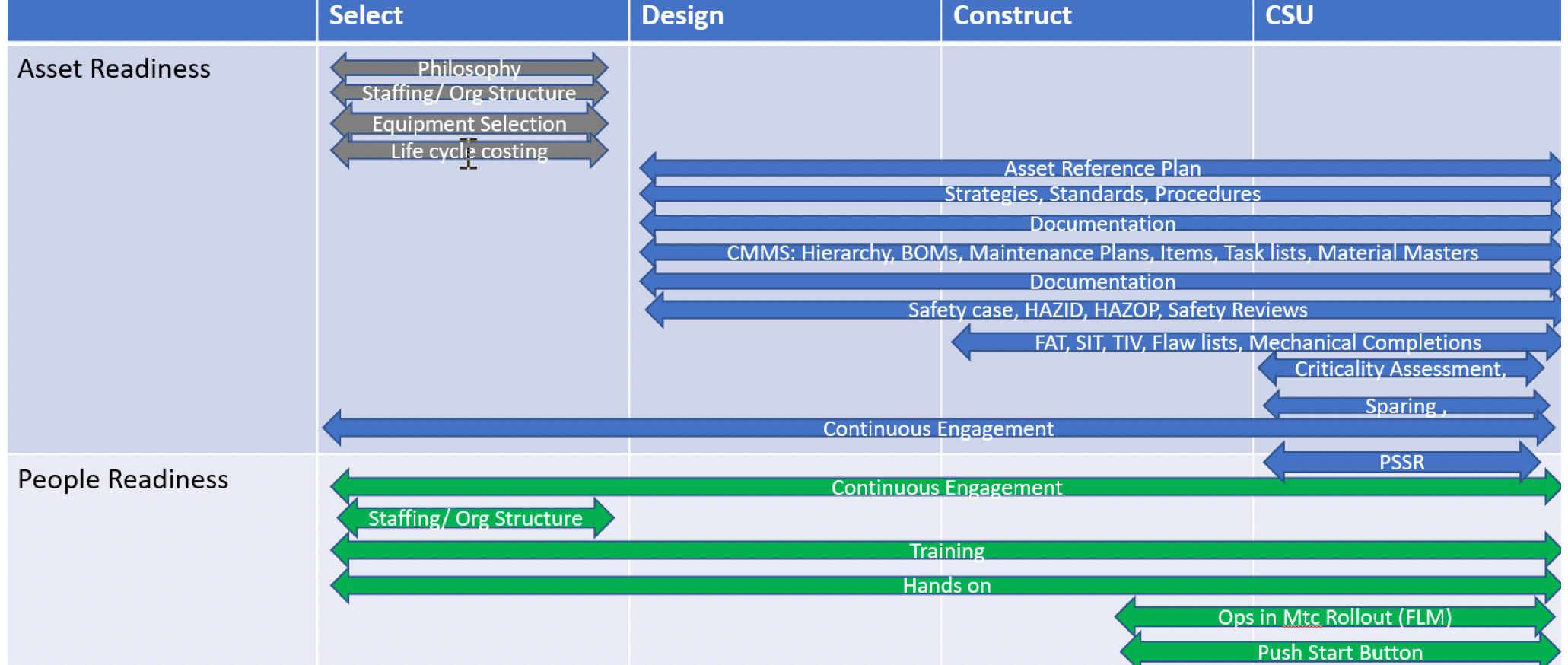


The Way Forward

In research from 2021, an offshore gas platform, a power generation facility and an oil producing plant had the operations and maintenance teams in place from the early days of engineering. This increased the project personnel cost by about 18%. The teams participated in every aspect of the project, from specification considerations, planning, design, construction and commissioning to eventual handover. They knew where “all the bodies were buried”. The maintenance team helped in equipment selection, project documentation, gap analyses, and safety reviews. They identified training and staffing needs early and worked to bridge those gaps. They populated the asset registers, set up the CMMS, performed criticality analyses, set up documentation libraries, mapped the documents for usability, and had 100% of the maintenance plans and task lists built with their correct cycles and strategies. The maintenance teams were tasked by the organization to symbolically “push the start button”. Despite the roughly 18% increase in project manpower cost, there was a total cost saving of nearly 39% by the first year of operation. In addition, the assets were started ahead of schedule, with further savings from additional production, as well as a reduction in equipment failures and downtimes of about 70% when compared with previous projects within their first year of operation. The most important aspect of this was that the maintenance teams had a near stress free first year of operation. These examples emphasize the need for maintenance readiness in projects.

There are two aspects to maintenance readiness: asset readiness and maintenance people readiness. Asset readiness starts at the project “select” stage, at which point the maintenance team must be involved in the determination of the asset maintenance philosophy. By the end of the “select” stage, the maintenance organization must be fully resourced and involved in equipment selection and life cycle planning. By the end of commissioning, the asset register must be fully documented and must match exactly the physical equipment installed, the P&IDs, single line drawings, and control system architecture documents. This information must also be transferred to the CMMS with consistent tag IDs and hierarchy down to their BOMs. In addition, the material masters, the maintenance plans and task lists must have been loaded into the CMMS with appropriate frequencies and strategies. Finally, the asset criticality assessment must be complete, and all critical spares must be documented and available.
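The requirement that the asset register and the CMMS carry the same tag IDs lends itself to a simple automated check. The following is a minimal sketch in Python, assuming both systems can export a plain list of tag IDs. The function name `reconcile_tags` and the tag IDs shown are hypothetical, invented for illustration only.

```python
def reconcile_tags(asset_register, cmms):
    """Compare two collections of equipment tag IDs and report the gaps."""
    register_tags = set(asset_register)
    cmms_tags = set(cmms)
    return {
        # Tags documented in the asset register but not yet loaded into the CMMS.
        "missing_from_cmms": sorted(register_tags - cmms_tags),
        # Tags present in the CMMS that the asset register does not know about.
        "missing_from_register": sorted(cmms_tags - register_tags),
    }


# Hypothetical tag IDs, invented for illustration only.
asset_register_tags = ["P-101A", "P-101B", "V-201", "E-301"]
cmms_tags = ["P-101A", "V-201", "E-301", "K-401"]

gaps = reconcile_tags(asset_register_tags, cmms_tags)
for issue, tags in gaps.items():
    print(issue, tags)
```

An empty list under both headings would suggest the two sources agree on tag IDs. It would not confirm that hierarchy, BOMs, or the physical installation match.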

People readiness refers to the assurance that the maintenance organization is in place early enough to get the deliverables ready before handover. It also involves their training, and hands on involvement in project design, construction and commissioning and start up. Ultimately the aim is that when the asset is ready for handover, the maintenance team is ready to assume ownership and maintain the asset flawlessly so as to shrink the usual value erosion during the first year of operation.

Conclusion

Maintenance readiness in projects might add a few dollars to the project manpower cost; however, the savings from the usual value shrinkage in the first year of operation would more than compensate for this. Let us not “spoil the ship for a ha’pworth of tar”, as the old English saying goes. Maintenance readiness not only reduces the stress factors usually encountered by maintenance people in the first year(s) of operation, it also gives the organization added value from the savings in life cycle cost. It should therefore be a must have for every organization. Let’s start to #de-stress maintenance through maintenance readiness in projects.

Dr Agwu E. Agwu PhD CMRP PMP

Agwu E. Agwu is a maintenance manager with over 26 years of maintenance and reliability experience. He has presented at different conferences in the US, Canada, the UK, Italy, and Nigeria. He is passionate about enhancing professionalism in maintenance. He holds a PhD in Operations and Systems Management with specialization in High Reliability Organizations.

Photo: © xmentoys / Adobe Stock

By Dr. Janet Lam

It seems the promise of machine learning and artificial intelligence changing our worlds is always right around the corner. Indeed, maintenance professionals are receiving frequent proposals for the next best technology driven solution that claims to solve all of our maintenance problems. Let’s discuss how to evaluate different solutions so that we can make the most of the emerging technologies.

In any asset-intensive industry, various metrics and data are produced. Some fall into the category of operational data, which measure the effectiveness of the overall process. Another category is maintenance data, which can be used to measure the efficiency and efficacy of the maintenance procedures. As the economy enters the fourth industrial revolution and the Industrial Internet of Things becomes more prevalent, the volume of data being produced by our equipment is growing as well. With such an abundance of data, analytical methods that have not been available before are becoming mainstays.

This article will help prepare maintenance practitioners to communicate with programmers and scientists in the area of machine learning by discussing key concepts, taxonomy, various procedures that form the machine learning process, and how to evaluate different machine learning algorithms.

Traditional vs. Machine Learning analysis

The key difference between a machine learning approach and a traditional method of analysis lies in the open-endedness of the questions that can be asked and answered through the analysis. Whereas traditional programming computes outputs from user inputs and parameters, machine learning approaches examine sets of inputs and outputs and extract patterns and models from them.

In the machine learning case, it’s not necessary for the various potential parameters to be identified by a user in advance. Rather, the algorithm selects the most suitable features for the model.

A major advantage of this open-ended nature is that the potential models are no longer limited by the hypotheses of the user, but that unexpected variables and relationships may be revealed.
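The contrast between the two approaches can be sketched in a few lines of Python. This is a minimal illustration only: the data values are hypothetical, the function names are introduced here for the example, and a one-variable least squares fit stands in for a machine learning algorithm. It learns the parameters of a relationship from data but does not perform the automatic feature selection described above.

```python
def availability(uptime_h, downtime_h):
    """Traditional approach: the user supplies the rule, the program applies it."""
    return uptime_h / (uptime_h + downtime_h)


def fit_line(xs, ys):
    """Learning approach: recover slope and intercept from example inputs and outputs."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


# Hypothetical observations: PM compliance (%) and availability (%).
pm_compliance = [60, 70, 80, 90]
observed_availability = [82, 86, 90, 94]

slope, intercept = fit_line(pm_compliance, observed_availability)
print(f"availability ~ {slope:.2f} * compliance + {intercept:.1f}")
```

In the first function the relationship is written by the user; in the second it is estimated from the inputs and outputs.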

A sample set of questions could be:

Traditional: What is the relationship between availability, maintenance compliance, and percent mean preventive maintenance?

Machine learning: What are some metrics that affect availability, and how much?

Model classes

While there are many (and growing) machine learning models, there are a few classes that are very prevalent and can be applied broadly.

Regression models generate a function that can predict continuous values given a set of inputs. Predicting machine availability from a set of features is an example of a regression problem.

Classification models generate a function that predicts discrete values given a set of inputs. Since the values are discrete, they can be used to classify data points into different categories. Determining whether or not a set of sensor readings indicates a problem in the equipment is an example of a classification problem.

Photo: © ryzhi / Adobe Stock

If a machine learning-generated model is applied to known data, then each data point and predicted classification will fall into one of four categories:

| Predicted value (from model) | Actual value (from original data): Good health | Actual value (from original data): Not-good health |
| --- | --- | --- |
| Good health | True positive | False positive |
| Not-good health | False negative | True negative |

Dimension reduction methods are used to simplify very large datasets with many variables into fewer variables. Optimizing a dimension reduction entails identifying variables that do not provide statistically significant information, such as those that are highly correlated with others, so that they can be removed or combined.

The model class should be selected based on the problem being solved. It’s possible that a single project may use several different models in different classes in order to solve the larger problem.
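One simple form of this idea is a correlation filter: keep a variable only if it is not highly correlated with a variable already kept. The sketch below is a minimal Python illustration under stated assumptions, not a full dimension reduction method such as principal component analysis. The sensor names, readings, and the 0.95 threshold are hypothetical, and each variable is assumed to have non-zero variance.

```python
from math import sqrt


def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / sqrt(var_x * var_y)


def drop_correlated(columns, threshold=0.95):
    """Keep each variable only if it is not highly correlated with one already kept."""
    kept = {}
    for name, values in columns.items():
        if all(abs(pearson(values, other)) < threshold for other in kept.values()):
            kept[name] = values
    return list(kept)


# Hypothetical sensor readings; temp_f is temp_c expressed in another unit.
readings = {
    "temp_c": [60, 62, 65, 70, 72],
    "temp_f": [140.0, 143.6, 149.0, 158.0, 161.6],
    "vibration_mm_s": [2.1, 1.8, 2.5, 1.9, 2.3],
}

print(drop_correlated(readings))
```

Here the Fahrenheit column carries no information beyond the Celsius column, so the filter drops it and keeps the other two variables.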

Measuring effectiveness

Within each class of ML model, there are some standard approaches to measure whether one model is sufficient, better or worse than other models.

Regression models

In regression models, the final product is a function that maps inputs to predicted values. Test data, however, has both inputs and true outputs. Thus, the error of a model can be expressed as a function of the difference between the true output value and the predicted output value. The two main measures of model error are:

Mean absolute error – take the absolute value of each error and take their average.

Mean squared error – take the square of each error and take their average.

Both quantities summarize error, but squaring each error rather than taking its absolute value means that larger errors are penalized more heavily than smaller errors.
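The two error measures can be sketched in Python as follows. This is a minimal illustration with hypothetical availability figures; the two "models" are simply hand-written lists of predictions chosen to show how the measures differ.

```python
def mean_absolute_error(actual, predicted):
    """Average of the absolute differences between true and predicted values."""
    return sum(abs(a - p) for a, p in zip(actual, predicted)) / len(actual)


def mean_squared_error(actual, predicted):
    """Average of the squared differences between true and predicted values."""
    return sum((a - p) ** 2 for a, p in zip(actual, predicted)) / len(actual)


# Hypothetical test data: true availability (%) and two sets of predictions.
actual = [90, 92, 88, 95]
model_a = [89, 93, 87, 96]  # four small errors of 1 each
model_b = [90, 92, 88, 91]  # one large error of 4

for name, predicted in (("A", model_a), ("B", model_b)):
    mae = mean_absolute_error(actual, predicted)
    mse = mean_squared_error(actual, predicted)
    print(f"Model {name}: MAE = {mae}, MSE = {mse}")
```

Both sets of predictions have the same mean absolute error, but the single large miss gives the second set a mean squared error four times higher.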

Classification models

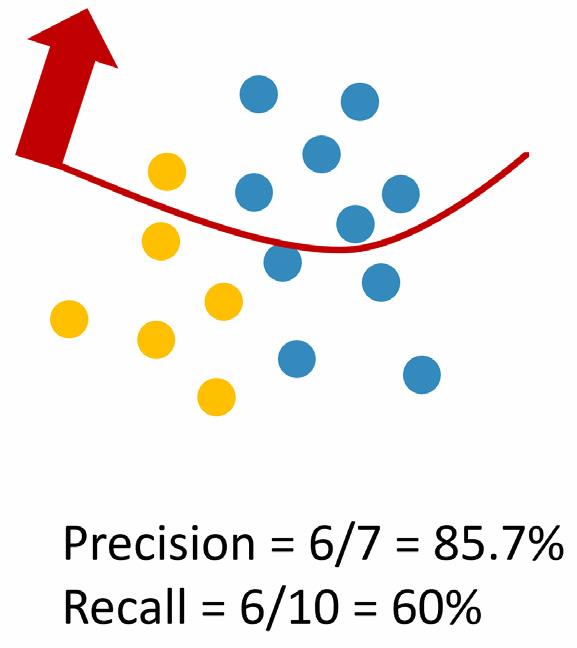

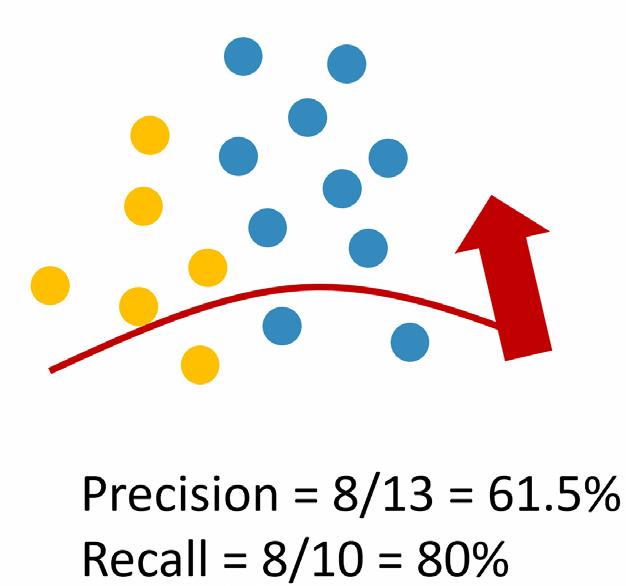

In classification models, the final product is an algorithm that assigns each new data point to a predefined category. The categories and their classification criteria are determined from existing data and the machine learning algorithm. Thus the error of such a model will be a measurement of misclassified data points. For simplicity, let’s consider a binary classification model, perhaps that a certain set of sensor readings are associated with equipment that is in good health, versus not-good-health. The predictions that fall into the false positive and false negative quadrants are clearly errors, but to evaluate one algorithm over another, we take a more holistic view.

Accuracy – is the percentage of all predictions that were correct.

Precision – is the percentage of positive predictions that were correctly predicted. In other words, when the algorithm predicts that the equipment is in good health, how often is it actually in good health? You can imagine that an algorithm that predicts that every piece of equipment is in good health would make you feel good, but the model would not be useful, since many of the units would actually be in bad health.

Recall – is the percentage of positive values that were actually predicted to be positive. In other words, how much of the equipment in good health did we find? There may have been equipment that was in good health but was predicted to be in not-good health.

These are some of the main metrics that are used to evaluate classification algorithms.
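As a minimal sketch, the three metrics can be computed in Python from paired actual and predicted labels. The labels and the ten data points below are hypothetical, and "good" health is treated as the positive class, as in the discussion above.

```python
def classification_metrics(actual, predicted, positive="good"):
    """Compute accuracy, precision and recall for a binary classifier."""
    pairs = list(zip(actual, predicted))
    tp = sum(1 for a, p in pairs if a == positive and p == positive)
    fp = sum(1 for a, p in pairs if a != positive and p == positive)
    fn = sum(1 for a, p in pairs if a == positive and p != positive)
    tn = sum(1 for a, p in pairs if a != positive and p != positive)
    return {
        "accuracy": (tp + tn) / len(pairs),
        # Guard against division by zero when nothing is predicted or labelled positive.
        "precision": tp / (tp + fp) if (tp + fp) else 0.0,
        "recall": tp / (tp + fn) if (tp + fn) else 0.0,
    }


# Hypothetical results: six units actually in good health, four in not-good health.
actual = ["good"] * 6 + ["bad"] * 4
predicted = ["good"] * 4 + ["bad"] * 2 + ["good"] + ["bad"] * 3

metrics = classification_metrics(actual, predicted)
for name, value in metrics.items():
    print(f"{name}: {value:.2f}")
```

In this example there are 4 true positives, 1 false positive, 2 false negatives and 3 true negatives, which gives an accuracy of 0.70, a precision of 0.80 and a recall of about 0.67.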

Conclusions

The concepts discussed in this article give an overview of the key differences between machine learning and traditional data analysis, as well as the main types of algorithms, and how to evaluate them. It is our hope that maintenance practitioners and managers can use this knowledge to communicate clearly with software developers and data scientists in implementing emerging technologies at their workplaces.

Dr. Janet Lam holds a PhD in Industrial Engineering from the University of Toronto. She has been working in the field of maintenance optimization since 2008, with an emphasis on optimal scheduling of inspections for condition-based maintenance. More recently, her research interests have extended to machine learning approaches for maintenance and asset management. Through her work at C-MORE, she has applied academic research directly with industry partners, including those in mining, utilities, transportation, and the military. As the Assistant Director of C-MORE, Janet is involved with cultivating strong relationships with industry partners and developing maintenance engineering resources that are both useful and current.

Canadian Leaders in Asset Management are Active in Global Developments

Since 2011 PEMAC has been actively engaged with like-minded organizations from around the world through two different not-for-profit societies: the Global Forum on Maintenance and Asset Management and the World Partners in Asset Management.

Global Forum on Maintenance and Asset Management

The Global Forum on Maintenance & Asset Management (GFMAM) is a non profit organization founded to promote and develop the maintenance & asset management professions by collaborating on knowledge, standards and practices. As the name suggests the GFMAM is a forum – where the member associations come together to discuss the development of the maintenance and asset management practice, to consider challenges that global cooperation may help to overcome, to form relationships and partnerships on projects of mutual interest, and to help assure alignment without constraining development. Documents that have been produced through GFMAM projects are available for free distribution. Use of content from these documents is encouraged as long as it is properly credited to the GFMAM.

Three new publications have recently been made available on the gfmam.org website under the ‘Publications’ tab: 1. The second edition of the Asset Management Maturity document now titled, “Asset Management Maturity - A Position Statement” along with two new companion publications, 2. “Specification for an Asset Management Maturity Assessor” and 3. “Guidelines for Assessing Asset Management Maturity”.

Several more GFMAM publications are near ready to publish. To stay informed you can add yourself to the interest list as you download any of the publications on the site.

World Partners in Asset Management (WPiAM)

Established in late 2014, the WPiAM is also a not-for-profit organization and an example of a partnership of like-minded organizations (including PEMAC) that formed through relationships that developed at the Global Forum. The focus of the WPiAM is to develop, assess and recognize competence in Asset Management, for the benefit of their non-profit member organizations and of the asset management community globally. WPiAM has set standards of knowledge and practice for Certified Asset Management Assessors (CAMA Certification) and has recently unveiled specifications for a laddered Global Certification Scheme (GCS). PEMAC has aligned our new CTAM, CPAM and CSAM certifications to this global scheme. Recognition for MMP and CAMP towards these certifications has also been confirmed.

To learn more about CAMA: https://www.pemac.org/recognition/certification/cama-certification

To learn more about the GCS and PEMAC’s aligned certifications see:

• a full article in the spring 2021 issue of PEMAC Now
• an update in this issue on page 23
• PEMAC website: www.pemac.org/recognition/certification/gac

Photo: © Cory / Adobe Stock