Introduction to Digital Logic and Components

MISSOURI UNIVERSITY OF SCIENCE AND TECHNOLOGY

R. JOE STANLEY & ROBERT S. WOODLEY


Identify what is a digital system.

Identify, represent, and convert numbers using different number systems that are related to digital systems.

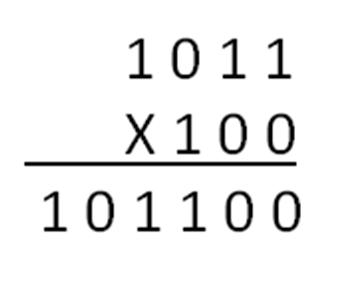

Perform arithmetic operations using different number systems that are related to digital systems.

Define digital systems

Define digital system design

Define and represent numbers using different number bases

Add, subtract, divide, and multiply numbers using different bases

Convert numbers between bases

Define and represent signed binary numbers using 2’s complement

Add, subtract, and multiply binary numbers using 2’s complement

Represent and use binary fractions

Define binary encoding schemes

Digital circuit design integrates user identifiable inputs with digital components to process the inputs for generating user identifiable outputs. User identifiable inputs may be keys on a keyboard, on/off switches, buttons on a remote control, temperature sensors, joysticks to perform movement, tire pressure sensors, door and window sensors for a security system, light or motion sensors, among many others. User identifiable outputs may be turning on/off light emitting diodes (LEDs), audible speaker sounds, turning on/off electric motors, turning on/off household appliances such as microwave ovens or washing machines, among many others. Note that the inputs use electrical components, analog and/or digital, that must be converted to a form that can be used in a digital system to generate the outputs. The outputs may require electrical components to represent the operation using the digital circuit.

Digital circuit design requires exposure to electronic circuits and devices used as inputs and outputs, as well as the digital components, which are much of the focus of this book! If you have ever taken off the cover of a remote control, cell phone, computer, furnace, electronic toys, etc., you were likely to see a green printed circuit board (PCB). An example of a PCB is shown in Fig. 1-1 below. The PCB has a number of components including the power supply, integrated circuits (digital components), connectors or user input/output peripherals. Some of these items are highlighted.

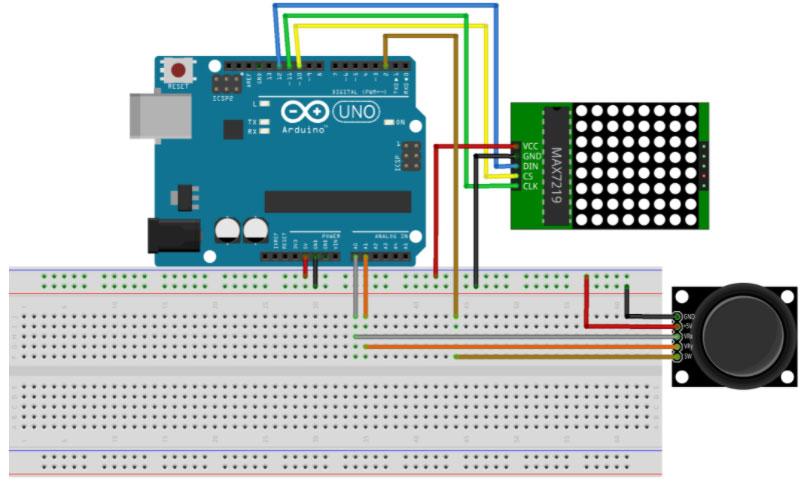

Fig. 1-1. Printed circuit board example with components¹

A circuit example with a joystick and 8x8 matrix display connected to an Arduino Uno computer board is shown in Fig. 1-2 below. The joystick and matrix display are connected to the Arduino Uno computer board using a prototyping device called a breadboard. A breadboard provides a row and column structured layout for wire connections between the different components in the circuit. Joysticks are analog input devices that use electrical components called potentiometers to report x and y axis position changes. These x and y potentiometers are connected to analog-to-digital converter (ADC) circuits to provide values that can be used in a digital circuit. The 8x8 matrix display is an output peripheral device that is used in this example circuit to display the joystick movements.

Extending the above illustrations, digital logic design involves the use and integration of electrical and physical components with electrical and computer properties such as power, current, voltage, logical operation, protocol or convention, and user interface.

This textbook will explore different facets of digital circuit design. We will begin in this chapter examining different data encoding schemes that can be used as inputs or outputs of digital circuits. The data encoding schemes also provide the basis for performing and interpreting data values in digital circuits.

Decimal: 0-9 (base 10)

Binary: 0, 1 (base 2)

Hexadecimal (hex): 0-9, A, B, C, D, E, F (base 16)

Octal: 0-7 (base 8)

In this section, the number systems commonly used in digital systems are examined, including the binary (base 2), decimal (base 10), hexadecimal (base 16), and octal (base 8) number systems, along with how to convert numbers from one base to another. The counting digits for each of these number systems (bases) are shown (right). Note that since hexadecimal is base 16, we represent the numbers 10 through 15 with the symbols A, B, C, D, E, F so that each position is occupied by only a single character.

Fig. 1-2. Digital system input examples²

With decimal as the base for number representation, a number can be expressed as a sum of powers of 10, as illustrated:

Power of 10 positions: 2 1 0 (above the digits 9 4 3)

$$943_{(10)} = 3 \times 10^0 + 4 \times 10^1 + 9 \times 10^2$$

Each base 10 digit corresponds to a power of 10 position, starting with the rightmost digit (to the left of the decimal point, which is implied in this case since this number is an integer) as $10^0$. The next digit to the left corresponds to the next power of the base, $10^1$, and so on, with the power of the base increasing from right to left. The value 943 decimal is the weighted sum, over all of the digit positions in the number, of each digit multiplied by base 10 raised to the power of that digit's position. The conversion of 943 decimal (base 10 given in parentheses) is shown above with the powers of 10 given above each decimal digit.

Below is an example for representing a binary (base 2) number and its conversion to decimal (base 10).

Power of 2 positions: 3 2 1 0 (above the digits 1 0 1 1)

$$1011_{(2)} = 1 \times 2^0 + 1 \times 2^1 + 0 \times 2^2 + 1 \times 2^3 = 1 + 2 + 8 = 11_{(10)}$$

The binary number 1011 is shown with powers of 2 above each digit and the associated weighted sum translating each binary digit and power of 2 value into decimal to obtain the decimal representation.
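The weighted-sum conversion described above can be sketched in a few lines of Python. This is a minimal illustration rather than code from the text; the function name `to_decimal` is a hypothetical choice, and only integer digit strings in bases 2 through 16 are assumed.

```python
def to_decimal(digits: str, base: int) -> int:
    """Weighted sum of each digit times the base raised to its position."""
    symbols = "0123456789ABCDEF"
    total = 0
    # Position 0 is the rightmost digit, so walk the string in reverse.
    for position, char in enumerate(reversed(digits.upper())):
        value = symbols.index(char)
        if value >= base:
            raise ValueError(f"digit {char!r} is not valid in base {base}")
        total += value * base ** position
    return total


print(to_decimal("943", 10))  # 943
print(to_decimal("1011", 2))  # 11
```

The same function handles octal and hexadecimal words, since only the base and the set of valid counting digits change.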

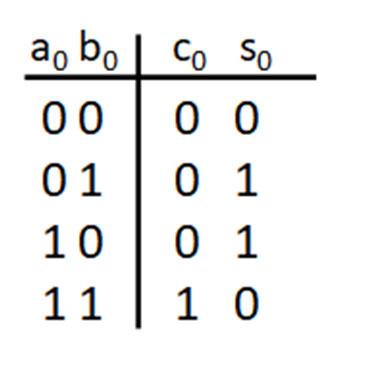

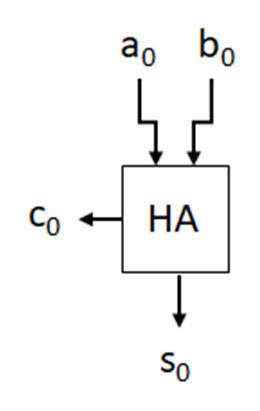

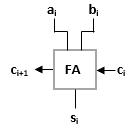

We now look at fundamental arithmetic operations, addition and subtraction, for number representation in different bases. Consider the following decimal addition example, 254 + 697. Starting with the rightmost digit position, add the decimal digits. Adding 4 and 7 gives 11. Since 11 is greater than or equal to the base 10, the base 10 is subtracted from the digit sum. So, 11 (digit sum) - 10 (base) = 1, which is a base 10 digit that is given as the sum digit with a carry of 1 to the next digit position to the left. Adding 5 and 9 and the carry of 1 from the previous digit addition gives a sum of 15. Since this sum is greater than or equal to the base 10, the base 10 is subtracted from the sum of 15 to get 5. The digit 5 is put in this sum position with a carry of 1 out to the next digit position. Finally, 2 and 6 and the carry of 1 are added for a sum of 9. Since 9 is less than the base 10, i.e., a valid digit for base 10, the base 10 does not need to be subtracted from 9, and there is no carry (or a carry out of 0) for the next digit position. The value 9 is the leftmost digit in the sum, giving 951.

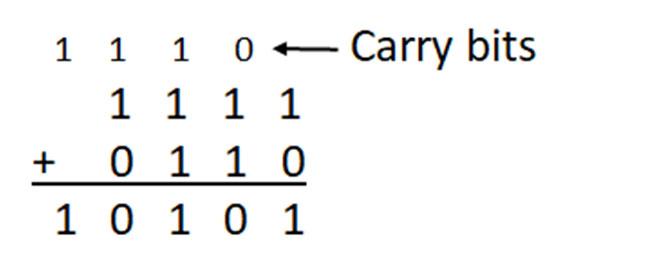

Consider applying the same approach to adding binary numbers. In the example below, 0111 (7 decimal) is added to 0011 (3 decimal). Adding the rightmost digits 1 and 1 gives 2. Two is greater than or equal to the base 2, so the base 2 is subtracted from the sum of 2 to give 0, which is put in this sum digit position, and 1 is carried to the next digit position to the left. For the next binary digit position (bit position), the digits 1 and 1 and the carry of 1 are added to give 3. The base 2 is subtracted from 3 to get a value of 1, which is put in this digit position for the sum, and a 1 is carried out to the next bit position. The process is repeated to generate the base 2 sum of 1010 (base 10 value of 10).

Accordingly, this approach is applied to adding numbers for different bases. Below are addition examples for octal and hexadecimal numbers, respectively.

Starting with the least significant (rightmost) position, 6+4 in octal equals 10 in decimal. Since 10 is above the highest counting digit (7) in octal, subtract the base 8 from the decimal sum value (10-8) to get the octal sum digit 2 with a carry of 1 to the next digit position. Adding the carry and octal digits in the next position (1+5+2) gives 8 in decimal. Since the sum is above 7, subtract the base 8 from the decimal sum value (8-8) to find the octal sum digit 0 with a carry of 1 to the next digit position. Adding the octal values 1 (carry)+7+0 yields 8, which is adjusted (8-8) to 0 with a carry out of the most significant digit of 1. The final sum is 1002 (octal).

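For hexadecimal addition, consider A5F+2CB. Again, start with the sum for the least significant digit, F+B, which is a decimal sum of 15+11=26. Since 26 is greater than the highest hex digit (F) decimal value (15), subtract the base 16 from the decimal sum value (26-16) to get 10, which is the hex sum digit A, with a carry of 1 to the next digit position. Adding the carry and the hex digits in the next position (1+5+C) gives 18 in decimal, which is adjusted (18-16) to the hex sum digit 2 with a carry of 1 to the next digit position. Adding 1 (carry)+A+2 yields 13 in decimal, which is the valid hex digit D with no carry out. The final sum is D2A (hexadecimal).

The process used to perform decimal number addition can be applied to other number bases. In a similar fashion, the process used to perform decimal number subtraction can be applied to other bases.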
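The digit-by-digit addition procedure (add the digits and the carry, subtract the base when the digit sum reaches the base, and carry 1 to the left) can be sketched as follows. This is an illustrative sketch, not the authors' code; `add_in_base` and `SYMBOLS` are hypothetical names, and non-negative integer operands in bases 2 through 16 are assumed.

```python
SYMBOLS = "0123456789ABCDEF"


def add_in_base(a: str, b: str, base: int) -> str:
    """Add two non-negative integers written as digit strings in `base`."""
    width = max(len(a), len(b))
    a, b = a.upper().zfill(width), b.upper().zfill(width)
    carry = 0
    result = []
    # Work from the least significant (rightmost) digit to the left.
    for digit_a, digit_b in zip(reversed(a), reversed(b)):
        digit_sum = SYMBOLS.index(digit_a) + SYMBOLS.index(digit_b) + carry
        if digit_sum >= base:
            digit_sum -= base  # bring the sum back into the valid digit range
            carry = 1
        else:
            carry = 0
        result.append(SYMBOLS[digit_sum])
    if carry:
        result.append("1")  # carry out of the most significant digit
    return "".join(reversed(result))


print(add_in_base("254", "697", 10))  # 951
print(add_in_base("0111", "0011", 2))  # 1010
print(add_in_base("756", "024", 8))  # 1002
print(add_in_base("A5F", "2CB", 16))  # D2A
```

The octal operands 756 and 024 are read off from the digit pairs in the octal walkthrough (6+4, 5+2, 7+0).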

Examples of subtraction for decimal, binary, octal and hexadecimal bases are shown below with borrowing to determine digit values.

Most digital systems use binary as the basis for operations performed. The following is terminology commonly used with binary values. Each individual binary digit is referred to as a bit. The number of bits, n, is denoted as an n-bit binary word. An 8-bit word is referred to as a byte. In an 8-bit word (as illustrated below), the upper 4 bits and lower 4 bits are designated as the upper (blue) and lower (yellow) nibbles, respectively.

Consider the 8-bit word 1110 1010. This value is unsigned by default, so the decimal equivalent is given as: $0 \times 2^0 + 1 \times 2^1 + 0 \times 2^2 + 1 \times 2^3 + 0 \times 2^4 + 1 \times 2^5 + 1 \times 2^6 + 1 \times 2^7 = 234$ decimal.

In the example above, the 8-bit binary word is converted to its decimal equivalent. In the context of working with other number systems, particularly those associated with digital systems, converting the values from other number systems to decimal is of interest for user (human) interpretation of values. In the encoding of numbers from other bases, the numbers may contain integer and/or fractional values. In converting decimal values to other bases, two iterative methods are presented:

1) Successive divisions to convert the integer portion of a decimal number.

2) Successive multiplications to convert the fractional portion of a decimal number.

The method of successive divisions is used to convert the integer portion of a decimal number to its representation in another base. The steps for this method to convert integer decimal values to binary (base 2) are:

a) Divide the integer decimal value by the base to convert (base 2 in this case). This division generates a quotient and a remainder. The remainders will be one of the valid counting digits for the base. For binary, the valid counting digits are 0 and 1. The quotient and remainder for the division are written down to be used in the next steps.

b) Repeat step a) until the quotient is 0.

c) Generate the converted binary number by writing the remainders in order from right to left. The first remainder is the rightmost bit, placed immediately to the left of the binary point, and each later remainder is placed to the left of the previous one. The final remainder is the leftmost bit of the formed binary word.

An example using the Successive Divisions approach to convert 29 (decimal) to binary is given below. For converting 29 decimal to binary using the successive divisions method, the resulting binary word is 11101. In order to verify that this binary representation is correct, sum the power of 2 positions times the associated binary weight. A shorthand way to get the decimal equivalent is to write the decimal equivalent of each power of 2 at its bit position and generate the weighted sum by multiplying the binary weight (0 or 1) by the associated decimal equivalent at each position: 16 + 8 + 4 + 0 + 1 = 29. This is shown in the lower right side of the figure above.

A second example is shown below to convert the decimal value 59 to its binary equivalent.

Convert $59_{10}$ to binary.

59/2 = 29, remainder 1 (Least Significant Bit)

29/2 = 14, remainder 1

14/2 = 7, remainder 0

7/2 = 3, remainder 1

3/2 = 1, remainder 1

1/2 = 0, remainder 1 (Most Significant Bit)

$59_{10} = 111011_2$

Convert $72_{10}$ to binary, octal, and hexadecimal.

Note that these methods can be applied to converting decimal integer numbers to any base. The following example (below, left) shows the successive divisions method applied to converting 72 decimal to binary, octal, and hexadecimal bases. In order to convert 72 decimal to octal, divide by 8 (the base) at each step to generate the octal remainders (values 0-7) to form the octal equivalent word. A similar approach is used to convert 72 decimal to hexadecimal (base 16).

Using the Successive Divisions method directly provides conversion from decimal to another base. For values in binary, octal, and hexadecimal, a direct approach can be used for converting among these bases.

1001000(2) = 72(10)
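The successive divisions steps can be sketched in Python as follows. This is a minimal illustration under stated assumptions, not code from the text: the function name `successive_divisions` is hypothetical, the input is a non-negative decimal integer, and target bases up to 16 are assumed.

```python
def successive_divisions(value: int, base: int) -> str:
    """Convert a non-negative decimal integer to a digit string in `base`."""
    symbols = "0123456789ABCDEF"
    if value == 0:
        return "0"
    remainders = []
    while value > 0:
        # Each division yields a quotient (reused next step) and a remainder,
        # which is always a valid counting digit for the base.
        value, remainder = divmod(value, base)
        remainders.append(symbols[remainder])
    # The first remainder is the least significant digit, so reverse the list.
    return "".join(reversed(remainders))


print(successive_divisions(29, 2))   # 11101
print(successive_divisions(59, 2))   # 111011
print(successive_divisions(72, 2))   # 1001000
print(successive_divisions(72, 8))   # 110
print(successive_divisions(72, 16))  # 48
```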

Successive Multiplications is one approach that can be used to convert fractional decimal values to other number bases. For mixed decimal numbers (numbers with integer and fractional components), the decimal integer conversion to base X is done using Successive Divisions, and the decimal fraction conversion to base X is performed with Successive Multiplications. Successive Multiplications uses the following steps to convert a decimal fractional number of the form 0.a:

a) Multiply the decimal fractional number by base X to give a number of the form b.yyyyy, where b is a counting digit for base X.

b) Separate the product from step a) into b and the remaining fractional number 0.yyyyy.

c) Repeat steps a) and b) using the remaining fractional number 0.yyyyy until one of the following conditions is met:

i. 0.yyyyy equals 0.

ii. 0.yyyyy repeats the value from a previous iteration, which shows that the converted fractional number is a repeating fraction.

iii. The desired number of bits has been reached for the converted fractional value.

d) Form the base X fractional number by starting with a radix point before the first counting digit b in the sequence of multiplications. Then, place the counting digits b in order to the right of the radix point going from left to right. So, the last counting digit b from the sequence of multiplications is the rightmost digit in the resulting fractional word in base X.

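Convert $0.6875_{10}$ to binary.

0.6875x2 = 1.375, digit 1 (Leftmost Bit)

0.375x2 = 0.75, digit 0

0.75x2 = 1.5, digit 1

0.5x2 = 1.0, digit 1 (Rightmost Bit)

$0.6875_{10} = 0.1011_2$

Convert $0.6875_{10}$ to octal.

0.6875x8 = 5.5, digit 5 (Leftmost Value)

0.5x8 = 4.0, digit 4 (Rightmost Value)

$0.6875_{10} = 0.54_8$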

A couple of examples follow to present the successive multiplications process.

In the first example, 0.6875 decimal is converted to binary (left, top). For this example, the successive multiplications approach is completed when the remaining fractional value is 0. The lower righthand portion of the example figure shows the translation of the binary fractional value to its decimal equivalent based on the power of 2 positions of the fractional bit values. The next example (left, bottom) presents converting the decimal fraction 0.6875 to octal using the successive multiplications method.

In the third example (below), the successive multiplications approach is applied to determine a binary fraction based on a desired number of bits to represent the decimal fractional value of 0.7 within an error limit of 10%.

Extending the successive multiplications method to the conversion of 0.7 decimal to binary, the calculations and resulting fractional binary word are shown (above, bottom). The successive multiplications method yields a repeating binary fractional value, as observed with the repeat of the decimal fractional value 0.4 in the iterative steps. The binary fractional value repeats 0110 if the successive multiplications steps are continued.

An interesting interpretation of the binary fractional value of 0.7 is that it is a repeating value, meaning that the decimal value of 0.7 cannot be represented exactly in binary. Approaches such as the IEEE 754 single and double precision floating-point standard are used to provide uniformity in the way that binary fractional values are represented, including the precision of those values.
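The successive multiplications steps, including the three stopping conditions (zero remainder, repeated remainder, digit limit), can be sketched as below. This is a hedged illustration rather than the text's own procedure in code form: the name `successive_multiplications` and the `max_digits` parameter are hypothetical, and Python's `fractions.Fraction` is used so that the decimal fraction is held exactly instead of as a binary floating-point value.

```python
from fractions import Fraction


def successive_multiplications(fraction: str, base: int, max_digits: int = 32):
    """Convert a decimal fraction such as "0.7" to digits in `base`.

    Returns (digits, repeat_start), where repeat_start is the index of the
    first digit of the repeating block, or None if no repeat was found.
    """
    symbols = "0123456789ABCDEF"
    remaining = Fraction(fraction)  # exact value, e.g. 7/10
    seen = {}                       # remaining fraction -> index of the digit it produces
    digits = []
    while remaining != 0 and len(digits) < max_digits:
        if remaining in seen:       # condition ii: the fraction repeats
            return "".join(digits), seen[remaining]
        seen[remaining] = len(digits)
        product = remaining * base
        digit = int(product)        # the counting digit b
        digits.append(symbols[digit])
        remaining = product - digit  # the remaining fraction 0.yyyyy
    return "".join(digits), None


print(successive_multiplications("0.6875", 2))  # ('1011', None)
print(successive_multiplications("0.6875", 8))  # ('54', None)
print(successive_multiplications("0.7", 2))     # ('10110', 1)
```

For 0.7 the result reads as 0.1 followed by the block 0110 repeating, since the repeat starts at digit index 1.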

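How many bits (binary) are required to represent $0.7_{10}$ with a 10% error limit?

Extending the successive multiplications process to convert 0.7 to binary:

0.7x2 = 1.4, digit 1 (Leftmost Bit)

0.4x2 = 0.8, digit 0

0.8x2 = 1.6, digit 1

0.6x2 = 1.2, digit 1

0.2x2 = 0.4, digit 0

0.4x2 = 0.8, digit 0 (repeat of line 2 from above, which gives a repeating binary sequence of 0110)

Binary fraction for 0.7: 0.10110 (with 0110 repeating)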
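One way to answer the bit-count question is to generate bits one at a time and stop once the truncated binary fraction is close enough to the target. The sketch below assumes the error limit means relative error, |target - approximation| / target, which is an assumption because the original worked figure is not reproduced here; the name `bits_for_error_limit` is hypothetical, and a nonzero target fraction is assumed.

```python
from fractions import Fraction


def bits_for_error_limit(fraction: str, limit: str, max_bits: int = 64):
    """Return (bits, approximation) for the shortest truncated binary
    fraction whose relative error is within `limit` (assumed definition)."""
    target = Fraction(fraction)
    tolerance = Fraction(limit)
    remaining = target
    approximation = Fraction(0)
    bits = ""
    for position in range(1, max_bits + 1):
        product = remaining * 2
        bit = int(product)                 # next bit from successive multiplications
        remaining = product - bit
        bits += str(bit)
        approximation += Fraction(bit, 2 ** position)  # weight 2**-position
        if abs(target - approximation) / target <= tolerance:
            return bits, approximation
    raise ValueError("error limit not reached within max_bits")


bits, value = bits_for_error_limit("0.7", "0.10")
print(bits, float(value))  # 1011 0.6875
```

Under this assumption, three bits (0.101 = 0.625) give a relative error of about 10.7%, so four bits (0.1011 = 0.6875, about 1.8% error) are the first to meet the limit.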

The final example (below) shows the conversion of a mixed decimal number to binary using the successive divisions and successive multiplications methods for the integer and fractional portions of the number, respectively, which are combined to provide the mixed binary representation of the number.

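Convert $38.25_{10}$ to binary.

Integer portion (successive divisions): $38_{10} = 100110_2$

Fractional portion (successive multiplications): $0.25_{10} = 0.01_2$

Binary representation: $100110.01_2$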

Converting decimal integer and fractional values to other bases has been shown using the successive divisions and successive multiplications approaches. In this section, conversions are presented for binary values to octal or hexadecimal values, and for octal to hexadecimal (and vice versa) value translations using binary representations.

The process to convert a binary value directly to octal uses the substitution of binary value combinations for each octal digit. The octal digits 0-7 require three-bit binary word combinations to represent each octal digit. Starting at the binary point in the binary word, place bits in groups of 3 going from right to left. If there are fewer than 3 bits in the leftmost group of bits, then append 0s on the left side to get the three-bit group. Then, translate each group of three bits to its corresponding octal digit. An example of the binary to octal conversion is shown (left, top). Note that the process for this example is for the integer conversion, grouping bits starting with the least significant bit and moving to the most significant bit. If the binary word has a fractional component, the process to convert the binary fraction to octal consists of starting at the binary point and placing bits in groups of three going from left to right. If the rightmost group has fewer than three bits, then append 0s on the right side to obtain a group of three. Then, translate each group of three bits to its corresponding octal digit.

Extending this example to convert the same binary number to hexadecimal (left, bottom), groups of four binary bits are converted to the associated hexadecimal digit. With 16 hexadecimal digits, there are $2^4$ binary combinations associated with these hexadecimal digits, giving four binary bits per hexadecimal digit. Start at the binary point with the rightmost bit of the integer portion of the binary number and place bits in groups of four going from right to left. If there are fewer than four bits in the leftmost grouping, then append 0s on the left to obtain a four-bit word. Convert each group of four bits to its associated hexadecimal digit and maintain the order of the hexadecimal digits to form the hexadecimal word.

The next example (below, left) shows conversion of the mixed binary word 0110111011000.101110101 to octal and hexadecimal representations using grouping of three- and four-bit words, respectively.

Convert $0110111011000.101110101_2$ to octal and hex.

Octal groups: 000 110 111 011 000 . 101 110 101

Octal value: 06730.565

Hex groups: 0000 1101 1101 1000 . 1011 1010 1000

Hex value: 0DD8.BA8

A final example (above, right) shows converting a hexadecimal value to octal using four-bit words to represent each digit to form the binary representation and, then, using the three-bit grouping process to form the octal values for the octal word.

Hex value: AFBC (four-bit words 1010 1111 1011 1100)

Binary word: 1010111110111100

Octal groups: 001 010 111 110 111 100

Octal value: 127674
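The grouping process for binary to octal or hexadecimal conversion can be sketched as follows. This is an illustrative sketch; the function name `regroup_binary` is hypothetical, and the input is assumed to be a string of 0s and 1s with an optional binary point.

```python
def regroup_binary(binary: str, bits_per_digit: int) -> str:
    """Convert a binary string to octal (3 bits) or hex (4 bits) by grouping."""
    symbols = "0123456789ABCDEF"
    integer, _, fraction = binary.partition(".")
    # Round each part up to a whole number of groups: pad the integer part
    # with 0s on the left and the fractional part with 0s on the right.
    integer = integer.zfill(-(-len(integer) // bits_per_digit) * bits_per_digit)
    fraction = fraction.ljust(-(-len(fraction) // bits_per_digit) * bits_per_digit, "0")

    def translate(bits: str) -> str:
        groups = [bits[i:i + bits_per_digit] for i in range(0, len(bits), bits_per_digit)]
        return "".join(symbols[int(group, 2)] for group in groups)

    result = translate(integer)
    if fraction:
        result += "." + translate(fraction)
    return result


word = "0110111011000.101110101"
print(regroup_binary(word, 3))  # 06730.565
print(regroup_binary(word, 4))  # 0DD8.BA8

# Hexadecimal to octal by way of binary: expand each hex digit to four bits,
# then regroup the bits in threes.
binary_word = "".join(format(int(digit, 16), "04b") for digit in "AFBC")
print(binary_word)                     # 1010111110111100
print(regroup_binary(binary_word, 3))  # 127674
```

Leading zeros are kept in the output so that each printed digit corresponds to one complete group of bits.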

r’s Complement (base r)

r’s complement of N = $r^n - N$

(r-1)’s complement of N = $r^n - r^{-m} - N$

r = base

n = number of integer digits

m = number of fractional digits

N = decimal number

In digital systems, r = 2 for the 2’s complement approach to represent signed numbers. The example below shows the 2’s complement representation for a 4-bit integer (n=4).

In this representation, the most significant bit is the sign bit for the number. Each bit in the 2’s complement representation has an associated power of 2 weight. So, the most significant bit is not only the sign bit (s) but also has a weight that contributes to the magnitude of the signed value. In the above example, the sign bit is in bit position 3 with a weighted value of $s \times (-1) \times 2^3$. When s = 1, the weight $2^3$ is multiplied by -1 to provide a negative component in representing the signed number. When s = 0, the weight $2^3$ is multiplied by 0 so that there is no negative component in representing the signed number. The other bits of the 2’s complement representation provide positive contributions to the weighted sum to yield the signed decimal number equivalent. In the above example, bit positions 0, 1, and 2 provide the terms $N_0 \times 2^0$, $N_1 \times 2^1$, and $N_2 \times 2^2$ that are added with the sign bit term to give the signed decimal number equivalent.

Consider the example of applying the r’s complement definition for r = 2 with the number N = 7. For this example, let n = 4 (4-bit 2’s complement word) and m = 0 (integer, no fractional component to the number). N = 7 is positive. N in binary is therefore N = 0111, where the weighted sum is $0 \times (-1) \times 2^3 + 1 \times 2^2 + 1 \times 2^1 + 1 \times 2^0 = 7$. This is the 2’s complement representation for positive 7 using a 4-bit word. Applying the 2’s complement definition to N = 7 gives $2^4 - 7 = 9$. In binary, 9 is 1001. As an encoded 4-bit 2’s complement value, the figure shows the decimal equivalent of the signed 2’s complement number, which is -7. From this example, applying the 2’s complement definition to a fixed bit number (designated by n) gives the negative of the number N. Applying the 2’s complement method is also referred to as taking the 2’s complement of a number. Taking the 2’s complement of a number in 2’s complement format is finding the negative of the number.

For example, using the r’s complement definition with r = 2 (binary) and n = 4, taking the r’s complement of N = 7 yields $2^4 - 7$ ($r^n - N$) or 9. In binary, 9 as a 4-bit value is 1001 (n = 4). The signed value associated with the 2’s complement value 1001 is $1 \times (-1) \times 2^3 + 1 \times 2^0 = -7$. Thus, taking the 2’s complement of a number is finding the negative of the number.
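The definition and the weighted-sum interpretation can be checked against each other with a short sketch. The function names `twos_complement` and `signed_value` are hypothetical, and only integer words (m = 0) are assumed here.

```python
def twos_complement(value: int, n: int) -> str:
    """r's complement with r = 2: the n-bit pattern of 2**n - value."""
    return format((2 ** n - value) % 2 ** n, f"0{n}b")


def signed_value(bits: str) -> int:
    """Weighted sum in which the most significant bit has weight -2**(n-1)."""
    n = len(bits)
    total = -int(bits[0]) * 2 ** (n - 1)        # sign bit term
    for position, bit in enumerate(reversed(bits[1:])):
        total += int(bit) * 2 ** position        # positive terms
    return total


print(twos_complement(7, 4))  # 1001
print(signed_value("1001"))   # -7
print(signed_value("0111"))   # 7
```

The modulo in `twos_complement` keeps the result within n bits, so that the 2's complement of 0 is the all-zeros word rather than an (n+1)-bit value.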

In the previous example, the 2’s complement definition (r’s complement with r = 2) was applied explicitly. The following presents a derivation of the algorithmic process to apply the 2’s complement definition.

Derivation of the process to take the 2’s complement

Using the 2’s complement and 1’s complement definitions for the example of N = 7, taking the 2’s complement of N for fixed values of n (number of integer bits) and m (number of fractional bits) involves the steps:

a) Take the 1’s complement of the binary value of N by flipping the bits of N (replacing 1s with 0s and replacing 0s with 1s).

b) Add $2^{-m}$ (since m = 0, $2^{-m} = 1$) to the 1’s complement value to obtain the 2’s complement of N.

Performing these steps yields the same value for the 2’s complement as found by explicitly applying the 2’s complement definition.
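The two steps follow directly from the r's and (r-1)'s complement definitions with r = 2. The short derivation below is a reconstruction from those definitions rather than a copy of the original figure. It relies on the fact that $2^n - 2^{-m}$ is the word with all n integer bits and all m fractional bits set to 1, so subtracting N from it flips every bit of N.

```latex
\begin{aligned}
\text{2's complement of } N &= 2^n - N \\
\text{1's complement of } N &= 2^n - 2^{-m} - N \\
2^n - N &= \underbrace{\left(2^n - 2^{-m} - N\right)}_{\text{1's complement (flip the bits)}} + 2^{-m} \\[4pt]
\text{Example } (N = 7,\ n = 4,\ m = 0):\quad 2^4 - 2^0 - 7 &= 8 = 1000_2 \\
8 + 2^0 &= 9 = 1001_2
\end{aligned}
```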

The previous example applied the 2’s complement definition to integers (m = 0). The 2’s complement representation can also be applied to numbers with fractional bits for signed number representation using the general r’s and (r-1)’s complement definitions for nonzero m. The following (below) presents an example of representing a fractional binary value and applying (taking) the 2’s complement.

Taking the 2’s complement of fractional values (worked example figure): any carry out of the most significant bit is truncated/discarded. The example shows that taking the 2’s complement of N is finding the negative of the number for the specified n and m.

Note from the example above that taking the 2’s complement of a binary word is finding the negative of the decimal value equivalent with or without a fractional component to the number. Taking the 2’s complement of a binary word involves the steps:

a) Flipping the bits of the binary word (1’s complement).

b) Adding $2^{-m}$ (m is the number of fractional bits in the number).

These steps apply whether or not the 2’s complement format number has fractional bits. Translating the 2’s complement form of the binary word to decimal is done using the most significant bit (leftmost bit) as the sign bit with a weight of $-2^{n-1}$, which is the same whether or not there are fractional bits in the number. The fractional bits are added to the sum with weights of $2^{-1}$, $2^{-2}$, etc., starting at the binary point and going from left to right.
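The flip-and-add steps for a word with fractional bits can be sketched as below. The word 0101.10 (5.5 decimal, n = 4, m = 2) is an illustrative value chosen here, not the example from the original figure, and the function names are hypothetical. The word is treated as an integer scaled by $2^m$, so adding $2^{-m}$ becomes adding 1 to the scaled word.

```python
def negate_fixed_point(bits: str) -> str:
    """Take the 2's complement of a word such as '0101.10' (n = 4, m = 2)."""
    integer, _, fraction = bits.partition(".")
    n, m = len(integer), len(fraction)
    word = int(integer + fraction, 2)        # the value scaled by 2**m
    flipped = word ^ (2 ** (n + m) - 1)      # step a: flip every bit
    negated = (flipped + 1) % 2 ** (n + m)   # step b: add 2**-m, discard carry out
    out = format(negated, f"0{n + m}b")
    return out[:n] + ("." + out[n:] if m else "")


def fixed_point_value(bits: str) -> float:
    """Decimal value with sign bit weight -2**(n-1); assumes n >= 1."""
    integer, _, fraction = bits.partition(".")
    n, m = len(integer), len(fraction)
    word = int(integer + fraction, 2)
    if integer[0] == "1":
        word -= 2 ** (n + m)                 # sign bit contributes -2**(n-1) after scaling
    return word / 2 ** m


print(negate_fixed_point("0101.10"))  # 1010.10
print(fixed_point_value("1010.10"))   # -5.5
```

Checking by hand, 1010.10 is -8 + 2 + 0.5 = -5.5, the negative of 0101.10.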

Steps (subtracting 38 from 61 using 8-bit 2’s complement):

1) Using successive divisions, the binary forms are 61 = 111101 and 38 = 100110.

2) The 8-bit representations are 61 = 00111101 and 38 = 00100110.

3) Take the 2’s complement of 38 (n = 8, m = 0, N = 38, r = 2): 11011001 (1’s complement of 38) + 1 ($2^0$) = 11011010 (2’s complement of 38).

4) Add 61 to the 2’s complement of 38:

Carries: 1 1111

00111101 (61)

+ 11011010 (-38)

= 1 00010111 (23)

Discard the carry out of the most significant bit (n = 8).

5) Subtraction solution using n = 8, m = 0:

Solution: 00010111 (23)

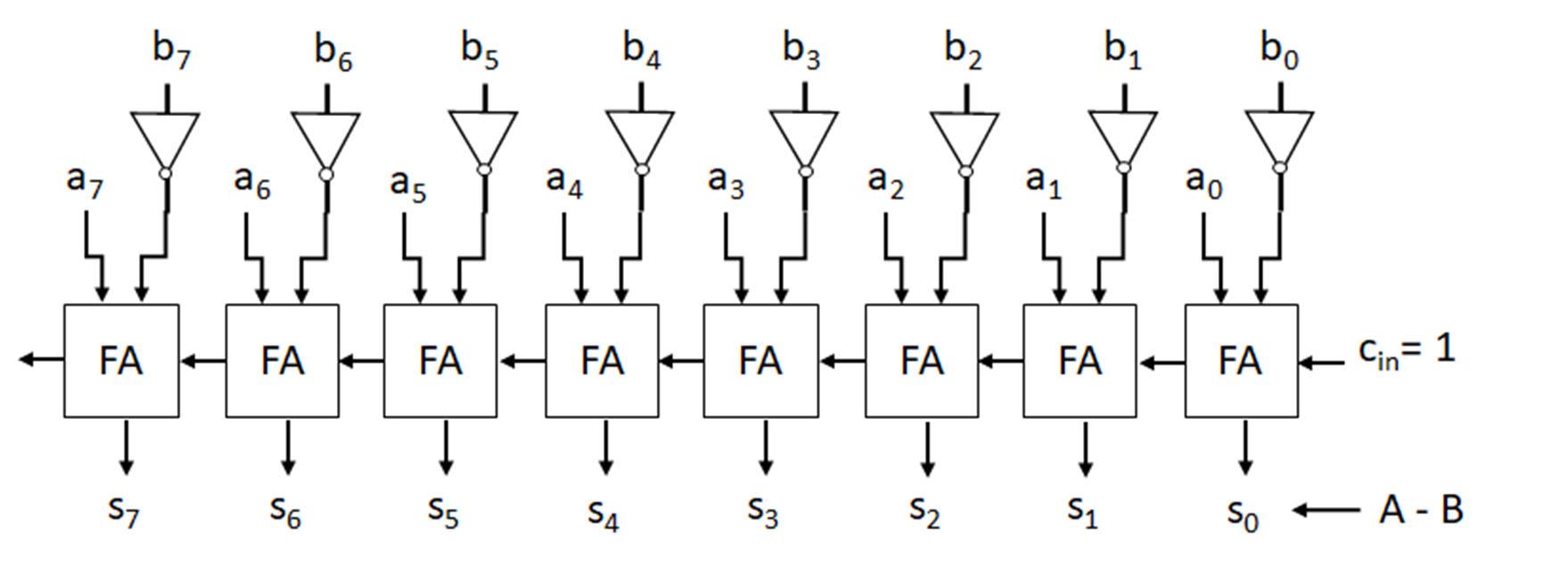

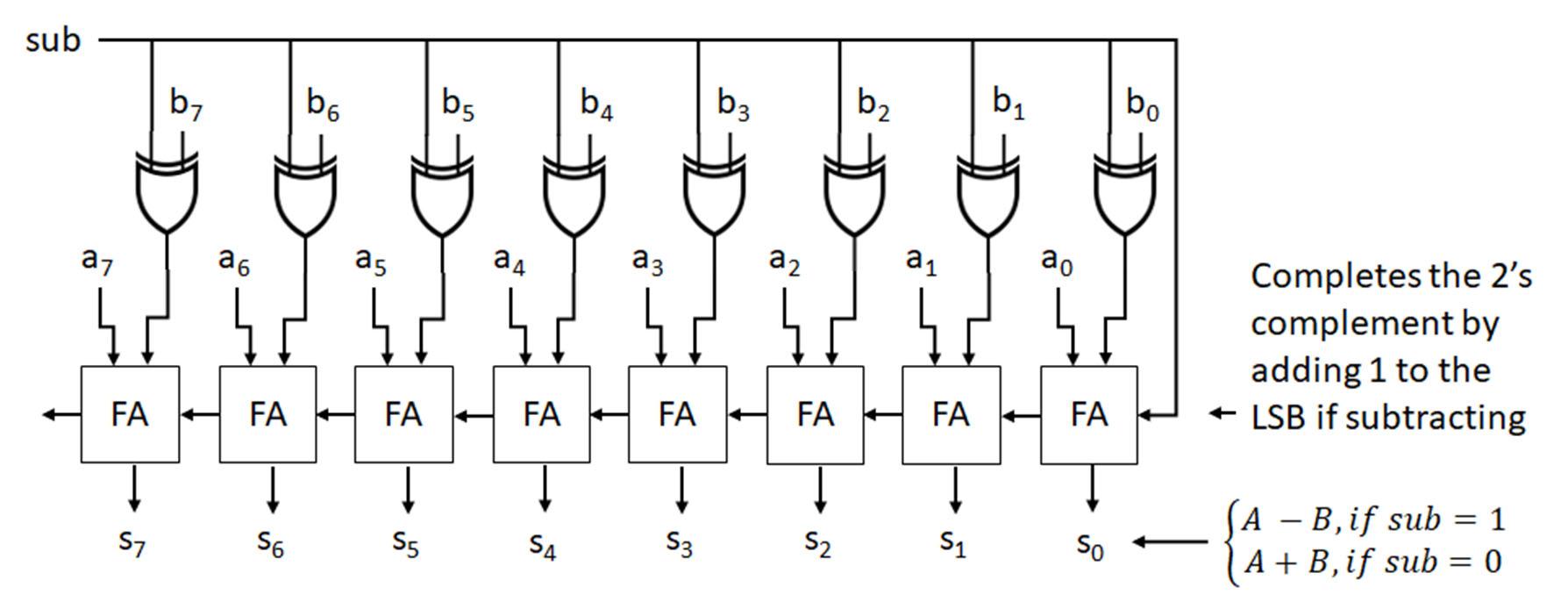

In digital systems, the 2’s complement format and operations are most commonly used for signed addition and subtraction operations. The figure (left) shows the 2’s complement setup for performing subtraction, which is given as substituting the subtraction operation A-B with adding the negative of B to A (A+(-B)). Negative B is obtained by taking the 2’s complement of B, and this value is added to A. For 2’s complement arithmetic operations including addition and subtraction, both numbers must be expressed in 2’s complement form using the same number of bits (n). When performing the arithmetic operation, the carry out of the most significant bit is discarded, as the result must also be n bits.

An example 47-56 is shown (below) performing 2’s complement subtraction using an 8 bit (n = 8, m = 0) 2’s complement representation for the numbers. For 8 bit 2’s complement representation, the decimal numbers for the subtraction (A and B) are found in binary using a method such as successive divisions. If the binary conversions for these numbers are fewer than 8 bits, 0s are padded to 8 bits to give positive representations. Then, the 2’s complement of the number to be subtracted (2’s complement of the binary number for B) is taken to give the negative of the number.

47 - 56 = -9

47 -> 00101111

56 -> 00111000

2’s complement of 56 = 11000111 (1’s complement) + 1 = 11001000 (-56), with carries of 1 out of the three rightmost bit positions

A is added to -B to determine the difference. The carry out of the most significant bit is discarded, so that the difference is in 8 bit 2’s complement format.

00101111 (47)

+ 11001000 (-56)

= 11110111 (-9) (solution is 8 bits)
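The subtraction setup A + (-B) with a discarded carry can be sketched as follows. This is a minimal sketch assuming both operands are non-negative integers that fit in n bits; the name `subtract_twos_complement` is hypothetical.

```python
def subtract_twos_complement(a: int, b: int, n: int = 8) -> str:
    """Compute a - b as an n-bit 2's complement word via a + (-b)."""
    mask = 2 ** n - 1                      # n-bit word of all 1s
    negated_b = ((b ^ mask) + 1) & mask    # flip the bits of b, then add 1
    total = (a & mask) + negated_b
    return format(total & mask, f"0{n}b")  # discard the carry out of the MSB


print(subtract_twos_complement(47, 56))  # 11110111  (-9)
print(subtract_twos_complement(61, 38))  # 00010111  (23)
```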

Binary words can be interpreted as signed or unsigned numbers. Consider the 8-bit binary word 10110001. The unsigned translation of this word is $1 \times 2^0 + 0 \times 2^1 + 0 \times 2^2 + 0 \times 2^3 + 1 \times 2^4 + 1 \times 2^5 + 0 \times 2^6 + 1 \times 2^7 = 1+16+32+128 = 177$ decimal. The signed 2’s complement translation of this word is $1 \times 2^0 + 0 \times 2^1 + 0 \times 2^2 + 0 \times 2^3 + 1 \times 2^4 + 1 \times 2^5 + 0 \times 2^6 + 1 \times (-1) \times 2^7 = 1+16+32-128 = -79$ decimal. As we will see in later chapters, digital systems perform operations with unsigned and signed numbers.

For 8-bit 2’s complement words, the most positive integer value is 01111111 (127 decimal). The most significant bit is the sign bit in 2’s complement format. With the most significant bit as 0, there is no negative term for this number, and the remaining bits are positive terms for the sum in determining the decimal equivalent. The value 00000000, for 0 decimal, is encoded as a positive number with a lead bit of 0. The most negative value, 10000000, is $1 \times (-1) \times 128 + 0 = -128$, with the sign bit as a 1 (negative) and 0s for the remaining bits to provide no positive terms in the sum. So, the 8-bit 2’s complement value range is 01111111 (127) to 10000000 (-128). The 2’s complement signed value range differs depending on the number of bits in the binary word. The unsigned value range for an 8-bit word is 0 for 00000000 to 255 for 11111111, where the most significant bit has a weighted term of $1 \times 2^7$ (128).

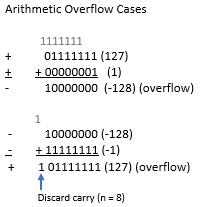

The binary word size of the operand values provides a value range for signed or unsigned arithmetic operations. Consider the addition of the 8-bit values 00000001 + 01111111 = 10000000. For unsigned addition, the sum is 1+127 = 128, which is within the range of the 8-bit unsigned numbers (0-255). For 2’s complement signed addition, 00000001 and 01111111 are positive 2’s complement values, 1 and 127, respectively. The sum, 10000000, is -128 as an 8-bit 2’s complement value. The expected sum is positive 128, which is not a valid positive number in 8-bit 2’s complement form. This condition where the result of the arithmetic operation goes outside of the valid value range is called arithmetic overflow.

Cases of arithmetic overflow are shown in the figure (right). From the figure, 2’s complement signed value arithmetic overflow can occur when adding two positive values, which can result in a sum that exceeds the maximum positive value in the value range. Arithmetic overflow can also occur when adding two negative values, which can result in a sum that is below the most negative value in the value range. Adding a positive value and a negative value, in either order, will not exceed the maximum positive or negative value range in 2’s complement signed format. Understanding how to interpret the results of signed and unsigned arithmetic operations is important because digital systems are designed with a limited, fixed number of bits for number representation.
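The overflow cases described above reduce to a sign-bit check: overflow occurs only when both operands have the same sign bit and the sum's sign bit differs. The sketch below illustrates that check; the name `add_signed` is hypothetical, and both operands are assumed to be 2's complement bit strings of the same length.

```python
def add_signed(a_bits: str, b_bits: str):
    """Add two n-bit 2's complement words; return (sum_bits, overflow)."""
    n = len(a_bits)
    total = (int(a_bits, 2) + int(b_bits, 2)) % 2 ** n  # discard carry out
    sum_bits = format(total, f"0{n}b")
    # Overflow: operands share a sign bit, but the sum has the other sign bit.
    overflow = a_bits[0] == b_bits[0] and sum_bits[0] != a_bits[0]
    return sum_bits, overflow


print(add_signed("00000001", "01111111"))  # ('10000000', True)   1 + 127
print(add_signed("10000000", "11111111"))  # ('01111111', True)   -128 + -1
print(add_signed("00101111", "11001000"))  # ('11110111', False)  47 + -56
```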

Digital systems interface with a variety of human interaction devices and applications using binary encoding schemes. A couple of examples of those schemes are presented here. Binary Coded Decimal (BCD) is an encoding scheme that represents the decimal values 0-9 for displays such as seven segment displays that are commonly used in household appliances, clocks, etc. The BCD scheme requires a 4-bit binary word to represent the 10 decimal digits 0-9 with words 0000-1001. The combinations 1010-1111 do not represent valid single digit decimal values, so are designated as not used.

BCD values may be used in operations such as addition. An example of adding the BCD values 8+6 is shown (right, top). From the example, adding decimal values 8+6 yields 14 which is 1110. This value is not a valid BCD combination. There are 6 binary combinations that are not valid BCD combinations for decimal values above 9. In order to translate a value above 9 into BCD format, add 6 to the value to remap the value into the BCD value range. Adding 6 creates a carry out of the most significant bit to yield two BCD values. For this example, adding 6 (0110) to 14 (1110) gives 0100 (4) with a carry out of 1. The BCD sum is 1 0100 for BCD values 1 4, which is the sum of 8+6 in decimal.
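The add-6 correction for a single BCD digit position can be sketched as follows. This is an illustrative sketch, with the hypothetical name `bcd_add_digits`, assuming both inputs are single decimal digits 0-9.

```python
def bcd_add_digits(a: int, b: int):
    """Add two BCD digits; return (carry, 4-bit BCD sum digit)."""
    if not (0 <= a <= 9 and 0 <= b <= 9):
        raise ValueError("BCD digits must be in the range 0-9")
    total = a + b
    if total > 9:
        total += 6            # skip the six unused combinations 1010-1111
    carry = total >> 4        # carry out of the 4-bit digit
    digit = total & 0b1111    # low four bits are the BCD sum digit
    return carry, format(digit, "04b")


print(bcd_add_digits(8, 6))  # (1, '0100')  -> BCD digits 1 4
print(bcd_add_digits(3, 4))  # (0, '0111')  -> BCD digit 7
```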

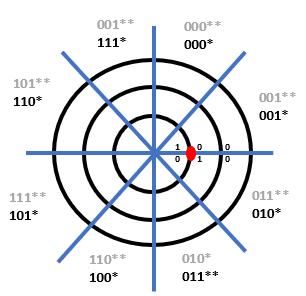

The second binary encoding scheme presented here is Gray code. Gray codes are commonly used for data encoding in electromechanical systems such as instrumentation and robotic positioning. The tip of a positioning sensor is shown in Fig. 1-3 (right, bottom). The analog sensor values correspond to a position in one of eight octants. Concentric circles partition the tip positioning with individual bits assigned to each region for labeling positions using octants with 3-bit combinations. Let the octants be labeled 000 (0), 001 (1), 010 (2), 011 (3), 100 (4), 101 (5), 110 (6), and 111 (7) going in a clockwise direction (given in black and denoted with *). Let the sensed value be represented as the red dot shown on the figure below. The sensed value is near bit boundaries where the binary combinations of the bit position may be encoded as 000, 001, 010, or 011. The variations of the bit encoded combinations may differ by up to two bits (such as 001 and 010, or 000 and 011). With adjacent positions having labels that may differ by more than one bit, there may be discontinuities in the sensed positions. The possible encoded value of 011 is not in the actual 011 octant. Accordingly, an octant labeling scheme is needed with adjacent octant positions differing by a single bit.

Gray codes provide an example of such an encoding scheme. The rules to form Gray codes with any number of bits are:

1) The 1-bit Gray code has two codewords: 0, 1.

2) The first 2^n codewords of the (n+1)-bit Gray code are the n-bit Gray codewords written in order, each with a leading 0 prepended.

3) The last 2^n codewords of the (n+1)-bit Gray code are the n-bit Gray codewords written in reverse order, each with a leading 1 prepended.

Applying these rules, the determined 1-bit, 2-bit, and 3-bit Gray codes are shown below. The Gray code labels assigned to each octant in the sensor positioning example are given in red. From the detected position represented as a dot in the sensor positioning example, the bit boundaries give the possible labeled octant combinations of 001 and 011. These combinations differ by a single bit, allowing adjacent positions to be maintained as valid labels for the actual detected position. The 3-bit Gray code labeling for the sensor tip position example in Fig. 1-3 is shown in gray and denoted with **. Gray codes are also useful in the analysis of logic equations using Karnaugh maps, which will be examined in Chapter 3.
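The three construction rules can be sketched as a short Python function. This is a minimal illustration; the names `gray_code` and `bits_differing` are hypothetical helpers, not part of the textbook.

```python
def gray_code(n):
    """Return the n-bit Gray codewords, in order, as bit strings."""
    if n < 1:
        raise ValueError("n must be at least 1")
    codes = ["0", "1"]                                   # rule 1: 1-bit Gray code
    for _ in range(n - 1):
        first_half = ["0" + c for c in codes]            # rule 2: in order, leading 0
        last_half = ["1" + c for c in reversed(codes)]   # rule 3: reversed, leading 1
        codes = first_half + last_half
    return codes


def bits_differing(a, b):
    """Count the bit positions in which two equal-length codewords differ."""
    return sum(x != y for x, y in zip(a, b))


for n in (1, 2, 3):
    print(n, gray_code(n))
```

For n = 3 the sketch yields 000, 001, 011, 010, 110, 111, 101, 100, and `bits_differing` can be used to confirm that each neighboring pair, including the wrap from the last codeword back to the first, differs in exactly one bit.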

1Developed by Roger Younger at Missouri University of Science and Technology.

2https://exploreembedded.com/wiki/Analog_JoyStick_with_Arduino. Accessed May 12, 2022.

Formulate logic expressions

Implement expressions using logic gates

Express and manipulate expressions using Boolean algebra

Identify basic logic operations and associated logic symbols

Identify logic identities and algebraic laws

Determine logic functions and truth tables

Use Boolean algebra to write combinatorial functions

Simplify logic expressions using Boolean algebraic reduction

Identify complete logic sets

Draw logic diagrams from combinatorial functions

Find a function from the logic diagram

Identify integrated circuits associated with logic gates

Formulate logic functions for hardware implementation

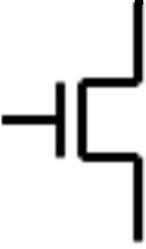

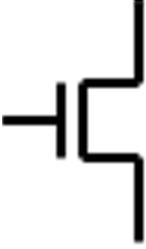

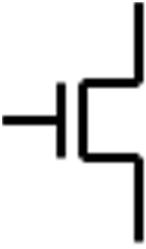

Digital systems use a variety of inputs and outputs with digital components to perform required operations. The inputs, outputs, and operations conventionally use binary values represented electrically. Analog inputs are converted to binary values based on voltage ranges prescribed by the technology used in the digital components. The outputs of the digital components are binary values in voltage ranges for the prescribed digital component technology. Examples of digital component logic families include Resistor-Transistor Logic (RTL), Transistor-Transistor Logic (TTL), Emitter-Coupled Logic (ECL), and Complementary Metal-Oxide-Semiconductor (CMOS). Within each logic family, there are variations of IC implementations based on speed, power usage, power supply voltage, input/output voltage ranges for logical 0s and 1s, and physical size, among others. The purposes of examining logic families in this textbook are to: 1) illustrate digital circuit implementation considerations and 2) highlight logic gate configuration.

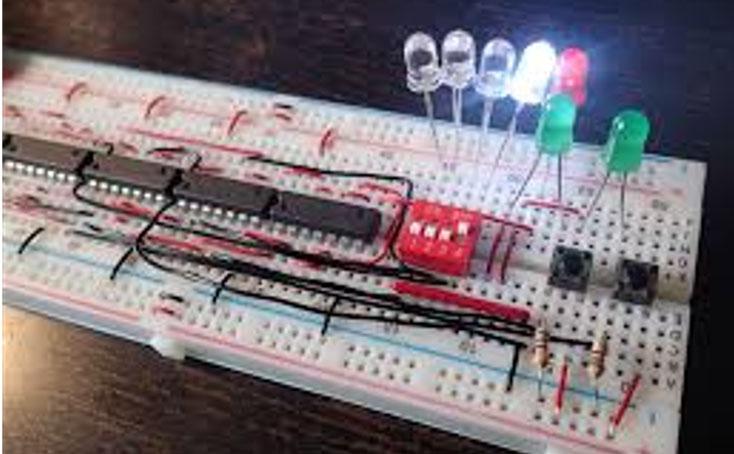

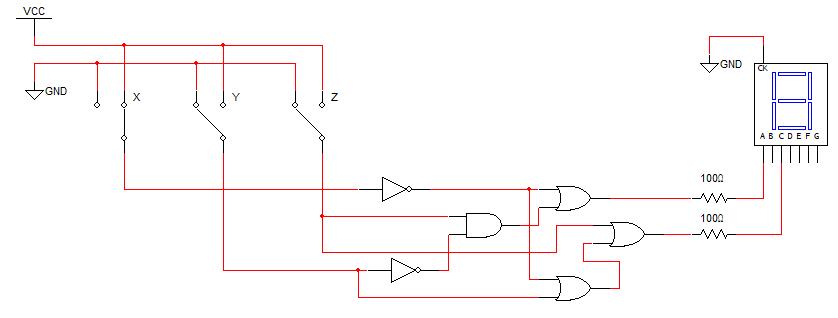

Fig. 2‐1. Digital circuit implementation using a breadboard with digital components1

Fig. 2-1 above presents an example of a digital circuit. The circuit input is three switches (there are actually four switches, but only three are connected) with the output having seven light emitting diodes (LEDs) that are configured in a 7-segment display. The switch inputs, with a specific combination of the switch values as on or off, are connected (wired) to electrical components (ICs) to perform logical operations to generate logical outputs that turn on/off the individual LEDs to form the number 5 in the 7-segment display. This digital circuit is implemented on a prototyping platform called a breadboard. The breadboard has organized holes, called slots, where the pins of ICs may be inserted into the board. The column groups of five slots are electrically connected in the breadboard so that wires and/or pins with the same logical or electrical value may be placed in the same column group. Also, notice that there are several (highlighted in yellow) column groups of two slots with red or blue horizontal bars across the breadboard. The horizontal bars are referred to as rails, with all slots in the same horizontal row sharing the same electrical value. Conventionally, the power supply value, typically 5 V, and the reference (also called ground), 0 V, are connected (wired) from the power supply to the different rails to provide power and ground connection points for the circuit inputs, digital components, and outputs for the digital circuit.

The purposes of this example are to: 1) highlight that the end goal of digital circuit design is prototyping and implementation, 2) provide the context for the design of digital circuits, and 3) provide the context for the usage of digital components in digital circuits. The primary focus of this textbook is to develop an understanding of the theoretical, mathematical, and logical core concepts needed to design and apply digital components with specific operations for digital circuit design. Digital components, their functionality, utilization, and application in digital systems will be presented.

Beginning in this section, the underlying digital logic is presented for digital components, logic operations, and logic expressions. The fundamental logic operations used in designing digital circuits include NOT (inverter), AND, OR, EXCLUSIVE-OR, EXCLUSIVE-NOR, NAND, and NOR. Logic operations utilize binary variables, which can take on the values 0 and 1. In digital systems, binary variables take on the form of analog inputs such as switches, buttons, window sensors, door sensors, etc. Analog inputs such as these have voltage ranges between the power supply value and the reference value, commonly referred to as ground. Voltage ranges, depending on the IC logic family technology, are translated into binary values that are interpreted as logical 0s and 1s. Digital circuits receive the analog voltages for the inputs, interpret them as 0s and 1s within the circuit, and perform the logical operation within the circuit to generate an interpreted 0 or 1 logical output represented as an analog voltage. Combinations of one or more binary variables are used to express each logic operation. A truth table provides all input binary variable combinations with the associated output of the logic operation. The logic operations with truth tables and logic gate representations are presented in the next section. Other than the NOT operation, which has one input variable, all logic operations are introduced with two input variables.

Given a binary variable A that can take on the values 0 and 1, the NOT operation is defined as:

if A = 0, then A’ = 1; if A = 1, then A’ = 0

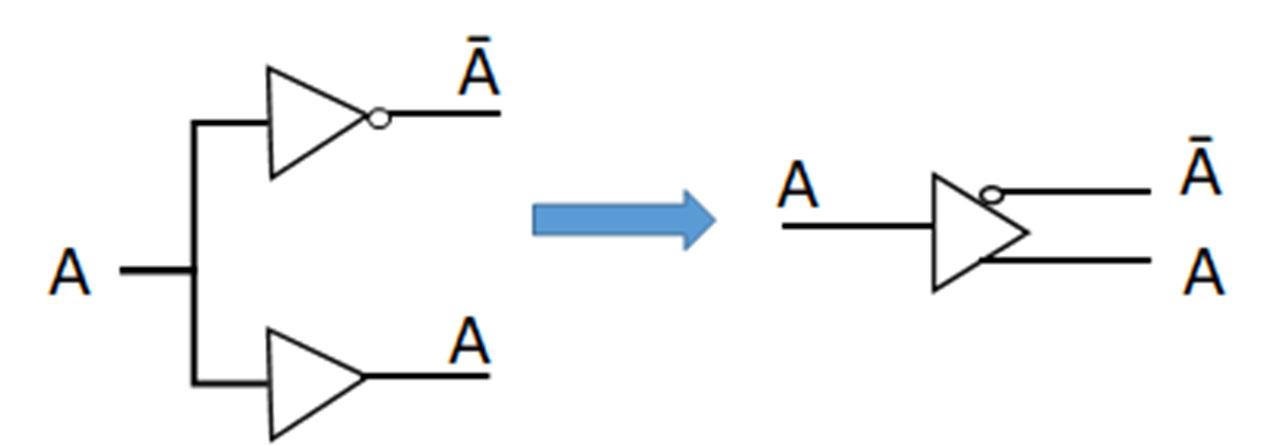

As the notation shows, there are several operators that refer to the NOT operation. This textbook will primarily use the first two forms. In digital logic, the NOT operation is also referred to as INVERT and COMPLEMENT. Inverting a variable means applying the NOT operation to the variable. The truth table for the NOT operation with the input variable A, and the logic symbol for the NOT operation, more commonly referred to as the inverter, are given as follows.

The bubble shown on the inverter is commonly used in digital logic to denote the NOT operation.

Using two binary variables (A,B), the logical OR operation is defined as:

A OR B = 1 if A = 1 or B = 1 (or both); otherwise, A OR B = 0

and is expressed as A+B = A∨B. The truth table for the two variable OR operation and the associated OR gate logic symbol are given below.

Using two binary variables (A,B), the logical AND operation is defined as:

A AND B = 1 if A = 1 and B = 1; otherwise, A AND B = 0

and is expressed as A∙B = AB = A∧B. The truth table for the AND operation and associated logic symbol are shown below.

Using AND, OR, and INVERT gates, any arbitrary logic network or function can be implemented. Any set of logic gates that can implement any arbitrary logic function is referred to as a complete logic set.
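The NOT, OR, and AND definitions above can be tabulated with a short Python sketch. The function names `NOT`, `OR`, `AND`, and `truth_table` are illustrative choices, not notation from the textbook; 0 and 1 are modeled as Python integers.

```python
from itertools import product


def NOT(a):
    return 1 - a      # 0 -> 1, 1 -> 0


def OR(a, b):
    return a | b      # 1 if either input (or both) is 1


def AND(a, b):
    return a & b      # 1 only if both inputs are 1


def truth_table(gate, n_inputs):
    """List every input combination with the gate output, in binary counting order."""
    return [(bits, gate(*bits)) for bits in product((0, 1), repeat=n_inputs)]


for name, gate, n in (("NOT", NOT, 1), ("OR", OR, 2), ("AND", AND, 2)):
    print(name)
    for bits, out in truth_table(gate, n):
        print(" ", *bits, "->", out)
```

Listing the rows in binary counting order (00, 01, 10, 11) matches the usual truth table layout.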

There are additional logic gates that are commonly used to implement logic functions in digital circuits. The NAND operation is interpreted as NOT-AND, which is expressed as (AB)’.

The truth table and associated logic symbol for NAND are given as:

The NOR operation is interpreted as NOT-OR, which is logically expressed as (A+B)’.

The truth table and associated logic symbol for two input NOR operation are:

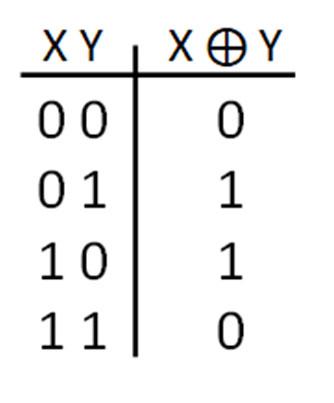
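As a minimal sketch, NAND and NOR can be modeled as the complements of AND and OR. The second half of the block goes one step beyond the text above: it illustrates the standard result that NAND by itself forms a complete logic set, by rebuilding NOT, AND, and OR from NAND alone. All function names are hypothetical.

```python
from itertools import product


def NAND(a, b):
    return 1 - (a & b)    # NOT-AND


def NOR(a, b):
    return 1 - (a | b)    # NOT-OR


# NOT, AND, and OR rebuilt using only NAND gates
def not_from_nand(a):
    return NAND(a, a)


def and_from_nand(a, b):
    return not_from_nand(NAND(a, b))


def or_from_nand(a, b):
    return NAND(not_from_nand(a), not_from_nand(b))


print("A B | NAND NOR")
for a, b in product((0, 1), repeat=2):
    print(a, b, "|", NAND(a, b), "  ", NOR(a, b))
```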

The Exclusive-OR (XOR) operation is interpreted for the two-variable case as:

if A = 1 OR B = 1 but not both, then the XOR is 1.

Logically, the XOR operation is shown as: A⨁B. The truth table and gate symbol for the XOR gate are given below.

All of the logic operators presented to this point, except for the inverter, have been shown with two inputs. The OR, AND, NAND, NOR, and XOR gates are also available as IC components with more than two inputs. Consider the XOR gate with three inputs ABC. Its truth table is shown below. In order to find the XOR gate outputs for each combination of ABC, the outputs for the two-input XOR of A and B are first found for all combinations of ABC (truth table shown to the left). Since C does not affect the XOR of AB, the XOR entries for AB are the same for both C = 0 and C = 1. After finding A⨁B, the A⨁B⨁C entries are determined by XORing the A⨁B entries with the associated input values of C (XOR the column entries with the downward arrows shown on the truth table). Inspecting the truth table entries for A⨁B⨁C, A⨁B⨁C is 1 whenever the number of input variables ABC with a 1 value is odd. The ABC input combination entries in the truth table with an odd number of variables with 1 values are highlighted with left-pointing arrows by the truth table (with the total number of 1 values for ABC). Thus, the XOR is an odd function. If you had an XOR gate with 20 input variables and an odd number of those variables are 1s, the output of the XOR gate is 1.

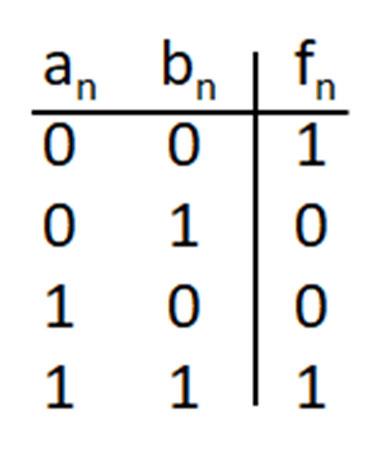

The Exclusive-NOR operation is NOT-Exclusive-OR, denoted as XNOR. The logical operator for the XNOR gate is given as (A⨁B)’ = A⊙B. The truth table for the XNOR gate is shown below. Since the XOR operator is an odd function and the XNOR operator is the complement of the XOR operator, the XNOR operator outputs a 1 whenever there is an even number of input variables with 1 values. Thus, the XNOR is an even function.

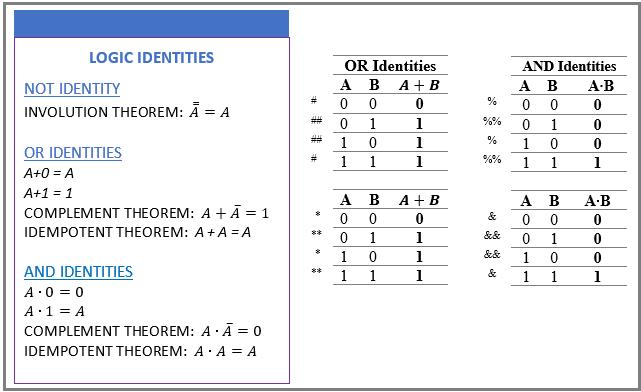
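The odd and even behavior of multi-input XOR and XNOR can be checked with a short Python sketch. The helper names `xor_n` and `xnor_n` are hypothetical; the multi-input XOR is modeled by chaining the two-input XOR across all inputs, as in the three-input example above.

```python
from functools import reduce
from itertools import product
from operator import xor


def xor_n(*bits):
    """Multi-input XOR: chain the two-input XOR across all inputs."""
    return reduce(xor, bits)


def xnor_n(*bits):
    """Multi-input XNOR: the complement of the multi-input XOR."""
    return 1 - xor_n(*bits)


print("A B C | ones XOR XNOR")
for a, b, c in product((0, 1), repeat=3):
    print(a, b, c, "|", a + b + c, "  ", xor_n(a, b, c), "  ", xnor_n(a, b, c))
```

The same functions accept any number of inputs, so the 20-input case mentioned above can be tried directly, for example with seven 1s and thirteen 0s.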

In this section, logic identities are presented to utilize logic gates for designing and implementing digital circuits. The Logic Identities provide the basis to manipulate individual logic variables (shown in Fig. 2-2 below). The involution theorem, (A’)’ = A, logically shows the application of the NOT operator twice to a logic variable. Let A = 0. By the involution theorem, (0’)’ = 1’ = 0. For the OR identities, consider the truth tables for the OR operation (OR Identities in Fig. 2-2). From the left truth table, letting B = 0 gives A + 0 = A (denoted as *), and letting B = 1 gives A + 1 = 1 (**). From the right truth table, the idempotent theorem is A + A = A (#), and the complement theorem is A + A’ = 1 (##). For the AND identities, consider the truth tables for the AND operation (AND Identities right). From the left truth table, A ∙ 0 = 0 (%), and A ∙ 1 = A (%%). From the right truth table, the idempotent theorem is A ∙ A = A (&), and the complement theorem is A ∙ A’ = 0 (&&).

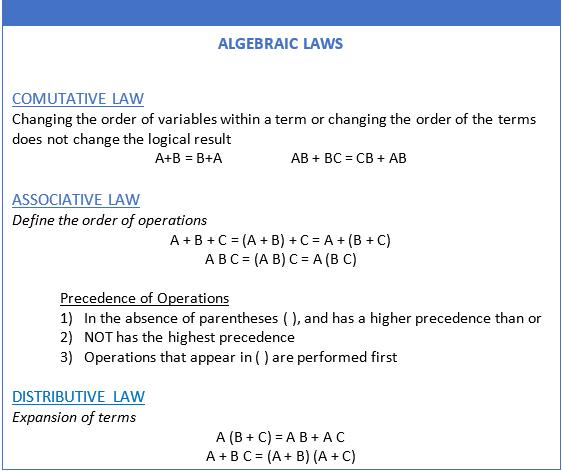
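Because a binary variable has only two values, each single-variable identity can be verified by trying A = 0 and A = 1. The following Python sketch does this exhaustively; the function name `check_identities` and the dictionary labels are illustrative only.

```python
def check_identities():
    """Check each single-variable identity for A = 0 and A = 1."""
    results = {}
    for A in (0, 1):
        not_A = 1 - A
        checks = {
            "(A')' = A": (1 - not_A) == A,     # involution
            "A + 0 = A": (A | 0) == A,
            "A + 1 = 1": (A | 1) == 1,
            "A + A = A": (A | A) == A,         # idempotent (OR)
            "A + A' = 1": (A | not_A) == 1,    # complement (OR)
            "A . 0 = 0": (A & 0) == 0,
            "A . 1 = A": (A & 1) == A,
            "A . A = A": (A & A) == A,         # idempotent (AND)
            "A . A' = 0": (A & not_A) == 0,    # complement (AND)
        }
        for name, ok in checks.items():
            # an identity holds only if it holds for every value of A
            results[name] = results.get(name, True) and ok
    return results


for name, ok in check_identities().items():
    print(f"{name:12s} {'holds' if ok else 'FAILS'}")
```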

Fig. 2-3 (above) presents Commutative, Associative, and Distributive algebraic laws with the precedence of logic operations that are used to represent logic functions. The Commutative Law provides for logic variables and terms to be placed in different positions within logic expressions without impacting the logical equivalence of the expression. The Associative Law gives the order of logic operations to represent and evaluate logic expressions. The core three logic operators are NOT, AND, and OR. NOT has the highest logic precedence. The AND operator has a higher precedence than OR. Logic expressions in parentheses are evaluated first in following the precedence of operators. From the algebraic laws, Fig. 2-4 (below) shows three logic function examples to show the order of operations to evaluate each function.

Fig. 2‐3. Algebraic Laws used for expressing and manipulating logic functions.


There are two forms of the distributive law to formulate logic functions based on combining variables using parentheses. The distributive law A (B + C) = A B + A C is an intuitively mathematical combination and expansion of terms. The distributive law A + B C = (A + B) (A + C) is less intuitive but logically ORs the isolated variable (A) with each variable in the other term (B C), ANDing the resulting terms. Fig. 2-5 gives a couple of examples applying this distributive rule.

Two additional Boolean algebra tools for manipulating logic expressions are DeMorgan’s theorems, which are stated as: 1) (A + B)’ = A’B’ and 2) (AB)’ = A’ + B’.

DeMorgan’s theorems relate NOR with AND, and NAND with OR, operations. The truth tables for each DeMorgan’s theorem are shown in Fig. 2-6 below to verify the logical equalities.

For each DeMorgan’s theorem, break the NOT (line) between the variables through the OR or AND operator, then replace the OR with an AND operator or the AND with an OR operator, respectively, and keep the NOT with each variable on each side of the replaced operator.
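The truth table verification of DeMorgan’s theorems can be reproduced with a brief Python sketch, assuming the standard statements (A + B)’ = A’B’ and (AB)’ = A’ + B’. The names `NOT` and `demorgan_rows` are hypothetical.

```python
from itertools import product


def NOT(a):
    return 1 - a


def demorgan_rows():
    """Build one row per (A, B) with both sides of each theorem."""
    rows = []
    for A, B in product((0, 1), repeat=2):
        nor_side = NOT(A | B)             # (A + B)'
        and_of_nots = NOT(A) & NOT(B)     # A'B'
        nand_side = NOT(A & B)            # (AB)'
        or_of_nots = NOT(A) | NOT(B)      # A' + B'
        rows.append((A, B, nor_side, and_of_nots, nand_side, or_of_nots))
    return rows


print("A B | (A+B)' A'B' | (AB)' A'+B'")
for A, B, lhs1, rhs1, lhs2, rhs2 in demorgan_rows():
    print(A, B, "|", lhs1, "     ", rhs1, "  |", lhs2, "    ", rhs2)
```

In every row the two columns for each theorem match, which is the equality the truth tables establish.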

Fig. 2‐5. Examples manipulating a logic function using the distributive rule.

Using these logic operations, identities, algebraic laws, and theorems, examples of finding a logic function from a truth table, finding a truth table from a logic function, and drawing a logic circuit (logic network) from a logic function are presented. A logic function or logic expression utilizes combinations of logic operations with binary variables.

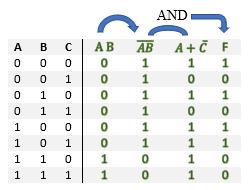

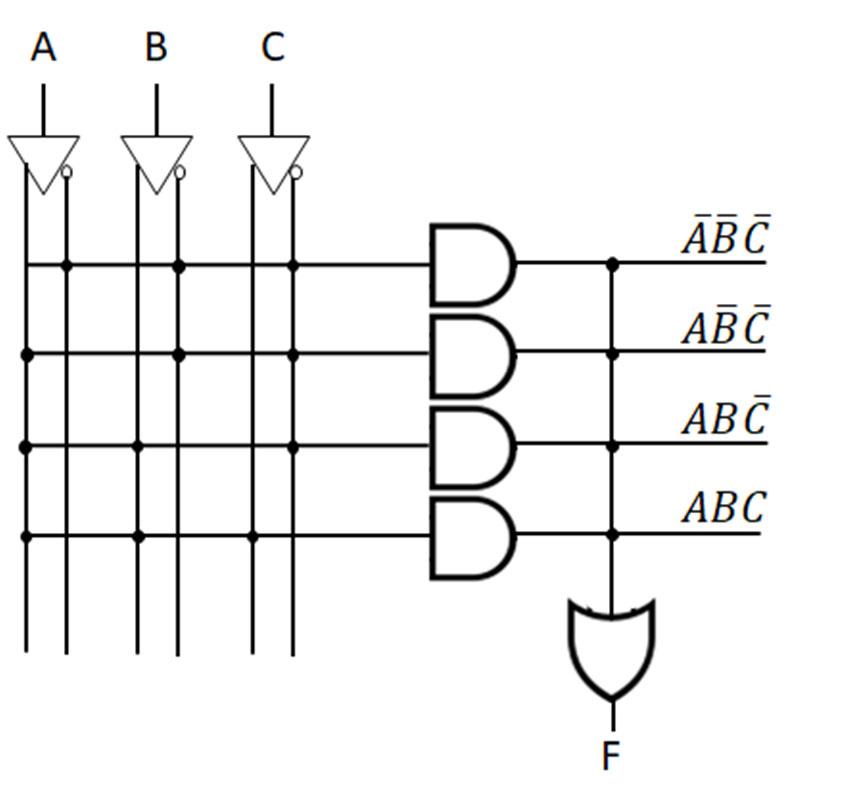

To find a logic function from a truth table, consider the truth table example in Fig. 2-7 above. There are three input variables A, B, and C with output F, with 0 and 1 entries arbitrarily chosen for this example. With three input variables, there are 2^3 = 8 rows in the truth table, with decimal equivalents of 0 (ABC = 000) to 7 (ABC = 111). For each entry where the output F is 1, the logic identities are applied to find the combination of variables A, B, and C that creates a logical 1 term. The F = 1 entry for ABC = 000 can be phrased as an if-then rule, namely: if A = 0 AND B = 0 AND C = 0, then F = 1. This if-then rule is translated to a logical term by writing each variable in the form that gives a logic 1 value and ANDing the variable forms together. In this case, to express A = 0 as a logical 1, use A’, which translates as 0’ = 1. The same is true for B = 0 and C = 0 to give the forms B’ and C’. From the logic identity X ∙ 1 = X, ANDing the variable forms gives A’B’C’ = 0’ AND 0’ AND 0’ = 1 AND 1 AND 1 = 1 to yield F = 1. This process is repeated for all F = 1 entries in the truth table, giving one term for each 1s entry. The logic function F is obtained using the logic identity 1 + X = 1 to logically OR all of the individual terms formed from the 1s entries in the truth table. For this truth table example, there are four 1s entries for F, giving F = A’B’C’ + A’BC + AB’C’ + ABC as the resulting logic function. If any of the input logic variable combinations is satisfied, then the corresponding term in F is a logic 1. The logic identity 1 + X = 1 means that if any of the terms is a logic 1, then F = 1.
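The procedure above (one ANDed term per F = 1 row, then OR the terms) can be sketched in Python. The function name `sum_of_products` is hypothetical, and the output column used here is inferred from the four terms of the example function; the apostrophe marks a complemented variable.

```python
from itertools import product


def sum_of_products(outputs, names="ABC"):
    """Build the ORed list of ANDed terms from a truth table output column.

    outputs lists F for the rows in binary counting order (000, 001, ...).
    """
    terms = []
    for bits, f in zip(product((0, 1), repeat=len(names)), outputs):
        if f == 1:
            # a variable equal to 0 appears complemented so the term evaluates to 1
            terms.append("".join(v if b else v + "'" for v, b in zip(names, bits)))
    return " + ".join(terms) if terms else "0"


# F = 1 for ABC = 000, 011, 100, and 111
F = [1, 0, 0, 1, 1, 0, 0, 1]
print("F =", sum_of_products(F))   # prints F = A'B'C' + A'BC + AB'C' + ABC
```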

Fig. 2‐7. Example of finding a function from a truth table.

In order to find the truth table for F = A’B + AC + BC’ in the example (Fig. 2-8), the truth tables for the individual terms can be found and then logically ORed using the identity 1+X = 1 to give the truth table for F. For the first term A’B, A = 0 gives A’ = 0’ = 1, and with B = 1, A’B = 0’ AND 1 = 1 AND 1 = 1. The truth table for A’B follows from the if-then rule: if A = 0 AND B = 1, then the truth table entries are 1s (0s otherwise). Note that the term only depends on the values of A and B. The value of C does not matter in satisfying the if-then rule to determine the truth table entries. A similar process is applied to find the truth tables for the other terms AC and BC’. Once the truth table entries are found for all of the terms (denoted with arrows), the truth table for F is found by ORing the truth table entries for those terms using the identity 1+X = 1.
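The term-by-term approach can be sketched in Python for the terms A’B, AC, and BC’ named above: each term gets its own truth table column, and the columns are ORed to give F. The function name `term_columns` is hypothetical.

```python
from itertools import product


def term_columns():
    """Return one row per ABC combination: (A, B, C, A'B, AC, BC', F)."""
    rows = []
    for A, B, C in product((0, 1), repeat=3):
        t1 = (1 - A) & B        # A'B: 1 when A = 0 AND B = 1, for either value of C
        t2 = A & C              # AC
        t3 = B & (1 - C)        # BC'
        F = t1 | t2 | t3        # OR the term columns (1 + X = 1)
        rows.append((A, B, C, t1, t2, t3, F))
    return rows


print("A B C | A'B AC BC' | F")
for A, B, C, t1, t2, t3, F in term_columns():
    print(A, B, C, "|", t1, " ", t2, " ", t3, " |", F)
```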

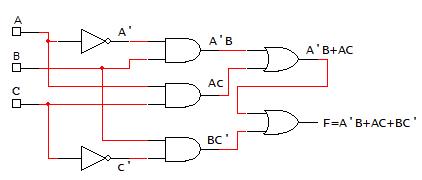

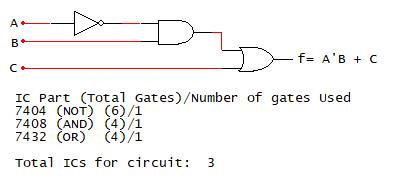

Determine the logic network (digital circuit) for F = A’B + AC + BC’

In the next example (Fig. 2-9 above), a logic network (digital circuit) is determined for a given function F. In the logic network implementation, the symbols for the logic gates are substituted for each logic operation. A’ is replaced by placing a wire from the variable A to the input of an inverter. The output of the inverter is A’. The term A’B is formed by taking a wire from the output of the inverter (A’) to the input of a 2-input AND gate, along with a wire from the variable B. The output of this AND gate is A’B. For the second term, AC, wires are drawn from the variables A and C to a 2-input AND gate. Since a wire has already been drawn from the variable A, a solder point is made on the existing wire for A and the wire is drawn to the input of the 2-input AND gate. This solder point indicates that the wires are connected. A similar process is performed to generate the term BC’. For combining the individual terms, 2-input OR gates are shown with wires from the outputs of two of the AND terms as inputs to an OR gate. The output of this OR gate has a wire drawn as an input to a second OR gate with the remaining AND gate output, with F as the output of the second OR gate. Note that many gates are available with more than 2 inputs. In the example above, a 3-input OR gate could have been used to take the wires from the three AND gates to produce the function output F.

Fig. 2‐9. Example of drawing the logic network from a function.
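The wiring described above can be mirrored gate by gate in Python, with each intermediate variable standing for a wire. This is a sketch under the assumption that the function is F = A’B + AC + BC’ (the terms named in the text); the gate and network function names are hypothetical. The second network replaces the two cascaded 2-input OR gates with a single 3-input OR gate.

```python
from itertools import product


def inv(a):
    return 1 - a


def and2(a, b):
    return a & b


def or2(a, b):
    return a | b


def or3(a, b, c):
    return a | b | c


def network_cascaded_or(A, B, C):
    not_A = inv(A)                  # inverter on A
    not_C = inv(C)                  # inverter on C
    t1 = and2(not_A, B)             # A'B
    t2 = and2(A, C)                 # AC (A is tapped at a solder point)
    t3 = and2(B, not_C)             # BC'
    return or2(or2(t1, t2), t3)     # two cascaded 2-input OR gates


def network_three_input_or(A, B, C):
    return or3(and2(inv(A), B), and2(A, C), and2(B, inv(C)))


for A, B, C in product((0, 1), repeat=3):
    print(A, B, C, "->", network_cascaded_or(A, B, C), network_three_input_or(A, B, C))
```

Both networks produce the same output column, so the choice between them is an implementation decision rather than a logical one.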

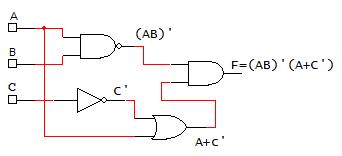

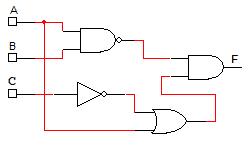

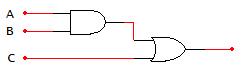

In the next example (Fig. 2-10 below), the logic expression and truth table are determined from the logic network (digital circuit). There are 3 input variables (ABC) and one output (F). In order to determine the logic function from the logic network, work from the logic network inputs to put together the individual terms from the logic gates going from left to right. The term from the output of each logic gate is used as an input to the next logic gate in the logic network until the output of the final logic gate is F. The truth table for F can most easily be obtained by finding the truth tables for the individual terms and combining the terms. In this case, there are two terms, (AB)’ and (A+C’). The truth tables for those terms can be found and combined with the AND operation (since these terms are ANDed to yield F).

Determine the logic function and truth table from the given logic network.
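As a sketch of the left-to-right analysis, the Python block below assumes the network reduces to F = (AB)’(A + C’), the two terms named in the preceding paragraph, and tabulates each term before ANDing them. The function name `network_truth_table` is hypothetical.

```python
from itertools import product


def network_truth_table():
    """Return one row per ABC combination: (A, B, C, (AB)', A+C', F)."""
    rows = []
    for A, B, C in product((0, 1), repeat=3):
        nand_term = 1 - (A & B)     # (AB)': output of the NAND stage
        or_term = A | (1 - C)       # A + C': OR of A with the inverted C
        F = nand_term & or_term     # the two terms are ANDed to yield F
        rows.append((A, B, C, nand_term, or_term, F))
    return rows


print("A B C | (AB)' A+C' | F")
for A, B, C, t1, t2, F in network_truth_table():
    print(A, B, C, "|", t1, "    ", t2, "   |", F)
```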

In the previous sections, binary variables, logic operators, logic identities, and algebraic laws were introduced to represent and interpret logic functions. The manipulation of logic functions using these logical and algebraic operations is referred to as Boolean algebra. The goal of obtaining and manipulating logic functions is to put the logic function into a form that can be implemented using hardware components. TTL components were introduced for the different logic operators.

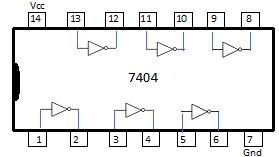

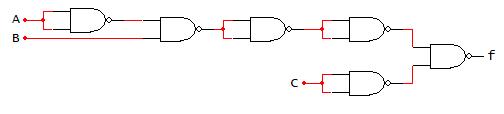

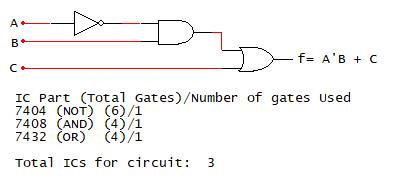

For digital circuit implementation purposes and examples presented in future chapters, each logic operator is referenced by a TTL part. The TTL 7404 is the digital component for the inverter (see Fig. 2-11 below). The 7404 is a standard part with many manufacturers. An example of the datasheet for the 74LS04 is given at http://pdf.datasheetcatalog.com/datasheets/70/375318_DS.pdf. The 74LS04 is one of the variants that uses a low-power Schottky (LS) transistor in the inverter physical component implementation. This IC is a commonly used variant in the physical breadboard implementation of digital circuits. The datasheets for ICs can be found at websites such as www.datasheetcatalog.com and www.digikey.com. The IC, in this case, is a dual inline package (DIP). The schematic is called a pinout diagram. The pinout diagram shows that there are six inverters on a single IC, with power (Vcc) and ground (Gnd) pins for powering the IC. An example of the TTL 7404 IC is shown in Fig. 2-12 below with a picture of the IC used in a breadboarded circuit labeled with a yellow arrow. The datasheet provides details such as the power supply voltage (Vcc), the voltage levels for the input and output variable(s), the electric current ratings or operating conditions for the IC, the timing or speed for transitioning the input value to the associated output value (typically on the order of nanoseconds), the temperature range for using the IC, and the physical dimensions of the IC. For TTL technology, the standard power supply is 5 V. The six inverters work identically and may be used in any order.

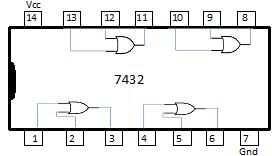

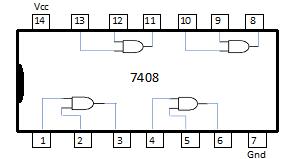

A table is shown below in Fig. 2-13 presenting the standard TTL ICs for the logic gates presented in the previous sections. The TTL parts provide a practical example of wiring the connections to generate and combine the terms in the function to generate the function. Conventionally, the binary variable inputs are given as switches, and the output of the function is displayed using a light emitting diode (LED). For wiring the connections in the breadboard implementation, datasheets are needed for each IC (also referred to as a digital component).

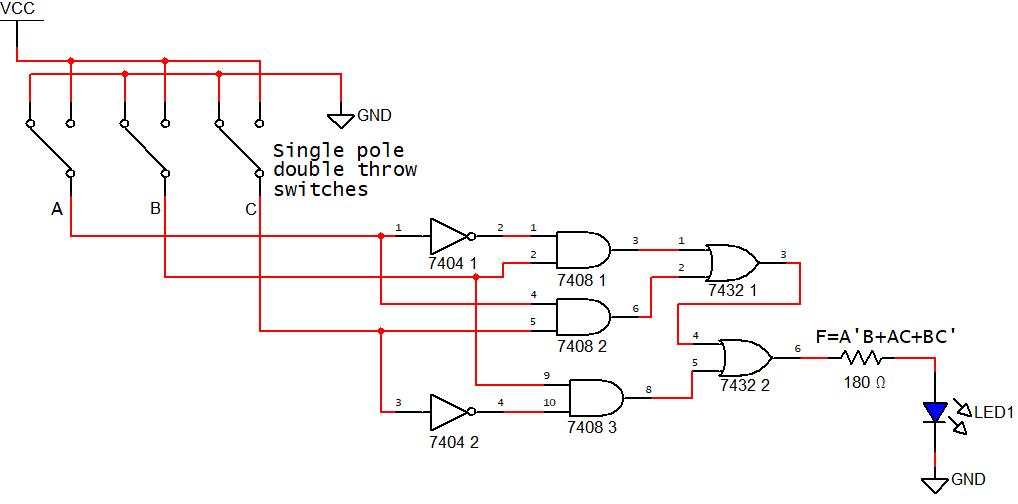

The datasheets provide details about using the ICs, including voltage, current, and temperature requirements, as well as physical IC implementation details with a pinout diagram. The pinout diagram shows the usage for each pin, including power and reference (ground) and the input(s) and output(s) for each logic gate. Datasheets can be obtained for digital components from the manufacturer’s website or from common websites such as http://datasheetcatalog.com and http://digikey.com. An example of a logic function implementation with the wiring connections, as would be done on a breadboard, is presented in Fig. 2-14. The pin numbers on the different ICs are shown with each gate.

Determine the logic network (digital circuit) for

IC Part/Number of gates

7404 (Inverter)/2

7408 (2‐input AND)/3

7432 (2‐input OR)/2
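The number of ICs needed follows from the gate counts above and the number of gates in each package. The sketch below assumes the standard packaging of six inverters per 7404 (as stated earlier) and four gates each per 7408 and 7432; the dictionary `GATES_PER_IC` and function `ics_needed` are hypothetical names.

```python
import math

# Gates available in one package (assumed standard hex/quad packaging)
GATES_PER_IC = {"7404": 6, "7408": 4, "7432": 4}


def ics_needed(gate_counts):
    """Map each part number to the number of ICs required for the gate count."""
    return {
        part: math.ceil(count / GATES_PER_IC[part])
        for part, count in gate_counts.items()
        if count > 0
    }


example = {"7404": 2, "7408": 3, "7432": 2}   # gate counts listed above
needed = ics_needed(example)
print(needed, "total ICs:", sum(needed.values()))
```

With these counts, one IC of each type is enough, leaving four inverters, one AND gate, and two OR gates unused.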

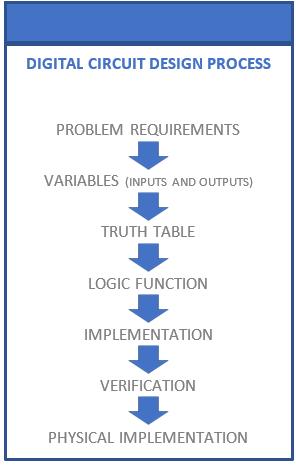

The digital circuit design process (Fig. 2-15) begins with a problem statement to specify the problem. From the problem specification, the design requirements are identified. Binary input and output variables are determined with the associated interpretations of logic 1 and 0 values for each variable. Using the problem requirements and the input and output variable designations, a truth table is found. From the truth table, a logic function is derived. Based on the problem requirements, the logic function is implemented using logic gates or other digital components (presented in Chapter 5). Conventionally, digital circuit design tools are used to simulate the logic function implementation for truth table verification. Then, the simulated logic function implementation is translated to a physical circuit implementation such as a breadboard. We will explore the various steps in the digital circuit design process throughout this chapter and the remaining chapters of the textbook, beginning with the problem statement and requirements in the next section.

Figs. 2-16 and 2-17 present examples of the digital circuit design process to determine problem variables and the associated truth table.

Problem Requirements: A fire sprinkler system should spray water if high heat is sensed and the system is enabled.

Input variables: h = heat sensor (high heat is sensed)

Specify values: 0 = no high heat sensed, 1 = high heat sensed

E = system enable

Specify values: 0 = system not enabled, 1 = system enabled

Output variable: f = turning on/off sprinkler system

Specify values: 0 = sprinkler system off, 1 = sprinkler system on

Apply problem requirements with input and output variables to determine the truth table: A fire sprinkler system (f) should spray water if high heat is sensed (h = 1) AND the system is enabled (E = 1).
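With the variables defined this way, the requirement translates directly into an AND of h and E. A minimal Python sketch of the resulting truth table follows; the function name `sprinkler` is hypothetical.

```python
from itertools import product


def sprinkler(h, E):
    """f = 1 only when high heat is sensed (h = 1) AND the system is enabled (E = 1)."""
    return h & E


print("h E | f")
for h, E in product((0, 1), repeat=2):
    print(h, E, "|", sprinkler(h, E))
```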

Problem Requirements: A car alarm system should sound if the alarm is enabled and either the car is shaken or the door is open.

Identify Variables

Input variables: s = car is shaken

Specify values: 0 = car is not shaken, 1 = car is shaken

d = door status

Specify values: 0 = door is open, 1 = door is closed

e = system is enabled

Specify values: 0 = system is not enabled, 1 = system is enabled

Output variable: f = car alarm sound activation

Specify values: 0 = not activated, 1 = activated

Find the Truth Table

A car alarm system should sound if the alarm is enabled AND either the car is shaken OR the door is open.

IF e = 1 AND s = 1, THEN f = 1

IF e = 1 AND d = 0, THEN f =1

OTHERWISE, f = 0

When e = 0 (system not enabled), f = 0 for every combination of s and d.
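The if-then rules for the car alarm can be turned into a truth table with a short Python sketch. It follows the value assignments above, in which d = 0 means the door is open, so the door-open condition is the complement of d. The function name `car_alarm` and the row ordering (e, s, d) are illustrative choices.

```python
from itertools import product


def car_alarm(e, s, d):
    """f = 1 if the system is enabled AND (the car is shaken OR the door is open)."""
    door_open = 1 - d            # d = 0 means the door is open
    return e & (s | door_open)   # f = e(s + d')


print("e s d | f")
for e, s, d in product((0, 1), repeat=3):
    print(e, s, d, "|", car_alarm(e, s, d))
```

All four rows with e = 0 give f = 0, and the only enabled combination that leaves the alarm off is s = 0 with the door closed (d = 1).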

There are a variety of considerations in implementing logic functions. In the previous section, logic function implementation was illustrated using a breadboard with TTL components. That example highlights some of the considerations, such as the size of the implemented circuit in terms of the number of components used and the number of logic gates on those components. Much of digital logic explores different types of digital components that can be utilized to implement logic functions and perform logic and arithmetic operations. This book presents a number of those digital components with the context of their application and utilization in digital circuit implementation.

Logic gates and identities and algebraic laws have been introduced to implement logic functions. The goals of digital circuit implementation are:

1) Minimize the number of digital components to reduce the power requirements and size of the digital circuit.

2) Configure the logic function in a form that meets the speed or timing requirements for the application using the logic function. For different logic families (TTL, CMOS, etc.), variations of digital components are often available that are fabricated with enhanced speed and reduced power to promote digital circuit implementation to meet a variety of application requirements. In addition, reducing the complexity of the logic functions contributes to satisfying goals 1 and 2.

3) Manipulate the logic function for implementation using specified logic operations or digital components. In some cases, manipulating a logic function for implementation using specific logic operations may contribute to meeting goals 1 and 2.

In this section, logic function simplification is examined using Boolean algebra to facilitate meeting digital circuit implementation goals. The truth table can be used to verify the equality of logic expressions, as shown in the verification of DeMorgan’s theorems. Logic expressions can be manipulated for simplification and to put expressions in different forms for implementation. For simplification, logic functions are manipulated to eliminate redundancies. A logic expression redundancy is where one or more variables or terms contribute nothing unique to the logical equivalency (the truth table for the function is the same with or without those variables or terms). Fig. 2-18 gives a summary of the logic identities for manipulating expressions using Boolean algebra.

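Involution: (A’)’ = A

| OR‐based | AND‐based |
| --- | --- |
| A + 0 = A | A ∙ 1 = A |
| A + 1 = 1 | A ∙ 0 = 0 |
| A + A = A | A ∙ A = A |
| A + A’ = 1 | A ∙ A’ = 0 |
| A + B = B + A | AB = BA |
| A + B + C = (A + B) + C | ABC = (AB)C |
| A + BC = (A + B)(A + C) | A(B + C) = AB + AC |
| (A + B)’ = A’B’ | (AB)’ = A’ + B’ |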

Fig. 2-19 through Fig. 2-25 present Boolean expression simplification examples to eliminate redundancies. The context of logic expression simplification, based on the logic gates and digital components required for Boolean expression implementation, is also shown.

Simplify the expression using Boolean algebra into the simplest form.

g = xyz + xz

Logic gates needed to implement (before simplification):

3 2‐input AND gates (7408) needed: [xy] [(xy)z] [xz]

1 2‐input OR gate (7432) needed: [(xy)z] + [xz]

2 parts (1 7408 IC and 1 7432 IC) using 4 total gates

Simplification steps:

| Step | Logic Identity or Algebraic Law |
| --- | --- |
| g = xyz + xz | Given |
| g = (xz)(y + 1) | Commutative Rule (ABC = CAB), A∙1 = A, and Distributive Rule |
| g = (xz)(1) | 1 + A = 1 |
| g = xz | A∙1 = A |

Simplest form: g = xz

Logic gates needed to implement (after simplification):

1 2‐input AND gate (7408) needed: xz

1 part (1 7408 IC) using 1 gate

From Fig. 2-19, the unsimplified function g = xyz + xz requires three 2-input AND gates (TTL component 7408) and one 2-input OR gate (TTL component 7432), which means that breadboarding the function g has 2 TTL digital components. In the reduction/logic simplification process for this function, the identities applied are given for each step. The simplification process is completed when no additional identities can be applied to eliminate any redundancies. The simplified function g = xz requires one 2-input AND gate. The simplification process here eliminates three logic gates and one TTL component, resulting in the breadboard implementation only using one TTL 7408 2-input AND gate component.
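Since a truth table can verify the equality of logic expressions, a simplification such as g = xyz + xz = xz can be checked by comparing the two expressions over all input combinations. The helper `equivalent` below is a hypothetical sketch of that check.

```python
from itertools import product


def equivalent(f, g, n_vars):
    """Return True if f and g have identical truth tables over n_vars binary inputs."""
    return all(f(*bits) == g(*bits) for bits in product((0, 1), repeat=n_vars))


def g_before(x, y, z):
    return (x & y & z) | (x & z)    # xyz + xz


def g_after(x, y, z):
    return x & z                    # xz


print(equivalent(g_before, g_after, 3))   # prints True
```

The check confirms logical equivalence only; the gate and IC savings come from counting the operations in each form, as done in Fig. 2-19.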

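Let X = A+B, which is common to both terms, and substitute X in h:

| Step | Logic Identity or Algebraic Law |
| --- | --- |
| h = (X+C)(X) | Substitute X = A+B in h |
| = XX + XC | Distributive Rule (L(M+N) = LM + LN) |
| = X + XC | X∙X = X |
| = X(1 + C) | Distributive Rule |
| = X | 1 + C = 1 and X∙1 = X |
| = A + B | Substitute A+B for X |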

From Fig. 2-20, the logic function h has two ANDed terms with ORed forms of the variables. In this case, the two terms have A+B in common. Substituting X = A+B in the function h makes the simplification process simpler. Applying the logic identities to simplify h is done until redundancies are eliminated. Then, A+B=X is substituted to get the final functional form.

Show A + BC = (A+B)(A+C)

| Steps | Logic Identity or Algebraic Law |
| --- | --- |
| (A+B)(A+C) = AA + AC + AB + BC | Distributive rule (A(B+C) = AB + AC), Commutative rule (XY = YX) |
| = A + AC + AB + BC | X∙X = X (Idempotent theorem‐AND) |
| = A(1 + C + B) + BC | Distributive rule (A(B+C) = AB + AC) |
| = A(1) + BC | 1+X = 1 |
| = A + BC | X∙1 = X |

Proof of equality

The example in Fig. 2-21 is a proof of the distributive rule form with A+BC = (A+B)(A+C). The important step to note in the simplification process is taking A+AC+AB+BC = A(1)+AC+AB+BC. Then, A(1)+AC+AB+BC = A(1+C+B). Using A=A(A) instead of A=A(1) results in A+AC+AB+BC = A(A+C+B)+BC. The term A+C+B does not simplify any further. In applying this distributive rule the term A+BC is given as ORing the isolated variable A with each variable in the other term, ANDing each ORed term generated. In this case, A is ORed with B to give (A+B), and A is ORed with C to give (A+C). The two ORed terms are ANDed to yield the distributive rule form, which is (A+B)(A+C). This distributive rule is very useful in simplifying Boolean expressions, especially as a last resort when no other identities can be applied.

The example in Fig. 2-22 (below) presents two approaches to simplify the function. Approach 1 illustrates the use of the distributive rule (from example 3) for simplification. X+X’Y’ contains an isolated variable (X), with the other term having the complement of that variable (X’). The distributive rule is applied by ORing X with each variable in its form in the other term and ANDing the resulting terms, giving (X+X’)(X+Y’). Since X+X’ = 1, this term is eliminated. So, X+X’Y’ = X+Y’. Applying the distributive rule in this condition effectively eliminates the complement of the variable in the other term.

In approach 2, the terms in the unsimplified expression are examined for commonalities. XY’ has a common X with the term XY, and XY’ has a common Y’ with the term X’Y’. With XY’ having commonalities with other terms, creating an extra copy of XY’ can be used to simplify each of those other terms. Since A+A=A, the extra copy of XY’ maintains the equality of the expression (XY’ + XY’ = XY’). Adding the copy of XY’ allows XY’ to be paired with the other terms to simplify the expression.
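Both approaches can be checked step by step in Python by confirming that every intermediate expression has the same truth table as the original. The intermediate steps listed here are one reconstruction based on the description above (combining XY + XY’ into X in approach 1, and duplicating XY’ in approach 2); the names `n`, `table`, and `all_steps_equal` are hypothetical.

```python
from itertools import product


def n(a):
    return 1 - a    # NOT


approach_1 = [
    lambda X, Y: (X & Y) | (X & n(Y)) | (n(X) & n(Y)),   # XY + XY' + X'Y'
    lambda X, Y: (X & (Y | n(Y))) | (n(X) & n(Y)),       # X(Y + Y') + X'Y'
    lambda X, Y: X | (n(X) & n(Y)),                      # X + X'Y'
    lambda X, Y: (X | n(X)) & (X | n(Y)),                # (X + X')(X + Y')
    lambda X, Y: X | n(Y),                               # X + Y'
]

approach_2 = [
    lambda X, Y: (X & Y) | (X & n(Y)) | (n(X) & n(Y)),                # original
    lambda X, Y: (X & Y) | (X & n(Y)) | (X & n(Y)) | (n(X) & n(Y)),   # extra copy of XY'
    lambda X, Y: (X & (Y | n(Y))) | (n(Y) & (X | n(X))),              # X(Y+Y') + Y'(X+X')
    lambda X, Y: X | n(Y),                                            # X + Y'
]


def table(f):
    return [f(X, Y) for X, Y in product((0, 1), repeat=2)]


def all_steps_equal(steps):
    return all(table(step) == table(steps[0]) for step in steps)


print(all_steps_equal(approach_1), all_steps_equal(approach_2))   # prints True True
```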

Example 4: Boolean algebra logic function simplification (Proof)

Show XY + XY’ + X’Y’ = X + Y’ using 2 approaches:

Proof

Simplify

Example 5: Boolean algebra logic function simplification

From Fig. 2-23 (above), the logic expression (g) is to be simplified into a form that uses the fewest number of logic gates, excluding inverters. There is no restriction on the number of inputs to the logic gates in the simplified form. Two solutions are presented here. In approach 1, the terms under the NOT bar are expanded using the distributive rule, and then the identities are used to simplify the expression. Multiple simplified forms use two logic gates, namely a(b’c)’ and a(b+c’). Eliminating the redundancies from g also provides the simplified form ab+ac’, which uses three logic gates (two AND gates, one OR gate) and therefore is not the implementation that uses the fewest number of logic gates. There is a difference between simplifying the logic function by eliminating redundancies and finding the form of the simplified function that uses the fewest number of logic gates. If the goal of this problem is instead to find the simplest form of the function g, a(b+c’) and ab+ac’ are both valid solutions.

In approach 2, g is treated as a NAND expression using DeMorgan’s theorem to represent and combine the individual NAND terms. The resulting expression is simplified using the identities. Using this approach, there is only one form of the simplified expression that uses two logic gates (a(c’+b)), and two forms of the expression that yield the simplified forms (a(c’+b) or ac’+ab).
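The three simplified forms named in the text, a(b’c)’, a(b+c’), and ab+ac’, should all describe the same function. The following Python sketch compares them over every input combination; the function names are hypothetical and chosen only for this illustration.

```python
from itertools import product

def g_nand_form(a, b, c):
    """a(b'c)': an AND gate fed by a and the NAND of b' and c."""
    return a & (1 - ((1 - b) & c))

def g_or_form(a, b, c):
    """a(b+c'): an AND gate fed by a and the OR of b and c'."""
    return a & (b | (1 - c))

def g_sop_form(a, b, c):
    """ab+ac': two AND gates feeding one OR gate."""
    return (a & b) | (a & (1 - c))

def forms_agree() -> bool:
    """Return True if all three forms match on every input row."""
    return all(
        g_nand_form(a, b, c) == g_or_form(a, b, c) == g_sop_form(a, b, c)
        for a, b, c in product((0, 1), repeat=3)
    )

print(forms_agree())
```

The forms differ only in gate count (two gates versus three, excluding inverters), not in the function they implement.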

Eliminate the complement of the isolated variable in the highlighted term (see the yellow term highlight for an example of variable elimination)

The example presented in Fig. 2-24 is more complex than the previous examples, utilizing multiple applications of the distributive rule in finding the functional simplified form. In the intermediate form of the expression w’x’z+y’+wx, wx is NOT the complement of w’x’; rather, wx is the complement of (wx)’ or (w’+x’). So, the distributive rule cannot be applied using those terms.

c is common to both terms

The example in Fig. 2-25 uses substitution for common groups of terms in the expression to aid in the simplification process. Without substitution, the terms must be expanded to simplify the expression; the substitution shown above leads to a simpler derivation than the direct expansion.

Implementing logic functions utilizes combinations of logic gates. A combination of logic gates that can be used to implement any arbitrary logic function, which consists of the AND, OR, and NOT operations, is referred to as a complete logic set. AND, OR, and NOT (inverter) gates constitute a basic complete logic set. Implementing logic functions using this combination of gates is referred to as using AOI logic. Examples of complete logic sets include:

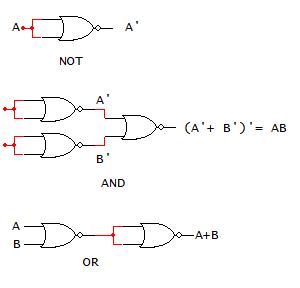

DeMorgan’s theorems provide the basis for showing that complete logic sets 2-5 can be used to implement the AND, OR, and NOT operations necessary to implement any logic function. The following sections show how NAND-only logic and NOR-only logic are separately complete logic sets and how to implement functions using only these gates.

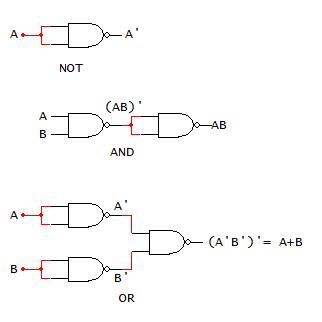

For NAND-based logic, logic functions are implemented using only NAND gates (different numbers of NAND gate inputs may be used in this approach). As previously stated, NAND-based logic relies on the ability to implement AND, OR, and NOT operations using only combinations of NAND gates. The truth table and NAND gate manipulations to perform these operations are shown in Fig. 2-26 below.

The following steps provide the process to implement a logic function using only NAND gates:

1) Draw the logic function using AOI logic, i.e. with AND, OR, and NOT (inverters) gates.

2) Substitute the NAND-only gate combination for each AND, OR, and NOT gate in the AOI implementation (as shown above).

3) Eliminate redundant gates, specifically double NAND-based inverters, from the circuit from step 2.

4) Determine the logic function for the NAND only implementation of the logic function to verify truth table equivalence to the original logic function from step 1.
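The gate substitutions in step 2 rely on building NOT, AND, and OR from NAND gates alone. The following Python sketch models the standard 2-input NAND constructions and checks each against its truth table. The helper names (`nand`, `not_`, `and_`, `or_`) are hypothetical, and the constructions are assumed to correspond to those drawn in Fig. 2-26.

```python
from itertools import product

def nand(x, y):
    """2-input NAND gate on 0/1 values."""
    return 1 - (x & y)

def not_(x):
    """NOT: tie both NAND inputs together, (xx)' = x'."""
    return nand(x, x)

def and_(x, y):
    """AND: a NAND followed by a NAND-based inverter, ((xy)')' = xy."""
    return not_(nand(x, y))

def or_(x, y):
    """OR: invert each input, then NAND, (x'y')' = x + y by DeMorgan."""
    return nand(not_(x), not_(y))

def nand_set_is_complete() -> bool:
    """Check all three constructions on every input row."""
    return all(
        not_(x) == 1 - x and and_(x, y) == (x & y) and or_(x, y) == (x | y)
        for x, y in product((0, 1), repeat=2)
    )

print(nand_set_is_complete())
```

Counting the `nand` calls gives the gate cost of each substitution: one for NOT, two for AND, and three for OR, before any double inverters are removed.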

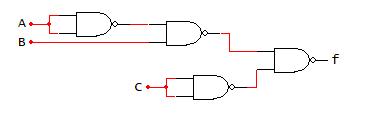

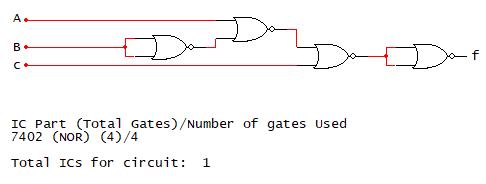

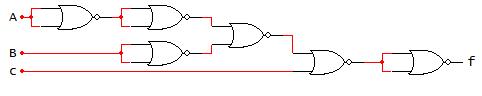

An example of implementing a function using NAND-only gates is presented in Fig. 2-27. As a consideration for implementation, the number of TTL components required to breadboard this function is shown in the AOI implementation.

Implement f = a’b + c using NAND‐only logic

Draw f using AOI

NAND Gate Substitutions

Remove Double Inverters

Functional Equivalency:

From Fig. 2-27, the AOI implementation of the function f requires three TTL components, using one gate from each of a NOT, an AND, and an OR component. Performing gate substitutions and eliminating NAND double inverter redundancies yields the function f = ((a’b)’c’)’, which is shown to be equivalent to f = a’b+c. The NAND-only implementation requires four 2-input NAND gates, which is the number of 2-input NAND gates present on the TTL 7400 component. Thus, the function f can be implemented using only one TTL component, compared to three TTL components using an AOI implementation. Accordingly, the NAND-only implementation provides the basis to reduce the size and power necessary to physically implement this circuit.
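The functional equivalency between the AOI form f = a’b+c and the NAND-only form f = ((a’b)’c’)’ can be checked by evaluating both on all eight input rows. The following Python sketch does this with exactly four `nand` calls, matching the four gates counted in the text; the function names are hypothetical.

```python
from itertools import product

def nand(x, y):
    """2-input NAND gate on 0/1 values."""
    return 1 - (x & y)

def f_aoi(a, b, c):
    """AOI form: f = a'b + c."""
    return ((1 - a) & b) | c

def f_nand_only(a, b, c):
    """NAND-only form: f = ((a'b)'c')' using four 2-input NAND gates."""
    not_a = nand(a, a)          # gate 1: a'
    not_c = nand(c, c)          # gate 2: c'
    inner = nand(not_a, b)      # gate 3: (a'b)'
    return nand(inner, not_c)   # gate 4: ((a'b)'c')'

def nand_form_matches() -> bool:
    """Return True if both forms agree on every input row."""
    return all(
        f_aoi(a, b, c) == f_nand_only(a, b, c)
        for a, b, c in product((0, 1), repeat=3)
    )

print(nand_form_matches())
```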

NOR-based logic is similar to NAND-based logic, except that only NOR gates are used to implement logic functions. The application of NOR-based logic to logic function manipulation requires determining combinations of NOR gates to represent the NOT, AND, and OR operations. If this cannot be done, then NOR-based logic does not represent a complete logic set. The truth table, DeMorgan’s theorem derivations, and NOR gate combinations to implement NOT, AND, and OR operations are shown in Fig. 2-28 below. The process for representing a logic function using a NOR-only representation includes the same steps as the NAND-only form. The gate substitution process is illustrated for the function f = a’b + c in Fig. 2-29 below.

Remove Double Inverters

As in the NAND-only implementation example, the AOI implementation for f requires three TTL parts. Performing the NOR-only gate substitutions for the NOT, AND, and OR gates from the AOI circuit and eliminating the double inverter redundancies yields the NOR-only form of the function, f = ((a+b’)’+c)’’, which is shown to equal f = a’b+c. Using the NOR-only 2-input gate representation, four NOR gates are needed to implement f. Since there are four 2-input NOR gates on a single 7402 TTL component, one 7402 component is needed to implement f.
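The NOR-only case can be checked in the same way as the NAND-only case. The following Python sketch models the standard NOR constructions for NOT, OR, and AND, and then evaluates the NOR-only form f = ((a+b’)’+c)’’ using four `nor` calls. The helper names are hypothetical, and the constructions are assumed to correspond to those in Fig. 2-28.

```python
from itertools import product

def nor(x, y):
    """2-input NOR gate on 0/1 values."""
    return 1 - (x | y)

def not_(x):
    """NOT: tie both NOR inputs together, (x+x)' = x'."""
    return nor(x, x)

def or_(x, y):
    """OR: a NOR followed by a NOR-based inverter."""
    return not_(nor(x, y))

def and_(x, y):
    """AND: invert each input, then NOR, (x'+y')' = xy by DeMorgan."""
    return nor(not_(x), not_(y))

def f_nor_only(a, b, c):
    """NOR-only form: f = ((a+b')'+c)'' using four 2-input NOR gates."""
    not_b = nor(b, b)           # gate 1: b'
    inner = nor(a, not_b)       # gate 2: (a+b')' = a'b
    outer = nor(inner, c)       # gate 3: (a'b+c)'
    return nor(outer, outer)    # gate 4: final inverter, a'b+c

def nor_checks_pass() -> bool:
    """Check the gate constructions and the function on every input row."""
    gates_ok = all(
        not_(x) == 1 - x and or_(x, y) == (x | y) and and_(x, y) == (x & y)
        for x, y in product((0, 1), repeat=2)
    )
    f_ok = all(
        f_nor_only(a, b, c) == (((1 - a) & b) | c)
        for a, b, c in product((0, 1), repeat=3)
    )
    return gates_ok and f_ok

print(nor_checks_pass())
```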

The example function f = a’b + c implemented using NAND- and NOR-logic representations illustrates two approaches that can be utilized to reduce the number of TTL components needed to implement f. The goal of using Boolean algebra and techniques such as NAND- and NOR-only representations is to develop a toolbox with which logic function implementations can be manipulated to address size/space, power, and parts availability, among other considerations. It must be emphasized that some approaches provide more practical and advantageous implementations than others. Much of the presentation in the remainder of this book explores digital components and techniques for digital circuit design and implementation.

1 The breadboard example is a design problem to use Dual Inline Package (DIP) switches to display individual characters in an eight-character string on a seven-segment display. The switches and power supply for the integrated circuits are provided from a breadboard companion (www.breadboardcompanion.com).

Express logic functions using structured forms

Manipulate logic functions using Karnaugh maps (K‐maps)

Formulate and identify combinatorial functions in sum‐of‐products (SOP), product‐of‐sums (POS), minterm, maxterm structured forms

Construct Karnaugh maps for functions with 2 to 4 variables

Find minimal SOP and POS form function

Build NAND‐NAND and NOR‐NOR function forms

Minimize functions with Don't Care conditions

Construct Variable Entered Map (VEM) style K‐maps

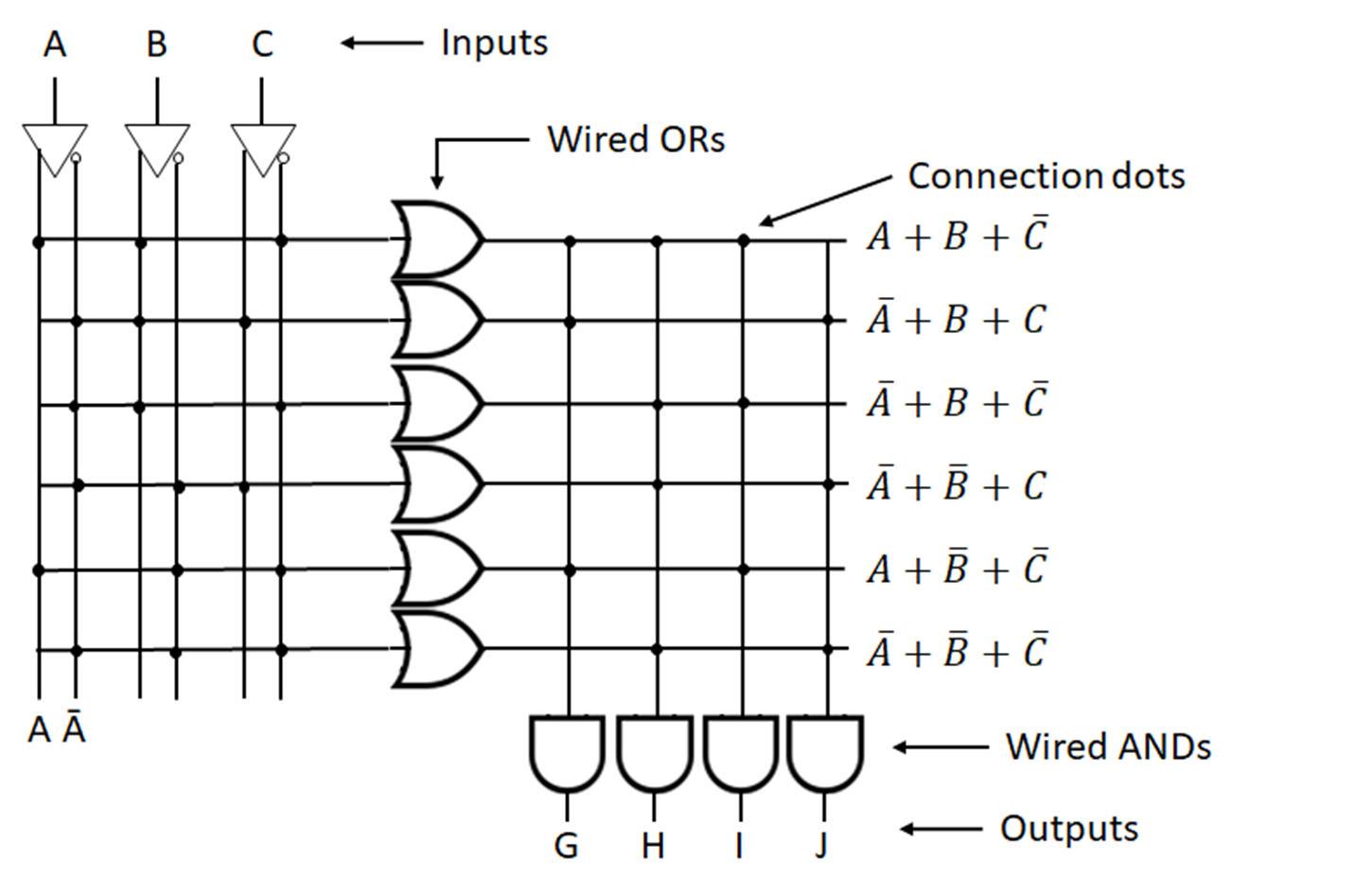

In the previous chapter, topics covered include binary variables, truth tables, logic functions, logic gates, logic identities, Boolean algebra for logic function simplification, and complete logic sets for representing logic functions. These topics are related to the digital circuit design process. In this section, an alternative approach is presented for representing, examining, and simplifying logic functions using a more structured approach.

Structured logic involves writing expressions that use various types of regular and repeated forms. Structured equations provide a useful starting point for analysis because they provide a uniform view of the problem specification. Structured equations are sufficient to directly implement logic functions. Two types of structured forms are:

1) Sum of products (SOP)

2) Product of sums (POS)

Sum of products is a structured form with variables in individual terms ANDed (products) that are ORed together (sum). An SOP expression with every variable present in each term in either normal (uncomplemented) or complemented form is referred to as canonical SOP form. Fig. 3-1 shows SOP and canonical SOP function examples. The top expression for f below has all three variables in the first term (xyz’) but does not have an x variable form in the second term (yz) and does not have a y variable form in the third term (xz). The bottom expression for f below has x, y, and z in every term (ANDed term) in either normal or complemented form, which are ORed together. This expression is in canonical SOP form.

In order to convert an SOP expression to a canonical SOP expression, the following steps are taken:

1) Identify the missing variables in each AND term of the SOP expression.

2) For each AND term missing a variable, AND the existing variables in the term with 1.

3) From step 2, replace the 1 with X+X’, where X is the missing variable in the term.

4) Apply the distributive rule to expand all of the terms with X+X’ substitutions.

5) Apply the identity X+X=X to eliminate extra copies of terms that may have been created with the expansion process.

The resulting expression will have every variable in every term in either normal or complemented form to yield a canonical SOP expression. An example of SOP to canonical SOP form conversion process is given in Fig. 3-2 below.
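The conversion steps above can be sketched in Python. In this illustration, each AND term is a dictionary mapping a variable name to 1 (normal form) or 0 (complemented form), and expanding over the missing variables plays the role of ANDing with X+X’ and distributing. The representation and the names `expand_term`, `to_canonical_sop`, and `show` are assumptions made for this sketch. The input uses the terms xyz’, yz, and xz named in the text, and it is not necessarily the example worked in Fig. 3-2.

```python
from itertools import product

def expand_term(term, variables):
    """Expand one AND term by ANDing with (X + X') for each missing X."""
    missing = [v for v in variables if v not in term]
    expanded = set()
    for values in product((1, 0), repeat=len(missing)):
        full = dict(term)
        full.update(zip(missing, values))
        expanded.add(tuple(full[v] for v in variables))
    return expanded

def to_canonical_sop(terms, variables):
    """Return the canonical terms; the set union applies X + X = X."""
    canonical = set()
    for term in terms:
        canonical |= expand_term(term, variables)
    return canonical

def show(term_bits, variables):
    """Format one canonical term, using ' for a complemented variable."""
    return "".join(v if bit else v + "'" for v, bit in zip(variables, term_bits))

variables = ("x", "y", "z")
# f = xyz' + yz + xz
f = [{"x": 1, "y": 1, "z": 0}, {"y": 1, "z": 1}, {"x": 1, "z": 1}]
canonical = to_canonical_sop(f, variables)
print(" + ".join(show(t, variables) for t in sorted(canonical, reverse=True)))
# prints: xyz + xyz' + xy'z + x'yz
```

The term xyz is generated twice, once from yz and once from xz, and the duplicate is dropped, which corresponds to step 5.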

Product of sums is a structured form with variables in individual terms ORed (sums) that are ANDed together (product). A POS expression with every variable present in each term in either normal (uncomplemented) or complemented form is referred to as canonical POS form. Fig. 3-3 presents POS expression examples. The top expression for f below has all three variables in each term, (a’+b’+c) and (a+b’+c). So, f is in canonical POS form. The middle expression g below has x and y in the first OR term (x’+y) but is missing a y variable in the second term (x’). The expression g is in POS form, but not in canonical POS form. The bottom expression, h = xy’, can be interpreted as a single AND term of an SOP expression. h can also be interpreted as a POS expression by considering each variable as a separate term. The resulting terms do not have any OR variable combinations, but the AND of individual terms has the general form of a POS expression.

In order to convert a POS expression to a canonical POS expression, the following steps are taken:

1) Identify the missing variables in each OR term of the POS expression.

2) For each OR term missing a variable, OR the existing variables in the term with 0.

3) From step 2, replace the 0 with XX’ (XX’ = 0), where X is the missing variable in the term.

4) Apply the distributive rule (A+BC = (A+B)(A+C)) to expand all of the terms with XX’ substitutions.

5) Apply the identity X·X = X to eliminate extra copies of terms that may have been created with the expansion process.

An example of forming a canonical POS expression from a POS expression using these steps is given in Fig. 3-4 below.
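As a short worked illustration of these steps, consider the expression g = (x’+y)(x’) described for Fig. 3-3, assuming that g is exactly the product of those two terms. This is a sketch of the procedure and is not necessarily the example shown in Fig. 3-4. The second term is missing y, so it is ORed with 0, the 0 is replaced with yy’, and the distributive rule A+BC = (A+B)(A+C) is applied:

```latex
\begin{align*}
g &= (x' + y)(x') \\
  &= (x' + y)(x' + 0) \\
  &= (x' + y)(x' + yy') \\
  &= (x' + y)(x' + y)(x' + y') \\
  &= (x' + y)(x' + y')
\end{align*}
```

The last step uses X·X = X to remove the duplicate copy of (x’+y). Every OR term now contains both x and y, so the result is in canonical POS form.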

Finding the AND terms directly from the 1s entries in a truth table and ORing those terms produces a canonical SOP expression. In order to find a POS expression from a truth table, the following steps are performed:

1) The AND terms for each 0 entry are found from the truth table.

2) The AND terms are ORed to form the complement of the function.

3) The complement of the expression found in step 2 is taken to recover the function, applying DeMorgan’s theorem ( (X+Y)’ = X’ Y’ ) to convert the OR of the AND terms X and Y into an AND of the complemented terms X’ and Y’.

4) Apply DeMorgan’s theorem ( (XY)’ = X’+Y’ ) to convert each complemented AND term into an OR term.

5) The resulting expression with individual OR terms that are ANDed together is the canonical POS expression.

An example of applying this process is presented in Fig. 3-5 below to extract the canonical POS expression from the truth table.

Take complement of both sides to find F

Apply DeMorgan’s theorem to right‐hand side

Apply DeMorgan’s theorem to each term

Note that the variables in each ORed term of the POS expression are the complements of the variable forms in the corresponding AND term of the SOP expression for F’ from the truth table. For instance, an AND term of the form X’YZ in the expression for F’ yields the OR term (X+Y’+Z) in F. The same process is applied to all SOP terms, with the resulting OR terms ANDed to form the POS expression.
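The observation above gives a direct way to read a canonical POS expression from a truth table: each 0 entry contributes one OR term, with a variable complemented where the row holds a 1. The following Python sketch applies this to a hypothetical three-variable truth table (it is not the table from Fig. 3-5) and checks that the resulting product of sums reproduces the table. The function names are assumptions made for this illustration.

```python
from itertools import product

def canonical_pos(variables, outputs):
    """Build the canonical POS text, one OR term per 0 entry.

    A variable is complemented in the OR term where the row holds a 1.
    """
    or_terms = []
    rows = product((0, 1), repeat=len(variables))
    for row, out in zip(rows, outputs):
        if out == 0:
            literals = [v + "'" if bit else v for v, bit in zip(variables, row)]
            or_terms.append("(" + " + ".join(literals) + ")")
    return "".join(or_terms)

def evaluate_pos(outputs, row):
    """Evaluate the canonical POS on one input row (a tuple of 0/1 values)."""
    n = len(row)
    zero_rows = [z for z, out in zip(product((0, 1), repeat=n), outputs) if out == 0]
    # Each literal equals bit XOR zero_bit, so an OR term is 0 only on its own row.
    return int(all(any(b ^ zb for b, zb in zip(row, z)) for z in zero_rows))

variables = ("x", "y", "z")
# Hypothetical outputs for rows 000, 001, ..., 111 (assumed for illustration).
outputs = [0, 1, 1, 0, 1, 1, 1, 1]
print(canonical_pos(variables, outputs))
# prints: (x + y + z)(x + y' + z')
```

Here the 0 entries occur at rows 000 and 011, so F’ = x’y’z’ + x’yz, and complementing each variable form gives the two OR terms shown.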

In this chapter, we have presented approaches to represent functions in a common format, namely canonical SOP and POS forms. This common format for expressions facilitates the usage of common analysis techniques for function representation and implementation. The next section builds upon this common format to express individual function terms and to express functions.

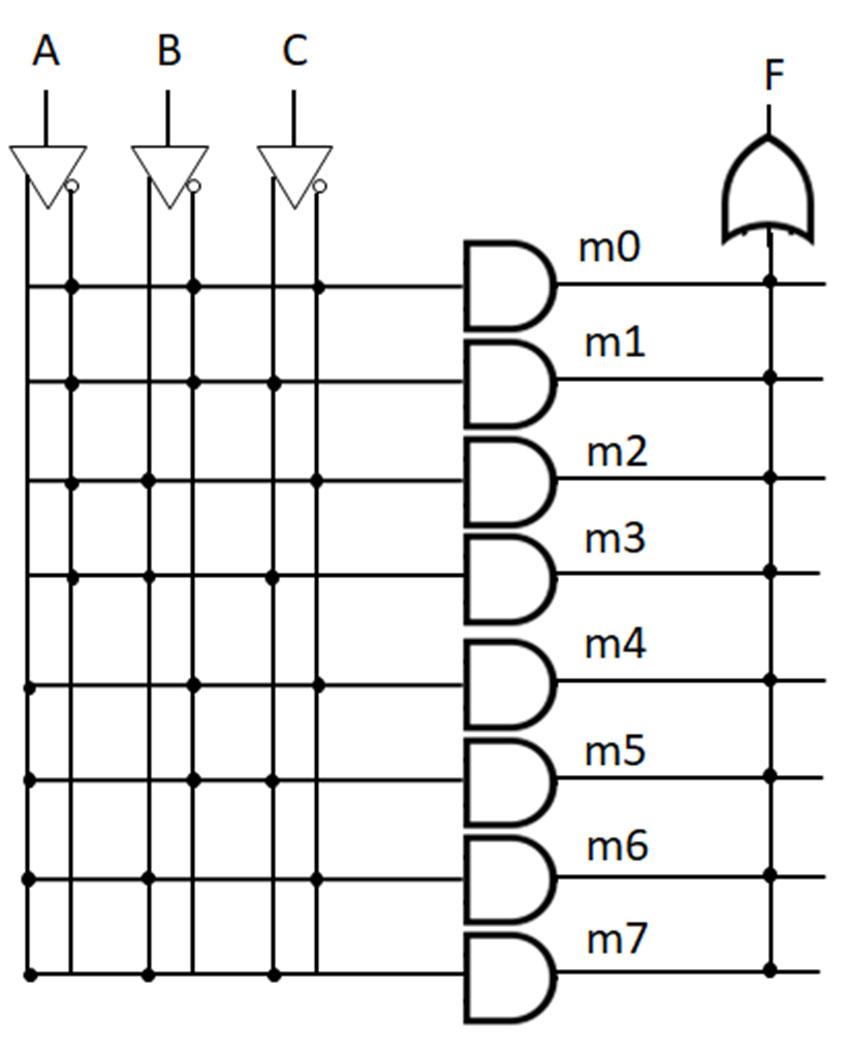

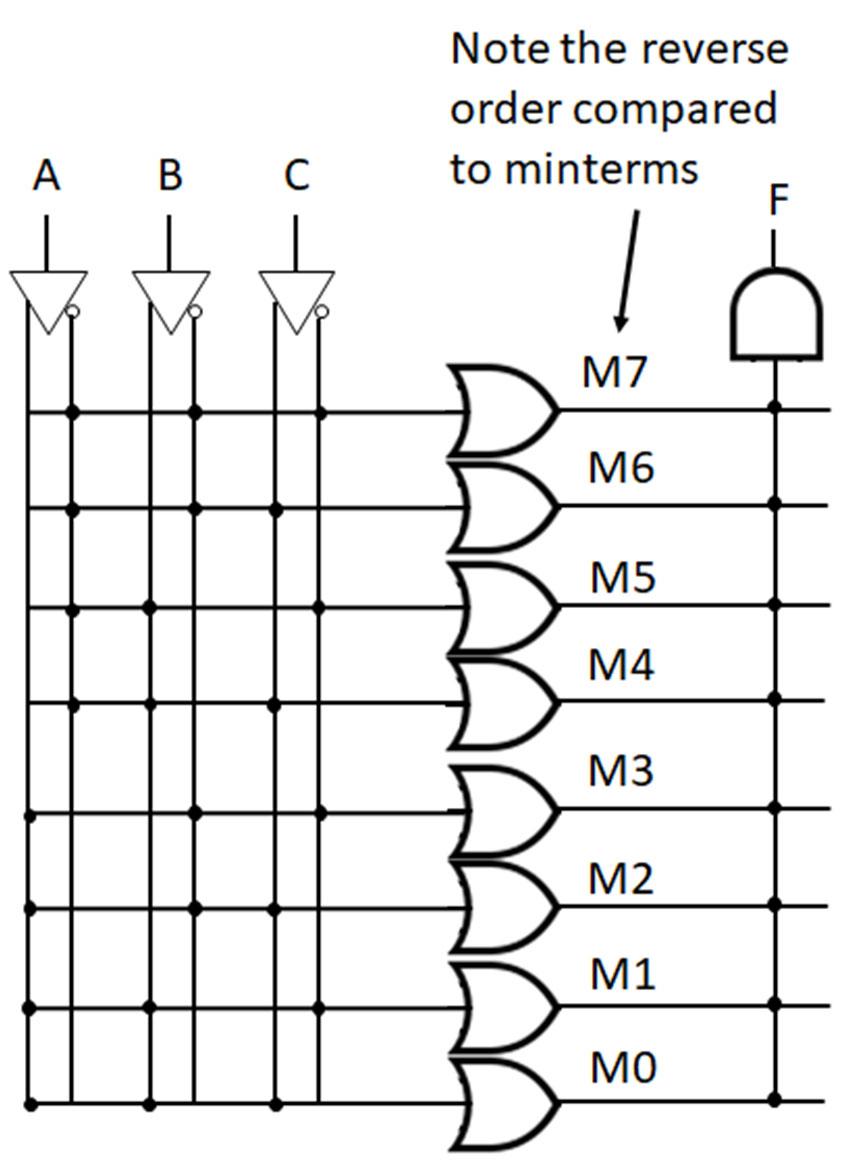

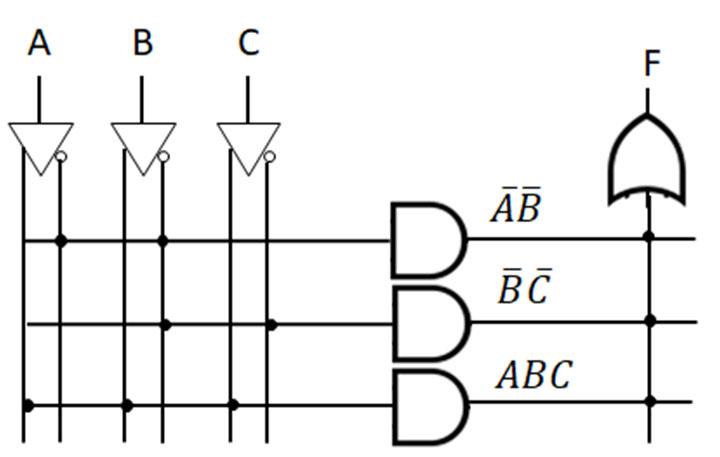

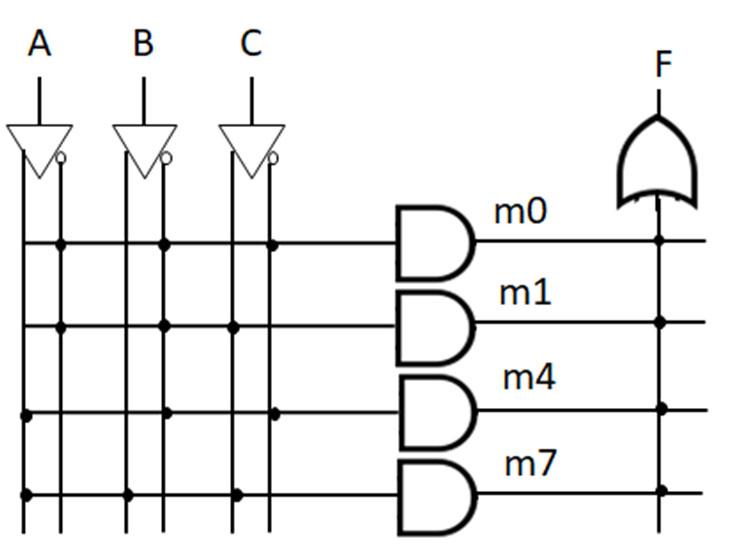

Minterms refer to the individual terms of a canonical SOP expression (1s entries in the truth table) and provide a shorthand way to refer to those terms. For the given function f below, the individual AND terms correspond to 1s entries in the truth table.