
Lessons Learned During One Year of Commercial Volumetric Video Production

TECHNICAL PAPER


By Oliver Schreer, Ingo Feldmann, Peter Kauff, Peter Eisert, Danny Tatzelt, Cornelius Hellge, Karsten Müller, Sven Bliedung, and Thomas Ebner

ABSTRACT commercial production. The following improvements In June 2018, Fraunhofer Heinrich Hertz Institute (HHI) together were integrated: with Studio Babelsberg, ARRI, Universum Film AG (UFA), and ■ Process-dependent color grading to support different Interlake founded the joint venture Volucap GmbH and opened a processing steps with best suited input datacommercial volumetric video studio on the film campus of Potsdam- ■ Mesh registration, to enhance temporal consistency Babelsberg. After a testing phase, commercial productions went live of the sequence of meshesin November 2018. The core technology ■ An encoding scheme to supfor volumetric video production is 3D port streaming of volumetric human body reconstruction (3DHBR), developed by Fraunhofer HHI. This technology captures real persons with our novel volumetric capture system and creates naturally moving dynamic 3D models, which can then be observed from arbitrary viewpoints in a virtual or augmented environment. Thanks to the large number of test productions and new requirements from customers, several lessons have been learned during the first year of commercial activity. The processing workflow for capture and production of volumetric video has been continuously evolved such as process-dependent color grading and a new encoding scheme for volumetric video. Furthermore, speThe creation of highly realistic dynamic 3D models of humans is useful in many different application scenarios. Obviously, the use of volumetric assets enriches the development of immersive augmented reality (AR) and virtual reality (VR) applications. 
However, volumetric assets at this level of quality are also requested in 2D movie production, where several, previously assets ■ Integration of a fully programmable lighting system to achieve best possible lighting conditions for fast movements of actors ■ A new relighting approach to adapt the texture of the volumetric asset to the lighting conditions in the virtual scene ■ First tests with a treadmill to overcome limitations in the capture volume for walking persons. The creation of highly realistic dynamic 3D models of humans is cific challenges have been tackled, for unknown, views of the actor useful in many different applicainstance, fast movements and relighting need to be rendered. tion scenarios. Obviously, the use of of actors. volumetric assets enriches the devel-

Introduction

opment of immersive augmented Keywords reality (AR) and virtual reality (VR) applications. However, Augmented reality (AR), production, virtual reality (VR), volumetric assets at this level of quality are also requested in volumetric video 2D movie production, where several, previously unknown,

views of the actor need to be rendered.

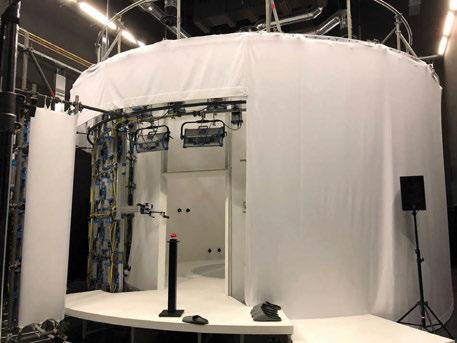

n this paper, some recent developments in the pro- Capture System Overview I fessional volumetric video production workflow are presented. After an overview of the capture system The capture system consists of an integrated multicam era and lighting system for full 360° acquisition. A cylin and the production workflow, several enhance- drical studio has been setup with a diameter of 6 m and ments of the workflow are presented, thanks to a height of 4 m (Fig. 1, left and right). The studio is the experiences gathered during the first year of equipped with 32 20-megapixel cameras arranged in 16 stereo pairs. The system completely relies on a visionDigital Object Identifier 10.5594/JMI.2020.3010399 based stereo approach for multiview 3D reconstruction Date of publication: xx xxx xxxx and does not require separate 3D sensors. Two hundred

FIGURE 1. View from outside the rotunda (left) and inside the rotunda (right).

twenty ARRI SkyPanels are mounted behind a diffusing tissue to allow for arbitrarily lit background and different lighting scenarios. This is a unique combination of integrated lighting and background. All other currently existing volumetric video studios rely on green screen and directed light from discrete directions such as the Mixed Reality Capture Studio by Microsoft1 and the studio by 8i.2

Workflow Overview

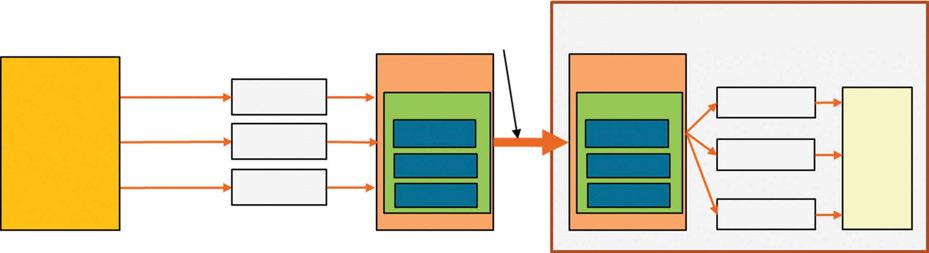

To allow for professional production, a complete and automated processing workflow has been developed, which is depicted in the flow diagram in Fig. 2. First, a color correction and adaptation of all cameras is performed, providing consistent images across the whole multiview camera system. After that, difference keying is performed to segment the foreground object and minimize further processing. All cameras are arranged in stereo pairs and are equally distributed in the cylindrical setup. This eases the extraction of 3D information from each stereo pair along its viewing direction. For stereoscopic view matching, the IPSweep algorithm [3], [4] is applied. This stereo-processing approach consists of an iterative algorithmic structure that compares projections of 3D patches from the left to the right image using point transfer via homography mapping. The resulting depth information of each stereo pair is fused into a common 3D point cloud per frame. Then, mesh postprocessing is applied to transform the data to a common computer-generated imagery (CGI) format. As the resulting mesh per frame is still too complex, a mesh reduction is applied that takes into account the capabilities of the target device [5] and adapts to sensitive regions (e.g., the face) of the model [6]. For desktop applications, meshes with 60k faces are used, whereas for mobile devices 20k faces are appropriate. Once this processing step is complete, a sequence of meshes and related texture files is available, where each frame consists of an individual mesh with its own topology. This has some drawbacks regarding the temporal stability and the properties of the related texture files. Therefore, a mesh registration that provides short sequences of registered meshes of the same topology is applied. To allow the user to easily integrate volumetric video assets into the final AR or VR application, a novel mesh encoding scheme has been developed. This scheme encodes the mesh, video, and audio independently using state-of-the-art encoding and multiplexes all tracks into a single MP4 file. On the application side, a related plugin is available for Unity and Unreal to process the MP4 file, decode the elementary streams (ESs), and render the volumetric asset in realtime. The main advantage is the generation of a highly compressed bit stream that can be directly streamed from a hard disk or over the network using, for example, hypertext transfer protocol (HTTP) adaptive streaming. Unity [7] and Unreal Engine [8] are the two most popular realtime render engines. They provide a complete 3D scene development environment and a realtime renderer that supports most of the available AR and VR headsets as well as operating systems.

FIGURE 2. Production workflow (stages shown in the diagram: color adaption / grading, keying, depth estimation, 3D data fusion, 3D point cloud, mesh postprocessing, mesh reduction, mesh encoding, mesh streaming, import to AR/VR app).
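The point transfer via homography mentioned above can be illustrated with the textbook plane-induced homography between two calibrated cameras. The following is only a minimal sketch of that general relation, not the IPSweep implementation; the function names, the intrinsics, the 0.2 m baseline, and the patch plane are all assumed values chosen for illustration.

```python
import numpy as np

def plane_homography(K_left, K_right, R, t, n, d):
    """Homography taking left-image pixels to right-image pixels for points on
    the plane n . X = d (X in left-camera coordinates), where X_right = R X + t."""
    n = np.asarray(n, dtype=float).reshape(3, 1)
    t = np.asarray(t, dtype=float).reshape(3, 1)
    # On the plane, t = t * (n . X) / d, so X_right = (R + t n^T / d) X.
    return K_right @ (R + (t @ n.T) / d) @ np.linalg.inv(K_left)

def transfer(H, pixel):
    """Apply a homography to a pixel (x, y) and dehomogenize."""
    p = H @ np.array([pixel[0], pixel[1], 1.0])
    return p[:2] / p[2]

def project(K, X):
    """Pinhole projection of a 3D point given in camera coordinates."""
    p = K @ X
    return p[:2] / p[2]

# Hypothetical rectified stereo pair (all numbers are illustrative assumptions).
K = np.array([[1000.0, 0.0, 640.0],
              [0.0, 1000.0, 360.0],
              [0.0, 0.0, 1.0]])
R = np.eye(3)
t = np.array([-0.2, 0.0, 0.0])
n, d = np.array([0.0, 0.0, 1.0]), 3.0   # fronto-parallel patch 3 m away

X = np.array([0.3, -0.1, 3.0])          # a point lying on that patch
H = plane_homography(K, K, R, t, n, d)
left_pixel = project(K, X)
right_pixel_via_H = transfer(H, left_pixel)
right_pixel_direct = project(K, R @ X + t)
```

A patch-sweeping matcher would evaluate such a transfer for many candidate depths and orientations and keep the hypothesis with the best photometric agreement; that search is not shown here.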

Process-Dependent Color Grading

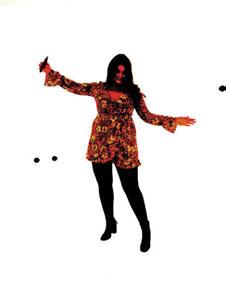

Thanks to the large number of test productions and new requirements from customers, several lessons have been learned during the first year of commercial activity. The processing workflow for capture and production of volumetric video has been continuously evolving, and novel processing modules and modifications in the workflow have been introduced. The unique lighting system offers diffuse lighting from any direction. As a result, the texture of the objects is quite flat without any internal shadows. In Fig. 3 (left), the original raw image after capture is shown. This diffuse lighting offers the best possible conditions for relighting of the dynamic 3D models afterward at the design stage of the VR experience. However, the raw image data have to be adapted according to the needs of different processing steps in the overall volumetric video workflow. Therefore, different color gradings are applied to provide the best-suited input data for the following algorithm modules:

■ Keying, that is, segmentation of the foreground object from the background
■ Depth processing
■ Creative grading for texturing.

For keying, a grading with high saturation is applied to optimally distinguish the foreground object from the lit white background. In Fig. 3 (right), an example of highly saturated grading for keying is depicted. In Fig. 4 (left), the grading for depth processing is shown. The aim of this grading is to achieve the best possible representation of structures. Hence, especially dark image parts of the person are graded brighter. Finally, because the diffuse lighting from all directions leads to a very flat image, a third grading is required to give the person a natural appearance, especially to recreate a natural skin tone (Fig. 4, right). This final grading is then used for backprojection of the texture onto the final 3D model.
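The idea of feeding differently graded copies of the same raw frame to different modules can be sketched in a few lines of NumPy. This is only an illustration under assumed, simplified operators: a linear saturation gain, a gamma curve for lifting shadows, and a per-pixel color-difference key. The function names, gains, and threshold are hypothetical and are not the gradings used in production.

```python
import numpy as np

def grade_saturation(rgb, gain):
    """Scale each pixel's offset from its own gray value (simple saturation gain)."""
    gray = rgb.mean(axis=-1, keepdims=True)
    return np.clip(gray + gain * (rgb - gray), 0.0, 1.0)

def grade_lift_shadows(rgb, gamma):
    """A gamma below 1 brightens dark regions more strongly than bright ones."""
    return np.clip(rgb, 0.0, 1.0) ** gamma

def difference_key(rgb, background, threshold):
    """Foreground mask: pixels whose color differs from the clean background plate."""
    return np.linalg.norm(rgb - background, axis=-1) > threshold

# Tiny 1x2 frame: a white background pixel and a muted skin-like pixel (assumed values).
raw = np.array([[[1.0, 1.0, 1.0], [0.80, 0.70, 0.65]]])
plate = np.ones_like(raw)                       # lit white background

for_keying = grade_saturation(raw, gain=2.0)    # input for the keying module
for_depth = grade_lift_shadows(raw, gamma=0.5)  # input for depth processing
mask = difference_key(for_keying, grade_saturation(plate, gain=2.0), threshold=0.3)
```

Each module receives its own graded copy while the raw data stay untouched, so the creative grade for texturing can be chosen independently of what works best for keying or depth estimation.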

FIGURE 3. Raw image (left) and grading for keying (right).

Novel Modules for Completion of End-to-End Workflows

The two most popular render engines, Unity and Unreal, do not provide optimal tools or workflows for integration of volumetric video. Currently, they can handle animated objects well, but temporally changing meshes are still challenging. To optimally support customers and end users in using this new media format, several additional tools and processing modules were developed. The result of the 3D video processing workflow is one independent mesh per frame, each with its own topology and texture atlas.

FIGURE 4. Grading for depth processing (left), and creative grading for texturing (right).

To create sequences of meshes with identical topology and to improve temporal stability of the related texture atlas, a mesh registration is performed [9]. After definition or automatic selection of a key mesh, succeeding meshes are computed by reshaping the key mesh to the geometry of neighboring frames while preserving topology and local structures. Bidirectional processing is performed to better deal with sudden topology changes of the mesh sequence. Once the deviations of the registered mesh reach a defined threshold, a new key mesh is set. This mesh registration has two advantages. First, a new mesh has to be created only for key frames, while for the registered meshes only the 3D vertex positions have to be adapted. Second, the texture atlas remains the same because the mesh topology is unchanged.

FIGURE 5. Mesh encoding, multiplexing, streaming, and decoding (diagram: the mesh, texture map, and audio of the volumetric video are encoded into mesh, video, and audio ESs, multiplexed into the tracks of one MP4 bit stream, and then demultiplexed and decoded by the player / plugin, e.g., Unity/Unreal).
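The key-mesh rule described for the registration (start a new key mesh once the deviation reaches a threshold) can be sketched as a simple segmentation of the frame sequence. This is a forward-only illustration; the bidirectional processing mentioned in the text is not modeled, and `deviation` is a hypothetical callback standing in for the actual registration error.

```python
from typing import Callable, List

def segment_into_key_meshes(num_frames: int,
                            deviation: Callable[[int, int], float],
                            threshold: float) -> List[List[int]]:
    """Group frame indices into runs that share one key mesh.

    deviation(key, frame) is assumed to return the residual error after the
    key mesh has been deformed to fit `frame`.
    """
    if num_frames <= 0:
        return []
    segments: List[List[int]] = []
    key, current = 0, [0]
    for frame in range(1, num_frames):
        if deviation(key, frame) >= threshold:
            # Registration drifted too far: close the run and start a new key mesh.
            segments.append(current)
            key, current = frame, [frame]
        else:
            current.append(frame)
    segments.append(current)
    return segments

# Toy deviation that grows linearly with the distance from the key frame.
toy_segments = segment_into_key_meshes(
    7, deviation=lambda key, frame: 0.1 * (frame - key), threshold=0.25)
```

Within each returned run, only vertex positions change from frame to frame, which is what allows one texture atlas layout to be reused for the whole run.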

A texture atlas example can be seen in Fig. 8 (left). For this, texture information of adjacent triangles is combined into a texture patch if the deviation between the normal vectors of all triangles does not exceed a given threshold. Otherwise, a new texture patch is created. Then, all texture patches are arranged in a texture atlas, starting with the largest patch in the bottom-left corner. To efficiently apply a classical block-based video coding method, two mechanisms are incorporated into the texture atlas. First, the topology is preserved across each registered mesh sequence, that is, shape and position of all patches within the texture atlas remain the same, while only the video content of each patch may change. Second, the empty space between all patches is interpolated (as shown in Fig. 8, right), so that block-based video coding does not produce high bit rates when coding image blocks that span patch boundaries. In addition to the higher encoding efficiency, this texture atlas processing also allows easier grading of the texture for the registered period of meshes.
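Filling the empty space between patches can be approximated by growing the patch colors outward until every texel is covered. The sketch below uses repeated averaging over already-filled 4-neighbors; this is an assumed stand-in for whatever interpolation is actually used, and the function names are hypothetical.

```python
import numpy as np

NEIGHBORS = ((1, 0), (-1, 0), (0, 1), (0, -1))

def shift(a, dy, dx):
    """Shift an array by (dy, dx) without wraparound; vacated cells are zero."""
    out = np.zeros_like(a)
    h, w = a.shape[:2]
    out[max(0, dy):min(h, h + dy), max(0, dx):min(w, w + dx)] = \
        a[max(0, -dy):min(h, h - dy), max(0, -dx):min(w, w - dx)]
    return out

def fill_atlas_gaps(atlas, occupied):
    """Fill unoccupied texels with the mean of their already-filled neighbors."""
    out = np.where(occupied[..., None], atlas, 0.0).astype(float)
    filled = occupied.copy()
    while not filled.all():
        weight = filled.astype(float)
        acc = sum(shift(out * weight[..., None], dy, dx) for dy, dx in NEIGHBORS)
        cnt = sum(shift(weight, dy, dx) for dy, dx in NEIGHBORS)
        grow = ~filled & (cnt > 0)
        if not grow.any():
            break  # nothing to grow from (atlas has no occupied texel)
        out[grow] = acc[grow] / cnt[grow][:, None]
        filled |= grow
    return out

# Toy atlas: one occupied texel whose color should spread over the empty area.
atlas = np.zeros((4, 4, 3))
occupied = np.zeros((4, 4), dtype=bool)
atlas[0, 0] = (0.2, 0.4, 0.6)
occupied[0, 0] = True
padded = fill_atlas_gaps(atlas, occupied)
```

Occupied texels are never modified, so the rendered texture is unchanged; only the unused regions become smooth, which avoids sharp edges inside coding blocks that straddle patch boundaries.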

The registered mesh sequence is then compressed and multiplexed into an MP4 file and can, therefore, be easily integrated into the dedicated plugins for Unity or Unreal. The meshes are encoded with a standard mesh encoder (Fig. 5). Currently, Draco [10] is used, in which the connectivity coding is based on Edgebreaker [11]. Other mesh codecs are Corto [12], Open 3D Graphics Compression of the Moving Picture Experts Group (MPEG) and Khronos Group [13], or MPEG activities on Point Cloud Compression [14] (where triangular mesh compression support is currently investigated).

The texture atlas is currently encoded with H.264/advanced video coding (AVC), while an extension to H.265/high efficiency video coding (HEVC) and H.266/versatile video coding (VVC) is foreseen in the future. This will lead to additional data rate reduction for the compressed stream while maintaining the same level of quality. Finally, the audio signal is encoded with a standard audio encoder. The three different ESs are multiplexed into an MP4 file ready for transmission. On the receiver side, plugins for Unity and Unreal allow for easy integration of volumetric video assets into the target AR or VR application. These plugins include the demultiplexer as well as related decoders and perform realtime decoding of the mesh sequence. Typical data rates in our first experiments are 20 Mb/s (footnote i) for registered mesh sequences targeting mobile devices with 20k faces per mesh. For desktop applications that are capable of rendering meshes with 60k faces, a data rate of 39 Mb/s (footnote ii) is achieved for registered meshes. In both experiments, the texture resolution is 2048 × 2048 pixels at a frame rate of 25 frames/sec.
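As a quick plausibility check, the quoted totals can be recomputed from the per-track rates given in the footnotes (mesh, video, and audio). The short sketch below also derives the average mesh bits per frame and per face at 25 frames/sec; it assumes 1 Mb = 10^6 bits and 1 kb = 10^3 bits, and the helper names are illustrative.

```python
def total_rate_mbps(mesh_mbps, video_mbps, audio_kbps):
    """Sum of the three elementary-stream rates in Mb/s."""
    return mesh_mbps + video_mbps + audio_kbps / 1000.0

def bits_per_frame(rate_mbps, fps):
    """Average number of bits spent per frame at the given rate."""
    return rate_mbps * 1_000_000 / fps

mobile_total = total_rate_mbps(10, 10, 133)    # 20k-face sequence, about 20 Mb/s
desktop_total = total_rate_mbps(29, 10, 133)   # 60k-face sequence, about 39 Mb/s

mobile_mesh_bits_per_face = bits_per_frame(10, 25) / 20_000    # 20.0
desktop_mesh_bits_per_face = bits_per_frame(29, 25) / 60_000   # about 19.3
```

Under these assumptions, the mesh track costs roughly 20 bits per face and frame in both configurations, while the video track stays constant because the texture resolution is the same.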

Production Challenges

Fast Movements

The recording of very fast movements, even of individual objects, demands a quick adaptation of the lighting conditions on the set. Normally, this would be possible only by rebuilding the lighting setup, at considerable cost in production time. Volucap, however, uses a fully programmable lighting system, which allows light moods or templates to be loaded and used at the touch of a button. This is particularly important because it allows Volucap to react easily to changing requirements during production. In the example with the basketball player Josh Mayo (Fig. 6), the flexible light control made it possible to offer special light adjustments for recording very fast movements without losing time mounting lights.

Relighting of Actors

For a convincing integration of an actor into a virtual scene, it must be possible to adjust the lighting afterward, for example, if the exact final illumination in the 3D scene was not known at the time of shooting or if certain light settings were necessary due to special clothing or movements. Several convenient ways have, therefore, been developed to transfer the lighting of the 3D environment onto the actors. These integrate seamlessly with current pipelines such as Autodesk Maya, Nuke, or Houdini.

i 20k Seq.: Individual rates for mesh, video, and audio data are 10 Mb/s, 10 Mb/s, and 133 kb/s, respectively.
ii 60k Seq.: Individual rates for mesh, video, and audio data are 29 Mb/s, 10 Mb/s, and 133 kb/s, respectively.

FIGURE 6. Fast movements like those of a basketball player (left) require high standards of the lighting system: view into the ceiling of the capture stage (right).

FIGURE 7. Same actor with different lighting (left) and two versions of relit textures integrated in the VR environment (right).

In the left image in Fig. 7, three different versions of the relit texture of the basketball player are shown. The right image shows two different texture versions of the basketball player in the virtual environment. The left version of the basketball player fits convincingly into the ambient lighting of the scene. A before–after comparison of an original texture atlas and the same texture with integrated ambient lighting is shown in Fig. 8.

Treadmill

The cylindrical studio offers a recording volume with an average diameter of 3 m, which is quite comfortable for many productions. However, more extensive movements, for example, walking along a road, cannot be recorded without additional measures. This spatial limitation is bypassed with the help of a treadmill (Fig. 9). This allows two different approaches to be taken. On the one hand, a walking movement can simply be recorded over a longer period of time. On the other hand, a short walking cycle can be recorded and played back in a loop later, as is also the case with conventional animations.

FIGURE 8. Original texture atlas (left), and texture with integrated ambient lighting (right).

FIGURE 9. Extensive walking movements captured on a treadmill.

Summary

After one year of commercial production in the new volumetric video studio of Volucap GmbH, a number of modifications and improvements in the workflow have been incorporated. A major improvement related to processing is the introduction of different gradings of the original footage accommodating the requirements of the workflow. To provide a complete end-to-end workflow satisfying the needs of the customers, a mesh registration and mesh encoding scheme has been developed. This significantly reduces the overall amount of data of the volumetric assets and allows for easy integration into standard render engines. Finally, specific challenges have been tackled that resulted from recent productions. Examples were given for fast-moving actors, creative relighting according to the final VR scene, and continuous walking scenarios. The high demands on this new capture and production technology will lead to additional challenges, which Fraunhofer Heinrich Hertz Institute (HHI) and Volucap will research and solve together.

References

1. “Mixed Reality Capture Studio Microsoft,” Accessed: 6 Aug. 2020. [Online]. Available: https://www.microsoft.com/en-us/mixed-reality/capture-studios
2. “8i,” Accessed: 6 Aug. 2020. [Online]. Available: https://8i.com/
3. W. Waizenegger et al., “Real-Time Patch Sweeping for High-Quality Depth Estimation in 3D Videoconferencing Applications,” Presented at SPIE Conf. Real-Time Image Video Process., San Francisco, CA, 2011, doi: 10.1117/12.872868.
4. W. Waizenegger et al., “Real-Time 3D Body Reconstruction for Immersive TV,” Proc. 23rd Int. Conf. Image Process. (ICIP 2016), Phoenix, AZ, pp. 360–364, 25–28 Sep. 2016.
5. T. Ebner et al., “Multi-View Reconstruction of Dynamic Real-World Objects and Their Integration in Augmented and Virtual Reality Applications,” J. Soc. Inf. Display, 25(3):151–157, Mar. 2017, doi: 10.1002/jsid.538.
6. R. M. Diaz Fernandez et al., “Region Dependent Mesh Refinement for Volumetric Video Workflows,” IEEE Int. Conf. 3D Immersion (IC3D), Brussels, Belgium, pp. 1–8, Dec. 2019.
7. “Unity,” Accessed: 6 Aug. 2020. [Online]. Available: https://unity.com
8. “Unreal Engine,” Accessed: 6 Aug. 2020. [Online]. Available: https://www.unrealengine.com
9. W. Morgenstern, A. Hilsmann, and P. Eisert, “Progressive Non-Rigid Registration of Temporal Mesh Sequences,” Proc. Eur. Conf. Visual Media Production, London, U.K., pp. 1–10, Dec. 2019.
10. Google, “Introducing Draco: Compression for 3D Graphics,” Accessed: 20 Jun. 2019. [Online]. Available: https://opensource.googleblog.com/2017/01/introducing-draco-compression-for-3d.html
11. J. Rossignac, “Edgebreaker: Connectivity Compression for Triangle Meshes,” IEEE Trans. Vis. Comput. Graph., 5(1):47–61, Jan./Mar. 1999.
12. “Corto,” Accessed: 20 Jun. 2019. [Online]. Available: https://github.com/cnr-isti-vclab/corto
13. “Open 3D Graphics Compression,” Accessed: 20 Jun. 2019. [Online]. Available: https://github.com/KhronosGroup/glTF/wiki/Open-3D-Graphics-Compression
14. S. Schwarz et al., “Emerging MPEG Standards for Point Cloud Compression,” IEEE J. Emerg. Sel. Top. Circuits Syst., 9(1):133–148, Mar. 2019.

About the Authors

Oliver Schreer is the head of the Immersive Media & Communication Research Group, Vision & Imaging Technologies Department, Fraunhofer Heinrich Hertz Institute (HHI), Berlin, Germany, and an associate professor at the Technical University of Berlin, Berlin. Since December 2019, he has been coordinating the Horizon 2020 Coordination and Support Action XR4ALL. His main research fields are 3D video processing and immersive and interactive media services and applications exploiting augmented and virtual reality technologies. He has published more than 100 articles and was the main editor of the books 3D Videocommunication (2005) and Media Production, Delivery and Interaction for Platform Independent Systems: Format-Agnostic Media (2014), both published by Wiley. In May 2019, he and his colleagues, I. Feldmann and P. Kauff, were awarded the Joseph-von-Fraunhofer Prize “Realistic people in virtual worlds—A movie as a true experience.”

Ingo Feldmann is the head of the Immersive Media & Communication Group, Vision & Imaging Technologies Department, Fraunhofer HHI. He received a Dipl.-Ing. degree in electrical engineering from the Technical University of Berlin, in 2000. Since September 2000, he has been with Fraunhofer HHI, where he has been engaged in various research activities in the field of 2D image processing, 3D scene reconstruction and modeling, digital cinema, multiview projector–camera systems, realtime 3D video conferencing, and immersive TV applications. His current research focuses on the field of future virtual reality, augmented reality and mixed reality applications, and volumetric video acquisition. In May 2019, he and his colleagues, O. Schreer and P. Kauff, were awarded the Joseph-von-Fraunhofer Prize “Realistic people in virtual worlds—A movie as a true experience.”

Peter Kauff is the former head of the Vision & Imaging Technologies Department, Fraunhofer HHI. He also acted as a co-head of the Capture & Display Systems Group in his department and was a co-founder of the “Tomorrow’s immersive Media Experience Laboratory (TiME Lab)” at Fraunhofer HHI. He received a Dipl.-Ing. degree in electrical engineering and telecommunication from the Technical University of Aachen, Aachen, Germany, in 1984. He has been with Fraunhofer HHI since then. He was involved in numerous German and European projects related to advanced 3D video, 3D cinema, 3D television, tele-presence, and immersive media. In May 2019, he and his colleagues, O. Schreer and I. Feldmann, were awarded the Joseph-von-Fraunhofer Prize “Realistic people in virtual worlds—A movie as a true experience.”

Peter Eisert is a professor for visual computing at the Humboldt University of Berlin, Berlin, Germany, and heads the Vision & Imaging Technologies Department at Fraunhofer HHI. He received a PhD degree with the highest honors from the University of Erlangen, Erlangen, Germany, in 2000. Then, he worked as a postdoctoral fellow at Stanford University, Stanford, CA, on 3D image analysis, facial animation, and computer graphics. In 2002, he joined Fraunhofer HHI, where he is coordinating and initiating numerous national and international research projects. He has published more than 200 conference and journal papers and is an Associate Editor of the International Journal of Image and Video Processing as well as a member of the Editorial Board of the Journal of Visual Communication and Image Representation. His research interests include 3D image/video analysis and synthesis, face and body processing, image-based rendering, deep learning, computer vision, and computer graphics in application areas like multimedia, security, and medicine.

Danny Tatzelt works as a freelance cinematographer, editor, and director based in Berlin. He graduated with a degree in cinematography from the University for Film and Television, Konrad Wolf, Potsdam-Babelsberg, in 2014 with the documentary Viajar, followed by a master scholars program completed with a 360° film Berlin in 2016. During his academic years, he held the Studienstiftung des deutschen Volkes scholarship. In addition to classical film works, he has also specialized in virtual reality (VR) and augmented reality (AR) film productions. In close collaboration with Fraunhofer HHI, he designed the Volumetric Capture Studio that led to the founding of Volucap GmbH, Potsdam-Babelsberg, Germany, the first commercial volumetric video studio on the European continent.

Cornelius Hellge has headed the Multimedia Communications Group, Fraunhofer HHI, since 2015. He received a Dipl.-Ing. degree in media technology from the Ilmenau University of Technology, Ilmenau, Germany, in 2006 and a Dr.-Ing. degree (summa cum laude) with distinction from the Berlin University of Technology, Berlin, Germany, in 2013. In 2014, he was a visiting researcher with the Network Coding and Reliable Communications Group, Massachusetts Institute of Technology, Cambridge, MA. He is responsible for various scientific as well as industry-funded projects. Together with his team, he regularly contributes to Third Generation Partnership Project (3GPP) (RAN1, RAN2, and SA4), Moving Picture Experts Group (MPEG), Joint Collaborative Team on Video Coding (JCT-VC), and JVET. He has authored more than 60 journal and conference papers, predominantly in the area of video communication and next-generation networks, and he holds more than 30 internationally issued patents and patent applications in these fields. His current research interest is in new formats for volumetric video for mixed reality services over 5G networks.

Karsten Müller received a Dr.-Ing. degree in electrical engineering and a Dipl.-Ing. degree from the Technical University of Berlin, Germany, in 2006 and 1997, respectively. He has been with the Fraunhofer Institute for Telecommunications, HHI, since 1997, where he is currently the head of group. His research interests include coding of 3D and multidimensional video, as well as compression and representation of neural networks. He has been involved in international standardization activities, successfully contributing to the International Organization for Standardization/International Electrotechnical Commission (ISO/IEC) Moving Picture Experts Group for work items on visual media content description, multiview, multitexture, 3D video coding, and neural network representation. Here, he cochaired an ad hoc group on 3D video coding from 2003 to 2012. He has served as the chair and an editor for IEEE conferences and as an associate editor for the IEEE Transactions on Image Processing.

Sven Bliedung is a CG industry veteran and the director and chief executive officer of Volucap, a state-of-the-art volumetric capture studio located in Potsdam-Babelsberg, Germany. He founded Slice, an immersive media production company, after founding a high-profile visual effects company called VFXbox. He is also the founder of the Virtual Reality Association Berlin Brandenburg (VRBB e.V.).

Thomas Ebner joined the Fraunhofer HHI in 2008. He is a scientist and a software developer in the Immersive Media & Communication Group of the Vision & Imaging Technologies Department. As a project manager for volumetric video for virtual reality/augmented reality (VR/AR), he took a leading role in integrating dynamic 3D reconstructions of real persons into immersive experiences and expanding the group’s X Reality activities. Since 2018, he has also been the chief technology officer of Volucap, a volumetric capture studio located in Potsdam-Babelsberg, Germany. He holds a diploma degree in media and computer science from the Technical University of Dresden, Dresden, Germany. From 2005 to 2006, he was with the Division of Computer Engineering, Digital Dongseo University Busan, Busan, South Korea.

Presented at IBC 2019, Amsterdam, The Netherlands, 12–17 September 2019. This paper is published here by kind permission of the IBC & Fraunhofer HHI.