
Data Integrity and Computer System Validation (CSV)



By Emma Boulton

Trevor has 25 years’ experience in the pharmaceutical industry as an IT, CSV and Data Integrity specialist, including 15 years in Quality Assurance for sponsors and service providers and as an independent consultant. Trev has taught on the MSc in Quality Management at Cranfield and teaches at the University of Hertfordshire on its MSc course in Pharmacovigilance. Trev is a Fellow of the Research Quality Association (FRQA) and is a tutor for Quality Risk Management and Data Integrity. He is currently the Chair of the RQA’s Digital Information Governance Information Technology (DIGIT) committee and Cross-GxP Data Integrity working party.

Trevor’s presentation, drawing on 25 years of knowledge of this very niche topic, was both engaging and enlightening. It started with some key definitions and took us through the data integrity aspects of validation, with a tie-in to the General Data Protection Regulation (GDPR).

What is a computer system?

It’s a set of hardware, software, procedures and people which together perform one or more data capture, processing, analysis and reporting functions, e.g. on regulatory study data.

What is validation?

The Food and Drug Administration (FDA) defines validation as establishing documented evidence which provides a high degree of confidence that a specific process will consistently produce a product meeting its predetermined specifications and quality attributes.

ISO 9000 defines it as confirmation by examination and through provision of objective evidence that the requirements for a specific intended use or application have been fulfilled.

Proving that the system is fit for purpose

The presentation then moved on to Good Automated Manufacturing Practice (GAMP) and the problems that can be encountered when deciding which GAMP software category an application falls into:

• Cat 1 – Infrastructure Software - Qualified installation (name, version), indirectly tested through use of application

• Cat 4 – Configured Software - Qualified installation & configuration. Testing of configuration (operational qualification (OQ)/ performance qualification (PQ))

• Cat 5 – Custom Applications - Full lifecycle support and validation

However, Trevor stated that the categories follow the rules outlined below:

• If I write a PV System that can be configured for the individual, it is Cat 5

• If I sell a service provider (SP) that system, for the SP it is Cat 4

• If I sell ‘pre-built configurations’ to the client, it is Cat 3*

*This is somewhat theoretical and significant testing would be recommended.

Moving on to validation, typical validation models and risk assessments are shown in images 1 and 2.

There are four steps for validation, as listed below:

1. A system of Quality Assurance

2. A GxP risk / criticality assessment, as per point 1

3. The intended purpose documented, as per point 1

4. Evidence that the system performs to point 3, as per point 1 and in accordance with point 2
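Steps 2 to 4 can be pictured as a simple traceability check: each documented requirement carries a risk rating and should be linked to test evidence. The sketch below is only an illustration under stated assumptions. The `Requirement` class, the `unverified` helper, the risk labels and the requirement IDs are all hypothetical and were not part of the presentation.

```python
from dataclasses import dataclass, field


@dataclass
class Requirement:
    """Hypothetical requirement record (illustration only)."""
    req_id: str
    description: str                                   # intended purpose (step 3)
    risk: str                                          # "high", "medium" or "low" (step 2)
    passed_tests: list = field(default_factory=list)   # test evidence (step 4)


def unverified(requirements):
    """Return IDs of requirements with no passing test evidence, highest risk first."""
    order = {"high": 0, "medium": 1, "low": 2}
    gaps = [r for r in requirements if not r.passed_tests]
    return [r.req_id for r in sorted(gaps, key=lambda r: order[r.risk])]
```

For example, given `Requirement("URS-1", "Capture case intake", "high", ["OQ-01"])`, `Requirement("URS-2", "Print line listing", "low")` and `Requirement("URS-3", "Submit ICSR", "high")`, calling `unverified` on the list returns `["URS-3", "URS-2"]`: the two requirements still lacking evidence, with the high-risk one first.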

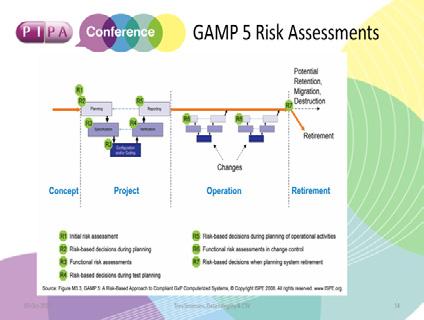

Trevor was keen to reiterate that risk assessments for a system should start during the planning stage and continue through to system retirement (see image 3).

Image 1

Image 2

Image 3

One point to consider with our GxP systems is that data integrity, and therefore GDPR, requirements should be embedded as part of the risk assessment and continue through the system lifecycle. If GDPR is not embedded, you need to ask how a request to erase or correct someone’s personal data can be fulfilled, and how you can prove that it has been done. With product transfers becoming more and more frequent, this can quickly escalate into a problem that no one wants to face.
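To make the "how can you prove it" question concrete, here is a minimal sketch of an erasure request that leaves evidence behind. Everything in it is an assumption for illustration: the in-memory `records` store, the choice of `PERSONAL_FIELDS`, and the function names are hypothetical, and whether non-personal safety data may be retained is a legal question outside this sketch.

```python
import hashlib
import json
from datetime import datetime, timezone

# Hypothetical in-memory stores standing in for a validated database.
records = {
    "subj-001": {"name": "Jane Doe", "email": "jane@example.com", "event": "headache"}
}
audit_trail = []

PERSONAL_FIELDS = ("name", "email")  # assumption: which fields count as personal data


def erase_personal_data(subject_id: str, requested_by: str) -> dict:
    """Blank the personal fields and append an audit entry as evidence of erasure."""
    record = records[subject_id]
    erased = [f for f in PERSONAL_FIELDS if record.get(f) is not None]
    for name in erased:
        record[name] = None
    entry = {
        "action": "erase",
        "subject_id": subject_id,
        "fields": erased,
        "requested_by": requested_by,
        "timestamp": datetime.now(timezone.utc).isoformat(),
    }
    # A checksum over the entry makes later changes to the evidence detectable.
    entry["checksum"] = hashlib.sha256(
        json.dumps(entry, sort_keys=True).encode()
    ).hexdigest()
    audit_trail.append(entry)
    return entry


def verify_entry(entry: dict) -> bool:
    """Recompute the checksum to confirm the audit entry has not been altered."""
    body = {k: v for k, v in entry.items() if k != "checksum"}
    expected = hashlib.sha256(json.dumps(body, sort_keys=True).encode()).hexdigest()
    return entry["checksum"] == expected
```

Calling `erase_personal_data("subj-001", "dpo@example.com")` blanks the name and email, keeps the event data, and records who asked, what was erased and when. `verify_entry` can later be run against the stored entry during an audit.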

Initial Risk Assessment

• GxP risk / determination

• System impact

• What is the data supporting?

  • Licensed products

  • Clinical trial data (primary / secondary endpoint / reconstruction)

Planning Risk Assessment

• Supplier assessment

• Lifecycle / deliverables approach

• What do you need to validate?

Another topic Trevor covered was audits. The right to audit and inspection support are routinely written into contracts and agreements, but what does that mean in practice? Are locations, and therefore legal jurisdictions, agreed upfront as well? Trevor told a humorous story of going to an audit in the US for a client. The team needed for the audit had travelled from India to the US. When the sessions were being run, the body clocks of the poor auditees were telling them it was the middle of the night and they should be asleep, which wasn’t the most productive environment for an audit.

Summary

• Data integrity is always intended to be a focus of CSV

• Focus on the data criticality & specification of its requirements

• GDPR - understanding subject data and their rights

• The validated system should be based on the data flow within the system, from intake through to outputs (e.g. ICSR reporting, line-listings)

• Success requires a partnership between QA, the business, IT & engineering to mitigate the risks to the data

• CSV must follow a controlled process

• To get a positive result from data integrity validation you need negativity. In simple terms, sometimes a test needs to fail to confirm that the system works as expected or required. In PV terms, if you are testing reporting rules, it is as important to test that the rules do not fire in the wrong circumstances as it is to test that they do fire in the right ones.
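The point about negative testing can be illustrated with a small sketch. The rule below is hypothetical: the `Case` fields, the `is_expedited` function and its criteria are assumptions made for illustration, not a real regulatory rule set or anything shown in the presentation. What matters is that the test set contains cases where the rule must stay silent as well as a case where it must fire.

```python
from dataclasses import dataclass


@dataclass
class Case:
    """Hypothetical, simplified adverse event case (illustration only)."""
    serious: bool
    unexpected: bool


def is_expedited(case: Case) -> bool:
    """Hypothetical reporting rule: fire only for serious AND unexpected cases."""
    return case.serious and case.unexpected


def run_rule_tests() -> dict:
    """Run positive and negative checks and return pass/fail by test name."""
    return {
        # Positive test: the rule must fire in the right circumstances.
        "fires_when_serious_and_unexpected": is_expedited(Case(True, True)) is True,
        # Negative tests: the rule must NOT fire in the wrong circumstances.
        "silent_when_not_serious": is_expedited(Case(False, True)) is False,
        "silent_when_expected": is_expedited(Case(True, False)) is False,
        "silent_when_neither": is_expedited(Case(False, False)) is False,
    }
```

If the rule were accidentally configured with "or" instead of "and", the positive test would still pass and only the negative tests would reveal the fault.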

Trevor gave us an invaluable insight into the world of data integrity and how it relates to what we do on a daily basis. Personally, my take-home was a better understanding of what is involved in validating a system, and that GDPR, data integrity and validation of the system’s use should be part of contract set-up and review with vendors.

Emma Boulton

Napp Pharmaceuticals Ltd

PIPA Committee Member