
SENSING

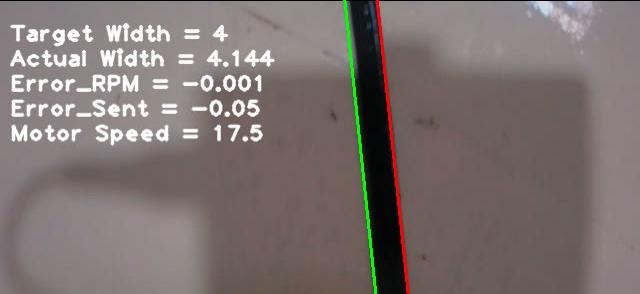

Printing with sustainable materials is challenging because of the many print parameters that must be recalibrated for each new batch of material. We therefore saw an opportunity to automate this task, which conventionally relies on meticulous visual inspection and manual fine-tuning. As seen in Fig. 1, an OpenCV script detects the print, measures its width, and, using the known distance from the camera to the print, calculates the corresponding error in the RPM of the extruder motor. Robot speed and extrusion temperature are kept constant during the test while the extruder speed is calibrated. Once calibration is done, we can proceed with the actual print. This proof of concept could be extended to sensing and feedback over the course of an entire print, using thermal and/or depth cameras to detect the flow-rate variations that are common with pellet extruders or with older batches of material affected by humidity.
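The width-to-RPM step can be sketched in a few lines. This is a minimal, stdlib-only Python sketch, not the original OpenCV script. It replaces contour detection with a simple brightness threshold on one image row. It converts pixels to millimetres with a pinhole-camera model, assuming the camera's focal length (in pixels) and its distance to the print are known. It also assumes the extruder's output is proportional to motor RPM. With robot speed and layer height held constant, bead width then scales with flow, so the RPM is scaled by the ratio of target width to measured width. All function names, thresholds and limits here are hypothetical.

```python
"""Hypothetical sketch: measure bead width from an image row and correct extruder RPM.

Assumptions (not from the original project):
- the bead is brighter than the background on a single grayscale row,
- the camera follows a pinhole model with a known focal length in pixels,
- extruder output is proportional to motor RPM.
"""


def bead_width_px(row, threshold=128):
    """Return the longest run of pixels brighter than `threshold` (bead width in px)."""
    best = run = 0
    for value in row:
        # Extend the current run on a bright pixel, reset it on a dark one.
        run = run + 1 if value > threshold else 0
        best = max(best, run)
    return best


def px_to_mm(width_px, distance_mm, focal_length_px):
    """Pinhole model: real width = pixel width * camera distance / focal length."""
    return width_px * distance_mm / focal_length_px


def corrected_rpm(current_rpm, measured_width_mm, target_width_mm,
                  min_rpm=0.0, max_rpm=100.0):
    """Scale RPM so the bead width approaches the target width.

    With constant robot speed and layer height, flow is taken to be proportional
    to bead width, and flow is assumed proportional to RPM, so the new RPM is
    current_rpm * target / measured, clamped to an assumed motor range.
    """
    if measured_width_mm <= 0:
        raise ValueError("no bead detected in the image row")
    rpm = current_rpm * target_width_mm / measured_width_mm
    return min(max(rpm, min_rpm), max_rpm)


if __name__ == "__main__":
    # Synthetic row: dark background with a 40 px bright bead in the middle.
    row = [20] * 10 + [200] * 40 + [20] * 10
    width_mm = px_to_mm(bead_width_px(row), distance_mm=300, focal_length_px=1200)
    new_rpm = corrected_rpm(current_rpm=30, measured_width_mm=width_mm, target_width_mm=8.0)
    print(f"width: {width_mm:.1f} mm, new RPM: {new_rpm:.1f}, error: {new_rpm - 30:+.1f} RPM")
```

In this example, a 40 px bead seen from 300 mm with a 1200 px focal length is 10 mm wide. For an 8 mm target at 30 RPM, the sketch suggests 24 RPM, an error of -6 RPM. The clamp keeps the command inside the motor's (assumed) range. Running the same correction repeatedly on fresh frames would turn this one-off calibration into the continuous feedback loop described above.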

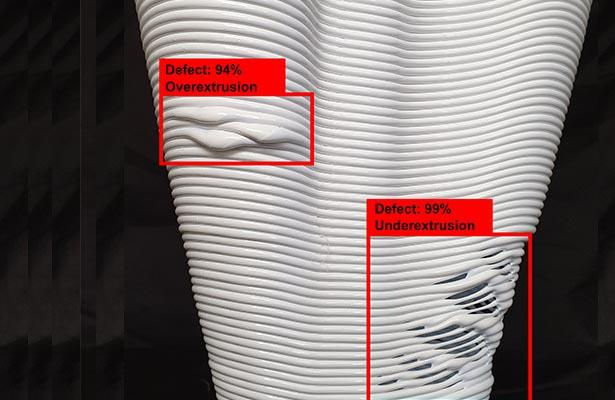

AI-Build, a London-based company, recently revealed a similar vision-based feedback system integrated with its robotic printing setups. The company built a dataset of print failures and the ways to troubleshoot them, then incorporated the learned parameters into a model that corrects a print in real time. This system reflects our own vision of tackling common printing faults such as warping, stringing, delamination, and over- or under-extrusion. Machine-learning algorithms make it easier to take print parameters such as extrusion temperature, robot speed, and print motor speed into account, so that a combination of them can be tuned to solve a particular problem while minimizing print time.


Fig. 1. OpenCV script controlling the extruder motor speed to calibrate the print parameters

Fig. 2. AI Build’s system, based on computer vision and an ML dataset, for real-time feedback