
Noise (N(0, 1)) along with 1 kHz and 20 kHz frequency peaks is present

from IRJET- Fault Detection and Diagnosis of Various Mechanical Components in a Nuclear Power Plant Combi

Volume: 08 Issue: 08 | Aug 2021 www.irjet.net p-ISSN: 2395-0072

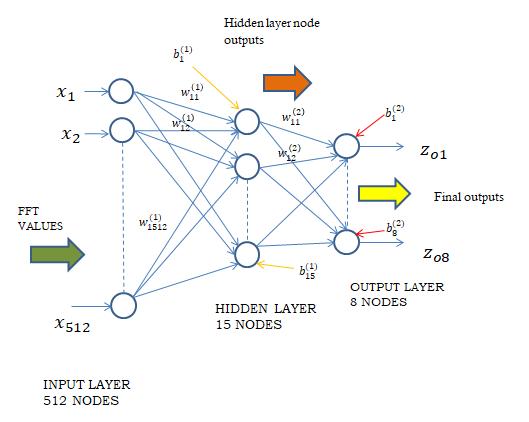

The architecture of the configured ANN is shown in Fig-10.


Fig-10: Proposed Artificial Neural Network Architecture

3.2 Optimization algorithm

Mini-batch stochastic gradient descent has been used as the optimization algorithm. Instead of a single data point, each iteration uses a small sample of data points to compute the parameter update. This sample is called a mini-batch, which gives the algorithm its name.
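The update loop can be illustrated with a minimal sketch. This is not the authors' code: the one-variable linear model, the toy data, and the names `minibatch_sgd`, `batch_size`, `lr`, and `epochs` are assumptions chosen only to show how the data is split into mini-batches and how each batch produces one update.

```python
import random

def minibatch_sgd(xs, ys, batch_size=4, lr=0.1, epochs=200, seed=0):
    """Fit y ~ w*x + b by mini-batch SGD on the mean squared error.

    Hypothetical helper for illustration; the model and hyperparameters
    are assumptions, not values taken from the paper.
    """
    rng = random.Random(seed)
    w, b = 0.0, 0.0
    indices = list(range(len(xs)))
    for _ in range(epochs):
        rng.shuffle(indices)  # draw fresh random mini-batches each epoch
        for start in range(0, len(indices), batch_size):
            batch = indices[start:start + batch_size]
            # Accumulate the gradient of the squared error over the batch.
            grad_w = grad_b = 0.0
            for i in batch:
                err = (w * xs[i] + b) - ys[i]
                grad_w += 2.0 * err * xs[i]
                grad_b += 2.0 * err
            # One parameter update per mini-batch, using the batch mean.
            w -= lr * grad_w / len(batch)
            b -= lr * grad_b / len(batch)
    return w, b

xs = [i / 10 for i in range(20)]   # toy inputs
ys = [3.0 * x + 1.0 for x in xs]   # noiseless targets, y = 3x + 1
w, b = minibatch_sgd(xs, ys)
print(w, b)                        # should be close to 3 and 1
```

Averaging the gradient over a batch gives a less noisy step than a single data point, while each step remains much cheaper than a pass over the full data set.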

3.3 Loss function

In this work, Mean Squared Error (MSE) has been used as the loss function. The loss is calculated by taking the mean of the squared differences between the target (actual) values and the predicted values.
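Written out, the loss over n samples is MSE = (1/n) * sum of (y_i - p_i)^2, where y_i is a target value and p_i the corresponding prediction. A minimal sketch of that calculation follows; the function name `mean_squared_error` and the sample values are illustrative assumptions, not taken from the paper.

```python
def mean_squared_error(targets, predictions):
    """Mean of the squared differences between targets and predictions."""
    if not targets or len(targets) != len(predictions):
        raise ValueError("inputs must be non-empty and of equal length")
    squared_errors = [(t - p) ** 2 for t, p in zip(targets, predictions)]
    return sum(squared_errors) / len(squared_errors)

# Squared errors are 0.01, 0.04 and 0.16, so the mean is about 0.07.
loss = mean_squared_error([1.0, 0.0, 1.0], [0.9, 0.2, 0.6])
print(loss)
```

Because the differences are squared, large errors are penalized more heavily than small ones.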

3.4 Activation function

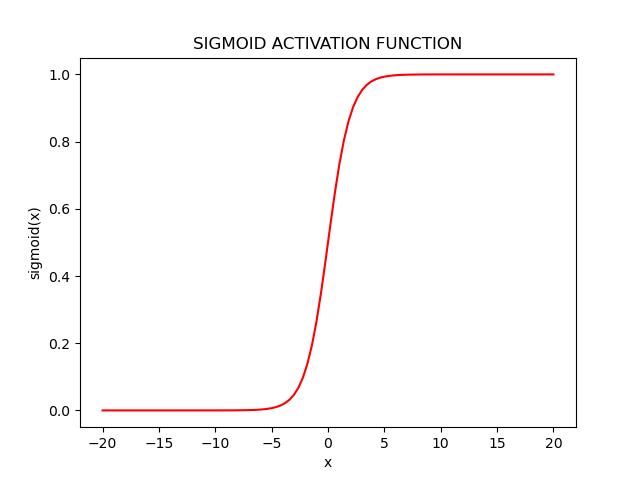

In this work, both sigmoid and Rectified Linear Unit (ReLU) have been used as activation functions. The sigmoid function has an ‘S’-shaped graph, as depicted in Fig-11.

Equation: f(x) = 1 / (1 + e^(-x))

Fig-11: Plot showing the sigmoid activation function
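The sigmoid equation can be evaluated directly, as in the sketch below. The branch on the sign of x is an implementation choice assumed here to avoid overflow in `math.exp` for large negative inputs; the paper does not say how the function was coded.

```python
import math

def sigmoid(x):
    """Sigmoid f(x) = 1 / (1 + e^(-x)), evaluated in an overflow-safe way."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    # For negative x, use the equivalent form e^x / (1 + e^x),
    # so that exp() is never called with a large positive argument.
    z = math.exp(x)
    return z / (1.0 + z)

for value in (-4.0, 0.0, 4.0):
    print(value, sigmoid(value))
```

The output always lies between 0 and 1, with f(0) = 0.5 at the centre of the ‘S’ curve.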

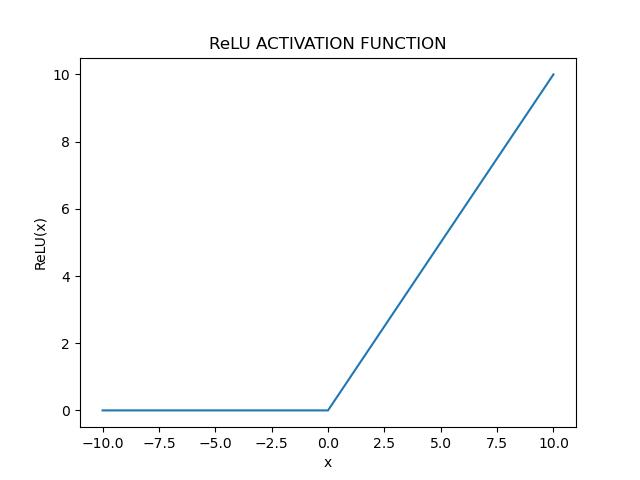

ReLU is a commonly used activation function.

Equation: R(x) = max(0, x)

R(x) = 0, when x < 0

R(x) = x, when x >= 0

ReLU is half rectified (from the bottom): negative inputs are set to zero and non-negative inputs pass through unchanged.

The range of the function is [0, ∞). The plot of the ReLU activation function is shown in Fig-12.

Fig-12: Plot showing the ReLU activation function
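The piecewise definition of ReLU maps directly onto a one-line function. The sketch below is illustrative only; the name `relu` and the sample inputs are assumptions and are not taken from the authors' implementation.

```python
def relu(x):
    """ReLU R(x) = max(0, x): zero for negative inputs, identity otherwise."""
    return max(0.0, x)

inputs = [-2.0, -0.5, 0.0, 0.5, 2.0]
outputs = [relu(x) for x in inputs]
print(outputs)  # [0.0, 0.0, 0.0, 0.5, 2.0]
```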

The entire code for the proposed ANN model has been implemented in Python.