
Naked Truth: AI Ethics in EdTech

I’m a pretty well-adjusted father of 2 and like to think I have my head on straight when it comes to knowing right from wrong. I’m one of those annoying people who boast about being part of the Xennial micro generation, where I’m too young to be Gen X and too old to be a Millennial, growing up with an analogue childhood and slowly maturing alongside tech. I played on Commodore 64, Sega Mega Drive and Game Boy. I used to record my music on cassette from the Top 40 each Sunday and the only porn I was exposed to as a teen was page 3 of the Sun. I didn’t get a mobile phone until I was 18 and I consider myself lucky that growing up, I didn’t have to deal with 24/7, always-on exposure to absolutely everything going on in the world. I wasn’t nagged by notifications and I was never made to feel shit by “friends” on an app, who I’d never met in real life.

I’ve smugly sat by and watched as the world got sucked into doom-scrolling through social media. I don’t understand why my wife sends me 20 Instagram reels a day and I still enjoy selecting a CD to play in my car, rather than allowing Spotify or Amazon’s algorithms to pick what I listen to. So yeah - I’ve got analogue morals and I know how to apply them in a digital world.

But then, along came AI.

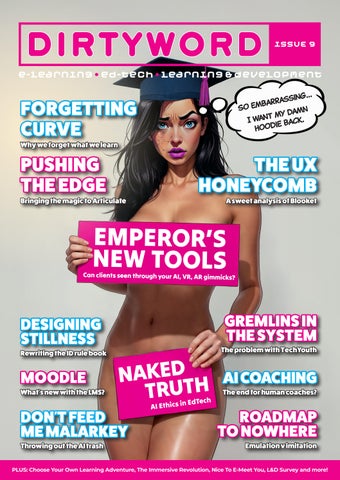

As a kid, all I ever wanted to do when I grew up was write and draw comic books. I’ve written countless novels that will never see the light of day, but I always struggled to make the time and effort to draw a full comic. So the power of AI image generation instantly piqued my interest. Dirtyword’s mascot, Whisper, was originally drawn by me. Properly drawn. But then I used AI to refine her, pose her and take her to the next level. I know there’s a whole argument about AI stealing from artists, and this article isn’t about that, but as far as I’m concerned, I created Whisper, not artificial intelligence. AI is just a tool that allows me to realise and augment the creativity that was always there.

So I know just how powerful AI can be with a little bit of patience and a lot of practice. I still refuse to use it for writing, as I enjoy the process of basically having conversations and arguments with myself as I type, but I know that more and more people are using their favourite LLM to generate articles, emails and, dare I say it, online courses. And that’s ok - AI generation of text and images is something that, like it or not, is here to stay, and it’s up to us to figure out how to use it ethically and responsibly.

But what do those words actually mean?

So-called “Deep Fakes”, where AI can create images and videos of people doing or saying things that never actually happened, threaten to create a world where we can no longer trust our own eyes. We are already having to deal with fake news, kids being blackmailed with AI porn of themselves and political parties weaponising AI to undermine the competition - none of which is ethical or responsible.

The cover of this issue shows just how easy it is to take an innocent concept and sexualise it with AI. I’m waiting for the backlash on that one and hold my hands up to going for the shock factor to get your attention. I promise Whisper will be back in her hoodie next issue.

But this is a magazine about e-learning, so what should ethical and responsible AI use look like in learning? We can’t - and shouldn’t - stop educators or students from using Artificial Intelligence to create and/or undertake learning but we need to establish a framework for what that looks like, so that we aren’t all getting ChatGPT to pass exams on subjects that are full of hallucinated AI misinformation in the first place.

Educators using AI and LLMs for research and course creation

Remember when ChatGPT first came out and everybody had delusions of getting it to write a best-selling novel in an instant? Well, that spilt over into education and I know more than a few people who thought that AI was capable of writing an entire course, complete with engaging activities and perfectly worded explanations. The reality, however, is a bit more… murky. An educator’s primary responsibility is to impart accurate, relevant, and engaging knowledge. AI and Large Language Models (LLMs) can be powerful tools in this endeavour, but they are just tools, not replacements for human expertise and critical thinking.

Ethical use for educators starts with transparency. If you’ve used AI to generate parts of your research, to outline your course structure, or even to draft some of your text, you should be upfront about it. There seems to still be a sense of shame in admitting to using AI to help write, which is weird, when we’ve all been taught how important it is to reference other people’s research from “real-world” sources. You wouldn’t plagiarise a textbook, so why would you hide the fact that an AI helped you brainstorm or formulate ideas? A simple disclaimer at the beginning of a module or a section could state, “Elements of this content were generated or assisted by AI, then reviewed and refined by a human educator.” This not only models good practice for your students but also manages expectations.

Beyond transparency, there’s the crucial element of verification. LLMs are notorious for “hallucinating” information, confidently presenting made-up nonsense as facts. It’s easy to become complacent and think that because Google developed Gemini, the info it spits out is accurate - but that complacency is dangerous. It’s critical that any information generated by an AI for course content or research is rigorously fact-checked against reliable, human-authored sources. There’s an old saying, usually attributed to trial lawyers, that goes, “Never ask a question you don’t already know the answer to,” and it’s an excellent rule for quizzing AI. Make sure you’re able to separate fact from fiction, either using your own knowledge or by digging into journal articles, academic texts, and reputable news outlets. Don’t just copy and paste; interrogate the AI’s output. Does it make sense? Is it consistent with established knowledge? Does it cite its sources, and do those sources actually exist and say what it claims? If the answer to any of those is “no”, then you need to dig deeper. Your reputation, and more importantly, your students’ understanding, depend on it.

Finally, purposeful integration is key. AI shouldn’t be used to automate away the intellectual heavy lifting of course design. Instead, leverage it for tasks that enhance efficiency and creativity. Need fresh ideas for a discussion prompt? Ask AI. Want to quickly summarise an academic paper to get the gist before diving in? Use an LLM. Struggling to articulate a complex concept in simpler terms? AI can offer different phrasings. But the ultimate pedagogical decisions – what to teach, how to assess it, and how to foster genuine understanding – remain firmly in your human hands.

Learners using AI to pass courses

Just yesterday (mid-June ‘25 for those reading this in the future), I watched a video where a student whipped out a laptop at his graduation ceremony and showed his class - and the world - on screen exactly how he used ChatGPT to pass his course for him. Despite the best efforts of educational institutions, this was inevitable, and we don’t yet know the scale of the problem. And it is a problem. The temptation is obvious. Stuck on an essay? ChatGPT can whip up a first draft in seconds. Facing a tricky multiple-choice quiz? An AI might just have the answers. But, as my (and everybody else’s) Dad used to say, “If you cheat, you’re only cheating yourself.” Using AI to pass a course often means sidestepping the very learning process the course is designed to facilitate.

The ethical framework for students needs to revolve around academic integrity and genuine learning. Just like plagiarism from a human source is unacceptable, submitting AI-generated work as your own, without proper acknowledgement, falls squarely into the realm of academic misconduct. Institutions need to establish clear policies on AI use, specifying what is permissible and what constitutes cheating. Is using an AI to brainstorm ideas acceptable? Probably. Is asking it to write your entire assignment? Probably not.

More importantly, students need to understand why genuine learning matters. If a student relies solely on AI to complete assignments, they miss out on developing critical thinking skills, analytical abilities, and the capacity for independent problem-solving. They might get a good grade, but they won’t truly know the subject matter. Back to my Dad - he was an accountant and he always said that using a calculator to pass a maths exam without understanding the underlying principles might get me the right answer, but I wouldn’t have learned the maths. And he was right, which is why I stick to writing and design.

Educators should encourage students to use AI as a learning aid, not a shortcut. Can an AI help you understand a difficult concept by rephrasing it in simpler terms? Yes. Can it generate practice questions for you to test your knowledge? Absolutely. Can it provide different perspectives on a topic to broaden your understanding? Indeed. The focus should shift from “Can AI do this for me?” to “How can AI help me learn to do this better?” This requires active guidance from educators, setting clear expectations, and designing assessments that measure genuine understanding rather than just the ability to generate a plausible-sounding output. That could even mean incorporating AI use into assessments, where students are tasked with critically evaluating AI-generated content or using AI tools to enhance their own work, while still demonstrating their individual learning.

Using AI to create supplementary materials that support learning

Now, this is where my inner comic book artist gets excited! The ability to conjure up custom images and even short video clips on demand has changed my social feeds beyond all recognition. Last month, about 3 billion people used AI to generate pictures of themselves as toy action figures. And the month before that, everybody was making Studio Ghibli-style profile pics. Most of these people couldn’t even hold a pencil correctly, let alone draw, but AI is a game-changer for creating engaging and accessible learning materials. Imagine teachers explaining complex scientific processes with bespoke animations or bringing historical events to life with AI-generated scenes. The potential to enhance understanding and make learning more vivid is immense.

The ethical considerations here primarily revolve around authenticity, representation, and copyright. Firstly, authenticity: while AI-generated visuals can be incredibly persuasive, it’s crucial to be transparent if they are not real photographs or footage. If you’re illustrating a historical event with AI-generated imagery, it should be clear that these are artistic interpretations or reconstructions, not actual records. Misrepresenting AI-generated visuals as authentic documentation could lead to misinformation and erode trust. A simple caption like, “AI-generated illustration for conceptual understanding,” or “Scene created using AI,” can make all the difference.

Secondly, representation: AI models are trained on vast datasets, and these datasets often reflect existing societal biases. This can lead to AI generating images that perpetuate stereotypes or misrepresent certain groups. It’s our responsibility to critically review AI-generated visuals for fairness and inclusivity. Are you portraying diverse individuals in your examples? Are you avoiding reinforcing harmful stereotypes? If you’re generating images of people, are they representative of the global population, or are they skewed towards one demographic? This requires a conscious effort to challenge and refine AI outputs to ensure they align with principles of equity and respect.

Finally, copyright remains a thorny issue. Just this month, Disney and Universal launched legal proceedings against Midjourney, the company at the forefront of AI image generation. It seems they aren’t too happy with Geoff in Accounts sprucing up his budget report with fine art images of Darth Vader and Spider-Man. While I believe AI is a tool, the legal landscape around AI-generated content and the datasets the models are trained on is still evolving. Educators should be mindful of potential copyright implications, especially if the AI output too closely resembles existing copyrighted works. For now, your safest bet is to clearly attribute copyrighted characters where appropriate. Until clearer legal rules are established, exercising caution and focusing on original concepts augmented by AI is the most responsible approach.

Just like that time I made cheese on toast in the air fryer, AI tools can now create pretty much anything you can imagine - but that doesn’t mean that you should. Used carefully, with a bit of common sense and a genuine reason, we can harness AI to improve the way we deliver learning, from structure through to design, and personalised learner experiences. But it demands transparency, critical thinking, and a commitment to genuine learning from both educators and students alike - because no serious learning professionals want to read an EdTech magazine with a naked woman on the cover, even if AI made it look really cool. From now on, I promise to only use AI ethically and responsibly - who’s with me?