WHY DOES L&D STRUGGLE TO PROVE ITS WORTH?

5app’s Philip Huthwaite asked 100 leaders for their views

Everyone in L&D talks about measuring the impact of learning. But talk is cheap, and if we’re pinning all our hopes on LMS logins or course completions moving the needle, we might need to lower our expectations.

Until now, it’s been virtually impossible to truly understand the impact of our learning programmes. But what if the high-level vanity metrics we’ve been using are entirely the wrong ones? What if the real story of learning’s effect on business performance is hiding in plain sight, just beyond the reach of our traditional tools?

We recently spoke with over 100 learning and talent leaders to explore these questions, and their candid responses reveal a landscape of frustration, but also a glimmer of hope for a new approach. I'm pleased to share the key themes and insights from those conversations with the industry's biggest movers and shakers, from the struggle to measure soft skills to disappointing tech: why measurement matters, and how we can get better at it.

Soft skills, hard data

“I’m especially frustrated with soft skills measurement! Soft skills are really hard to measure, especially when it comes to how those skills are applied in real-world situations.”

We all know that soft skills are important, but they typically end up overlooked and undervalued in the workplace. We don't have an easy way to measure them, and line managers are not in every meeting to assess who's communicating well, who's best at coaching and who needs to improve their active listening. The tech to make measuring soft skills easy just hasn't been there. Without it, measurement depends on hugely time-consuming manual effort, which leaves L&D with no real way to understand how soft skills are showing up across the business.

Of course, the danger here is that we end up measuring only what's easy. That tends to be hard skills, like coding or equipment mastery, while those all-important soft skills fall by the wayside. Reducing everything to what's easy to measure, rather than what we should be measuring, is a dangerous game, and it means we'll miss out on huge chunks of the L&D impact story.

Tech letdowns and tool fatigue

“I’m frustrated by the lack of insight our tech gives us into real behaviour change and application of learning.”

Every single L&D team in the world has invested in disappointing learning tech at some point. These tools promise valuable insights, but inevitably, those insights don’t go beyond ‘How many people logged in last month?’ or ‘Do learners prefer videos or PDFs?’. Useful for the L&D team… but why would anyone in the wider business care?

Many of the L&D leaders we interviewed made it clear that there's a vast disconnect between what vendors promise and what their tools actually deliver. They find themselves overwhelmed (by too many bells and whistles they'll never need), underwhelmed (by products that don't live up to the fancy marketing) or misled, when yet again their new tool doesn't give them access to the data they so desperately want. Understandably, this has led to widespread tool fatigue.

Having been burned so many times by tools that didn't live up to expectations, L&D leaders are highly suspicious of new tech. As a result, lots of learning teams are stuck with tech that doesn't do what it should, with no better alternative to take its place. It's no wonder so many businesses still depend on manual spreadsheets!

The seduction of vanity metrics

“Most learning measurement is shallow, based on feelings or engagement rather than being linked to performance outcomes or ROI. Most companies avoid deeper measurement because they fear accountability.”

One thing I heard loud and clear is that L&D leaders know vanity metrics aren’t great… they just don’t have access to anything better. No L&D professional wants to rely on ‘happy sheets’ or course signups to ‘prove’ learning impact… but what else is there?

One of the most common reasons for falling back on LMS logins is that this is what senior leadership teams are familiar with, and what they want to see. Many of them don't see that learner engagement isn't the same as real learning, so they're satisfied with a 20% increase in logins or 95% course completions. But that doesn't mean we should be!

The frustration we heard from these L&D leaders was palpable. They feel stuck measuring things they know don’t really matter, while all that juicy impact data sits untouched. It’s like logging every visit to the gym without tracking whether anyone’s getting stronger or fitter.

There’s a will… but no way

“I’ve yet to work in an organisation that effectively tries, let alone is capable of understanding the genuine impact of training.”

Another recurring message was that L&D leaders know what they should be measuring… but they simply don't have a way to do it. Sometimes it's down to a lack of tech, sometimes they don't know how to make it happen, but the result is always the same: measuring what's easy, not what matters.

This was the area where leaders showed the most vulnerability. Many of them admitted that they lack the necessary skills, such as data literacy, or that they're overwhelmed with other work, or that they can't get buy-in from business leaders to make measurement a priority.

Apathy towards, or a lack of faith in, the L&D team among senior leaders was a very common theme in our interviews. We heard things like 'If your leaders are asking you to prove ROI, you've already lost', and that leaders tend to lose interest in any metrics beyond engagement figures. When it feels like your own business leaders don't care, it's easy to see how L&D gets discouraged, and that's why so many learning leaders are looking for ways to make them care.

Good old Kirkpatrick Levels 3 and 4

“Most companies I work with stop at Kirkpatrick Level 1, with reaction surveys right after training. There’s very little follow-up to check for knowledge retention or behaviour change.”

If we had a pound for every time we heard the words ‘Kirkpatrick levels’ in our interviews, we’d have a nice chunk of change by now!

Almost everyone we spoke to acknowledged that they stick to Level 1 (Reaction – those ubiquitous happy sheets), or possibly Level 2 (Learning – did the learners learn what they needed to?), with Level 3 (Behaviour change) and Level 4 (Business results) broadly ignored. Some L&D professionals have committed to using real-world assignments and integrating L&D data with CRM data to draw a link between sales training and sales growth, but even then, it's hard to prove beyond doubt that the learning caused the business results.

What L&D is crying out for, then, are simple, intuitive tools to connect learning to real business outcomes. We know how important it is to move up to Kirkpatrick Level 3 and Level 4 – we just need a little help getting there.

The dirty truth about learning data

The L&D leaders we spoke with were honest and candid, and their message is clear: the data we are currently collecting is not enough. We know what we should be measuring (the true impact on soft skills and business outcomes), but until now, the tools to do so have been lacking.

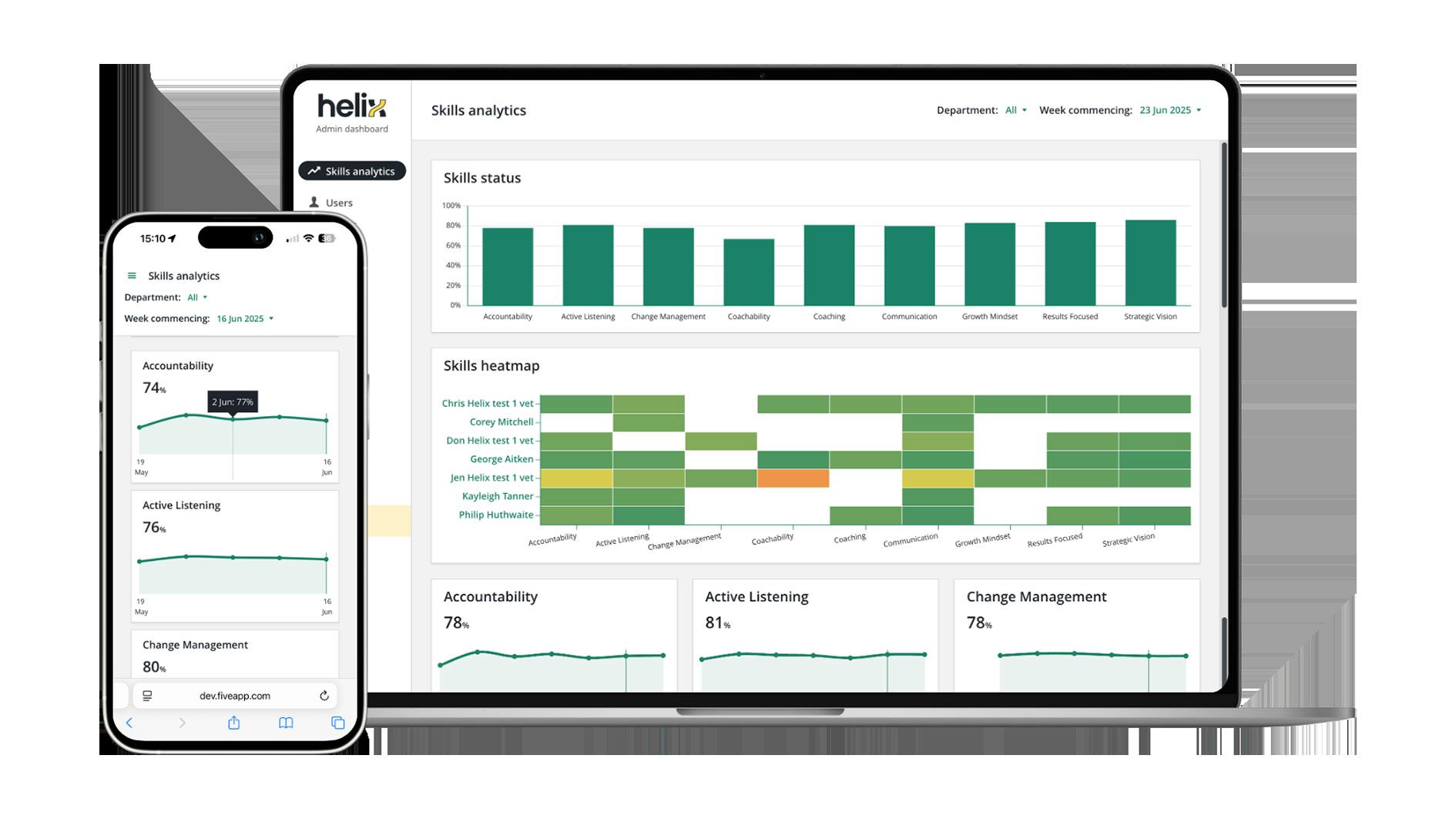

Fortunately, the rapid advancement of AI is creating new opportunities for skills intelligence. The conversation is shifting from passive engagement metrics to active, in-the-moment insights. Our new AI-powered solution, Helix, is one example of how this technology can be applied. It's designed to measure soft skills like coaching and active listening directly in virtual meetings, giving users feedback in the flow of work. Immediately after each meeting, it emails them a handy summary of which skills they displayed, along with suggestions for improvement.

The path to better skills measurement is messy, and the perfect solution may not yet exist. But the conversations we've had with so many of you show that there is a collective will to ditch vanity metrics, ask better questions, and tap into real-world data.

The challenge now is to face these findings head-on and begin the hard work of truly understanding the impact of our efforts. The technology to help us get there is finally here. The question for all of us is: how will we use it?

Philip Huthwaite is 5app’s CEO. He provides hands-on expertise across sales, marketing and product development, and has a strong interest in using AI to drive success in learning and talent management.